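1. Environment preparation

Configuration: one virtual machine with 4 CPU cores, 16 GB RAM, and a 50 GB disk

OS: Ubuntu 22.04

K8S version: 1.28

Deployment method: kubeadm

2. Pre-deployment steps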

# sysctl params required by setup, params persist across reboots
cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
net.ipv4.ip_forward = 1
EOF

# Apply sysctl params without reboot
sudo sysctl --system
sysctl net.ipv4.ip_forward

# Turn swap off now, then comment out the swap entries in /etc/fstab so it stays off after a reboot
sudo swapoff -a
sudo vi /etc/fstab

#setenforce 0
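The manual /etc/fstab edit above can also be scripted. The following is a minimal sketch, assuming swap entries are ordinary fstab lines with `swap` as a whitespace-delimited field; the function name `comment_swap_entries` is hypothetical and not part of the original steps. It keeps a `.bak` copy of the file it edits.

```shell
# Comment out every active line that has "swap" as a whitespace-delimited field.
# $1: path to an fstab-style file. Lines already commented are left untouched.
comment_swap_entries() {
  sed -E -i.bak '/^[[:space:]]*#/! s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Example (needs root for the real file):
#   sudo sh -c '. ./swap.sh; comment_swap_entries /etc/fstab'
```

Try it on a copy of the file first and compare the result against the `.bak` backup before relying on it.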

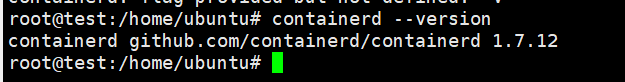

3. Installing containerd

sudo apt-get update
sudo apt-get install -y containerd
sudo systemctl start containerd

# Generate the default configuration file
sudo mkdir -p /etc/containerd
containerd config default | sudo tee /etc/containerd/config.toml > /dev/null

# Edit /etc/containerd/config.toml and set:
sandbox_image = "registry.cn-hangzhou.aliyuncs.com/mytest_docker123/pause:3.9"
SystemdCgroup = true

name: the hostname

# Restart the service
sudo systemctl restart containerd
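The two config.toml changes can be applied with sed instead of an editor. This is a sketch only: it assumes the generated default file already contains one `sandbox_image = ...` line and one `SystemdCgroup = ...` line, and the function name `patch_containerd_config` is hypothetical. Restart containerd again after running it.

```shell
# Rewrite sandbox_image and SystemdCgroup in place, preserving indentation.
# $1: path to config.toml, $2: pause image reference to use.
patch_containerd_config() {
  sed -E -i.bak \
    -e 's|^([[:space:]]*)SystemdCgroup = .*|\1SystemdCgroup = true|' \
    -e "s|^([[:space:]]*)sandbox_image = .*|\\1sandbox_image = \"$2\"|" \
    "$1"
}

# Example (needs root for the real file):
#   patch_containerd_config /etc/containerd/config.toml \
#     registry.cn-hangzhou.aliyuncs.com/mytest_docker123/pause:3.9
```

A quick `grep -E 'sandbox_image|SystemdCgroup' /etc/containerd/config.toml` afterwards shows whether both lines were changed.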

4. Installing kubeadm, kubectl, and kubelet

# Base packages and package source configuration

sudo apt-get install -y apt-transport-https ca-certificates curl
curl -s https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | sudo apt-key add -
sudo tee /etc/apt/sources.list.d/kubernetes.list << EOF
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
EOF
sudo apt-get update

# List the installable versions:
apt-cache madison kubeadm

sudo apt-get install -y kubeadm=1.28.2-00 kubectl=1.28.2-00 kubelet=1.28.2-00
sudo apt-mark hold kubelet kubeadm kubectl

sudo systemctl start kubelet

sudo systemctl enable kubelet

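5. Deploying K8S

kubeadm config print init-defaults > init.defaulsts.yaml

Change the following in init.defaulsts.yaml:

advertiseAddress: <host IP>
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
# Add podSubnet under networking; it must not overlap with the host network or the service network
networking:
  podSubnet: 20.10.0.0/16

Then initialize the cluster:

sudo kubeadm init --config init.defaulsts.yaml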

The complete init.defaulsts.yaml after the changes:

apiVersion: kubeadm.k8s.io/v1beta3
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.16.3
  bindPort: 6443
nodeRegistration:
  criSocket: unix:///var/run/containerd/containerd.sock
  imagePullPolicy: IfNotPresent
  name: test
  taints: null
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta3
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controllerManager: {}
dns: {}
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: 1.28.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 20.10.0.0/16
scheduler: {}
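The requirement that podSubnet must not collide with the service or host network can be checked before running kubeadm init. The sketch below compares two IPv4 CIDR blocks using plain shell arithmetic; the helper names (`ip_to_int`, `cidr_range`, `cidrs_overlap`) are hypothetical, and the host network in the usage comment is an assumed /24 around the advertise address, not a value from the original.

```shell
# Convert a dotted-quad IPv4 address to a 32-bit integer.
# The body runs in a subshell so the IFS change stays local.
ip_to_int() (
  IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
)

# Print "first last" (as integers) for a CIDR block such as 10.96.0.0/12.
cidr_range() (
  ip=${1%/*}
  prefix=${1#*/}
  base=$(ip_to_int "$ip")
  mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  first=$(( base & mask ))
  last=$(( first | (~mask & 0xFFFFFFFF) ))
  echo "$first $last"
)

# Succeed (exit status 0) when the two CIDR blocks share at least one address.
cidrs_overlap() {
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Example:
#   cidrs_overlap 20.10.0.0/16 10.96.0.0/12   && echo "podSubnet overlaps serviceSubnet"
#   cidrs_overlap 20.10.0.0/16 172.16.16.0/24 && echo "podSubnet overlaps host network (assumed /24)"
```

With the values used in this guide neither check reports an overlap, so the chosen podSubnet is acceptable.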

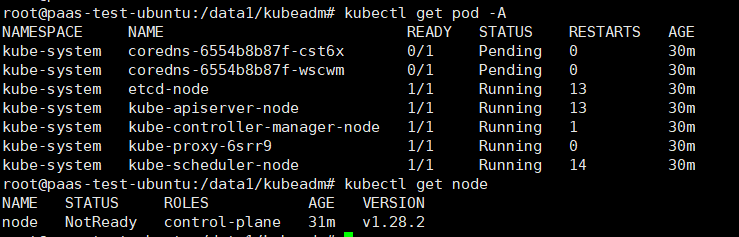

Output of a successful installation

Installation output:

[bootstrap-token] Configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
[addons] Applied essential addon: CoreDNS
[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

  export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.3.68:6443 --token abcdef.0123456789abcdef \
  --discovery-token-ca-cert-hash sha256:3a158c08c3dd180ced3dd6576cb8c839d90aadd0219c98d4fbfe327a0151d0f8

Post-installation configuration:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

6. Verifying the services

kubectl get pod -A

kubectl get node
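Until a pod network plugin is installed (step 7), the node normally reports NotReady, so this check is worth repeating after Calico is running. A small sketch for scripting that check follows; it assumes the default column layout of `kubectl get nodes --no-headers` (name first, status second), and the function name `count_not_ready` is hypothetical.

```shell
# Read `kubectl get nodes --no-headers` output on stdin and print how many
# nodes have a STATUS column that does not start with "Ready".
count_not_ready() {
  awk '$2 !~ /^Ready/ { n++ } END { print n + 0 }'
}

# Example:
#   kubectl get nodes --no-headers | count_not_ready
```

A result of 0 means every listed node is Ready.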

7. Installing the Calico network plugin

# Official docs: https://docs.tigera.io/calico/3.28/getting-started/kubernetes/quickstart
kubectl create -f https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/tigera-operator.yaml

wget https://raw.githubusercontent.com/projectcalico/calico/v3.28.2/manifests/custom-resources.yaml

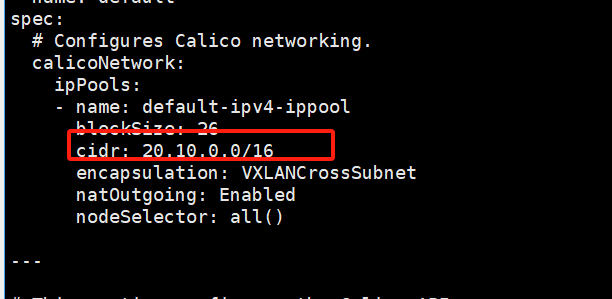

# Edit custom-resources.yaml: set cidr to the same value as podSubnet in init.defaulsts.yaml, the kubeadm init file from step 5
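The cidr edit can be done non-interactively. The sketch below assumes the downloaded custom-resources.yaml contains a single `cidr:` key on its own line (optionally as the first key of a list item); the function name `set_calico_cidr` is hypothetical. A `.bak` copy of the file is kept.

```shell
# Replace the value of the cidr key, preserving indentation and any "- " prefix.
# $1: path to custom-resources.yaml, $2: pod CIDR to write.
set_calico_cidr() {
  sed -E -i.bak "s|^([[:space:]-]*)cidr:.*|\\1cidr: $2|" "$1"
}

# Example, using the podSubnet from this guide:
#   set_calico_cidr custom-resources.yaml 20.10.0.0/16
#   grep cidr custom-resources.yaml
```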

kubectl create -f custom-resources.yaml

kubectl taint nodes --all node-role.kubernetes.io/control-plane-

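Files shared via Baidu Netdisk: calico1.28

Link: https://pan.baidu.com/s/1t5d1KLlBj82kREX3pmdkMw Extraction code: 1234

If the images cannot be pulled because of network problems, run the following commands directly to pull them from a mirror and retag them under their upstream names: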

ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-pod2daemon-typha:v3.28.2
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-node:v3.28.1
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-cni:v3.28.1
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-cni:v3.28.2
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-csi:v3.28.2
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-node-driver-registrar:v3.28.2
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-node:v3.28.2
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-pod2daemon-flexvol:v3.28.2
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-kube-controllers:v3.28.2
ctr -n k8s.io images pull registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-apiserver:v3.28.2

ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-apiserver:v3.28.2 docker.io/calico/apiserver:v3.28.2
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-kube-controllers:v3.28.2 docker.io/calico/kube-controllers:v3.28.2
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-csi:v3.28.2 docker.io/calico/csi:v3.28.2
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-pod2daemon-typha:v3.28.2 docker.io/calico/typha:v3.28.2
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-node:v3.28.1 docker.io/calico/node:v3.28.1
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-cni:v3.28.1 docker.io/calico/cni:v3.28.1
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-cni:v3.28.2 docker.io/calico/cni:v3.28.2
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-node-driver-registrar:v3.28.2 docker.io/calico/node-driver-registrar:v3.28.2
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-node:v3.28.2 docker.io/calico/node:v3.28.2
ctr -n k8s.io images tag registry.cn-hangzhou.aliyuncs.com/mytest_docker123/calico-pod2daemon-flexvol:v3.28.2 docker.io/calico/pod2daemon-flexvol:v3.28.2
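The pull-and-retag pattern above is repetitive, so it can be generated from a short table. This is a sketch that only prints the commands (a dry run) for the v3.28.2 images; the v3.28.1 entries are left out, and the names `MIRROR`, `TAG`, `emit_ctr_commands`, and `emit_all` are hypothetical. Each table row is "name on the mirror, name under docker.io/calico", taken from the commands listed in this step.

```shell
MIRROR=registry.cn-hangzhou.aliyuncs.com/mytest_docker123
TAG=v3.28.2

# Print the pull and tag commands for one image.
# $1: image name on the mirror, $2: image name under docker.io/calico.
emit_ctr_commands() {
  echo "ctr -n k8s.io images pull $MIRROR/$1:$TAG"
  echo "ctr -n k8s.io images tag $MIRROR/$1:$TAG docker.io/calico/$2:$TAG"
}

# Print the commands for every image in the table below.
emit_all() {
  while read -r mirror_name upstream_name; do
    emit_ctr_commands "$mirror_name" "$upstream_name"
  done <<'EOF'
calico-pod2daemon-typha typha
calico-cni cni
calico-csi csi
calico-node-driver-registrar node-driver-registrar
calico-node node
calico-pod2daemon-flexvol pod2daemon-flexvol
calico-kube-controllers kube-controllers
calico-apiserver apiserver
EOF
}

# Example: review the output first, then execute it as root.
#   emit_all
#   emit_all | sudo sh
```

Printing instead of executing keeps the script safe to run anywhere and makes it easy to review the exact image references before they are pulled.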

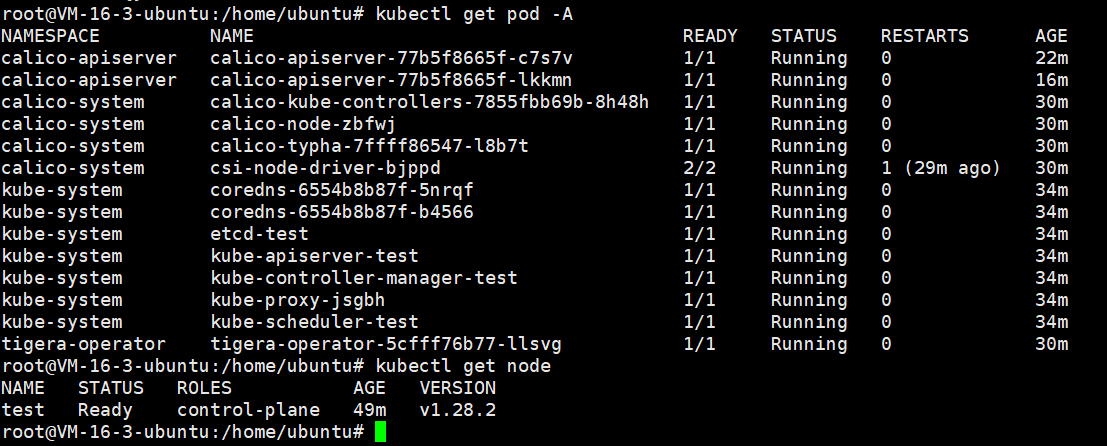

8. Checking the Calico and K8S services

9. Deploying a test workload

Create the file busy.yaml:

apiVersion: apps/v1
kind: Deployment
metadata:
  name: busybox1
  namespace: default
spec:
  replicas: 1
  selector:
    matchLabels:
      app: busybox
  template:
    metadata:
      labels:
        app: busybox
    spec:
      containers:
      - args:
        - "36000"
        command:
        - sleep
        image: registry.cn-hangzhou.aliyuncs.com/mytest_docker123/alpine
        imagePullPolicy: IfNotPresent
        name: busybox
      dnsPolicy: ClusterFirst

kubectl apply -f busy.yaml

10. Checking the deployment result