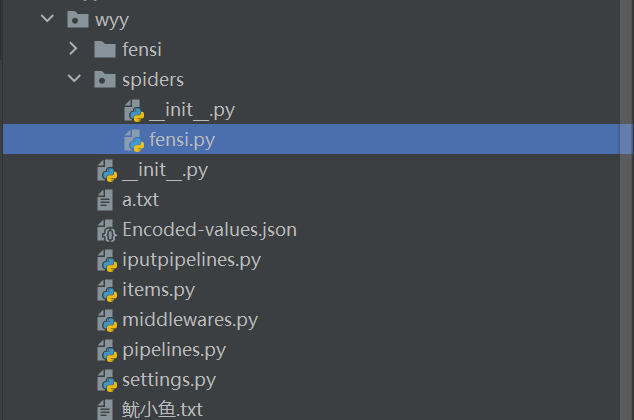

Scrapy: Scraping NetEase Cloud Music Fans via the API, the Fastest Way [Part 6]

First create the Scrapy project, then create the spider file fensi.py:

```python
# -*- coding: utf-8 -*-
import base64
import json
import math
import random

import scrapy
from Crypto.Cipher import AES  # provided by the pycryptodome package


class WyyFansSpider(scrapy.Spider):
    name = 'wyy'
    allowed_domains = ['163.com']

    def __init__(self, *args, **kwargs):
        super().__init__(*args, **kwargs)
        # Fixed AES key plus RSA exponent/modulus used by the web client's weapi
        self.key = '0CoJUm6Qyw8W8jud'
        self.f = '00e0b509f6259df8642dbc35662901477df22677ec152b5ff68ace615bb7b725152b3ab17a876aea8a5aa76d2e417629ec4ee341f56135fccf695280104e0312ecbda92557c93870114af6c9d05c4f7f0c3685b7a46bee255932575cce10b424d813cfe4875d3e82047b97ddef52741d546b8e289dc6935b3ece0462db0a22b8e7'
        self.e = '010001'
        # User whose fans are scraped
        self.singer_id = '1411492497'
        # Number of fans to scrape (change as needed)
        self.total_fans = 4923265
        self.post_url1 = 'https://music.163.com/weapi/user/getfolloweds?csrf_token='

    # Generate a random alphanumeric string of the given length
    def _generate_random_strs(self, length):
        chars = 'abcdefghijklmnopqrstuvwxyzABCDEFGHIJKLMNOPQRSTUVWXYZ0123456789'
        return ''.join(random.choice(chars) for _ in range(length))

    # AES-CBC encryption, returned as a Base64 string
    def _AESencrypt(self, msg, key):
        data = msg.encode('utf-8')
        # PKCS#7 padding to a multiple of 16 bytes (computed on bytes, not characters)
        padding = 16 - len(data) % 16
        data += bytes([padding]) * padding
        # Initialisation vector (must be 16 bytes)
        iv = b'0102030405060708'
        cipher = AES.new(key.encode('utf-8'), AES.MODE_CBC, iv)
        # Encrypt, Base64-encode, then decode the Base64 bytes to str
        return base64.b64encode(cipher.encrypt(data)).decode('utf-8')

    # RSA encryption of the random key
    def _RSAencrypt(self, randomstrs, key, f):
        # Reverse the random string and read its UTF-8 bytes as one hex integer
        text = randomstrs[::-1].encode('utf-8')
        # pow() with a modulus avoids building the huge power first
        seckey = pow(int(text.hex(), 16), int(key, 16), int(f, 16))
        return format(seckey, 'x').zfill(256)

    # Build the encrypted form fields for one page (20 fans per page)
    def _get_params1(self, page):
        offset = (page - 1) * 20
        msg = json.dumps({'userId': self.singer_id, 'offset': offset,
                          'total': 'false', 'limit': '20', 'csrf_token': ''})
        # First AES pass with the fixed key
        enctext = self._AESencrypt(msg, self.key)
        # 16-character random key for the second pass
        i = self._generate_random_strs(16)
        # The second AES pass gives the value of params
        encText = self._AESencrypt(enctext, i)
        # RSA-encrypting the random key gives the value of encSecKey
        encSecKey = self._RSAencrypt(i, self.e, self.f)
        return encText, encSecKey

    def start_requests(self):
        # Host, Content-Length, Content-Type and Accept-Encoding are set by Scrapy
        headers = {
            'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/81.0.4044.138 Safari/537.36',
            'Accept': '*/*',
            'Origin': 'https://music.163.com',
            'Referer': 'https://music.163.com/user/fans?id=' + self.singer_id,
            'Accept-Language': 'zh-CN,zh;q=0.9',
            # 'Cookie': '...',  # optional: your own browser cookie
        }
        # Round up so the last, partial page is also requested
        pages = math.ceil(self.total_fans / 20)
        for page in range(1, pages + 1):
            params, encSecKey = self._get_params1(page)
            formdata = {'params': params, 'encSecKey': encSecKey}
            yield scrapy.FormRequest(url=self.post_url1, formdata=formdata,
                                     headers=headers, callback=self.parse)

    def parse(self, response):
        data = json.loads(response.text)
        # Each entry of 'followeds' is one fan
        for followed in data.get('followeds', []):
            yield {
                'userId': followed.get('userId'),
                'avatar': followed.get('avatarUrl'),
                'vipType': followed.get('vipType'),
                'gender': followed.get('gender'),
                'eventCount': followed.get('eventCount'),
                'followeds': followed.get('followeds'),
                'follows': followed.get('follows'),
                'signature': followed.get('signature'),
                'time': followed.get('time'),
                'nickname': followed.get('nickname'),
                'playlistCount': followed.get('playlistCount'),
            }
```
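The `params`/`encSecKey` pair comes from two AES-CBC passes plus an unpadded ("textbook") RSA step on the random key. The non-AES parts can be checked with the standard library alone. The sketch below mirrors the spider's helpers under hypothetical names (`pkcs7_pad`, `random_key`, `enc_sec_key`, `page_payload`); the AES passes are left out because they need pycryptodome's `Crypto.Cipher.AES`:

```python
import json
import random
import string

# RSA constants copied from the spider
E_HEX = '010001'
N_HEX = '00e0b509f6259df8642dbc35662901477df22677ec152b5ff68ace615bb7b725152b3ab17a876aea8a5aa76d2e417629ec4ee341f56135fccf695280104e0312ecbda92557c93870114af6c9d05c4f7f0c3685b7a46bee255932575cce10b424d813cfe4875d3e82047b97ddef52741d546b8e289dc6935b3ece0462db0a22b8e7'


def pkcs7_pad(data: bytes, block: int = 16) -> bytes:
    """PKCS#7 padding: always appends 1..block bytes, each equal to the pad length."""
    n = block - len(data) % block
    return data + bytes([n]) * n


def random_key(length: int = 16) -> str:
    """Random alphanumeric key, like _generate_random_strs."""
    chars = string.ascii_letters + string.digits
    return ''.join(random.choice(chars) for _ in range(length))


def enc_sec_key(key: str, e_hex: str = E_HEX, n_hex: str = N_HEX) -> str:
    """Textbook RSA (no padding) on the reversed key, as _RSAencrypt does."""
    m = int(key[::-1].encode('utf-8').hex(), 16)
    # Three-argument pow does modular exponentiation without building m ** e
    c = pow(m, int(e_hex, 16), int(n_hex, 16))
    return format(c, 'x').zfill(256)


def page_payload(user_id: str, page: int, limit: int = 20) -> str:
    """Plaintext JSON that is AES-encrypted twice to form 'params'."""
    return json.dumps({'userId': user_id, 'offset': (page - 1) * limit,
                       'total': 'false', 'limit': str(limit), 'csrf_token': ''})
```

Three-argument `pow` matters here: `m ** e % n` first builds `m ** 65537`, a number of several million bits, for every request, whereas `pow(m, e, n)` reduces modulo `n` at each step.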

Next, write items.py:

```python
# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class WyyItem(scrapy.Item):
    # Field names match the keys of the dicts yielded by the spider
    avatar = scrapy.Field()
    userId = scrapy.Field()
    # vipRights = scrapy.Field()
    vipType = scrapy.Field()
    gender = scrapy.Field()
    eventCount = scrapy.Field()
    followeds = scrapy.Field()
    follows = scrapy.Field()
    signature = scrapy.Field()
    time = scrapy.Field()
    nickname = scrapy.Field()
    playlistCount = scrapy.Field()
    # Not filled by this spider
    total_record_count = scrapy.Field()
    week_record_count = scrapy.Field()
```

From here there are two options: write to a MongoDB database, or write to txt files. Pick whichever you prefer.

To write to MongoDB, put the following in pipelines.py:

```python
# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

from itemadapter import ItemAdapter
from pymongo import MongoClient


class WyyPipeline:
    def open_spider(self, spider):
        # Connect to the local MongoDB server.
        # If authentication is enabled, pass credentials here instead, e.g.
        # MongoClient(host='localhost', port=27017, username='root', password='root')
        self.client = MongoClient(host='localhost', port=27017)
        # Database 'wyy', collection 'fans2'
        self.db = self.client['wyy']
        self.fans = self.db['fans2']

    def process_item(self, item, spider):
        res = ItemAdapter(item).asdict()
        # Upsert keyed on userId: insert new fans, update ones already stored
        self.fans.update_one({'userId': res['userId']}, {'$set': res}, upsert=True)
        return item

    def close_spider(self, spider):
        self.client.close()
```
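`update_one(..., upsert=True)` keyed on `userId` means the collection keeps at most one document per fan: a userId that arrives again updates its existing document instead of adding a new one. A pure-Python sketch of those semantics, with a dict standing in for the MongoDB collection (an illustration only, not pymongo code; `upsert_fans` is a hypothetical name):

```python
def upsert_fans(items):
    """Mimic update_one({'userId': ...}, {'$set': item}, upsert=True) for each item.

    A plain dict keyed by userId stands in for the collection.
    """
    collection = {}
    for item in items:
        # Create the document if missing; otherwise $set overwrites only the given fields
        doc = collection.setdefault(item['userId'], {})
        doc.update(item)
    return collection


fans = upsert_fans([
    {'userId': 1, 'nickname': 'a'},
    {'userId': 2, 'nickname': 'b'},
    {'userId': 1, 'nickname': 'c'},  # same fan seen again
])
print(len(fans))  # 2 documents for 3 items
```

A consequence worth knowing: the document count in `fans2` can be lower than the number of items the spider yielded whenever the same userId shows up more than once.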

I don't know why, but writing to MongoDB only ever got me 1,000 records, so I gave up on it.

If you choose txt output instead, create another file, iputpipelines.py:

```python
import os
import random


class WyyPipeline:
    # Replace with your own absolute path to the fensi folder
    out_dir = 'D:/Study/pythonProject/网易云音乐——粉丝/scrapy/wyy/wyy/fensi'
    n_files = 50

    def open_spider(self, spider):
        # Open all output files once, in append mode (created if missing)
        self.files = [
            open(os.path.join(self.out_dir, '鱿小鱼粉丝' + str(i) + '.txt'), 'a', encoding='utf-8')
            for i in range(1, self.n_files + 1)
        ]

    def process_item(self, item, spider):
        # One line per fan: nickname (名字), events (动态), following (关注), followers (粉丝)
        shuju = '名字:{};动态:{};关注:{};粉丝:{}\n'.format(
            item['nickname'], item['eventCount'], item['follows'], item['followeds'])
        # Spread lines over the files at random; no size limit, so no item is skipped
        random.choice(self.files).write(shuju)
        return item

    def close_spider(self, spider):
        for f in self.files:
            f.close()
```
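Every line written by this pipeline has the form `名字:<nickname>;动态:<events>;关注:<following>;粉丝:<followers>`, so the files can be parsed back later. A minimal sketch; `parse_line` and `load_fans` are hypothetical helper names, and it assumes nicknames contain no newline:

```python
import re

# 名字 = nickname, 动态 = events, 关注 = following, 粉丝 = followers
LINE_RE = re.compile(r'^名字:(.*);动态:(\d+);关注:(\d+);粉丝:(\d+)$')


def parse_line(line):
    """Turn one output line back into a dict; return None if it doesn't match."""
    m = LINE_RE.match(line.rstrip('\n'))
    if m is None:
        return None
    nickname, events, follows, followeds = m.groups()
    return {'nickname': nickname, 'eventCount': int(events),
            'follows': int(follows), 'followeds': int(followeds)}


def load_fans(paths):
    """Read and parse every matching line from the given txt files."""
    fans = []
    for path in paths:
        with open(path, encoding='utf-8') as f:
            for line in f:
                fan = parse_line(line)
                if fan is not None:
                    fans.append(fan)
    return fans
```

The nickname group is greedy, so a nickname that itself contains `;` still parses: the three numeric fields are matched from the end of the line. For example, `load_fans(glob.glob('fensi/*.txt'))` would read every file in the folder.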

Next, in the same directory, create a fensi folder containing shengcheng.py, which creates the 50 txt files to be written to:

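```python
# Run inside the fensi folder; file names must match those used in iputpipelines.py
for i in range(1, 51):
    fp = '鱿小鱼粉丝' + str(i) + '.txt'
    # 'w' mode empties an existing file, so each run starts from clean files
    with open(fp, 'w', encoding='utf-8'):
        pass
```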

Then add the following to settings.py:

```python
CONCURRENT_REQUESTS = 100
CONCURRENT_REQUESTS_PER_DOMAIN = 100
CONCURRENT_REQUESTS_PER_IP = 100
COOKIES_ENABLED = False
ITEM_PIPELINES = {
    # txt pipeline; use 'wyy.pipelines.WyyPipeline' to write to MongoDB instead
    'wyy.iputpipelines.WyyPipeline': 300,
}
```

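With this setup it should be possible to scrape about 5 million fans, spread across 50 txt files. Speed depends on your machine: the better the hardware, the faster the crawl. I have not actually tried scraping that many.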
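The figures behind that estimate, using the 4923265 fan count hard-coded in the spider and 20 fans per request:

```python
import math

TOTAL_FANS = 4923265  # fan count hard-coded in the spider
PAGE_SIZE = 20        # "limit" sent with each request
N_FILES = 50          # number of txt output files

# Requests needed, rounding up so the final partial page is fetched
pages = math.ceil(TOTAL_FANS / PAGE_SIZE)
# Truncating with int() instead would skip that partial page
pages_truncated = int(TOTAL_FANS / PAGE_SIZE)
skipped = TOTAL_FANS - pages_truncated * PAGE_SIZE
# Average number of lines per file if fans are spread evenly
per_file = TOTAL_FANS / N_FILES

print(pages, pages_truncated, skipped, round(per_file))  # → 246164 246163 5 98465
```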

If life could be lived over again, it wouldn't be called life.

浙公网安备 33010602011771号
