Install the JDK:

- jdk-8u201-linux-x64.tar.gz

Configure the Java environment variables

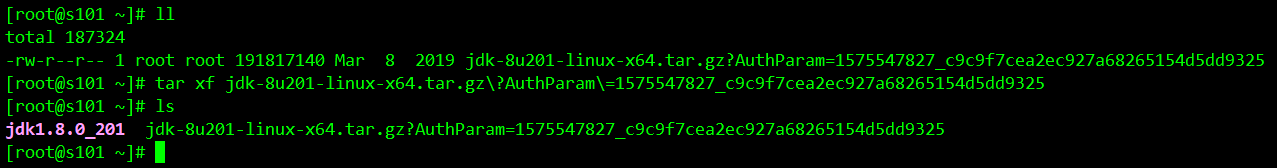

Upload jdk-8u201-linux-x64.tar.gz to the /opt/ directory, extract it, and update the environment variables in /etc/profile.

Download page: https://www.oracle.com/java/technologies/javase-java-archive-javase8-downloads.html

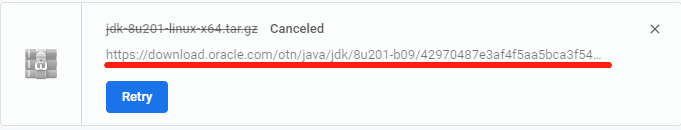

You can copy the link highlighted in red and download from it

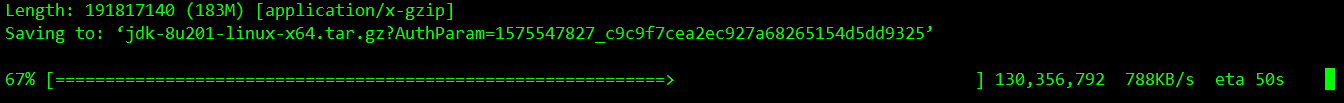

wget -O jdk-8u201-linux-x64.tar.gz 'https://download.oracle.com/otn/java/jdk/8u201-b09/42970487e3af4f5aa5bca3f542482c60/jdk-8u201-linux-x64.tar.gz?AuthParam=1575547827_c9c9f7cea2ec927a68265154d5dd9325'

Extract the archive as usual.

Installation and configuration commands

tar xf jdk-8u201-linux-x64.tar.gz

echo -e '\n# Java environment variables' >> /etc/profile

echo -e 'export JAVA_HOME=/opt/jdk1.8.0_201' >> /etc/profile

echo -e 'export PATH=$PATH:$JAVA_HOME/bin' >> /etc/profile

source /etc/profile

java -version

java version "1.8.0_201"

Java(TM) SE Runtime Environment (build 1.8.0_201-b09)

Java HotSpot(TM) 64-Bit Server VM (build 25.201-b09, mixed mode)

If you see the output above, the Java environment is installed and configured correctly.
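Running the `echo ... >> /etc/profile` commands a second time leaves duplicate lines in the file. Below is a minimal sketch of an idempotent variant. `append_once` is a hypothetical helper name, and the demo writes to a scratch file rather than /etc/profile.

```shell
#!/bin/sh
# append_once FILE LINE: append LINE to FILE only if no identical line exists.
# (Hypothetical helper, not part of any tool used in this post.)
append_once() {
    file=$1
    line=$2
    # -x matches whole lines only, -F treats the pattern as a fixed string
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Demo against a scratch file; point it at /etc/profile on a real host.
profile=$(mktemp)
append_once "$profile" 'export JAVA_HOME=/opt/jdk1.8.0_201'
append_once "$profile" 'export PATH=$PATH:$JAVA_HOME/bin'
append_once "$profile" 'export JAVA_HOME=/opt/jdk1.8.0_201'   # repeat call adds nothing
cat "$profile"
```

The same helper would work for the Maven, Ant, protobuf and Hadoop variables set later on.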

Prepare the packages

Packages required:

- apache-maven-3.6.0-bin.tar.gz

- hadoop-3.2.1-src.tar.gz

- protobuf-2.5.0.tar.gz

- apache-ant-1.9.14-bin.zip

Configure the Maven environment variables

Upload apache-maven-3.6.0-bin.tar.gz to the /opt/ directory, extract it, and update the environment variables in /etc/profile.

tar xf apache-maven-3.6.0-bin.tar.gz

echo -e '\n# Maven environment variables' >> /etc/profile

echo -e 'export MAVEN_HOME=/opt/apache-maven-3.6.0' >> /etc/profile

echo -e 'export PATH=$PATH:$MAVEN_HOME/bin' >> /etc/profile

source /etc/profile

mvn -v

Apache Maven 3.6.0 (97c98ec64a1fdfee7767ce5ffb20918da4f719f3; 2018-10-25T02:41:47+08:00)

Maven home: /opt/apache-maven-3.6.0

Java version: 1.8.0_201, vendor: Oracle Corporation, runtime: /opt/jdk1.8.0_201/jre

Default locale: en_US, platform encoding: UTF-8

OS name: "linux", version: "3.10.0-957.el7.x86_64", arch: "amd64", family: "unix"

If you see the output above, Maven is installed and configured correctly.

Configure the Ant environment variables

unzip apache-ant-1.9.14-bin.zip

echo -e '\n# Ant environment variables' >> /etc/profile

echo -e 'export ANT_HOME=/opt/apache-ant-1.9.14' >> /etc/profile

echo -e 'export PATH=$PATH:$ANT_HOME/bin' >> /etc/profile

source /etc/profile

ant -version

Apache Ant(TM) version 1.9.14 compiled on March 12 2019

If you see the output above, Ant is installed and configured correctly.

Install the compiler and related dependencies

yum install -y glibc-headers gcc-c++ make cmake

Install protobuf

Upload protobuf-2.5.0.tar.gz to the /opt/ directory, extract it, compile and install it, then update the environment variables in /etc/profile.

tar xf protobuf-2.5.0.tar.gz

cd protobuf-2.5.0/

./configure

make

make check

make install

ldconfig

echo -e '\n# protobuf environment variables' >> /etc/profile

echo -e 'export PROTOBUF_HOME=/opt/protobuf-2.5.0' >> /etc/profile

echo -e 'export PATH=$PATH:$PROTOBUF_HOME/bin' >> /etc/profile

source /etc/profile

protoc --version

libprotoc 2.5.0

If you see the output above, protoc is installed and configured correctly.
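As far as I know, the Hadoop 3.2.x build expects protoc 2.5.0 exactly, so it can be worth checking the version string in a script rather than by eye. A small sketch follows; `protoc_is` is a hypothetical helper, and the sample string is the output shown above.

```shell
#!/bin/sh
# protoc_is VERSION_OUTPUT WANTED: succeed if the text printed by
# `protoc --version` (for example "libprotoc 2.5.0") names exactly WANTED.
protoc_is() {
    [ "$1" = "libprotoc $2" ]
}

# Sample string taken from the output above; on a real host use
#   protoc_is "$(protoc --version)" 2.5.0
if protoc_is "libprotoc 2.5.0" 2.5.0; then
    echo "protoc version OK"
else
    echo "unexpected protoc version" >&2
fi
```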

Install the openssl and ncurses libraries

yum install -y openssl-devel ncurses-devel
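Before starting the long build, a quick check that every tool installed so far is actually on the PATH can save a failed run. This is only a sketch: `missing_tools` is a hypothetical helper, and the tool list is my reading of the steps above.

```shell
#!/bin/sh
# missing_tools NAME...: print each command that is not on PATH;
# the return status is non-zero if any were missing.
missing_tools() {
    rc=0
    for tool in "$@"; do
        if ! command -v "$tool" >/dev/null 2>&1; then
            printf 'missing: %s\n' "$tool"
            rc=1
        fi
    done
    return "$rc"
}

# Tools installed in the steps above (the exact list is an assumption).
if missing_tools java mvn ant protoc gcc g++ make cmake; then
    echo "all build tools found"
fi
```

If a tool is reported missing right after editing /etc/profile, running `source /etc/profile` in the current shell is the first thing to try.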

Build Hadoop from source

Hadoop download location: https://archive.apache.org/dist/hadoop/common

Or look it up on the mirror selection page:

https://www.apache.org/dyn/closer.cgi/hadoop/common/

tar xf hadoop-3.2.1-src.tar.gz

cd /opt/hadoop-3.2.1-src

mvn clean package -Pdist,native -DskipTests -Dtar

Note: the Hadoop build takes about 30 minutes, so please be patient. Once it finishes, continue with the steps below.
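With the `dist` profile and `-Dtar`, the build normally leaves the binary tarball under `hadoop-dist/target/` inside the source tree. The sketch below only computes that path and checks whether the file exists; `dist_tarball` is a hypothetical helper, and the source directory and version are the ones used in this post.

```shell
#!/bin/sh
# dist_tarball SRC_DIR VERSION: print where a -Pdist -Dtar build is expected
# to leave the binary tarball.
dist_tarball() {
    printf '%s/hadoop-dist/target/hadoop-%s.tar.gz\n' "$1" "$2"
}

tarball=$(dist_tarball /opt/hadoop-3.2.1-src 3.2.1)
if [ -f "$tarball" ]; then
    echo "build output: $tarball"
else
    echo "not found (build not run or failed): $tarball"
fi
```

Once a Hadoop distribution is unpacked and on the PATH, `hadoop checknative -a` reports which native libraries it can load.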

cd /opt

rm -rf /opt/hadoop-3.2.1-src

# Hadoop 3.2.0 is no longer available at this point

wget http://mirrors.tuna.tsinghua.edu.cn/apache/hadoop/common/hadoop-3.2.1/hadoop-3.2.1.tar.gz

tar xf hadoop-3.2.1.tar.gz

echo -e '\n# Hadoop environment variables' >> /etc/profile

echo -e 'export HADOOP_HOME=/opt/hadoop-3.2.1' >> /etc/profile

echo -e 'export PATH=$PATH:$HADOOP_HOME/bin' >> /etc/profile

echo -e 'export PATH=$PATH:$HADOOP_HOME/sbin' >> /etc/profile

source /etc/profile

Edit the Hadoop core configuration file /opt/hadoop-3.2.1/etc/hadoop/core-site.xml

<configuration>

<!-- Address of the NameNode in HDFS -->

<property>

<name>fs.defaultFS</name>

<value>hdfs://centos7-hadoop01:9000</value>

</property>

<!-- Directory where Hadoop stores the files it generates at runtime (DataNode data) -->

<property>

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-2.7.2/data/tmp</value>

</property>

</configuration>
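If the same file has to be produced on several machines, generating it from a few variables avoids copy-and-paste mistakes. The following is a sketch only: `write_core_site` is a hypothetical helper, the values are copied from the file above, and the demo writes to a scratch directory rather than the real etc/hadoop.

```shell
#!/bin/sh
# write_core_site FILE HOST PORT TMP_DIR: generate a minimal core-site.xml.
write_core_site() {
    cat > "$1" <<EOF
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://$2:$3</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>$4</value>
  </property>
</configuration>
EOF
}

# Demo in a scratch directory; values copied from the file above.
conf_dir=$(mktemp -d)
write_core_site "$conf_dir/core-site.xml" centos7-hadoop01 9000 /opt/module/hadoop-2.7.2/data/tmp
grep '<value>' "$conf_dir/core-site.xml"
```

On a working installation, `hdfs getconf -confKey fs.defaultFS` prints the value Hadoop actually picked up.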

Edit the HDFS configuration file /opt/hadoop-3.2.1/etc/hadoop/hdfs-site.xml

<configuration>

<!-- Number of HDFS replicas; only one node (hadoop01) is configured here, so the replica count is 1 -->

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

</configuration>

Append the following to the Hadoop environment file /opt/hadoop-3.2.1/etc/hadoop/hadoop-env.sh

export JAVA_HOME=/opt/jdk1.8.0_201

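Start the cluster in pseudo-distributed mode

Format the NameNode: do this only before the first start and never again afterwards. Reformatting creates a brand-new NameNode, which can no longer find the existing DataNodes.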

hdfs namenode -format
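Because formatting twice is the mistake to avoid, a small guard can check whether the NameNode metadata already exists before formatting. This sketch assumes `dfs.namenode.name.dir` is left at its default, which places it under `hadoop.tmp.dir` as `dfs/name`. `namenode_formatted` is a hypothetical helper name.

```shell
#!/bin/sh
# namenode_formatted TMP_DIR: succeed if a NameNode VERSION file already exists.
# Assumes dfs.namenode.name.dir keeps its default location, TMP_DIR/dfs/name.
namenode_formatted() {
    [ -f "$1/dfs/name/current/VERSION" ]
}

tmp_dir=/opt/module/hadoop-2.7.2/data/tmp   # hadoop.tmp.dir from core-site.xml
if namenode_formatted "$tmp_dir"; then
    echo "NameNode already formatted, skipping"
else
    echo "no VERSION file under $tmp_dir, run: hdfs namenode -format"
fi
```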

Start the NameNode:


hadoop-daemon.sh start namenode

Start the DataNode:

hadoop-daemon.sh start datanode

Check the running processes with jps; the output looks like this:

8229 NameNode

8246 Jps

This shows that the NameNode started successfully.
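The jps check can also be scripted so that each expected daemon is tested by name. A minimal sketch: `has_daemon` is a hypothetical helper, and the sample text is the jps output shown above.

```shell
#!/bin/sh
# has_daemon JPS_OUTPUT NAME: succeed if the jps output lists a JVM
# whose main class (second column) is NAME.
has_daemon() {
    printf '%s\n' "$1" | awk -v name="$2" '$2 == name { found = 1 } END { exit !found }'
}

# Sample copied from the output above; on a real host use: jps_out=$(jps)
jps_out='8229 NameNode
8246 Jps'

for daemon in NameNode DataNode; do
    if has_daemon "$jps_out" "$daemon"; then
        echo "$daemon is running"
    else
        echo "$daemon is NOT running"
    fi
done
```

With the sample above the DataNode is reported as not running, since it does not appear in that listing. On a real host that would be a reason to look at the DataNode log.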

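Check that the web UI is reachable: http://localhost:9870 (the default NameNode HTTP port in Hadoop 3.x; 50070 was the Hadoop 2.x default)

Note: if formatting fails, turn off the firewall and reboot the system.

Configure the hostnames

Edit the /etc/hosts file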

echo -e '192.168.148.13 centos7-hadoop01' >> /etc/hosts

echo -e '192.168.148.14 centos7-hadoop02' >> /etc/hosts

echo -e '192.168.148.15 centos7-hadoop03' >> /etc/hosts
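To confirm the entries are in place, the file can be queried by hostname. The sketch below uses a scratch copy with the three entries from this post; `host_ip` is a hypothetical helper.

```shell
#!/bin/sh
# host_ip HOSTS_FILE NAME: print the first IP mapped to NAME,
# skipping lines that start with a comment.
host_ip() {
    awk -v name="$2" '
        /^[[:space:]]*#/ { next }
        { for (i = 2; i <= NF; i++) if ($i == name) { print $1; exit } }
    ' "$1"
}

# Scratch file holding the same three entries as above.
hosts=$(mktemp)
printf '%s\n' \
    '192.168.148.13 centos7-hadoop01' \
    '192.168.148.14 centos7-hadoop02' \
    '192.168.148.15 centos7-hadoop03' > "$hosts"
host_ip "$hosts" centos7-hadoop02
```

On the real machine, `host_ip /etc/hosts centos7-hadoop01` or `getent hosts centos7-hadoop01` gives the same kind of check.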

Common errors

Starting namenodes on [centos-hadoop01]

ERROR: Attempting to operate on hdfs namenode as root

ERROR: but there is no HDFS_NAMENODE_USER defined. Aborting operation.

Starting datanodes

ERROR: Attempting to operate on hdfs datanode as root

ERROR: but there is no HDFS_DATANODE_USER defined. Aborting operation.

Starting secondary namenodes [centos7-hadoop01]

ERROR: Attempting to operate on hdfs secondarynamenode as root

ERROR: but there is no HDFS_SECONDARYNAMENODE_USER defined. Aborting operation.

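These errors mean that HDFS refuses to start as root unless the corresponding user variables are defined. Add the following lines near the top (after the #! line) of both sbin/start-dfs.sh and sbin/stop-dfs.sh: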

HDFS_DATANODE_USER=root

HADOOP_SECURE_DN_USER=hdfs

HDFS_NAMENODE_USER=root

HDFS_SECONDARYNAMENODE_USER=root
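A different approach from the one above, which avoids editing the start and stop scripts, is to export the per-daemon user variables from etc/hadoop/hadoop-env.sh, which the Hadoop 3 shell scripts source. The sketch below is not what this post does. `add_run_as_user` is a hypothetical helper, and the demo writes to a scratch file instead of the real hadoop-env.sh.

```shell
#!/bin/sh
# add_run_as_user ENV_FILE USER: append an export line for each of the three
# HDFS daemons, skipping lines that are already present.
add_run_as_user() {
    for var in HDFS_NAMENODE_USER HDFS_DATANODE_USER HDFS_SECONDARYNAMENODE_USER; do
        line="export $var=$2"
        grep -qxF -- "$line" "$1" 2>/dev/null || printf '%s\n' "$line" >> "$1"
    done
}

# Scratch file standing in for /opt/hadoop-3.2.1/etc/hadoop/hadoop-env.sh
env_file=$(mktemp)
add_run_as_user "$env_file" root
add_run_as_user "$env_file" root   # second call adds nothing
cat "$env_file"
```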

Follow-up questions

- How the NameNode works

- How the DataNode works

- How checkpointing works

- What rack awareness is for

- What purpose the edit log and the fsimage file serve

