In software transactional memory (STM), word-based or object-based: which is better?

Current software transactional memory algorithms can be divided into two classes, word-based and object-based, according to their locking granularity. So a question arises: which is better?

TinySTM is word-based: its basic locking units are memory words. In [1], Section 4 shows how the authors dynamically tune parameters to reach the best performance of TinySTM for different workloads. In TinySTM, multiple memory addresses are mapped to an entry i of a lock array by a hash function f:

i = f(address) mod size_of_lock_array
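The mapping above can be sketched as a small C++ lock table. This is a minimal sketch, not TinySTM's actual code: it assumes 8-byte words and takes f(address) = address >> 3 (dropping the byte offset within a word); the class `LockArray` and its methods are hypothetical names.

```cpp
#include <atomic>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical word-based lock table. Assumes 8-byte words and
// f(address) = address >> 3; each entry is a versioned lock word.
class LockArray {
public:
    explicit LockArray(std::size_t size) : locks_(size) {
        assert(size > 0);
    }

    // i = f(address) mod size_of_lock_array
    std::size_t index_of(const void* address) const {
        auto a = reinterpret_cast<std::uintptr_t>(address);
        return static_cast<std::size_t>((a >> 3) % locks_.size());
    }

    // The lock that protects the word containing `address`.
    std::atomic<std::uint64_t>& lock_for(const void* address) {
        return locks_[index_of(address)];
    }

    std::size_t size() const { return locks_.size(); }

private:
    std::vector<std::atomic<std::uint64_t>> locks_;  // value-initialized to 0
};
```

With 256 entries, two words that are 256 × 8 = 2048 bytes apart map to the same lock even when they belong to unrelated data, while adjacent words map to adjacent entries.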

For each application (the red-black tree and the sorted lists), the authors tried lock-array sizes (i.e., numbers of entries in the lock array) ranging from 2^8 to 2^24. Such a wide trial-and-error range suggests that one can hardly know in advance how many locks a specific multi-core application needs. All one can do is let the machine search for the best configuration by brute force.
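The brute-force search described above amounts to a sweep over powers of two. In this hypothetical sketch, a made-up cost model stands in for actually running the benchmark: every address that lands on an already-used lock entry adds an abort penalty, and every lock entry adds a small per-lock penalty. The weights, the working set, the hash f(address) = address >> 3, and the function names are all assumptions for illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <unordered_set>
#include <vector>

// Counts addresses in a sample working set that land on a lock entry already
// used by another address: a crude proxy for false sharing.
// Assumes 8-byte words and the hypothetical hash f(address) = address >> 3.
std::size_t count_collisions(const std::vector<std::uintptr_t>& addrs,
                             std::size_t size) {
    std::unordered_set<std::size_t> used;
    std::size_t collisions = 0;
    for (std::uintptr_t a : addrs) {
        if (!used.insert((a >> 3) % size).second) {
            ++collisions;  // entry already taken by another address
        }
    }
    return collisions;
}

// Exhaustively tries every lock-array size 2^lo .. 2^hi and returns the
// exponent with the lowest cost; ties keep the smaller size.
int best_exponent(int lo, int hi,
                  const std::function<double(std::size_t)>& cost) {
    assert(lo <= hi);
    int best = lo;
    double best_cost = cost(std::size_t{1} << lo);
    for (int e = lo + 1; e <= hi; ++e) {
        double c = cost(std::size_t{1} << e);
        if (c < best_cost) {
            best_cost = c;
            best = e;
        }
    }
    return best;
}
```

For a working set of 4,096 consecutive 8-byte words, a cost of 1.0 per collision plus 0.001 per lock entry makes `best_exponent(8, 24, cost)` return 12 (4,096 entries). A real tuner would measure throughput instead, so each step of the sweep costs a full benchmark run.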

After all, words seldom carry meaning unless they are grouped into a larger, meaningful entity. The concepts of object-oriented programming have enabled programmers to write ever larger code bases because they reflect how people perceive the world.

Both word-based and object-based STM map multiple memory addresses to a single lock. If too many addresses map to one lock, unrelated data ends up sharing it (false sharing), which causes unnecessary transaction aborts. If too few addresses map to each lock, the lock array needs far more entries, which might slow down validation for update transactions because there are more locks to check. Word-based and object-based STM differ in their mapping strategy. Word-based STM maps a fixed number of memory addresses to each lock and uses no semantic information from the program; that is why the authors of [1] tried such a wide range of lock-array sizes to find the best number of locks. Object-based STM, by contrast, can readily exploit the semantic information in the user's program, which is embedded in the programmer's object-oriented design.
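The difference in mapping strategy can be made concrete with a single index function whose key depends on the granularity. This is a simplified, hypothetical sketch: hashing the enclosing object's address into the same lock array is only an approximation of object-based designs, `point64` is an assumed two-field struct whose 64-bit fields each fill one 8-byte word, and `lock_index` and `Granularity` are illustrative names.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical lock-array size (2^16 entries).
constexpr std::size_t kLockArraySize = std::size_t{1} << 16;

enum class Granularity { Word, Object };

// Hypothetical two-field object; each field occupies exactly one 8-byte word.
struct point64 {
    std::int64_t a;
    std::int64_t b;
};

// Returns the lock-array index protecting `field` inside `object`.
// Word-based: the key is the field's own address, so neighboring fields get
// different locks regardless of meaning. Object-based (simplified): the key
// is the enclosing object's address, so all fields share one lock.
std::size_t lock_index(const void* object, const void* field, Granularity g) {
    assert(object != nullptr && field != nullptr);
    const void* key = (g == Granularity::Word) ? field : object;
    auto addr = reinterpret_cast<std::uintptr_t>(key);
    return static_cast<std::size_t>((addr >> 3) % kLockArraySize);
}
```

For an object `p`, `lock_index(&p, &p.a, Granularity::Word)` and `lock_index(&p, &p.b, Granularity::Word)` differ, whereas under `Granularity::Object` both fields resolve to the same entry.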

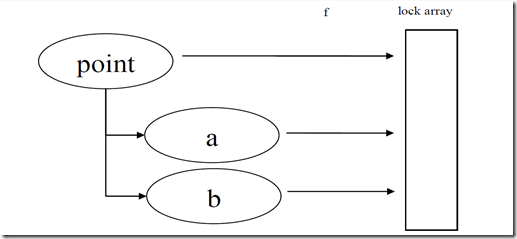

So why not map objects of different granularities into the lock array, so that each lock entry dynamically protects the addresses that semantically need to be locked together, rather than just addresses that are physically adjacent or happen to yield the same value under the hash function f? Suppose there is an object of the class point, which contains two fields, a and b:

```cpp
class point
{
public:
    int a;
    int b;
};
```

Is it possible to map the address of this object, as well as the address of each of its fields, to several entries in the lock array?
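The proposal can be sketched by reserving separate lock-array entries for objects and for fields, then checking whether two writers need a common entry. In this hypothetical sketch, the lower half of the array holds field entries and the upper half holds object entries, a field's key is its address divided by sizeof(int), and all names (`field_entry`, `object_entry`, `write_locks`, `conflicts`) are assumptions; `point` is written as a struct so its fields are public. The sketch assumes all transactions use the same granularity for a given object; mixing the two on one object is not handled.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <set>

// Hypothetical layout: lower half = field-level entries, upper half =
// object-level entries, so an object's entry never aliases the entry of its
// first field (which has the same address).
constexpr std::size_t kLockArraySize = std::size_t{1} << 16;
constexpr std::size_t kHalf = kLockArraySize / 2;

std::size_t field_entry(const void* field) {
    auto a = reinterpret_cast<std::uintptr_t>(field);
    return static_cast<std::size_t>((a / sizeof(int)) % kHalf);
}

std::size_t object_entry(const void* object) {
    auto a = reinterpret_cast<std::uintptr_t>(object);
    return kHalf + static_cast<std::size_t>((a / sizeof(int)) % kHalf);
}

enum class Granularity { Field, Object };

// Lock entries a transaction acquires to write `field` inside `object`.
std::set<std::size_t> write_locks(const void* object, const void* field,
                                  Granularity g) {
    assert(object != nullptr && field != nullptr);
    if (g == Granularity::Object) {
        return {object_entry(object)};
    }
    return {field_entry(field)};
}

// Two transactions conflict if they need at least one common lock entry.
bool conflicts(const std::set<std::size_t>& x, const std::set<std::size_t>& y) {
    for (std::size_t i : x) {
        if (y.count(i) != 0) {
            return true;
        }
    }
    return false;
}

struct point {
    int a;
    int b;
};
```

Under object granularity, a transaction writing `p.a` and one writing `p.b` conflict even though they touch different fields; under field granularity they do not. The extra object-level entries are the lock-entry overhead that the trade-off in the following paragraph accepts.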

In this way, we would be able to tune the TM dynamically so that it locks exactly the objects that semantically need to be locked. The drawback of this method is that it would spend many lock entries on objects and fields that may never need them, but this waste is an expected trade-off for better performance.

Reference:

[1] Pascal Felber, Christof Fetzer, and Torvald Riegel. Dynamic Performance Tuning of Word-Based Software Transactional Memory.

posted on 2012-11-09 16:37 Fractal Matrix

Zhejiang Public Security Internet Filing No. 33010602011771
