rv1126开发板v8实时目标识别

项目背景

项目背景是想通过v8实时监测落石。以往开发板实时监测可能是摄像头(通过usb等)直连开发板,可以参考人脸识别/车牌识别等场景。这个项目的摄像头是通过rtsp协议给开发板传递数据,在开发板上进行识别后,将识别的结果返回服务器平台。

1.搭建环境

我的rv1126板子厂家给了很多的教程,按照他的教程基本上不会踩坑。其他的板子也会有教程,根据你自己的开发板型号去找。

2.视频拉流

踩过一次坑,当时看EASY EAI灵眸科技 | 让边缘AI落地更简单这个链接下的多路网络摄像头取流方案。以为将摄像头数量改成1,然后把v8检测模型一接,就万事大吉了。等到仔细看代码的时候发现,创建视频流通道的函数为阻塞函数,函数调用成功后不会返回,而是进入无限事件循环,直到断开连接或函数内部出现错误,否则该视频流通道就不会断,所以此函数是没有退出机制的,更不用提后面接v8检测模型了。

于是乎放弃,改用相对熟悉的opencv方式取视频流,符合(取帧+检测+返回结果)这一流程。拉取视频流代码如下:

std::string rtsp_url = "rtsp://用户名:密码@摄像头ip/streaming/channels/1"; // 打开RTSP流 cv::VideoCapture cap(rtsp_url, cv::CAP_FFMPEG); if (!cap.isOpened()) { std::cerr << "Error: Unable to open RTSP stream." << std::endl; return -1; } std::cout << "RTSP stream opened successfully." << std::endl; // 获取流的属性 double fps = cap.get(cv::CAP_PROP_FPS); int frame_width = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_WIDTH)); int frame_height = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_HEIGHT)); std::cout << "Stream properties: " << std::endl; std::cout << " Frame rate (FPS): " << fps << std::endl; std::cout << " Frame width: " << frame_width << std::endl; std::cout << " Frame height: " << frame_height << std::endl;

3.v8检测

这一部分参考https://github.com/airockchip/rknn_model_zoo/tree/main/examples/yolov8中的图像检测代码和EASY EAI灵眸科技 | 让边缘AI落地更简单中的v8检测代码(以后者为主),以及通过显示屏实时检测人脸的代码(链接同上),目录结构如下:

yolov8_detect_C_demo

├─ CMakeLists.txt

├─ main.cpp

├─ yolov8_detect_demo_release

│ ├─ yolov8m_rv1126.rknn

├─ lib

│ ├─ file_utils.cpp

│ ├─ yolov8.cpp

│ └─ yolov8_postprocess.cpp

└─ include

├─ file_utils.h

├─ postprocess.h

└─ yolov8.h

main.cpp文件和CMakeLists.txt文件需要自己写。main函数中有这几个部分:

构建v8检测器类:源代码中yolov8_detect_init函数放到类构造函数,源代码中yolov8_detect_release函数放到类析构函数,yolov8_detect_run函数放到类成员函数,定义成员变量

class YOLOv8Detector { public: YOLOv8Detector(const std::string& model_path) { // 初始化 YOLOv8 模型 yolov8_detect_init(model_path.c_str(), &rknn_app_ctx); } ~YOLOv8Detector() { // 释放 YOLOv8 模型资源 yolov8_detect_release(&rknn_app_ctx); } int detect(cv::Mat& frame, detect_result_group_t& detect_result_group) { // 运行 YOLOv8 检测 return yolov8_detect_run(&rknn_app_ctx, frame, &detect_result_group); } private: rknn_app_context_t rknn_app_ctx; };

std::string getClassLabel(int cls_id) { std::vector<std::string> coco_classes = { "person", "bicycle", "car", "motorbike", "aeroplane", "bus", "train", "truck", "boat", "traffic light", "fire hydrant", "stop sign", "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow", "elephant", "bear", "zebra", "giraffe", "backpack", "umbrella", "handbag", "tie", "suitcase", "frisbee", "skis", "snowboard", "sports ball", "kite", "baseball bat", "baseball glove", "skateboard", "surfboard", "tennis racket", "bottle", "wine glass", "cup", "fork", "knife", "spoon", "bowl", "banana", "apple", "sandwich", "orange", "broccoli", "carrot", "hot dog", "pizza", "donut", "cake", "chair", "sofa", "pottedplant", "bed", "diningtable", "toilet", "tvmonitor", "laptop", "mouse", "remote", "keyboard", "cell phone", "microwave", "oven", "toaster", "sink", "refrigerator", "book", "clock", "vase", "scissors", "teddy bear", "hair drier", "toothbrush" }; if (cls_id >= 0 && cls_id < coco_classes.size()) { return coco_classes[cls_id]; } return "unknown"; // 如果类别 ID 未找到,返回 "unknown" }

int processFrame(cv::Mat& frame, detect_result_group_t& detect_result_group, YOLOv8Detector& detector) { // 运行 YOLOv8 检测 int ret = detector.detect(frame, detect_result_group); if (ret != 0) { std::cerr << "YOLOv8 detection failed!" << std::endl; return -1; } // 在帧上绘制检测结果 for (int i = 0; i < detect_result_group.count; i++) { detect_result_t* det_result = &detect_result_group.results[i]; cv::Rect box(det_result->box.left, det_result->box.top, det_result->box.right - det_result->box.left, det_result->box.bottom - det_result->box.top); // 获取类别名称 std::string label = getClassLabel(det_result->cls_id); // 绘制检测框 cv::rectangle(frame, box, cv::Scalar(0, 255, 0), 4); // 加粗边框 // 绘制标签 cv::putText(frame, label, cv::Point(box.x, box.y - 10), cv::FONT_HERSHEY_SIMPLEX, 1.0, cv::Scalar(0, 255, 0), 3); // 加粗字体 } return 0; }

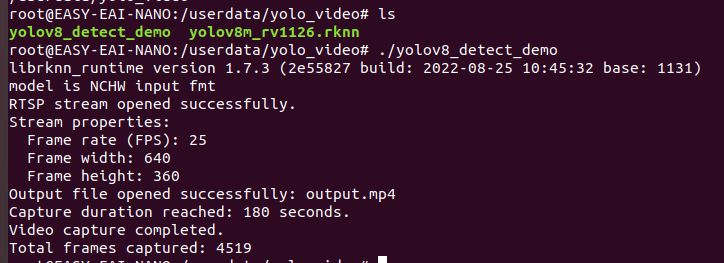

主函数:一开始的时候,是打算将显示结果保存到本地,只保存3分钟的视频段,看看能不能识别到目标。最后可以识别到目标,但是速度非常慢。想了一下可能这几个原因:1.分辨率过大(可以把视频流的主码流改为子码流,获取视频流)2.opencv的频繁读写(不保存为视频段,而是通过显示屏实时显示)3.获取帧,进行检测,将帧写入视频段上述过程都是单线程(采用多线程进行处理)

int main() { std::string rtsp_url = "rtsp://用户名:密码@IP/streaming/channels/1"; std::string output_file = "output.mp4"; int duration_seconds = 180; // 捕获持续时间(秒) std::string model_path = "yolov8m_rv1126.rknn"; YOLOv8Detector detector(model_path); // 打开 RTSP 流 cv::VideoCapture cap(rtsp_url, cv::CAP_FFMPEG); if (!cap.isOpened()) { std::cerr << "Error: Unable to open RTSP stream." << std::endl; return -1; } std::cout << "RTSP stream opened successfully." << std::endl; // 获取流的属性 double fps = cap.get(cv::CAP_PROP_FPS); int frame_width = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_WIDTH)); int frame_height = static_cast<int>(cap.get(cv::CAP_PROP_FRAME_HEIGHT)); std::cout << "Stream properties: " << std::endl; std::cout << " Frame rate (FPS): " << fps << std::endl; std::cout << " Frame width: " << frame_width << std::endl; std::cout << " Frame height: " << frame_height << std::endl; // 创建保存对象 cv::VideoWriter writer(output_file, cv::VideoWriter::fourcc('m', 'p', '4', 'v'), fps, cv::Size(frame_width, frame_height)); if (!writer.isOpened()) { std::cerr << "Error: Unable to open output file." << std::endl; return -1; } std::cout << "Output file opened successfully: " << output_file << std::endl; // 开始捕获 cv::Mat frame; auto start_time = std::chrono::system_clock::now(); int frame_count = 0; while (true) { cap >> frame; // 从流中读取帧 if (frame.empty()) { std::cerr << "Error: Unable to read frame from RTSP stream." << std::endl; break; } detect_result_group_t detect_result_group; // 处理帧 processFrame(frame, detect_result_group, detector); // 将处理后的帧写入输出文件 writer.write(frame); frame_count++; // 检查是否达到指定的持续时间 auto elapsed_time = std::chrono::duration_cast<std::chrono::seconds>(std::chrono::system_clock::now() - start_time).count(); if (elapsed_time >= duration_seconds) { std::cout << "Capture duration reached: " << duration_seconds << " seconds." << std::endl; break; } } std::cout << "Video capture completed. Total frames captured: " << frame_count << std::endl; // 释放资源 cap.release(); writer.release(); return 0; }

改进版本1:上述代码是将检测视频段截取3分钟保存在本地,由于单线程+i/o频繁读取+拉流拉取的主码流,导致速度很慢无法进行实时显示。下面的代码对此进行改进,适用于无显示屏的情况。依然是保存为3分钟的本地视频段,与此同时将主码流改为子码流,以及引入多进程。

int main() { // 各种初始化// 打开RTSP流// 创建保存对象// 多线程开始(替代开始捕获中的单线程) std::mutex frame_mutex; std::condition_variable frame_cond; cv::Mat current_frame; // 将读取到的帧存储到共享变量 current_frame 中 bool is_running = true; // 标志变量 int total_frames_captured = 0; // 总帧数计数器 // 捕获帧的线程 std::thread capture_thread([&] { cv::Mat frame; auto start_time = std::chrono::system_clock::now(); while (is_running) { cap >> frame; // 从流中读取帧 if (frame.empty()) { std::cerr << "Error: Unable to read frame from RTSP stream." << std::endl; break; } // 使用 std::lock_guard 获取 frame_mutex 锁,将帧复制到 current_frame { std::lock_guard<std::mutex> lock(frame_mutex); current_frame = frame.clone(); total_frames_captured++; // 增加总帧数计数器 } frame_cond.notify_one(); //调用 frame_cond.notify_one() 通知处理帧的线程,唤醒一个等待的线程 // 检查是否达到指定的持续时间 auto elapsed_time = std::chrono::duration_cast<std::chrono::seconds>(std::chrono::system_clock::now() - start_time).count(); if (elapsed_time >= duration_seconds) { std::cout << "Capture duration reached: " << duration_seconds << " seconds." << std::endl; { std::lock_guard<std::mutex> lock(frame_mutex); is_running = false; current_frame.release(); // 清空 current_frame } frame_cond.notify_one(); // 再次调用 frame_cond.notify_one() 通知处理帧的线程 break; } } }); // 处理帧的线程 std::thread process_thread([&] { while (true) { std::unique_lock<std::mutex> lock(frame_mutex); // 调用 frame_cond.wait 等待条件变量,条件是 current_frame 不为空或 is_running 为 false frame_cond.wait(lock, [&] { return !current_frame.empty() || !is_running; }); if (!is_running && current_frame.empty()) { break; } // 将 current_frame 复制到 frame_to_process,释放锁 cv::Mat frame_to_process = current_frame.clone(); current_frame.release(); // 清空 current_frame lock.unlock(); //调用 processFrame 处理帧,并将结果写入输出流 detect_result_group_t detect_result_group; processFrame(frame_to_process, detect_result_group, detector); writer.write(frame_to_process); } }); // 等待线程完成 capture_thread.join(); process_thread.join(); // 释放资源 cap.release(); writer.release(); std::cout << "Video capture completed." << std::endl; std::cout << "Total frames captured: " << total_frames_captured << std::endl; return 0; }

改进之后的主函数部分分为两个线程,一个是取流线程capture_thread,一个是帧处理线程process_thread(关于多线程的讲解,可以见我另一篇笔记)。最后的效果其实已经可以做到实时性了,帧率为4519/180=25fps。

改进版本2:如果没有显示屏,看到这就可以不用看了。下面的改进版本是在显示屏进行实时显示(显示屏的h,w记得修改)

int main() { // 各种初始化// 打开 RTSP 流// 初始化显示(替代创建保存对象) int display_width = 720; int display_height = 1080; int ret = disp_init(display_width, display_height); if (ret) { std::cerr << "Error: Unable to initialize display." << std::endl; return -1; } // 多线程开始// 捕获帧的线程// 处理帧的线程 std::thread process_thread([&] { while (true) { std::unique_lock<std::mutex> lock(frame_mutex); frame_cond.wait(lock, [&] { return !current_frame.empty() || !is_running; }); if (!is_running && current_frame.empty()) { break; } cv::Mat frame_to_process = current_frame.clone(); current_frame.release(); // 清空 current_frame lock.unlock(); detect_result_group_t detect_result_group; processFrame(frame_to_process, detect_result_group, detector); // 缩放帧以匹配显示屏分辨率 cv::Mat display_frame; cv::resize(frame_to_process, display_frame, cv::Size(display_width, display_height), 0, 0, cv::INTER_LINEAR); // 显示帧 disp_commit(display_frame.data, display_width * display_height * 3); } }); // 等待线程完成 capture_thread.join(); process_thread.join(); cap.release(); disp_exit(); std::cout << "Video capture completed." << std::endl; std::cout << "Total frames captured: " << total_frames_captured << std::endl; return 0; }

最后的检测效果如图:

浙公网安备 33010602011771号

浙公网安备 33010602011771号