1. Reading the data

import csv

file_path = r"E:\da3xia\jiqixuexi\SMSSpamCollection"
sms_data = []
sms_label = []
# Each line of the file is "<label>\t<message text>"
with open(file_path, 'r', encoding='utf-8') as sms:
    csv_reader = csv.reader(sms, delimiter='\t')
    for r in csv_reader:
        sms_label.append(r[0])
        sms_data.append(preprocessing(r[1]))  # preprocess each message

2. Data preprocessing

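import nltk
from nltk.corpus import stopwords
from nltk.stem import WordNetLemmatizer

# Preprocessing
def preprocessing(text):
    # Tokenize with nltk: split into sentences, then into words
    tokens = [word for sent in nltk.sent_tokenize(text) for word in nltk.word_tokenize(sent)]
    stops = stopwords.words('english')  # stop words
    tokens = [token for token in tokens if token not in stops]  # drop stop words
    lemmatizer = WordNetLemmatizer()
    tag = nltk.pos_tag(tokens)  # part-of-speech tagging
    newtokens = []
    for i, token in enumerate(tokens):
        if token:
            # get_wordnet_pos maps a Penn Treebank tag to a WordNet POS (or None)
            pos = get_wordnet_pos(tag[i][1])
            if pos:
                word = lemmatizer.lemmatize(token, pos)
                newtokens.append(word)
    # Join the tokens into one string, because the vectorizers in step 4 expect text, not lists
    return ' '.join(newtokens)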
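`preprocessing` relies on a helper named `get_wordnet_pos` whose definition does not appear in this post. The following is only a sketch of what such a helper could look like, assuming it maps the Penn Treebank tags returned by `nltk.pos_tag` to the single-letter part-of-speech codes that `WordNetLemmatizer.lemmatize` accepts, and returns `None` for any other tag:

```python
def get_wordnet_pos(treebank_tag):
    """Map a Penn Treebank POS tag to a WordNet POS code, or None.

    Hypothetical helper: the post does not show its own definition.
    'a', 'v', 'n' and 'r' are the values behind nltk's wordnet.ADJ,
    wordnet.VERB, wordnet.NOUN and wordnet.ADV constants.
    """
    if treebank_tag.startswith('J'):
        return 'a'  # adjective (JJ, JJR, JJS)
    if treebank_tag.startswith('V'):
        return 'v'  # verb (VB, VBD, VBG, ...)
    if treebank_tag.startswith('N'):
        return 'n'  # noun (NN, NNS, NNP, ...)
    if treebank_tag.startswith('R'):
        return 'r'  # adverb (RB, RBR, RBS)
    return None     # anything else: pronouns, determiners, punctuation, ...
```

With a helper like this, every token whose tag is not an adjective, verb, noun, or adverb gets `None` and is dropped by the `if pos:` check in `preprocessing`.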

3. Data splitting: training and test sets

from sklearn.model_selection import train_test_split

x_train, x_test, y_train, y_test = train_test_split(data, target, test_size=0.2, random_state=0, stratify=target)

x_train, x_test, y_train, y_test = train_test_split(sms_data, sms_label, test_size=0.2, stratify=sms_label)
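The `stratify` argument asks `train_test_split` to keep the class proportions the same in both splits, which matters here because spam is the minority class. A small sketch on made-up data (the `texts` list and the 80/20 ham/spam mix are assumptions for illustration, not the real dataset):

```python
from collections import Counter
from sklearn.model_selection import train_test_split

# Toy stand-in for the SMS data: 80 "ham" and 20 "spam" messages (made up)
texts = [f"message {i}" for i in range(100)]
labels = ["ham"] * 80 + ["spam"] * 20

x_tr, x_te, y_tr, y_te = train_test_split(
    texts, labels, test_size=0.2, random_state=0, stratify=labels)

# Count the labels in each split to check that the ratio is preserved
train_counts = Counter(y_tr)
test_counts = Counter(y_te)
print("train:", dict(train_counts))
print("test:", dict(test_counts))
```

Both splits should show the same 4:1 ham-to-spam ratio as the full list.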

4. Text feature extraction

sklearn.feature_extraction.text.CountVectorizer

sklearn.feature_extraction.text.TfidfVectorizer

from sklearn.feature_extraction.text import TfidfVectorizer

tfidf2 = TfidfVectorizer()

Observe the relationship between messages and vectors

Map a vector back to its message

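tfidf2 = TfidfVectorizer()
X_train = tfidf2.fit_transform(x_train)  # learn the vocabulary and IDF weights on the training set only
X_test = tfidf2.transform(x_test)        # reuse the fitted vocabulary for the test set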
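To look at how a message relates to its vector, and how to go back from a vector to the words of a message, one option is to use the fitted vectorizer's `vocabulary_` attribute and its `inverse_transform` method. The sketch below uses three made-up messages (`docs` is an assumption, not data from the post):

```python
from sklearn.feature_extraction.text import TfidfVectorizer

docs = ["free prize call now", "call me later", "win free cash prize"]  # made-up messages
vec = TfidfVectorizer()
X = vec.fit_transform(docs)  # sparse matrix: one row per message, one column per term

# vocabulary_ maps each term to its column index; invert it for lookups
index_to_term = {idx: term for term, idx in vec.vocabulary_.items()}

# The nonzero columns of row 0 are the terms that occur in the first message
terms_row0 = sorted(index_to_term[j] for j in X[0].nonzero()[1])
print(terms_row0)

# inverse_transform returns, for each row, the terms with a nonzero weight.
# Word order and repeated words cannot be recovered from the vector.
recovered = [sorted(str(t) for t in terms) for terms in vec.inverse_transform(X)]
print(recovered)
```

The recovered result is a bag of terms rather than the original sentence, since the vector stores weights per term and nothing about position.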

4.模型选择

from sklearn.naive_bayes import GaussianNB

from sklearn.naive_bayes import MultinomialNB

Explain why this model was chosen.

mnb = MultinomialNB()

mnb.fit(X_train, y_train)

y_mnb = mnb.predict(X_test)

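MultinomialNB was chosen because the multinomial model suits discrete features such as word counts, whereas GaussianNB assumes continuous features that follow a normal distribution. TF-IDF weights are fractional rather than integer counts, but the scikit-learn documentation notes that they also work with MultinomialNB in practice.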

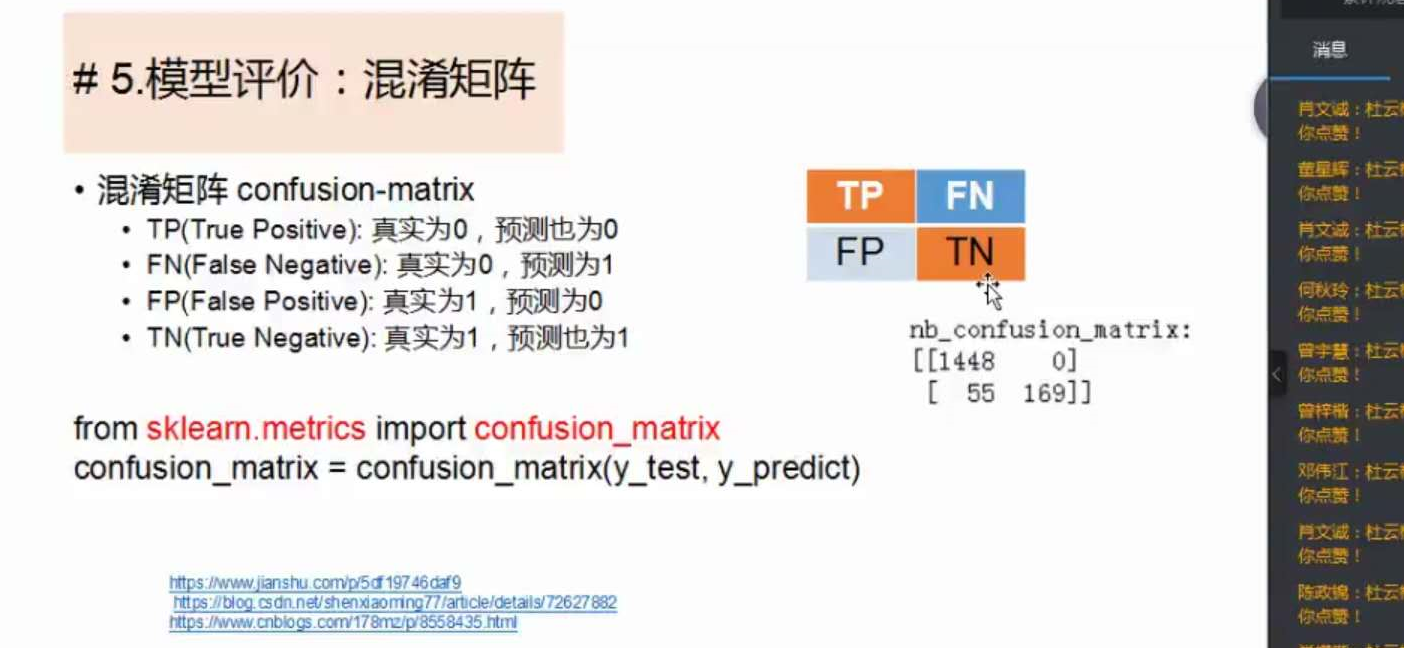

5. Model evaluation: confusion matrix and classification report

from sklearn.metrics import confusion_matrix

cm = confusion_matrix(y_test, y_predict)

Explain the meaning of the confusion matrix.

from sklearn.metrics import classification_report

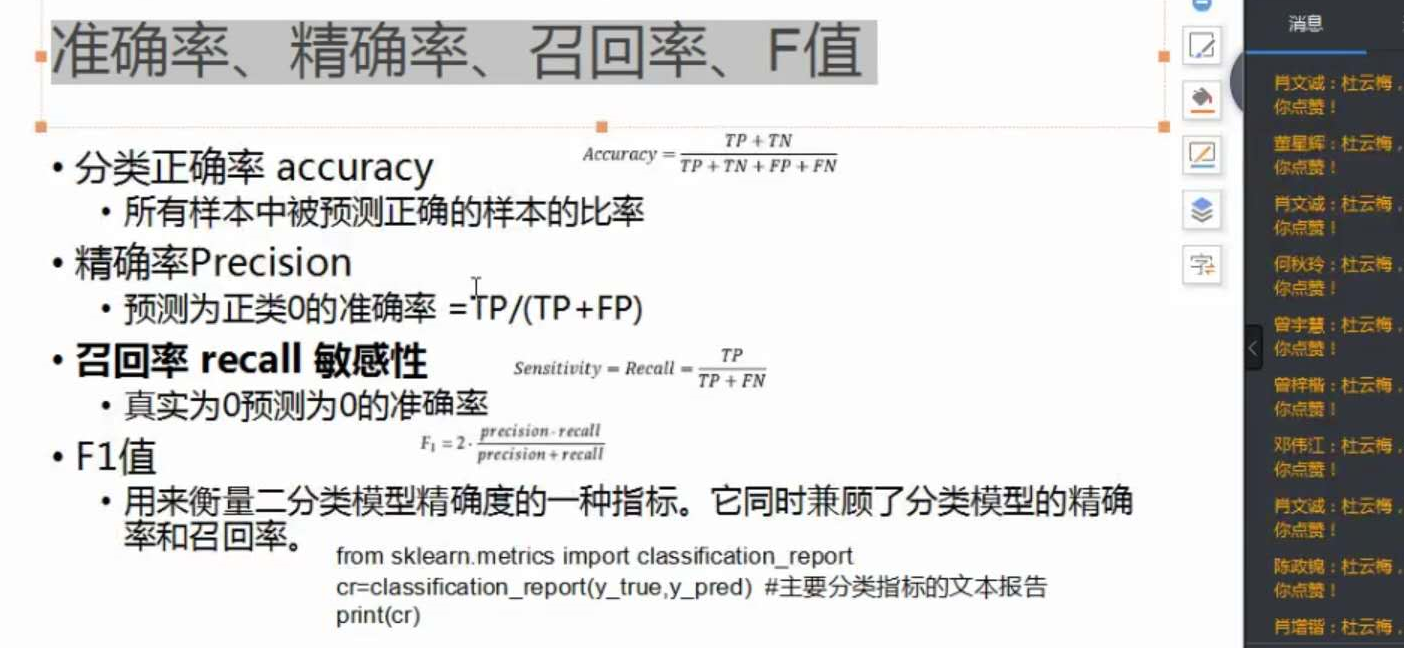

Explain what accuracy, precision, recall, and the F-score each represent.

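import numpy as np

cm = confusion_matrix(y_test, y_mnb)
print("Confusion matrix:\n", cm)
cr = classification_report(y_test, y_mnb)
print("Classification report:\n", cr)
# Correct predictions on the diagonal divided by all predictions is the accuracy
print("Accuracy:", (cm[0][0] + cm[1][1]) / np.sum(cm))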
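In scikit-learn's confusion matrix, rows are the true classes and columns are the predicted classes, with labels in sorted order, so for this dataset index 0 is `ham` and index 1 is `spam`. The four metrics can then be read off the matrix directly. The sketch below is a hand-rolled illustration, not the code used in the post; `binary_metrics` is a hypothetical helper and `example_cm` holds made-up counts:

```python
def binary_metrics(cm):
    """Compute accuracy, precision, recall and F1 from a 2x2 confusion matrix.

    Assumes scikit-learn's layout (rows = true class, columns = predicted
    class) and treats index 1 as the positive class.
    """
    tn, fp = cm[0]  # true class 0: predicted 0, predicted 1
    fn, tp = cm[1]  # true class 1: predicted 0, predicted 1
    total = tn + fp + fn + tp
    accuracy = (tp + tn) / total                       # share of all predictions that are correct
    precision = tp / (tp + fp) if (tp + fp) else 0.0   # of predicted positives, how many are right
    recall = tp / (tp + fn) if (tp + fn) else 0.0      # of actual positives, how many are found
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)            # harmonic mean of precision and recall
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}


example_cm = [[960, 5], [20, 130]]  # made-up counts, not results from this post
metrics = binary_metrics(example_cm)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

For a spam filter, precision answers "how many flagged messages were really spam", while recall answers "how much of the spam was caught".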

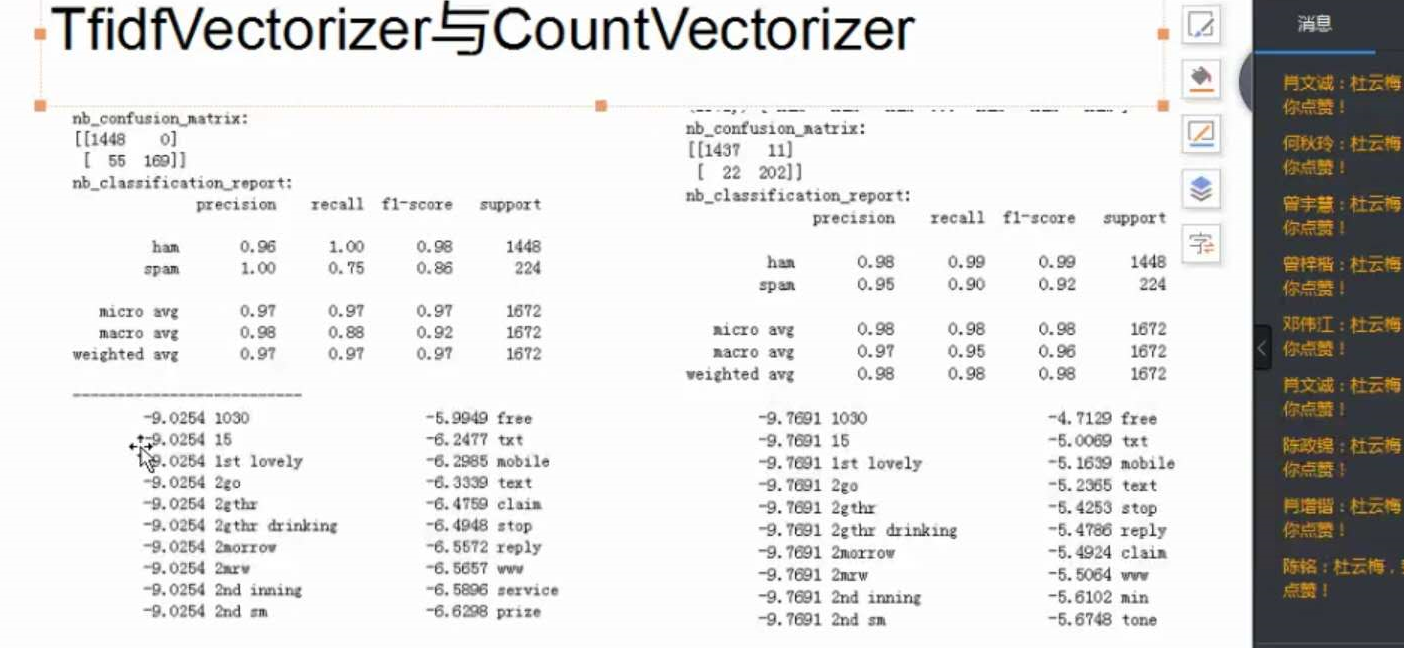

6. Comparison and summary

If CountVectorizer is used to generate the text features instead of TfidfVectorizer, how do the results compare?
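The post does not report the outcome of this comparison. One way to run it is to train the same MultinomialNB model on both feature sets and compare the test accuracy. The sketch below assumes a hypothetical helper, `compare_vectorizers`, and uses a tiny made-up dataset only to show the call; the scores it prints say nothing about the real SMS data:

```python
from sklearn.feature_extraction.text import CountVectorizer, TfidfVectorizer
from sklearn.metrics import accuracy_score
from sklearn.naive_bayes import MultinomialNB


def compare_vectorizers(x_train, x_test, y_train, y_test):
    """Train MultinomialNB on count and TF-IDF features; return test accuracy for each."""
    scores = {}
    for name, vectorizer in [("count", CountVectorizer()),
                             ("tfidf", TfidfVectorizer())]:
        X_train = vectorizer.fit_transform(x_train)  # fit the vocabulary on training text only
        X_test = vectorizer.transform(x_test)
        model = MultinomialNB().fit(X_train, y_train)
        scores[name] = accuracy_score(y_test, model.predict(X_test))
    return scores


# Made-up messages, only to demonstrate the call
toy_train = ["win free cash now", "free prize claim now",
             "see you at lunch", "call me after class"]
toy_train_labels = ["spam", "spam", "ham", "ham"]
toy_test = ["claim your free cash", "lunch after class"]
toy_test_labels = ["spam", "ham"]

scores = compare_vectorizers(toy_train, toy_test, toy_train_labels, toy_test_labels)
print(scores)
```

On the real data, the same function can be called with `x_train`, `x_test`, `y_train`, and `y_test` from step 3; comparing the classification reports as well as accuracy gives a fuller picture, since the classes are imbalanced.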
