torch & tensorflow

# torch

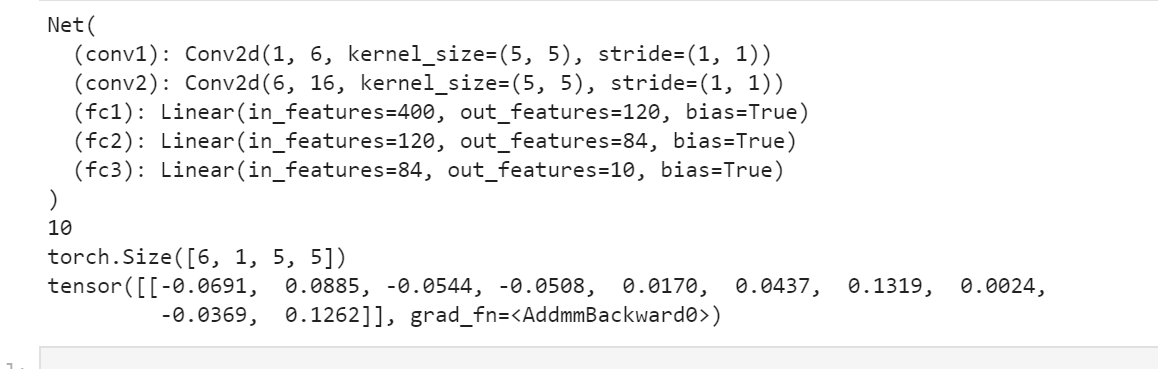

    import torch
    import torch.nn as nn
    import torch.nn.functional as F


    class Net(nn.Module):

        def __init__(self):
            super(Net, self).__init__()
            # 1 input image channel, 6 output channels, 5x5 square convolution kernel
            self.conv1 = nn.Conv2d(1, 6, 5)
            self.conv2 = nn.Conv2d(6, 16, 5)
            # an affine operation: y = Wx + b
            self.fc1 = nn.Linear(16 * 5 * 5, 120)
            self.fc2 = nn.Linear(120, 84)
            self.fc3 = nn.Linear(84, 10)

        def forward(self, x):
            # Max pooling over a (2, 2) window
            x = F.max_pool2d(F.relu(self.conv1(x)), (2, 2))
            # If the window is square, a single number is enough
            x = F.max_pool2d(F.relu(self.conv2(x)), 2)
            # Flatten everything except the batch dimension
            x = x.view(-1, self.num_flat_features(x))
            x = F.relu(self.fc1(x))
            x = F.relu(self.fc2(x))
            x = self.fc3(x)
            return x

        def num_flat_features(self, x):
            size = x.size()[1:]  # all dimensions except the batch dimension
            num_features = 1
            for s in size:
                num_features *= s
            return num_features


    net = Net()
    print(net)

    params = list(net.parameters())
    print(len(params))
    print(params[0].size())  # conv1's .weight

    # One random single-channel 32x32 image (batch size 1)
    input = torch.randn(1, 1, 32, 32)
    out = net(input)
    print(out)
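
The `16 * 5 * 5` input size of `fc1` follows from the 32x32 input used above: each unpadded 5x5 convolution shrinks the side length by 4, and each 2x2 max pooling halves it. Below is a minimal sketch of that arithmetic in plain Python. The helper names `conv_out` and `pool_out` are made up for illustration, and the sketch assumes stride-1 convolutions without padding and non-overlapping pooling, as in the network above.

```python
def conv_out(size, kernel, stride=1, padding=0):
    """Side length after a square convolution (hypothetical helper)."""
    return (size + 2 * padding - kernel) // stride + 1


def pool_out(size, window):
    """Side length after non-overlapping max pooling (stride == window)."""
    return size // window


side = 32                                # input is 1 x 32 x 32
side = pool_out(conv_out(side, 5), 2)    # conv1 -> 28, pool -> 14
side = pool_out(conv_out(side, 5), 2)    # conv2 -> 10, pool -> 5
flat_features = 16 * side * side         # conv2 outputs 16 channels
print(side, flat_features)               # 5 400
```

With a different input resolution the flattened size changes, so `fc1` would need a matching `in_features`.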

# vgg

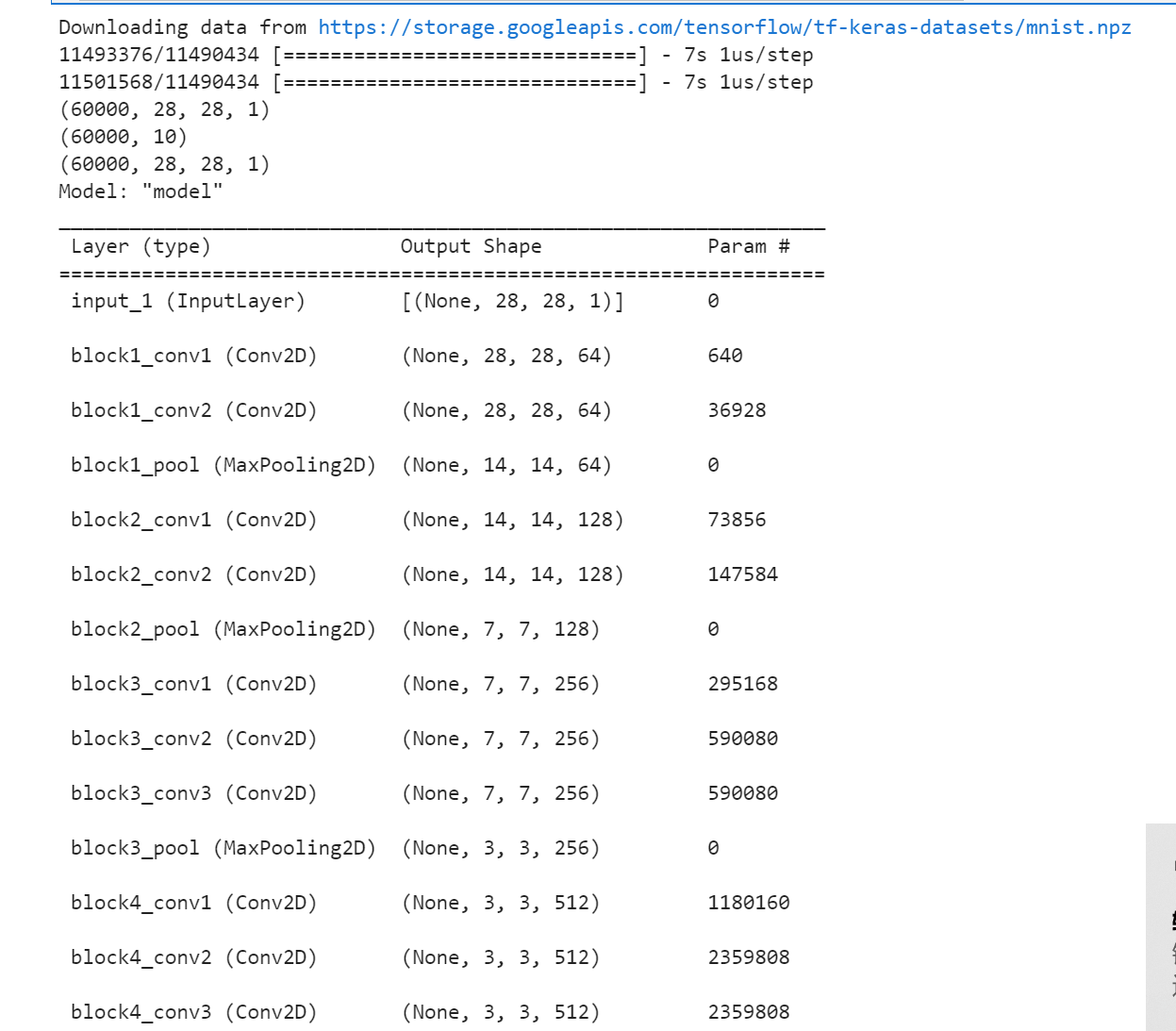

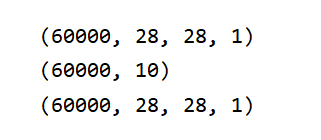

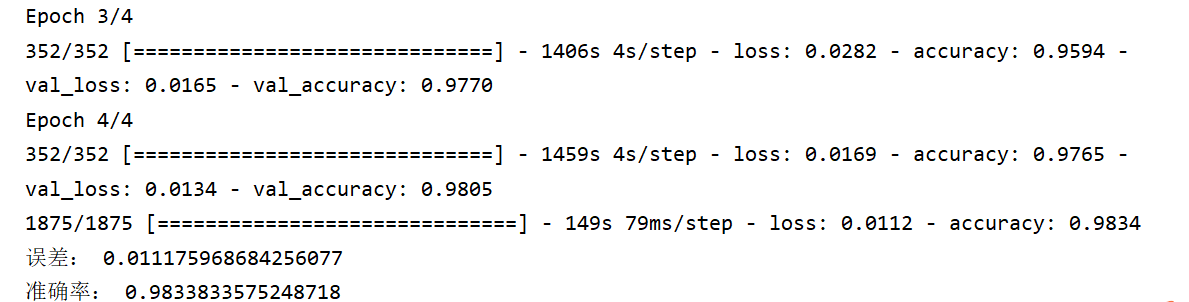

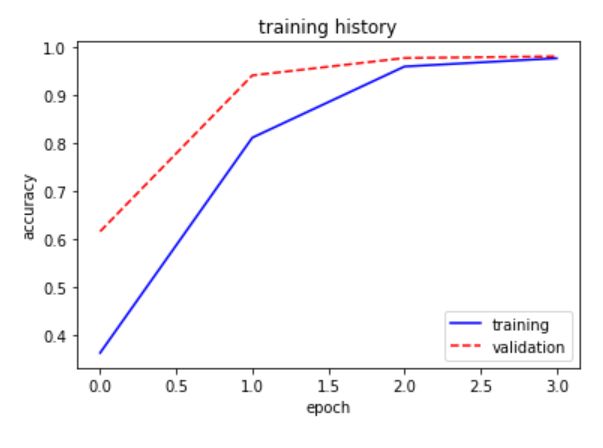

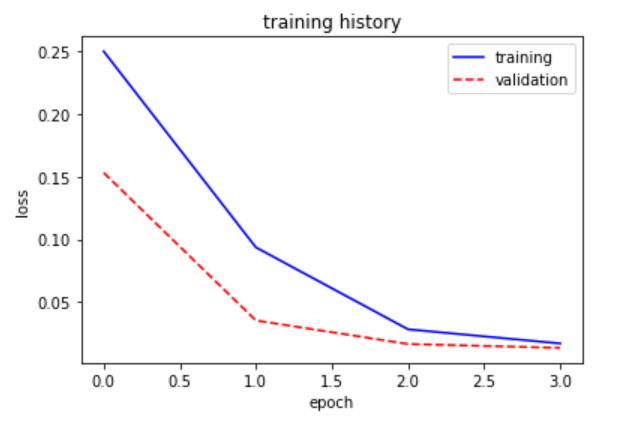

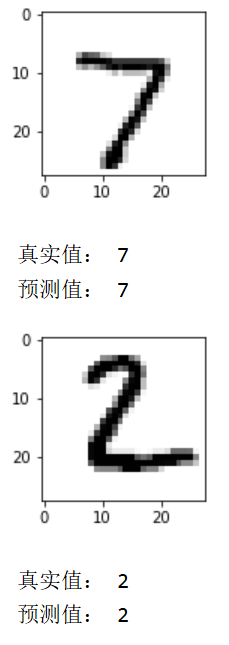

    # Import Model so the network can be built with the functional API
    from tensorflow.keras import Model
    from tensorflow.keras.layers import Flatten, Dense, Dropout, MaxPooling2D, Conv2D, Input
    from tensorflow.keras.optimizers import RMSprop  # optimizer
    from tensorflow.keras.datasets import mnist
    from tensorflow.keras.utils import to_categorical
    import matplotlib.pyplot as plt
    import numpy as np

    # Data preparation
    (X_train, Y_train), (X_test, Y_test) = mnist.load_data()
    # Keep the raw test images and integer labels for displaying predictions later
    X_test1 = X_test
    Y_test1 = Y_test
    X_train = X_train.reshape(-1, 28, 28, 1).astype("float32") / 255.0
    X_test = X_test.reshape(-1, 28, 28, 1).astype("float32") / 255.0
    Y_train = to_categorical(Y_train, 10)
    Y_test = to_categorical(Y_test, 10)
    print(X_train.shape)
    print(Y_train.shape)
    print(X_test.shape)


    def vgg16():
        x_input = Input((28, 28, 1))  # input shape: 28 x 28 x 1
        # Block 1
        x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv1')(x_input)
        x = Conv2D(64, (3, 3), activation='relu', padding='same', name='block1_conv2')(x)
        x = MaxPooling2D((2, 2), strides=(2, 2), name='block1_pool')(x)
        # Block 2
        x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv1')(x)
        x = Conv2D(128, (3, 3), activation='relu', padding='same', name='block2_conv2')(x)
        x = MaxPooling2D((2, 2), strides=(2, 2), name='block2_pool')(x)
        # Block 3
        x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv1')(x)
        x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv2')(x)
        x = Conv2D(256, (3, 3), activation='relu', padding='same', name='block3_conv3')(x)
        x = MaxPooling2D((2, 2), strides=(2, 2), name='block3_pool')(x)
        # Block 4
        x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv1')(x)
        x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv2')(x)
        x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block4_conv3')(x)
        x = MaxPooling2D((2, 2), strides=(2, 2), name='block4_pool')(x)
        # Block 5 (no pooling: a 28x28 input is already 1x1 after four poolings)
        x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv1')(x)
        x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv2')(x)
        x = Conv2D(512, (3, 3), activation='relu', padding='same', name='block5_conv3')(x)
        # Block 6: fully connected classifier
        x = Flatten()(x)
        x = Dense(256, activation="relu")(x)
        x = Dropout(0.5)(x)
        x = Dense(256, activation="relu")(x)
        x = Dropout(0.5)(x)
        # Final layer, i.e. the output layer
        x = Dense(10, activation="softmax")(x)
        # Build the Model with x_input as its input and the fully connected layer x as its output
        model = Model(inputs=x_input, outputs=x)
        # Print the model summary
        model.summary()
        return model


    model = vgg16()
    optimizer = RMSprop(learning_rate=1e-4)
    # One-hot labels over 10 classes with a softmax output call for categorical cross-entropy
    model.compile(loss="categorical_crossentropy", optimizer=optimizer, metrics=["accuracy"])

    # Train and evaluate the model
    n_epoch = 4
    batch_size = 128


    def run_model():
        # Train the model, holding out 25% of the training data for validation
        training = model.fit(
            X_train,
            Y_train,
            batch_size=batch_size,
            epochs=n_epoch,
            validation_split=0.25,
            verbose=1
        )
        # Evaluate on the test set rather than on the data the model was trained on
        test = model.evaluate(X_test, Y_test, verbose=1)
        return training, test


    training, test = run_model()
    print("loss:", test[0])
    print("accuracy:", test[1])


    def show_train(training_history, train, validation, ylabel, legend_loc):
        # Plot one training metric against its validation counterpart
        plt.plot(training_history.history[train], linestyle="-", color="b")
        plt.plot(training_history.history[validation], linestyle="--", color="r")
        plt.title("training history")
        plt.xlabel("epoch")
        plt.ylabel(ylabel)
        plt.legend(["training", "validation"], loc=legend_loc)
        plt.show()


    show_train(training, "accuracy", "val_accuracy", "accuracy", "lower right")
    show_train(training, "loss", "val_loss", "loss", "upper right")

    prediction = model.predict(X_test)


    def image_show(image):
        fig = plt.gcf()  # get the current figure
        fig.set_size_inches(2, 2)  # change the figure size
        plt.imshow(image, cmap="binary")  # display the image
        plt.show()


    def result(i):
        image_show(X_test1[i])
        print("true label:", Y_test1[i])
        print("predicted label:", np.argmax(prediction[i]))


    result(0)
    result(1)
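
The network above stops pooling after block 4, unlike the standard VGG16 layout. A likely reason is the small MNIST input: the `padding='same'` convolutions keep the spatial size, while each 2x2, stride-2 pooling roughly halves it, so a 28x28 image is already down to 1x1 after four poolings. The sketch below traces those sizes in plain Python. `pool_out` is a hypothetical helper, and it assumes the default `'valid'` padding of `MaxPooling2D`.

```python
def pool_out(size, window=2, stride=2):
    """Side length after 'valid' max pooling (hypothetical helper)."""
    return (size - window) // stride + 1


side = 28
sizes = [side]
for _ in range(4):              # block1_pool .. block4_pool
    side = pool_out(side)
    sizes.append(side)

flat_features = 512 * side * side   # block 5 outputs 512 channels
print(sizes)                        # [28, 14, 7, 3, 1]
print(flat_features)                # 512
```

So `Flatten()` hands 512 features to the first `Dense(256)` layer, and a fifth 2x2 pooling would have no valid window left to operate on.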

