Sending log4j2 logs to Kafka

logback

See the documentation directly: https://github.com/danielwegener/logback-kafka-appender

    <dependency>
        <groupId>com.github.danielwegener</groupId>
        <artifactId>logback-kafka-appender</artifactId>
        <version>0.2.0</version>
        <scope>runtime</scope>
    </dependency>
    <dependency>
        <groupId>ch.qos.logback</groupId>
        <artifactId>logback-classic</artifactId>
        <version>1.2.3</version>
        <scope>runtime</scope>
    </dependency>

    <configuration>
        <appender name="STDOUT" class="ch.qos.logback.core.ConsoleAppender">
            <encoder>
                <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>
            </encoder>
        </appender>
        <!-- This is the kafkaAppender -->
        <appender name="kafkaAppender" class="com.github.danielwegener.logback.kafka.KafkaAppender">
            <encoder>
                <pattern>%d{HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n</pattern>
            </encoder>
            <topic>logs</topic>
            <keyingStrategy class="com.github.danielwegener.logback.kafka.keying.NoKeyKeyingStrategy" />
            <deliveryStrategy class="com.github.danielwegener.logback.kafka.delivery.AsynchronousDeliveryStrategy" />
            <!-- Optional parameter to use a fixed partition -->
            <!-- <partition>0</partition> -->
            <!-- Optional parameter to include log timestamps into the kafka message -->
            <!-- <appendTimestamp>true</appendTimestamp> -->
            <!-- each <producerConfig> translates to regular kafka-client config (format: key=value) -->
            <!-- producer configs are documented here: https://kafka.apache.org/documentation.html#newproducerconfigs -->
            <!-- bootstrap.servers is the only mandatory producerConfig -->
            <producerConfig>bootstrap.servers=localhost:9092</producerConfig>
            <!-- this is the fallback appender if kafka is not available. -->
            <appender-ref ref="STDOUT" />
        </appender>
        <root level="info">
            <appender-ref ref="kafkaAppender" />
        </root>
    </configuration>

log4j2

    <!-- The Kafka appender needs an extra dependency: https://logging.apache.org/log4j/2.x/runtime-dependencies.html -->
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
        <version>2.7.2</version>
    </dependency>

    <Properties>
        <!-- Log output pattern -->
        <!-- <property name="kafkaPatternLayout" value="%d{yyyy-MM-dd'T'HH:mm:ss.SSS} [%thread] %-5level %logger{36} - %msg%n" /> -->
        <!-- Kafka address of the monitoring and alerting platform -->
        <property name="kafkaServers" value="127.0.0.1:9092" />
    </Properties>
    <appenders>
        <!-- syncSend="true" sends synchronously; set it to "false" for asynchronous sends -->
        <Kafka name="Kafka" topic="xxx" syncSend="true">
            <!-- <PatternLayout pattern="${kafkaPatternLayout}"/> -->
            <!--
                JsonLayout produces JSON output.
                compact="true" puts each message on one line; "false" pretty-prints it.
                Timestamp information is included by default; includeTimeMillis makes it more compact.
                Custom keys and values can be added with KeyValuePair.
            -->
            <JsonLayout compact="true" includeTimeMillis="true">
                <KeyValuePair key="time" value="${date:yyyy-MM-dd'T'HH:mm:ss.SSS}"/>
            </JsonLayout>
            <Property name="bootstrap.servers">${kafkaServers}</Property>
        </Kafka>
    </appenders>
    <loggers>
        <Logger name="org.apache.kafka" level="info" />
        <root level="info">
            <appender-ref ref="Kafka"/>
        </root>
    </loggers>

First set up ELK. Elasticsearch and Kibana have no special requirements; they only need to be reachable.

Logstash needs to be configured to consume messages from Kafka.

    input {
      kafka {
        bootstrap_servers => "kafka_network:9092"
        auto_offset_reset => "latest"
        topics => ["kafka-test"]
        type => "kafka"
      }
    }
    filter {
      if [type] == "kafka" {
        json {
          source => "message"
        }
      }
    }
    output {
      elasticsearch {
        hosts => "elasticsearch_network:9200"
        index => "kafka-%{+YYYY.MM.dd}"
      }
    }
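The `index => "kafka-%{+YYYY.MM.dd}"` setting creates one index per day, named from each event's `@timestamp` in UTC. As a rough illustration of which index a given event ends up in, here is a small Java sketch. The class and method names are hypothetical, and the code only mimics the date pattern; it is not part of Logstash.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

// Hypothetical helper that imitates the daily index naming of the Logstash output above.
class DailyIndexName {
    // Year.month.day in UTC, mirroring the "YYYY.MM.dd" part of the Logstash pattern.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("uuuu.MM.dd").withZone(ZoneOffset.UTC);

    // Returns the index name for an event timestamp given in epoch milliseconds.
    static String indexFor(long epochMillis) {
        return "kafka-" + DAY.format(Instant.ofEpochMilli(epochMillis));
    }

    public static void main(String[] args) {
        // Prints the index that an event logged right now would be written to.
        System.out.println(indexFor(System.currentTimeMillis()));
    }
}
```

Because the date is taken in UTC, an event logged shortly after local midnight can still land in the previous day's index.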

log4j2 configuration

    <Properties>
        <property name="kafkaServers" value="127.0.0.1:9092" />
    </Properties>
    <appenders>
        <!-- Asynchronous send -->
        <Kafka name="Kafka" topic="kafka-test" syncSend="false">
            <!-- JsonTemplateLayout provides more capabilities and should be used instead. -->
            <!-- This file is the ECS example from the official site, with the time changed to an epoch timestamp
                 and the ECS version removed. Adjust the template to your own needs. -->
            <JsonTemplateLayout eventTemplateUri="classpath:EcsLayout.json"/>
            <Property name="bootstrap.servers">${kafkaServers}</Property>
        </Kafka>
    </appenders>
    <loggers>
        <Logger name="org.apache.kafka" level="info" />
        <root level="info">
            <appender-ref ref="Kafka"/>
        </root>
    </loggers>

Dependencies

    <log4j.version>2.17.0</log4j.version>
    <kafka-client.version>2.7.2</kafka-client.version>

    <!-- The Kafka appender needs an extra dependency: https://logging.apache.org/log4j/2.x/runtime-dependencies.html -->
    <dependency>
        <groupId>org.apache.kafka</groupId>
        <artifactId>kafka-clients</artifactId>
    </dependency>
    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-layout-template-json</artifactId>
    </dependency>

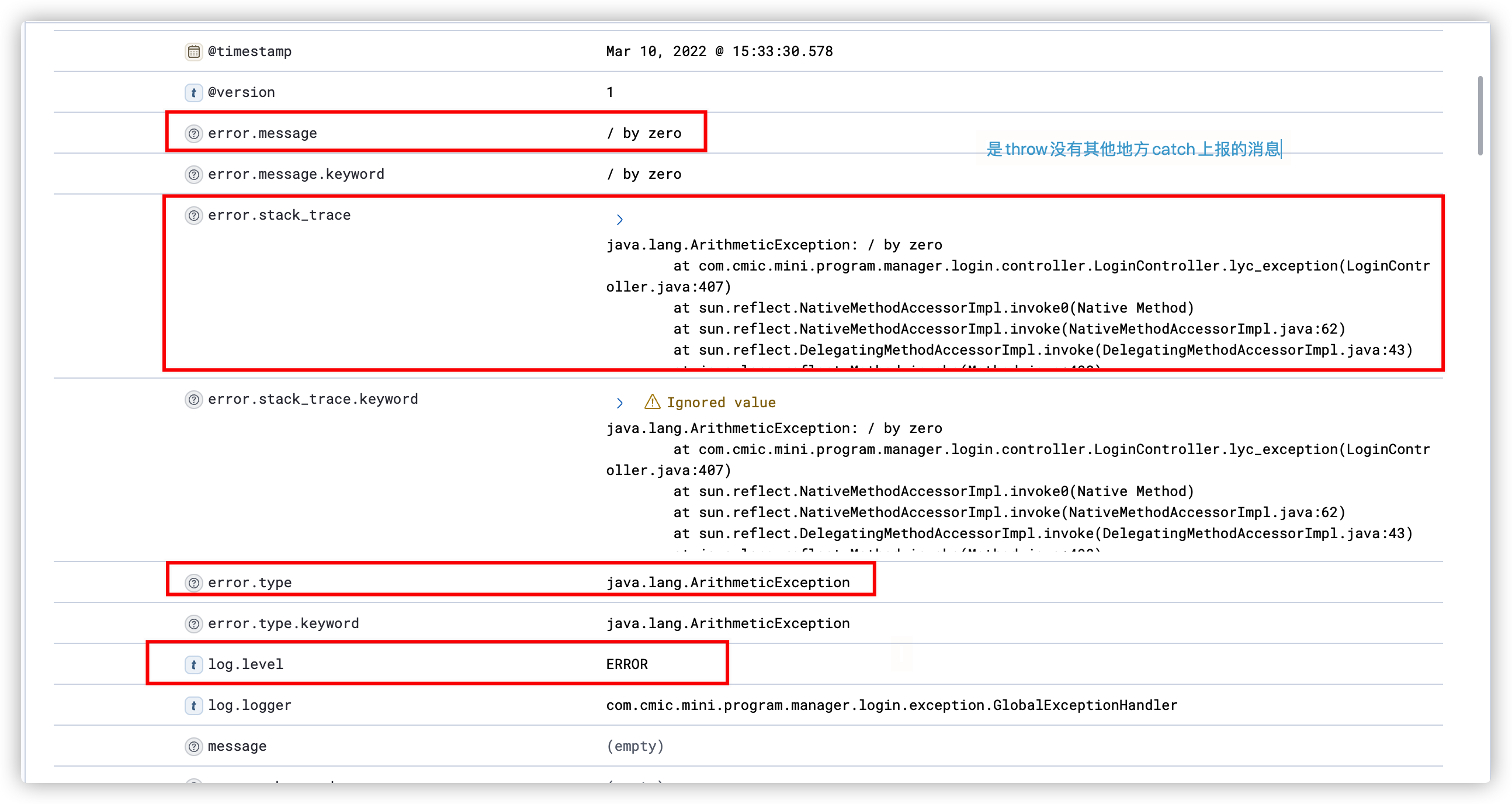

Create an EcsLayout.json file in the resources directory.

    {
      "timestamp": {
        "$resolver": "timestamp",
        "epoch": {
          "unit": "millis",
          "rounded": true
        }
      },
      "log.level": {
        "$resolver": "level",
        "field": "name"
      },
      "log.logger": {
        "$resolver": "logger",
        "field": "name"
      },
      "message": {
        "$resolver": "message",
        "stringified": true,
        "field": "message"
      },
      "process.thread.name": {
        "$resolver": "thread",
        "field": "name"
      },
      "labels": {
        "$resolver": "mdc",
        "flatten": true,
        "stringified": true
      },
      "tags": {
        "$resolver": "ndc"
      },
      "error.type": {
        "$resolver": "exception",
        "field": "className"
      },
      "error.message": {
        "$resolver": "exception",
        "field": "message"
      },
      "error.stack_trace": {
        "$resolver": "exception",
        "field": "stackTrace",
        "stackTrace": {
          "stringified": true
        }
      }
    }

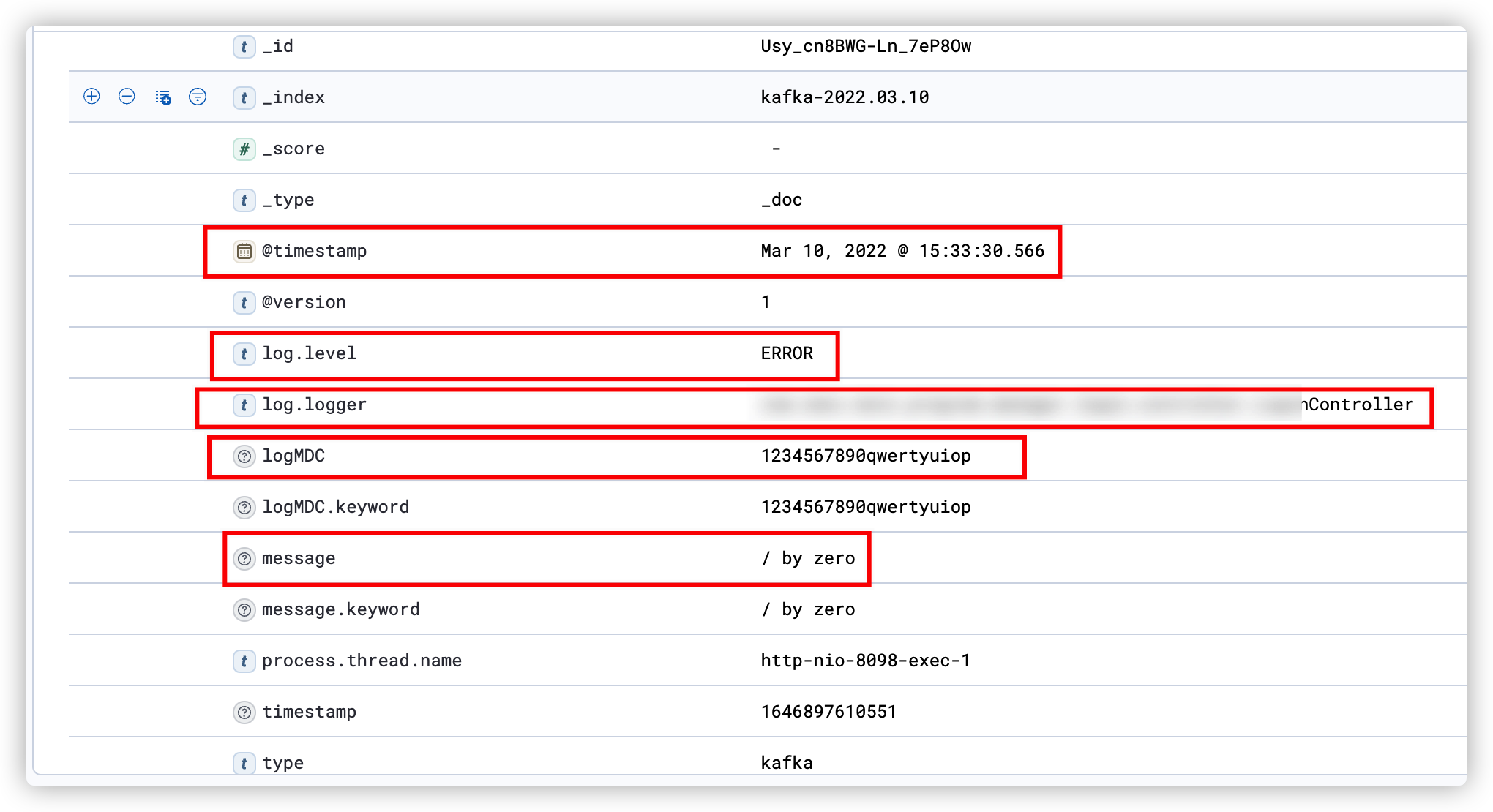

Write a test method.

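    // Assumes a Spring MVC controller with an SLF4J logger named log and org.slf4j.MDC.
    @GetMapping(value = "/lyc_exception")
    public void lyc_exception() {
        try {
            // The MDC entry is picked up by the mdc resolver ("labels" in the template)
            MDC.put("logMDC", "1234567890qwertyuiop");
            log.info("sdasdad");
            int i = 1 / 0; // throws ArithmeticException
        } catch (Exception e) {
            // Pass the exception too, so the error.* fields of the template are filled in
            log.error(e.getMessage(), e);
        } finally {
            MDC.remove("logMDC");
        }
    }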
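The `error.type`, `error.message` and `error.stack_trace` entries of the template are resolved from the exception attached to the log event, so they stay empty when only a message string is logged. To give an idea of the values the test method's exception would yield, here is a plain-Java sketch that needs no logging library. The class and method names are hypothetical, and the stack trace string is produced with `printStackTrace`, which only approximates what the layout writes.

```java
import java.io.PrintWriter;
import java.io.StringWriter;

// Hypothetical sketch: shows the raw values behind error.type, error.message and error.stack_trace.
class ErrorFieldsSketch {
    // Renders a stack trace as a single string, similar in spirit to a "stringified" stack trace.
    static String stackTraceOf(Throwable t) {
        StringWriter sw = new StringWriter();
        t.printStackTrace(new PrintWriter(sw, true));
        return sw.toString();
    }

    // Reproduces the failure of the test method and returns the caught exception.
    static Throwable divideByZero() {
        try {
            int zero = 0;
            int i = 1 / zero; // integer division by zero throws ArithmeticException
            return null;      // never reached at runtime
        } catch (ArithmeticException e) {
            return e;
        }
    }

    public static void main(String[] args) {
        Throwable t = divideByZero();
        System.out.println("error.type        = " + t.getClass().getName());
        System.out.println("error.message     = " + t.getMessage());
        System.out.println("error.stack_trace = " + stackTraceOf(t));
    }
}
```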

Now it can be run. The result:

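This is only a simplified version. In practice you usually also need per-environment configuration and a failover file for when Kafka is unavailable.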

`<SpringProfile>` requires this dependency (v2.17.0):

    <dependency>
        <groupId>org.apache.logging.log4j</groupId>
        <artifactId>log4j-spring-boot</artifactId>
        <version>${log4j.version}</version>
    </dependency>

If you use AsyncLogger, this dependency is required as well:

    <dependency>
        <groupId>com.lmax</groupId>
        <artifactId>disruptor</artifactId>
        <version>3.4.2</version>
    </dependency>

------------------------------------------

    <appenders>
        <!-- Wrap anything that depends on the environment in a SpringProfile tag -->
        <SpringProfile name="prod">
            <!-- Do not set syncSend="false" here -->
            <Kafka name="Kafka" topic="card-mini-program-manager" ignoreExceptions="false">
                <!-- JsonTemplateLayout provides more capabilities and should be used instead. -->
                <JsonTemplateLayout eventTemplateUri="classpath:EcsLayout.json"/>
                <Property name="bootstrap.servers">${kafkaServers}</Property>
                <Property name="max.block.ms">2000</Property>
                <Property name="timeout.ms">3000</Property>
            </Kafka>
            <RollingFile name="FailoverKafkaLog" fileName="${FILE_PATH}/${KAFKA_FAILOVER_FILE_NAME}.log"
                         filePattern="${FILE_PATH}/${KAFKA_FAILOVER_FILE_NAME}-%d{yyyy-MM-dd}">
                <ThresholdFilter level="INFO" onMatch="ACCEPT" onMismatch="DENY"/>
                <JsonTemplateLayout eventTemplateUri="classpath:EcsLayout.json"/>
                <Policies>
                    <TimeBasedTriggeringPolicy interval="1" />
                </Policies>
                <DefaultRolloverStrategy max="15"/>
            </RollingFile>
            <!-- After a failure the fallback is used for 60 seconds; then the primary is retried -->
            <Failover name="FailoverKafka" primary="Kafka" retryIntervalSeconds="60">
                <Failovers>
                    <AppenderRef ref="FailoverKafkaLog"/>
                </Failovers>
            </Failover>
            <!-- Get asynchrony from AsyncLogger or AsyncAppender instead: with syncSend="false" the send
                 exception is caught inside the Kafka appender, so Failover sees no exception and never
                 switches to the fallback. -->
            <Async name="AsyncKafka">
                <AppenderRef ref="FailoverKafka"/>
            </Async>
        </SpringProfile>
    </appenders>
    <loggers>
        <root level="info">
            <SpringProfile name="prod">
                <appender-ref ref="AsyncKafka"/>
            </SpringProfile>
        </root>
    </loggers>
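The interplay between the primary appender, the fallback and `retryIntervalSeconds` can be modelled in a few lines of plain Java. This is a simplified sketch of the behaviour described in the configuration comments, not log4j2's actual implementation; the class name, the `Consumer`-based appenders and the explicit clock argument are all assumptions made for illustration.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.Consumer;

// Hypothetical, simplified model of a failover appender with a retry interval.
class FailoverSketch {
    private final Consumer<String> primary;   // stands in for the Kafka appender
    private final Consumer<String> fallback;  // stands in for the rolling file appender
    private final long retryIntervalMillis;
    private long nextRetryAt = 0L;            // earliest time the primary may be tried again

    FailoverSketch(Consumer<String> primary, Consumer<String> fallback, long retryIntervalMillis) {
        this.primary = primary;
        this.fallback = fallback;
        this.retryIntervalMillis = retryIntervalMillis;
    }

    // Routes one event; nowMillis is passed in so the behaviour is easy to follow and test.
    void append(String event, long nowMillis) {
        if (nowMillis >= nextRetryAt) {
            try {
                primary.accept(event);
                nextRetryAt = 0L; // primary works, keep using it
                return;
            } catch (RuntimeException e) {
                // The failure is only visible here because the primary lets it propagate.
                nextRetryAt = nowMillis + retryIntervalMillis;
            }
        }
        fallback.accept(event);
    }

    public static void main(String[] args) {
        List<String> sentToKafka = new ArrayList<>();
        List<String> writtenToFile = new ArrayList<>();
        boolean[] kafkaUp = {false};
        FailoverSketch failover = new FailoverSketch(
                event -> {
                    if (!kafkaUp[0]) throw new IllegalStateException("Kafka unavailable");
                    sentToKafka.add(event);
                },
                writtenToFile::add,
                60_000L);

        failover.append("first", 0L);       // primary fails, goes to the file
        kafkaUp[0] = true;
        failover.append("second", 30_000L); // still inside the retry window, goes to the file
        failover.append("third", 60_000L);  // window over, primary is retried and succeeds
        System.out.println("kafka=" + sentToKafka + " file=" + writtenToFile);
    }
}
```

The model also shows why the primary must not swallow its own errors: if `primary.accept` never throws, the `catch` branch is never reached and the fallback is never used.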

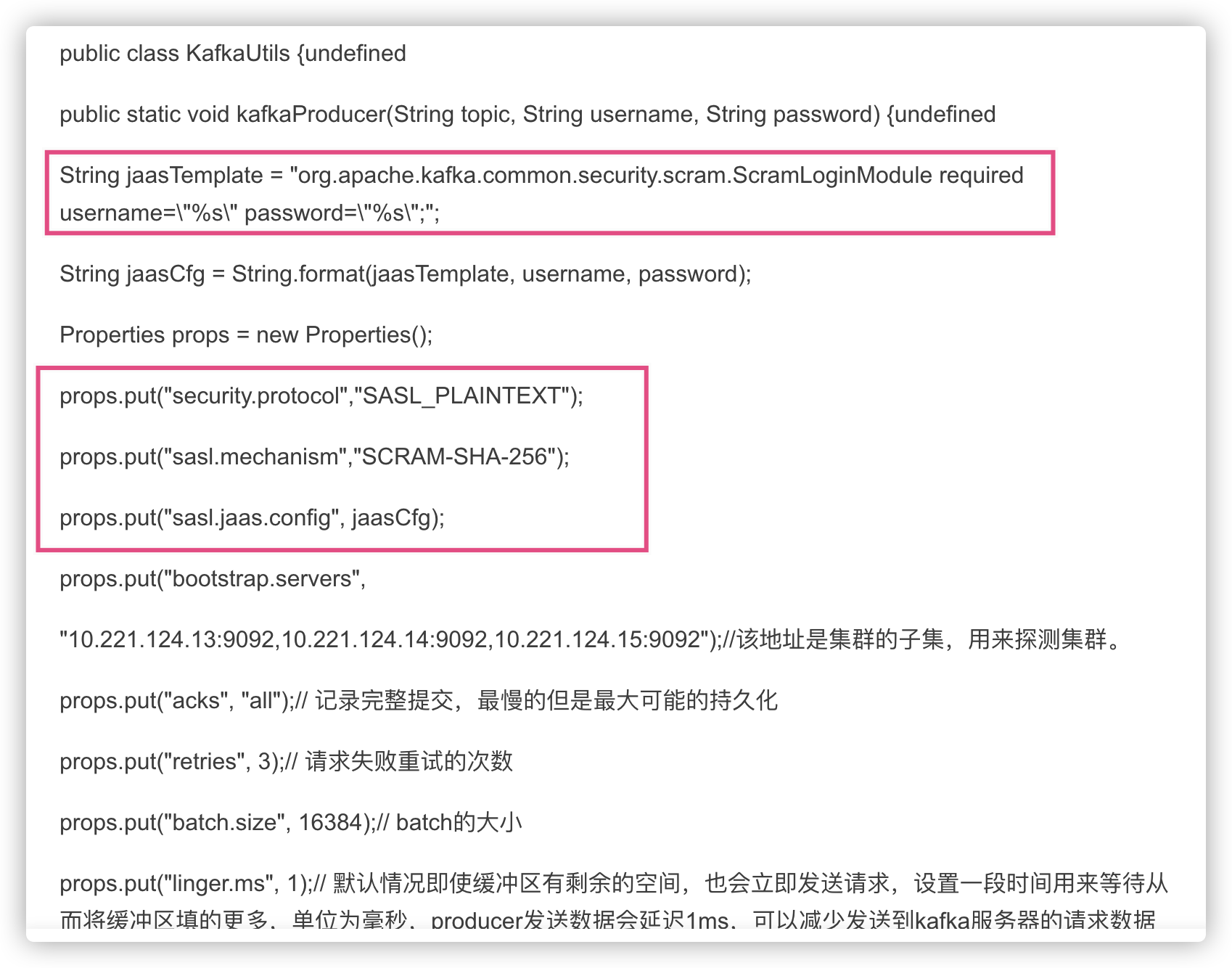

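If Kafka is configured with a username and password, the setup probably looks like the following. I have not tested this; it is a guess based on material found online.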

The guess is to add these properties (note that the `sasl.jaas.config` value must end with a semicolon):

    <Property name="security.protocol">SASL_PLAINTEXT</Property>
    <Property name="sasl.mechanism">SCRAM-SHA-256</Property>
    <Property name="sasl.jaas.config">org.apache.kafka.common.security.scram.ScramLoginModule required username="xxx" password="yyy";</Property>
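To make the shape of these three settings easier to check, here is a small Java sketch that assembles the same key/value pairs with `java.util.Properties`. Like the configuration above it is untested against a real broker; the class and method names are hypothetical, the credentials are placeholders, and the helper does not escape quotes inside the username or password.

```java
import java.util.Properties;

// Hypothetical helper that builds the SASL/SCRAM client settings as plain key/value pairs.
class SaslPropsSketch {
    // Builds the sasl.jaas.config value; the JAAS entry has to end with a semicolon.
    static String scramJaasConfig(String username, String password) {
        return "org.apache.kafka.common.security.scram.ScramLoginModule required"
                + " username=\"" + username + "\""
                + " password=\"" + password + "\";";
    }

    // Collects the same properties that the <Property> elements pass to the Kafka producer.
    static Properties saslProperties(String bootstrapServers, String username, String password) {
        Properties props = new Properties();
        props.setProperty("bootstrap.servers", bootstrapServers);
        props.setProperty("security.protocol", "SASL_PLAINTEXT");
        props.setProperty("sasl.mechanism", "SCRAM-SHA-256");
        props.setProperty("sasl.jaas.config", scramJaasConfig(username, password));
        return props;
    }

    public static void main(String[] args) {
        // Placeholder address and credentials, matching the ones used in the XML.
        Properties props = saslProperties("127.0.0.1:9092", "xxx", "yyy");
        props.forEach((key, value) -> System.out.println(key + "=" + value));
    }
}
```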

