《数据挖掘导论》研读(3)

数据库中的知识发现

一、知识发现的基本过程

KDD过程

1.经典KDD处理模型又称阶梯处理模型,步骤:

- 数据准备:了解领域情况,熟悉相关背景知识,确定用户要求;

- 数据选择:根据用户的要求从数据库中提取与KDD相关的数据,KDD将主要从这些数据中进行数据提取;

- 数据预处理:对从数据库中提取的数据进行加工,检查数据的完整性及数据的一致性,对其中的噪声数据,缺失数据进行处理;

- 数据缩减:对经过预处理的数据,进行再处理,通过投影或数据中的其他操作减少数据量;

- 确定KDD目标,根据用户的要求,确定KDD是发现何种类型的知识;

- 确定知识发现算法;

- 数据发掘;

- 模式解释;

- 知识评价。

2.CRISP-DM过程模型

3.联机KDD模型OLAM

知识发现软件

- 独立的知识发现软件

- 横向的知识发现软件

- 纵向的知识发现软件

KDD参与者

业务分析人员、数据分析人员、数据管理人员

二、KDD过程模型的应用

- 商业理解

- 任务——确定商业目标

- 任务——评估形势

- 任务——确定KDD目标

- 任务——制定项目计划

- 数据理解

- 收集和描述数据

- 探查数据

- 数据准备

- 抽取数据:如果数据来源多样,需要抽取合并为一个数据集,学会选择属性和实例,来让数据挖掘的质量提高

- 清洗数据:处理噪声数据(重复、错误)可使用数据平滑技术或删除孤立点,补充缺失数据

- 变换数据:一方面,可将分类数据变化为等价的数值数据,另一方面,一些数据挖掘技术不能处理的某些初始格式的数值数据

- 建模

- 选择建模技术

- 检验设计

- 建模和估计

- 评估

- 评估结果

- 回顾和确定下一步方案

- 部署和采取行动

三、实验:KDD案例

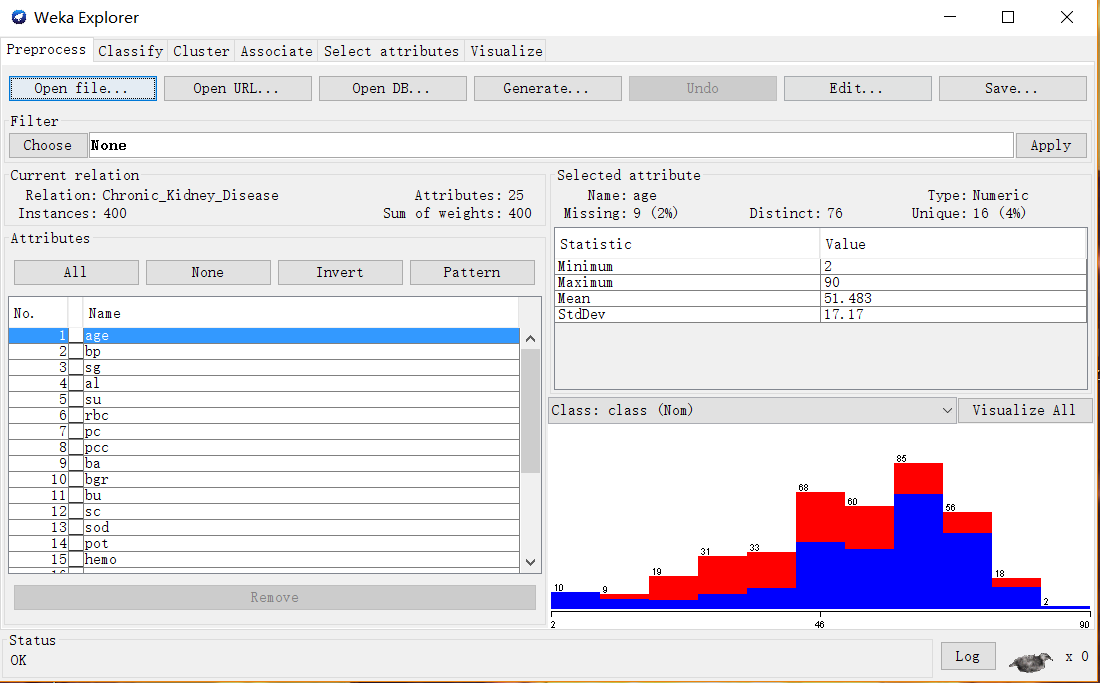

数据来源为UCI数据集中的疾病信息,目标就是查看一下里面的数据,建立用户信用筛选分类模型并分析该模型。

使用Weka加载数据集,

选择C4.5算法,将test options设置为Percentage split,使用默认百分比,输出为class,选中Output predictions,以显示在检验集上的预测结果。

=== Run information === Scheme: weka.classifiers.trees.J48 -C 0.25 -M 2 Relation: Chronic_Kidney_Disease Instances: 400 Attributes: 25 age bp sg al su rbc pc pcc ba bgr bu sc sod pot hemo pcv wbcc rbcc htn dm cad appet pe ane class Test mode: split 66.0% train, remainder test === Classifier model (full training set) === J48 pruned tree ------------------ sc <= 1.2 | pe = yes: ckd (15.57/0.08) | pe = no | | dm = yes: ckd (12.63/0.14) | | dm = no | | | hemo <= 12.9: ckd (16.02/0.56) | | | hemo > 12.9 | | | | sg = 1.005: notckd (0.0) | | | | sg = 1.010: ckd (4.4/0.14) | | | | sg = 1.015: ckd (2.91/0.09) | | | | sg = 1.020: notckd (76.27/0.45) | | | | sg = 1.025: notckd (70.64) sc > 1.2: ckd (201.57/2.52) Number of Leaves : 9 Size of the tree : 14 Time taken to build model: 0.03 seconds === Predictions on test split === inst# actual predicted error prediction 1 1:ckd 1:ckd 0.992 2 1:ckd 1:ckd 0.992 3 1:ckd 1:ckd 0.529 4 2:notckd 2:notckd 1 5 2:notckd 2:notckd 1 6 2:notckd 2:notckd 1 7 1:ckd 1:ckd 0.992 8 1:ckd 1:ckd 0.957 9 2:notckd 2:notckd 1 10 1:ckd 1:ckd 0.992 11 1:ckd 1:ckd 0.992 12 1:ckd 1:ckd 0.992 13 1:ckd 1:ckd 0.992 14 1:ckd 1:ckd 0.992 15 1:ckd 1:ckd 0.992 16 1:ckd 1:ckd 0.992 17 1:ckd 1:ckd 0.992 18 1:ckd 1:ckd 0.992 19 1:ckd 1:ckd 1 20 1:ckd 1:ckd 0.992 21 1:ckd 1:ckd 0.992 22 1:ckd 1:ckd 0.992 23 2:notckd 2:notckd 1 24 1:ckd 1:ckd 0.97 25 2:notckd 2:notckd 1 26 2:notckd 2:notckd 1 27 1:ckd 1:ckd 0.996 28 2:notckd 2:notckd 0.93 29 2:notckd 2:notckd 1 30 1:ckd 1:ckd 0.992 31 2:notckd 2:notckd 1 32 1:ckd 1:ckd 0.992 33 1:ckd 1:ckd 0.992 34 2:notckd 2:notckd 1 35 1:ckd 1:ckd 0.992 36 1:ckd 1:ckd 0.992 37 2:notckd 2:notckd 0.519 38 1:ckd 1:ckd 0.992 39 2:notckd 2:notckd 1 40 1:ckd 1:ckd 0.992 41 2:notckd 2:notckd 1 42 1:ckd 1:ckd 0.992 43 1:ckd 1:ckd 0.992 44 1:ckd 1:ckd 0.992 45 2:notckd 2:notckd 1 46 2:notckd 2:notckd 1 47 2:notckd 2:notckd 1 48 2:notckd 2:notckd 0.96 49 1:ckd 1:ckd 0.992 50 1:ckd 1:ckd 0.992 51 2:notckd 2:notckd 1 52 1:ckd 1:ckd 0.992 53 1:ckd 1:ckd 0.992 54 1:ckd 1:ckd 0.992 55 1:ckd 1:ckd 0.957 56 2:notckd 2:notckd 1 57 1:ckd 1:ckd 0.971 58 2:notckd 2:notckd 0.917 59 1:ckd 1:ckd 0.992 60 1:ckd 1:ckd 0.992 61 1:ckd 1:ckd 0.992 62 1:ckd 1:ckd 0.992 63 1:ckd 1:ckd 0.992 64 1:ckd 1:ckd 0.992 65 1:ckd 1:ckd 0.992 66 1:ckd 1:ckd 0.992 67 1:ckd 1:ckd 0.992 68 1:ckd 1:ckd 0.992 69 1:ckd 1:ckd 0.992 70 2:notckd 2:notckd 0.96 71 1:ckd 1:ckd 0.992 72 1:ckd 1:ckd 0.992 73 1:ckd 1:ckd 0.992 74 2:notckd 2:notckd 1 75 1:ckd 1:ckd 0.992 76 2:notckd 2:notckd 1 77 2:notckd 2:notckd 1 78 1:ckd 1:ckd 0.957 79 2:notckd 2:notckd 1 80 1:ckd 1:ckd 0.992 81 2:notckd 2:notckd 1 82 2:notckd 2:notckd 1 83 1:ckd 1:ckd 1 84 1:ckd 1:ckd 0.992 85 1:ckd 1:ckd 0.992 86 1:ckd 1:ckd 0.992 87 2:notckd 2:notckd 1 88 2:notckd 2:notckd 1 89 1:ckd 1:ckd 0.992 90 2:notckd 2:notckd 1 91 1:ckd 1:ckd 0.992 92 1:ckd 1:ckd 0.992 93 2:notckd 2:notckd 1 94 1:ckd 1:ckd 0.992 95 1:ckd 1:ckd 1 96 1:ckd 1:ckd 0.996 97 2:notckd 2:notckd 0.519 98 2:notckd 2:notckd 1 99 1:ckd 1:ckd 0.992 100 1:ckd 1:ckd 0.992 101 2:notckd 2:notckd 0.906 102 1:ckd 1:ckd 0.971 103 2:notckd 2:notckd 1 104 1:ckd 1:ckd 1 105 1:ckd 1:ckd 0.992 106 2:notckd 2:notckd 0.519 107 1:ckd 1:ckd 0.992 108 1:ckd 1:ckd 0.992 109 2:notckd 2:notckd 1 110 2:notckd 2:notckd 1 111 1:ckd 1:ckd 0.974 112 1:ckd 1:ckd 0.992 113 2:notckd 2:notckd 1 114 1:ckd 1:ckd 0.992 115 1:ckd 1:ckd 0.992 116 1:ckd 1:ckd 0.992 117 2:notckd 2:notckd 1 118 1:ckd 1:ckd 0.992 119 1:ckd 1:ckd 1 120 2:notckd 2:notckd 1 121 2:notckd 2:notckd 1 122 1:ckd 1:ckd 0.992 123 1:ckd 1:ckd 0.992 124 2:notckd 2:notckd 1 125 2:notckd 2:notckd 1 126 1:ckd 1:ckd 0.992 127 1:ckd 1:ckd 0.992 128 1:ckd 1:ckd 0.992 129 1:ckd 1:ckd 0.992 130 1:ckd 1:ckd 0.992 131 2:notckd 2:notckd 1 132 2:notckd 2:notckd 1 133 1:ckd 1:ckd 0.992 134 2:notckd 2:notckd 1 135 1:ckd 1:ckd 0.992 136 1:ckd 1:ckd 0.957 === Evaluation on test split === Time taken to test model on training split: 0.06 seconds === Summary === Correctly Classified Instances 136 100 % Incorrectly Classified Instances 0 0 % Kappa statistic 1 Mean absolute error 0.0226 Root mean squared error 0.0837 Relative absolute error 4.8492 % Root relative squared error 17.4751 % Coverage of cases (0.95 level) 100 % Mean rel. region size (0.95 level) 52.5735 % Total Number of Instances 136 === Detailed Accuracy By Class === TP Rate FP Rate Precision Recall F-Measure MCC ROC Area PRC Area Class 1.000 0.000 1.000 1.000 1.000 1.000 1.000 1.000 ckd 1.000 0.000 1.000 1.000 1.000 1.000 1.000 1.000 notckd Weighted Avg. 1.000 0.000 1.000 1.000 1.000 1.000 1.000 1.000 === Confusion Matrix === a b <-- classified as 88 0 | a = ckd 0 48 | b = notckd

很棒的数据集。

评估,可以利用无指导聚类技术进行优化数据集,逐一通过不同的属性,看看它们各自分类能力,然而这个数据集有点强啊,尴尬。

浙公网安备 33010602011771号

浙公网安备 33010602011771号