How to Build Your Own Highly Available Kubernetes Cluster

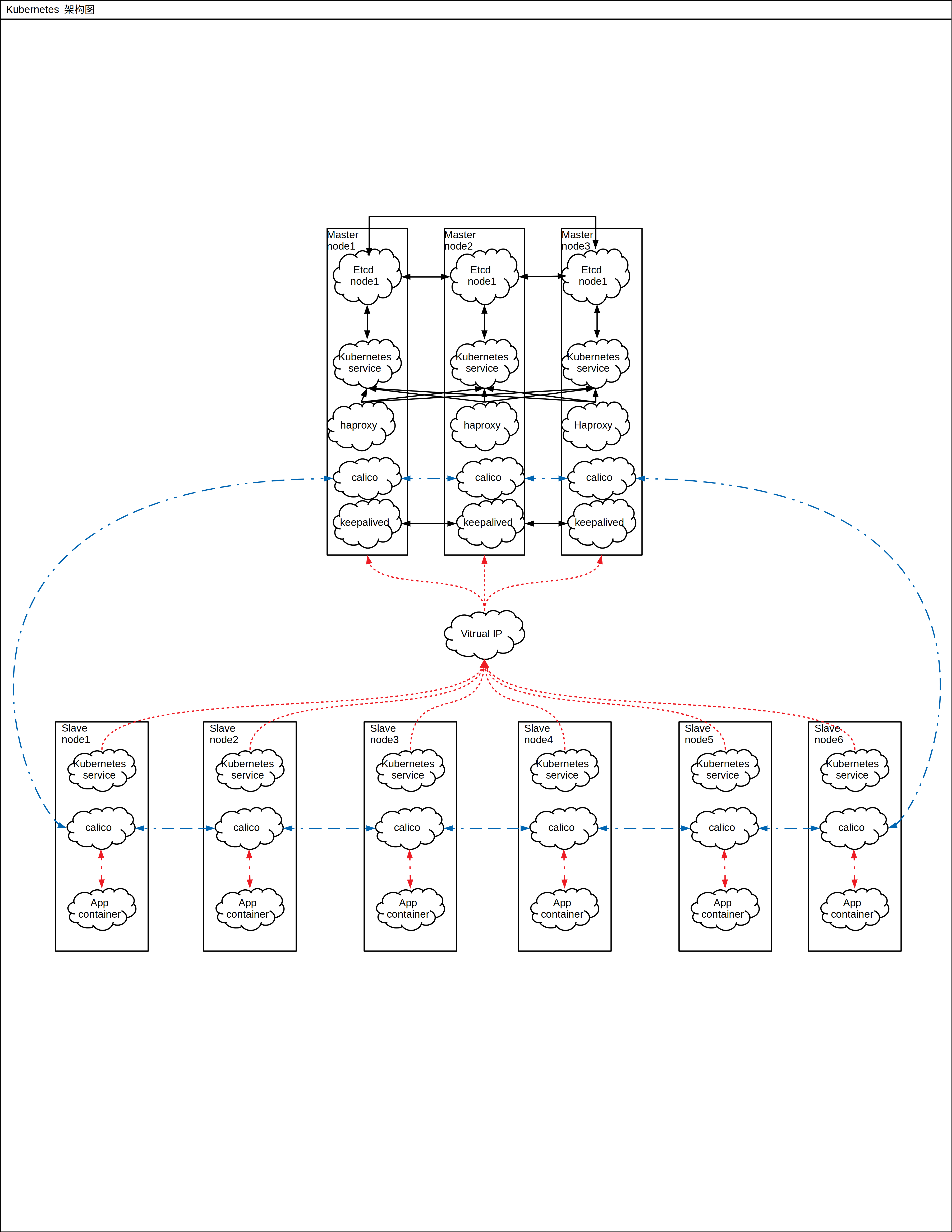

System architecture diagram

Install Docker (on every node)

# install-docker.sh
# system: CentOS 7.5+
yum update -y
yum install -y \
    yum-utils \
    device-mapper-persistent-data \
    lvm2
yum-config-manager -y \
    --add-repo \
    https://download.docker.com/linux/centos/docker-ce.repo
yum update -y
yum install -y docker-ce
systemctl enable docker
systemctl start docker
systemctl status docker
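kubeadm's preflight checks warn when Docker uses the `cgroupfs` cgroup driver, and they recommend `systemd` instead. The steps above leave the driver unchanged. The sketch below switches it and should be run before `kubeadm init`, so that the kubelet and Docker agree on the driver. The function name `write_docker_daemon_json` is hypothetical. The sketch also assumes `/etc/docker/daemon.json` holds no other settings you need; an existing file is backed up first.

```shell
#!/bin/bash
# Sketch: switch Docker to the systemd cgroup driver, as kubeadm's preflight check recommends.
# Assumption: nothing else in daemon.json needs keeping; an existing file is saved as daemon.json.bak.
write_docker_daemon_json() {
  local dir="${1:-/etc/docker}"
  mkdir -p "$dir" || return 1
  if [ -f "$dir/daemon.json" ]; then
    cp "$dir/daemon.json" "$dir/daemon.json.bak" || return 1
  fi
  # Quoted heredoc: written verbatim, no shell expansion
  cat > "$dir/daemon.json" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Usage: run `write_docker_daemon_json && systemctl restart docker && docker info | grep -i 'cgroup driver'`. The last command should then report `systemd`.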

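Change the hostname (on every node)

Edit /etc/hostname so that every node has a different hostname.

Edit /etc/hosts and map each node's hostname to its IP address. If there are too many nodes, you can use DNS instead, or skip this step.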
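You can avoid editing `/etc/hosts` by hand on every node with a small idempotent helper that appends the mappings. This is a sketch, and the function name is hypothetical. The names `node82` and `node83` in the usage line are also hypothetical, because only `node81` appears in this article's config. The IPs are the master addresses from `start_haproxy.sh`.

```shell
#!/bin/bash
# Sketch: append "IP hostname" pairs to a hosts file, skipping pairs that are already present.
# Arguments: the hosts file, then one or more IP=hostname pairs.
add_host_entries() {
  local hosts_file="$1"; shift
  local pair ip name
  touch "$hosts_file" || return 1
  for pair in "$@"; do
    ip="${pair%%=*}"
    name="${pair#*=}"
    # Append only when no line already starts with this exact IP followed by this name
    if ! awk -v ip="$ip" -v n="$name" '$1 == ip && $2 == n { f = 1 } END { exit !f }' "$hosts_file"; then
      printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
  done
}
```

For example: `add_host_entries /etc/hosts 192.168.1.81=node81 192.168.1.82=node82 192.168.1.83=node83`. On CentOS 7, `hostnamectl set-hostname node81` updates `/etc/hostname` and the running hostname in one step.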

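Tune kernel parameters (on every node)

cat > /etc/sysctl.d/k8s.conf <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_nonlocal_bind = 1
net.ipv4.ip_forward = 1
vm.swappiness = 0
EOF
# the net.bridge.* keys only exist once the br_netfilter module is loaded
modprobe br_netfilter
sysctl --system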
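After `sysctl --system`, check that every key actually took effect. The command may print an error for a key it cannot set and then carry on. The sketch below assumes the drop-in file written above, and its function names are hypothetical. It reads the live values directly from `/proc/sys`.

```shell
#!/bin/bash
# Sketch: confirm that each key in a sysctl drop-in file is live in /proc/sys.
# Assumption: the default path is the /etc/sysctl.d/k8s.conf file written above.

# Map a dotted key to its /proc/sys path, e.g. net.ipv4.ip_forward -> /proc/sys/net/ipv4/ip_forward
sysctl_path() {
  echo "/proc/sys/$(echo "$1" | tr '.' '/')"
}

check_sysctl_file() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}" key want have path rc=0
  while IFS='=' read -r key want; do
    # Drop all whitespace so "a = 1" and "a=1" compare the same way
    key="$(echo "$key" | tr -d '[:space:]')"
    want="$(echo "$want" | tr -d '[:space:]')"
    # Skip blank lines and comments (sysctl.conf allows # and ;)
    [ -z "$key" ] && continue
    case "$key" in \#*|\;*) continue ;; esac
    path="$(sysctl_path "$key")"
    if [ ! -r "$path" ]; then
      # net.bridge.* keys are missing until br_netfilter is loaded
      echo "MISSING $key"; rc=1; continue
    fi
    have="$(tr -d '[:space:]' < "$path")"
    if [ "$have" = "$want" ]; then
      echo "OK $key=$have"
    else
      echo "DIFF $key want=$want have=$have"; rc=1
    fi
  done < "$conf"
  return "$rc"
}
```

Usage: `check_sysctl_file` prints one `OK`, `DIFF` or `MISSING` line per key. It returns non-zero if anything is off.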

Enable IPVS and install the ipset and ipvsadm management tools (on every node)

cat > /etc/sysconfig/modules/ipvs.modules <<EOF
#!/bin/bash
modprobe -- ip_vs
modprobe -- ip_vs_rr
modprobe -- ip_vs_wrr
modprobe -- ip_vs_sh
modprobe -- nf_conntrack_ipv4
EOF

# run the script
chmod 755 /etc/sysconfig/modules/ipvs.modules && bash /etc/sysconfig/modules/ipvs.modules && lsmod | grep -e ip_vs -e nf_conntrack_ipv4

# install the management tools
yum install ipset ipvsadm -y
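The `nf_conntrack_ipv4` module listed above exists on CentOS 7's 3.10 kernel. Upstream Linux merged it into `nf_conntrack` in 4.19, however, so the `modprobe` line fails on newer kernels. The sketch below tries the old name first and falls back to the new one. The function names are hypothetical.

```shell
#!/bin/bash
# Sketch: load the IPVS modules plus whichever conntrack module this kernel provides.
# nf_conntrack_ipv4 exists on older kernels (e.g. CentOS 7's 3.10); since Linux 4.19
# its functionality lives in nf_conntrack.
load_conntrack() {
  if modprobe -- nf_conntrack_ipv4 2>/dev/null; then
    echo nf_conntrack_ipv4
  elif modprobe -- nf_conntrack; then
    echo nf_conntrack
  else
    echo "no conntrack module could be loaded" >&2
    return 1
  fi
}

load_ipvs_modules() {
  local m
  for m in ip_vs ip_vs_rr ip_vs_wrr ip_vs_sh; do
    modprobe -- "$m" || { echo "failed to load $m" >&2; return 1; }
  done
  # Prints the name of the conntrack module that was loaded
  load_conntrack
}
```

Usage (as root): run `load_ipvs_modules && lsmod | grep -e ip_vs -e nf_conntrack`.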

Start HAProxy and Keepalived

Run start_haproxy.sh on every node:

#!/bin/bash
# start_haproxy.sh
MasterIP1=192.168.1.81
MasterIP2=192.168.1.82
MasterIP3=192.168.1.83
MasterPort=6443
docker run -d --restart=always --name HAProxy-K8S -p 6444:6444 \
    -e MasterIP1=$MasterIP1 \
    -e MasterIP2=$MasterIP2 \
    -e MasterIP3=$MasterIP3 \
    -e MasterPort=$MasterPort \
    wise2c/haproxy-k8s
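`start_haproxy.sh` passes the master addresses to the `wise2c/haproxy-k8s` image through environment variables. You may prefer to run a stock HAProxy instead. In that case you can generate a TCP pass-through configuration with the same shape: listen on 6444 and balance across the three apiservers on 6443. This is only a sketch under those assumptions, not the file that image produces. The section and server names are hypothetical.

```shell
#!/bin/bash
# Sketch: print a minimal HAProxy config forwarding TCP :6444 to the apiservers.
# Hypothetical: section names k8s-api / k8s-masters and server names master1..N.
# This is NOT the configuration generated inside wise2c/haproxy-k8s.
render_haproxy_cfg() {
  local port="$1"; shift
  local i=1 ip
  cat <<'EOF'
global
    maxconn 4000

defaults
    mode tcp
    timeout connect 5s
    timeout client  1m
    timeout server  1m

frontend k8s-api
    bind *:6444
    default_backend k8s-masters

backend k8s-masters
    balance roundrobin
    option tcp-check
EOF
  # One health-checked server line per apiserver address
  for ip in "$@"; do
    echo "    server master$i $ip:$port check"
    i=$((i + 1))
  done
}
```

Usage: `render_haproxy_cfg 6443 192.168.1.81 192.168.1.82 192.168.1.83 > haproxy.cfg && haproxy -c -f haproxy.cfg` writes the file and then asks HAProxy to validate it.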

Run start_keepalived.sh on every master node:

#!/bin/bash
# start_keepalived.sh
VIRTUAL_IP=192.168.1.90
INTERFACE=ens192        # the NIC that carries the 192.168.1.x addresses; adjust per host
NETMASK_BIT=24
CHECK_PORT=6444
RID=10
VRID=90
MCAST_GROUP=224.0.0.18
docker run -itd --restart=always --name=Keepalived-K8S \
    --net=host --cap-add=NET_ADMIN \
    -e VIRTUAL_IP=$VIRTUAL_IP \
    -e INTERFACE=$INTERFACE \
    -e CHECK_PORT=$CHECK_PORT \
    -e RID=$RID \
    -e VRID=$VRID \
    -e NETMASK_BIT=$NETMASK_BIT \
    -e MCAST_GROUP=$MCAST_GROUP \
    wise2c/keepalived-k8s
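Once Keepalived is running on the masters, exactly one of them should hold the virtual IP 192.168.1.90. The small check below parses `ip -o -4 addr show` output, where the fourth field is `address/prefix`. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: succeed if the given VIP appears in `ip -o -4 addr show` output read from stdin.
# In that one-line-per-address format, field 4 is "address/prefix".
holds_vip() {
  local vip="$1"
  awk -v vip="$vip" '{ split($4, a, "/"); if (a[1] == vip) found = 1 } END { exit !found }'
}
```

Usage: `ip -o -4 addr show dev ens192 | holds_vip 192.168.1.90 && echo 'this master holds the VIP'`. For a quick failover test, stop the container on that master with `docker stop Keepalived-K8S`. The VIP should then move to another master.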

Disable swap (on every node)

swapoff -a
yes | cp /etc/fstab /etc/fstab_bak
cat /etc/fstab_bak | grep -v swap > /etc/fstab
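`grep -v swap` drops every line that contains the word "swap", including comments and any mount whose path happens to contain it. The sketch below is more conservative. It comments out only active entries whose filesystem type is `swap`, and it keeps the same `_bak` backup name. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: comment out active swap entries in fstab instead of deleting lines.
# Only uncommented lines whose third field (filesystem type) is "swap" are changed.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp "$fstab" "${fstab}_bak" || return 1
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "#" $0; next } { print }' \
    "${fstab}_bak" > "$fstab"
}
```

Usage: `swapoff -a && comment_out_swap && free -m`. Afterwards the Swap row should show 0.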

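Install kubeadm

# For well-known reasons (the default repositories cannot be reached from mainland China), use the Aliyun yum mirror instead:
cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
enabled=1
gpgcheck=1
repo_gpgcheck=1
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

# Install kubeadm, kubelet and kubectl. Without a version, yum installs the latest release,
# so pin it to match kubernetesVersion (v1.18.0) in kubeadm-config.yaml:
yum install -y kubeadm-1.18.0 kubelet-1.18.0 kubectl-1.18.0
systemctl enable kubelet && systemctl start kubelet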
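The kubeadm config used in the next step pins `kubernetesVersion: v1.18.0`, so check that the installed kubeadm agrees before running `kubeadm init`. The sketch below is conservative and requires the same major.minor, although kubeadm can also deploy components one minor version older. The function names are hypothetical.

```shell
#!/bin/bash
# Sketch: compare the installed kubeadm with kubernetesVersion in the config file.
# Assumption: kubernetesVersion sits on its own line (optionally quoted).
config_k8s_version() {
  awk '$1 == "kubernetesVersion:" { gsub(/"/, "", $2); print $2 }' "$1"
}

# Succeed when both versions share major.minor; a leading "v" is ignored
same_minor() {
  [ "$(echo "${1#v}" | cut -d. -f1,2)" = "$(echo "${2#v}" | cut -d. -f1,2)" ]
}
```

Usage: `same_minor "$(kubeadm version -o short)" "$(config_k8s_version kubeadm-config.yaml)" || echo "kubeadm does not match kubernetesVersion"`.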

Initialize the master node

Configuration file (kubeadm-config.yaml)

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: abcdef.0123456789abcdef
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 192.168.1.x
  bindPort: 6443
nodeRegistration:
  criSocket: /var/run/dockershim.sock
  name: node81
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "192.168.1.90:6444"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.18.0
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
scheduler: {}
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
featureGates:
  SupportIPVSProxyMode: true
mode: ipvs

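Notes:

- advertiseAddress: the internal IP address of the node on which you run the first kubeadm init.

- bindPort: the API server port, 6443 by default.

- controlPlaneEndpoint: the virtual IP plus the HAProxy frontend port (192.168.1.90:6444).

- imageRepository: the registry images are pulled from. The default registry cannot be reached from mainland China, so the Aliyun mirror is used here. If you have a private registry that holds all the required images, point this at it instead.

- kubernetesVersion: the version used to select which images to pull.

- serviceSubnet: the range from which Service ClusterIPs are allocated, so it limits how many Services you can create. It is not the Calico pod network; the pod IP range would be set with networking.podSubnet, which this config leaves unset.

- The last document (KubeProxyConfiguration) tells kube-proxy to run in IPVS mode.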
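Three ranges must not overlap: the Service CIDR, the pod CIDR that Calico allocates from, and the node network (192.168.1.0/24 here). This matters in this setup because Calico's manifest has historically defaulted its pool to 192.168.0.0/16, which contains the node network. Two IPv4 CIDRs overlap exactly when their network addresses agree on the shorter of the two prefixes. The sketch below checks that condition. The function names are hypothetical.

```shell
#!/bin/bash
# Sketch: detect whether two IPv4 CIDR blocks overlap (bash 64-bit arithmetic).

# Dotted quad -> 32-bit integer
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if the blocks overlap: compare both network addresses under the shorter prefix
cidr_overlap() {
  local n1="${1%/*}" p1="${1#*/}" n2="${2%/*}" p2="${2#*/}"
  local p=$(( p1 < p2 ? p1 : p2 ))
  local mask=$(( p == 0 ? 0 : (0xFFFFFFFF << (32 - p)) & 0xFFFFFFFF ))
  (( ( $(ip_to_int "$n1") & mask ) == ( $(ip_to_int "$n2") & mask ) ))
}
```

Usage: `cidr_overlap 10.96.0.0/12 192.168.1.0/24` fails, meaning there is no overlap, which is good. `cidr_overlap 192.168.0.0/16 192.168.1.0/24` succeeds, which is the clash to avoid.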

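Initialize the Kubernetes master node

kubeadm init --config=kubeadm-config.yaml --upload-certs > kubeadm-init.log

Notes:

- --upload-certs uploads the control-plane certificates to the cluster. Additional masters then fetch them automatically when they join, so you do not have to copy them by hand.

- --config the path and name of the configuration file edited above.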

Configure kubectl

# as root
cat << EOF >> ~/.bashrc
export KUBECONFIG=/etc/kubernetes/admin.conf
EOF
source ~/.bashrc

# as a regular user
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

Install the Calico network plugin

Manifest: download it from https://docs.projectcalico.org/manifests/calico.yaml

Deploy Calico

kubectl apply -f calico.yaml
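Nodes report `NotReady` until a CNI plugin is running. After applying `calico.yaml`, you can therefore poll until every node turns `Ready`. In the sketch below, the function names, the 60-try limit and the 5-second interval are arbitrary choices. A status such as `Ready,SchedulingDisabled` is deliberately treated as not ready.

```shell
#!/bin/bash
# Sketch: wait until every node reports Ready.
# all_ready reads `kubectl get nodes --no-headers` output on stdin; column 2 is STATUS.
all_ready() {
  awk 'NF == 0 { next } { n++ } $2 != "Ready" { bad = 1 } END { exit (n == 0 || bad) }'
}

wait_for_nodes() {
  local tries="${1:-60}" i
  for ((i = 0; i < tries; i++)); do
    kubectl get nodes --no-headers 2>/dev/null | all_ready && return 0
    sleep 5
  done
  return 1
}
```

Usage: `wait_for_nodes && kubectl -n kube-system get pods -l k8s-app=calico-node`.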

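Add the other master nodes and the worker (slave) nodes

The log written during initialization (kubeadm-init.log) contains the join commands. Find them and run each one on the corresponding nodes.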
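The join commands in `kubeadm-init.log` are split across lines with trailing backslashes. The sketch below folds each one onto a single line, so the control-plane and worker variants can be separated with `grep`. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: print each "kubeadm join" command from a kubeadm init log on one line.
# Continuation lines ending in a backslash are folded into the command they belong to.
extract_join_commands() {
  awk '
    /^[[:space:]]*kubeadm join/ { cmd = ""; inside = 1 }
    inside {
      line = $0
      sub(/^[[:space:]]+/, "", line)              # drop indentation
      cont = sub(/[[:space:]]*\\$/, "", line)     # strip a trailing backslash, note if present
      cmd = (cmd == "" ? line : cmd " " line)
      if (!cont) { print cmd; inside = 0 }
    }
  ' "${1:-kubeadm-init.log}"
}
```

Usage: `extract_join_commands kubeadm-init.log | grep -- '--control-plane'` gives the master command. `grep -v -- '--control-plane'` gives the worker one.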

Add worker nodes

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.1.90:6444 --token abcdef.012345678aaaaaaaa \
    --discovery-token-ca-cert-hash sha256:2bde1f5f2e9b47f90acd2be8442f6ca7abcaaaaaaaaaaaaaa

Add master nodes

You can now join any number of the control-plane node running the following command on each as root:

kubeadm join 192.168.1.90:6444 --token abcdef.0123456789abcdef \
    --discovery-token-ca-cert-hash sha256:2bde1f5f2e9b47f90acd2be8442f6ca7abcfd1cc16135fe77aaaaaaaaaa \
    --control-plane --certificate-key 2b41e901aac5fd70da7ef9371758d59c32a61f52cb65bdcf7aaaaa
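Both join commands eventually stop working, for two reasons. The bootstrap token in `kubeadm-config.yaml` has `ttl: 24h0m0s`. In addition, kubeadm deletes the certificates uploaded by `--upload-certs` after two hours, which invalidates the certificate key. On an existing master, `kubeadm token create --print-join-command` and `kubeadm init phase upload-certs --upload-certs` produce fresh values. The sketch below combines them. The function name is hypothetical.

```shell
#!/bin/bash
# Sketch: regenerate the worker and control-plane join commands on an existing master.
print_join_commands() {
  local worker_cmd out cert_key
  worker_cmd="$(kubeadm token create --print-join-command)" || return 1
  # Re-upload the certificates; the new certificate key is the last line printed
  out="$(kubeadm init phase upload-certs --upload-certs)" || return 1
  cert_key="$(printf '%s\n' "$out" | tail -n 1)"
  echo "worker: $worker_cmd"
  echo "master: $worker_cmd --control-plane --certificate-key $cert_key"
}
```

Usage: run `print_join_commands` as root on a master that has already joined the cluster. Then copy the matching line to the new node.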

References:

https://blog.csdn.net/networken/article/details/89599004#t9/

https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/high-availability/

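Recommendations:

When you manage relatively few nodes and the application architecture is fairly simple, consider Docker Swarm instead. It is cheap to learn and set up, and it offers good value for money.

Once the number of nodes grows past about 100, the advantages of Kubernetes become much more apparent.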

浙公网安备 33010602011771号