Tensorflow2 (Pre-course) --- 7.6 CIFAR-10 Classification - Layer Approach - Convolutional Neural Network - Inception10

I. Summary

One-sentence summary:

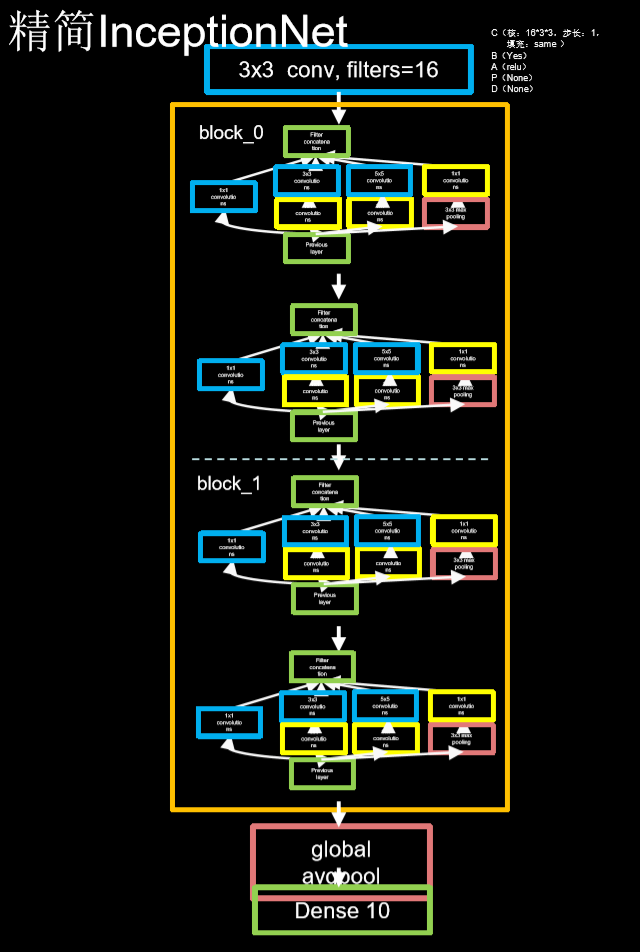

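InceptionNet: uses convolution kernels of different sizes within a single layer to improve the network's perceptual ability, and uses batch normalization to mitigate vanishing gradients.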
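To make the batch normalization step concrete, here is a minimal NumPy sketch of its two modes. The function name `batch_norm` and the toy input are illustrative assumptions, not part of the course code. The learnable scale and offset are left out for brevity, and the `momentum` and `eps` defaults are chosen to match those of the Keras `BatchNormalization` layer.

```python
import numpy as np

def batch_norm(x, moving_mean, moving_var, training, momentum=0.99, eps=1e-3):
    """Normalize x of shape (batch, features); return the output and the moving statistics."""
    if training:
        # Training mode: normalize with the statistics of the current batch
        # and fold them into the moving averages.
        mean = x.mean(axis=0)
        var = x.var(axis=0)
        moving_mean = momentum * moving_mean + (1 - momentum) * mean
        moving_var = momentum * moving_var + (1 - momentum) * var
    else:
        # Inference mode: normalize with the accumulated moving statistics.
        mean, var = moving_mean, moving_var
    y = (x - mean) / np.sqrt(var + eps)
    return y, moving_mean, moving_var

# Toy batch: 3 samples, 2 features on very different scales.
x = np.array([[1.0, 10.0], [3.0, 30.0], [5.0, 50.0]])
moving_mean = np.zeros(2)  # initial moving mean
moving_var = np.ones(2)    # initial moving variance

y_train, new_mean, new_var = batch_norm(x, moving_mean, moving_var, training=True)
y_infer, _, _ = batch_norm(x, moving_mean, moving_var, training=False)

print(y_train.mean(axis=0))  # each feature is centered in training mode
print(new_mean)              # moving mean after one update
print(y_infer)               # inference mode with the untouched initial statistics
```

In training mode both features end up on the same scale, which is what keeps activations in a range where gradients do not vanish. In this sketch, as in Keras, the moving statistics are only updated when the layer runs in training mode.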

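InceptionNet: each block has four parallel branches whose outputs are concatenated along the channel axis: 1. a 1*1 convolution; 2. a 1*1 convolution followed by a 3*3 convolution; 3. a 1*1 convolution followed by a 5*5 convolution; 4. a 3*3 max pooling followed by a 1*1 convolution.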

class ConvBNRelu(Model):
    def __init__(self, ch, kernelsz=3, strides=1, padding='same'):
        super(ConvBNRelu, self).__init__()
        self.model = tf.keras.models.Sequential([
            Conv2D(ch, kernelsz, strides=strides, padding=padding),
            BatchNormalization(),
            Activation('relu')
        ])

    def call(self, x):
        # With training=False, BN normalizes with the moving mean/variance accumulated over the
        # training set; with training=True, it uses the mean/variance of the current batch.
        # training=False works better at inference.
        # Caveat: hard-coding training=False also keeps BN in inference mode during fit().
        x = self.model(x, training=False)
        return x


class InceptionBlk(Model):
    def __init__(self, ch, strides=1):
        super(InceptionBlk, self).__init__()
        self.ch = ch
        self.strides = strides
        self.c1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c2_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c2_2 = ConvBNRelu(ch, kernelsz=3, strides=1)
        self.c3_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c3_2 = ConvBNRelu(ch, kernelsz=5, strides=1)
        self.p4_1 = MaxPool2D(3, strides=1, padding='same')
        self.c4_2 = ConvBNRelu(ch, kernelsz=1, strides=strides)

    def call(self, x):
        x1 = self.c1(x)
        x2_1 = self.c2_1(x)
        x2_2 = self.c2_2(x2_1)
        x3_1 = self.c3_1(x)
        x3_2 = self.c3_2(x3_1)
        x4_1 = self.p4_1(x)
        x4_2 = self.c4_2(x4_1)
        # concat along axis=channel
        x = tf.concat([x1, x2_2, x3_2, x4_2], axis=3)
        return x


class Inception10(Model):
    def __init__(self, num_blocks, num_classes, init_ch=16, **kwargs):
        super(Inception10, self).__init__(**kwargs)
        self.in_channels = init_ch
        self.out_channels = init_ch
        self.num_blocks = num_blocks
        self.init_ch = init_ch
        self.c1 = ConvBNRelu(init_ch)
        self.blocks = tf.keras.models.Sequential()
        # The structure is simple: 4 layers in 2 blocks; the first layer of each block is different
        for block_id in range(num_blocks):
            for layer_id in range(2):
                # The first InceptionBlk of each block uses strides=2
                if layer_id == 0:
                    block = InceptionBlk(self.out_channels, strides=2)
                else:
                    block = InceptionBlk(self.out_channels, strides=1)
                self.blocks.add(block)
            # enlarge out_channels per block
            # The channel count doubles after each block; ResNet and VGG appear to do the same
            self.out_channels *= 2
        self.p1 = GlobalAveragePooling2D()
        self.f1 = Dense(num_classes, activation='softmax')

    def call(self, x):
        x = self.c1(x)
        x = self.blocks(x)
        x = self.p1(x)
        y = self.f1(x)
        return y


model = Inception10(num_blocks=2, num_classes=10)
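To see how the tensor shape changes through the network above, the following pure-Python sketch traces it for a 32*32 input. It assumes that a layer with 'same' padding produces a spatial size of ceil(size / stride). The helper names (`same_out`, `inception_blk_shape`, `inception10_shapes`) are made up for this illustration, and the batch dimension is omitted.

```python
import math

def same_out(size, stride):
    """Spatial size after a 'same'-padded convolution or pooling layer."""
    return math.ceil(size / stride)

def inception_blk_shape(h, w, ch, strides):
    """Output shape of one InceptionBlk: four branches of ch channels each, concatenated."""
    # Every branch applies the stride exactly once, so all four outputs agree spatially.
    return same_out(h, strides), same_out(w, strides), 4 * ch

def inception10_shapes(h=32, w=32, num_blocks=2, init_ch=16):
    """List (layer name, output shape) pairs from the first convolution to global pooling."""
    shapes = [("c1", (h, w, init_ch))]  # 3*3 convolution, stride 1
    ch = init_ch
    c = init_ch
    for block_id in range(num_blocks):
        for layer_id in range(2):
            strides = 2 if layer_id == 0 else 1
            h, w, c = inception_blk_shape(h, w, ch, strides)
            shapes.append((f"block{block_id}_layer{layer_id}", (h, w, c)))
        ch *= 2  # channel count doubles after each block
    shapes.append(("global_avg_pool", (c,)))
    return shapes

for name, shape in inception10_shapes():
    print(name, shape)
```

With the defaults used here, the feature map shrinks from 32*32 to 8*8 while the channel count grows from 16 to 128, and the final Dense layer maps those 128 pooled features to the 10 classes.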

II. CIFAR-10 Classification - Layer Approach - Convolutional Neural Network - Inception10

Location of the course video for this blog post:

Steps

1. Load the dataset

2. Split the dataset (into a training set and a test set)

3. Build the model

4. Train the model

5. Evaluate the model

Requirements

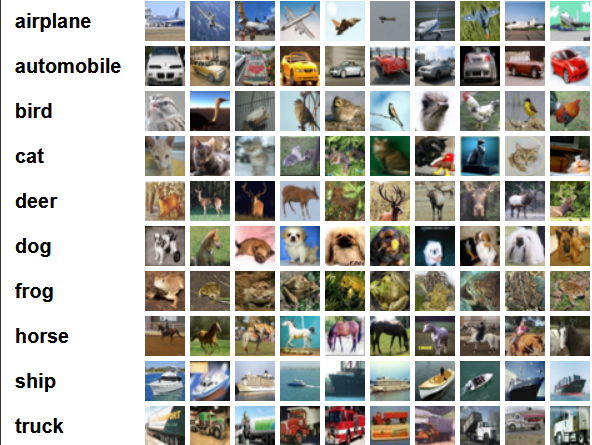

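CIFAR-10 (object classification)

The dataset contains 60,000 color images of 32*32 pixels in 10 classes, with 6,000 images per class. 50,000 images are used for training, organized as five training batches of 10,000 images each; the other 10,000 are used for testing and form a single test batch. The test batch contains exactly 1,000 randomly selected images from each of the 10 classes. The remaining images are shuffled to form the training batches. Note that a single training batch does not necessarily contain the same number of images from each class, but across all training batches each class has exactly 5,000 images.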
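The counts in the description above can be checked with a few lines of arithmetic. This is only a sanity check of the stated numbers; the variable names are chosen for this illustration.

```python
num_classes = 10
images_per_class = 6000
test_per_class = 1000
num_train_batches = 5

total_images = num_classes * images_per_class              # all images in the dataset
test_images = num_classes * test_per_class                 # the single test batch
train_images = total_images - test_images                  # everything left for training
train_per_class = images_per_class - test_per_class        # training images of each class
images_per_train_batch = train_images // num_train_batches

print(total_images, train_images, test_images)
print(train_per_class, images_per_train_batch)
```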

In [1]:

import pandas as pd

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

1. Load the dataset

The dataset can be loaded directly from TensorFlow's built-in datasets.

In [2]:

(train_x, train_y), (test_x, test_y) = tf.keras.datasets.cifar10.load_data()

print(train_x.shape, train_y.shape)

These are 32*32 color images. How should the three RGB channels be handled?

In [3]:

plt.imshow(train_x[0])

plt.show()

In [4]:

plt.figure()

plt.imshow(train_x[1])

plt.figure()

plt.imshow(train_x[2])

plt.show()

In [5]:

print(test_y)

In [6]:

# Pixel values (RGB)

np.max(train_x[0])

Out[6]:

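2. Split the dataset (into a training set and a test set)

The split was already done in the previous step: load_data() returns the training set and the test set separately.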

In [7]:

# How should image data be normalized?

# Simply divide by 255

train_x = train_x/255.0

test_x = test_x/255.0
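As a small self-contained check of what this division does, the sketch below applies it to a randomly generated stand-in for a batch of images. The array `fake_images` is hypothetical test data, not CIFAR-10.

```python
import numpy as np

# Hypothetical stand-in for a few CIFAR-10 images: (batch, height, width, channels), uint8.
rng = np.random.default_rng(0)
fake_images = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)

# True division by a float promotes the uint8 pixels to floating point.
scaled = fake_images / 255.0

print(fake_images.dtype, scaled.dtype)
print(scaled.min() >= 0.0, scaled.max() <= 1.0)
```

Because uint8 pixel values lie between 0 and 255, dividing by 255 maps them into the range [0, 1] without changing the array shape.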

In [8]:

# Pixel values (RGB)

np.max(train_x[0])

Out[8]:

In [9]:

train_y=train_y.flatten()

test_y=test_y.flatten()

train_y = tf.one_hot(train_y, depth=10)

test_y = tf.one_hot(test_y, depth=10)

print(test_y.shape)
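The label handling above can be illustrated with a NumPy sketch that mimics it for this particular case. The labels below are hypothetical, shaped (N, 1) like the ones returned by cifar10.load_data(). Indexing an identity matrix is used as a stand-in for tf.one_hot, not as its implementation.

```python
import numpy as np

# Hypothetical class ids in the (N, 1) layout of the loaded labels.
labels = np.array([[3], [0], [9], [3]], dtype=np.uint8)

flat = labels.flatten()  # shape (4,): one class id per image

# Row i of the 10*10 identity matrix is the one-hot vector for class i.
one_hot = np.eye(10, dtype=np.float32)[flat]

print(one_hot.shape)
print(one_hot.argmax(axis=1))  # recovers the original class ids
```

Flattening first matters: applying tf.one_hot to labels of shape (N, 1) would produce shape (N, 1, 10), which does not match the model's (N, 10) softmax output.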

3. Build the model

What kind of model should be built:

In [11]:

from tensorflow.keras.layers import Conv2D, BatchNormalization, Activation, MaxPool2D, Dropout, Flatten, Dense, \
    GlobalAveragePooling2D

from tensorflow.keras import Model

In [13]:

class ConvBNRelu(Model):
    def __init__(self, ch, kernelsz=3, strides=1, padding='same'):
        super(ConvBNRelu, self).__init__()
        self.model = tf.keras.models.Sequential([
            Conv2D(ch, kernelsz, strides=strides, padding=padding),
            BatchNormalization(),
            Activation('relu')
        ])

    def call(self, x):
        # With training=False, BN normalizes with the moving mean/variance accumulated over the
        # training set; with training=True, it uses the mean/variance of the current batch.
        # training=False works better at inference.
        # Caveat: hard-coding training=False also keeps BN in inference mode during fit().
        x = self.model(x, training=False)
        return x


class InceptionBlk(Model):
    def __init__(self, ch, strides=1):
        super(InceptionBlk, self).__init__()
        self.ch = ch
        self.strides = strides
        self.c1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c2_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c2_2 = ConvBNRelu(ch, kernelsz=3, strides=1)
        self.c3_1 = ConvBNRelu(ch, kernelsz=1, strides=strides)
        self.c3_2 = ConvBNRelu(ch, kernelsz=5, strides=1)
        self.p4_1 = MaxPool2D(3, strides=1, padding='same')
        self.c4_2 = ConvBNRelu(ch, kernelsz=1, strides=strides)

    def call(self