Tensorflow2 (pre-course) --- 7.7, cifar10 classification - layer-by-layer approach - convolutional neural network - ResNet18


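I. Summary

One-sentence summary:

The ResNet18-style network gives fairly stable results, with test-set accuracy around 81%. Batch size also appears to affect accuracy.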

# Build the container
model = tf.keras.Sequential()
# ===================================================
# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer
model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), strides=1, padding='same', use_bias=False, input_shape=(32, 32, 3))) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer
model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ===================================================
# ResnetBlock, different dimensions: 3*3 conv layer, 3*3 conv layer, 1*1 conv layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(1, 1), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ===================================================
# ResnetBlock, different dimensions: 3*3 conv layer, 3*3 conv layer, 1*1 conv layer
model.add(tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
model.add(tf.keras.layers.Conv2D(filters=256, kernel_size=(1, 1), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer
model.add(tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=256, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ===================================================
# ResnetBlock, different dimensions: 3*3 conv layer, 3*3 conv layer, 1*1 conv layer
model.add(tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
model.add(tf.keras.layers.Conv2D(filters=512, kernel_size=(1, 1), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer
model.add(tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=512, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
#
model.add(tf.keras.layers.GlobalAveragePooling2D())
# Output layer
model.add(tf.keras.layers.Dense(10, activation='softmax', kernel_regularizer=tf.keras.regularizers.l2()))
# Model structure
model.summary()
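To see what the stride-2 layers do to the feature maps, the spatial size can be traced by hand. With padding='same', a convolution with stride s maps a side length n to ceil(n / s). The sketch below is plain Python and needs no TensorFlow. The `STRIDES` list is transcribed from the model above, and the helper name `trace_sizes` is made up for illustration.

```python
import math

# Strides of the 19 Conv2D layers in the Sequential model above, in order.
STRIDES = (
    [1, 1, 1, 1]          # two 64-filter blocks
    + [2, 1, 2, 1, 1]     # 128-filter blocks: 3*3 s2, 3*3, 1*1 s2, 3*3, 3*3
    + [2, 1, 2, 1, 1]     # 256-filter blocks
    + [2, 1, 2, 1, 1]     # 512-filter blocks
)

def trace_sizes(input_size, strides):
    """Return the feature-map side length after each 'same'-padded conv."""
    sizes = []
    size = input_size
    for s in strides:
        size = math.ceil(size / s)
        sizes.append(size)
    return sizes

sizes = trace_sizes(32, STRIDES)
print(sizes)
```

Under these assumptions each "different dimensions" block shrinks the map by a factor of four (two stride-2 layers), so the map is already 1*1 when it reaches the 512-filter layers, and the global average pooling at the end only removes the two singleton axes.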

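1. What does the 1*1 convolution do?

Adding an extra 1*1 convolution with stride 2 lets a block change dimensions: it halves the feature-map height and width while producing the doubled number of channels. In a standard ResNet this layer sits on the shortcut branch, so that the shortcut matches the main branch before the two are added. In the Sequential model here it is simply stacked after the two 3*3 convolutions, and there is no shortcut addition.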

# ResnetBlock, different dimensions: 3*3 conv layer, 3*3 conv layer, 1*1 conv layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(1, 1), strides=2, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu')) # activation layer
model.add(tf.keras.layers.Conv2D(filters=128, kernel_size=(3, 3), strides=1, padding='same', use_bias=False)) # convolution layer
model.add(tf.keras.layers.BatchNormalization()) # BN layer
model.add(tf.keras.layers.Activation('relu'))
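For comparison, here is a minimal sketch of how a residual block with a skip connection might look in the Keras functional API, since `tf.keras.Sequential` cannot express the branch-and-add structure. This is not the code used in this post. The function name `residual_block` and the input shape are assumptions chosen for illustration; the 1*1 convolution appears only on the shortcut, and only when the shapes would otherwise not match.

```python
import tensorflow as tf

def residual_block(x, filters, stride=1):
    """Basic two-conv residual block with an optional 1*1 projection shortcut."""
    shortcut = x
    # Main branch: 3*3 conv -> BN -> ReLU -> 3*3 conv -> BN
    y = tf.keras.layers.Conv2D(filters, 3, strides=stride, padding='same', use_bias=False)(x)
    y = tf.keras.layers.BatchNormalization()(y)
    y = tf.keras.layers.Activation('relu')(y)
    y = tf.keras.layers.Conv2D(filters, 3, strides=1, padding='same', use_bias=False)(y)
    y = tf.keras.layers.BatchNormalization()(y)
    # Shortcut branch: project with a 1*1 conv only if the shape changes
    if stride != 1 or x.shape[-1] != filters:
        shortcut = tf.keras.layers.Conv2D(filters, 1, strides=stride, padding='same', use_bias=False)(x)
        shortcut = tf.keras.layers.BatchNormalization()(shortcut)
    # Add the two branches, then apply the final activation
    y = tf.keras.layers.Add()([y, shortcut])
    return tf.keras.layers.Activation('relu')(y)

# Hypothetical input: a 32*32 feature map with 64 channels
inputs = tf.keras.Input(shape=(32, 32, 64))
outputs = residual_block(inputs, filters=128, stride=2)
block = tf.keras.Model(inputs, outputs)
print(block.output_shape)
```

With stride 2 and 128 filters, both branches produce a 16*16 map with 128 channels, which is what allows the element-wise addition.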

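II. cifar10 classification - layer-by-layer approach - convolutional neural network - ResNet18

Video location for the course this post accompanies:

Steps

1. Load the dataset

2. Split the dataset (into a training set and a test set)

3. Build the model

4. Train the model

5. Check whether the model meets the requirements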

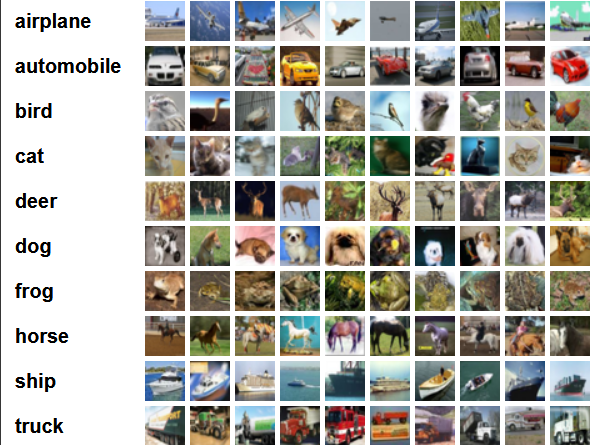

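cifar10 (object classification)

The dataset contains 60,000 color images of 32*32 pixels in 10 classes, with 6,000 images per class. 50,000 images are used for training and are organized into 5 training batches of 10,000 images each; the other 10,000 are used for testing and form a single batch. The test batch contains 1,000 randomly selected images from each of the 10 classes. The remaining images are shuffled to form the training batches. Note that a single training batch does not necessarily contain the same number of images from each class, but across all training batches each class has 5,000 images.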
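The per-class counts described above can be checked with `np.bincount` once the labels are loaded. The sketch below uses a small stand-in label array so that it runs without downloading anything; the names `class_counts` and `fake_train_y` are hypothetical.

```python
import numpy as np

def class_counts(labels, num_classes=10):
    """Count how many labels fall into each class."""
    labels = np.asarray(labels).reshape(-1)   # accept (N, 1) or (N,) labels
    return np.bincount(labels, minlength=num_classes)

# Stand-in labels shaped (N, 1), 5 images per class
fake_train_y = np.repeat(np.arange(10), 5).reshape(-1, 1)
counts = class_counts(fake_train_y)
print(counts)
```

Applied to the real training labels, the description above implies 5,000 for every class.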

In [1]:

import pandas as pd

import numpy as np

import tensorflow as tf

import matplotlib.pyplot as plt

1. Load the dataset

The dataset can be loaded directly from TensorFlow's built-in datasets (tf.keras.datasets).

In [2]:

(train_x, train_y), (test_x, test_y) = tf.keras.datasets.cifar10.load_data()

print(train_x.shape, train_y.shape)

These are 32*32 color images. How should the three RGB channels be handled?
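One way to answer the RGB question: a Conv2D layer does not process the three channels separately. Each filter spans all input channels, so a 3*3 kernel on an RGB image has 3*3*3 weights, and the channels are handled simply by giving the first layer an input shape of (32, 32, 3). The sketch below counts the weights; `conv2d_params` is a hypothetical helper, not a Keras function.

```python
def conv2d_params(kernel_size, in_channels, filters, use_bias=True):
    """Number of trainable parameters in a square-kernel 2D convolution."""
    weights = kernel_size * kernel_size * in_channels * filters
    return weights + (filters if use_bias else 0)

# First layer of the model in this post: 3*3 kernel, RGB input, 64 filters, no bias
first_layer = conv2d_params(3, 3, 64, use_bias=False)
print(first_layer)  # 3 * 3 * 3 * 64 = 1728
```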

In [3]:

plt.imshow(train_x[0])

plt.show()

In [4]:

plt.figure()

plt.imshow(train_x[1])

plt.figure()

plt.imshow(train_x[2])

plt.show()

In [5]:

print(test_y)

In [6]:

# Pixel values (RGB)

np.max(train_x[0])

Out[6]:

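2. Split the dataset (into a training set and a test set)

The split was already done in the previous step: load_data() returns the training set and the test set separately.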

In [7]:

# How to normalize image data?

# Simply divide by 255

train_x = train_x/255.0

test_x = test_x/255.0
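A side effect of dividing a uint8 array by 255.0 is that NumPy promotes the result to float64. The sketch below, on a small stand-in array rather than the real data, compares that with casting to float32 first, which halves the memory used. Whether this matters depends on available memory, so treat it as an optional variation rather than a required change.

```python
import numpy as np

# Stand-in for a batch of CIFAR-10 images: uint8 values in [0, 255]
rng = np.random.default_rng(0)
fake_x = rng.integers(0, 256, size=(4, 32, 32, 3), dtype=np.uint8)

scaled64 = fake_x / 255.0                      # uint8 / float -> float64
scaled32 = fake_x.astype(np.float32) / 255.0   # stays float32

print(scaled64.dtype, scaled32.dtype)
print(scaled64.nbytes, scaled32.nbytes)
```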

In [8]:

# Pixel values (RGB)

np.max(train_x[0])

Out[8]:

In [9]:

train_y=train_y.flatten()

test_y=test_y.flatten()

train_y = tf.one_hot(train_y, depth=10)

test_y = tf.one_hot(test_y, depth=10)

print(test_y.shape)
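The flatten() calls above matter because the CIFAR-10 labels come with shape (N, 1); one-hot encoding them directly would give shape (N, 1, 10) instead of (N, 10). A NumPy sketch of the same encoding, using a tiny stand-in label array and a hypothetical helper `one_hot`:

```python
import numpy as np

def one_hot(labels, depth=10):
    """One-hot encode integer labels of shape (N,) or (N, 1) into (N, depth)."""
    labels = np.asarray(labels).reshape(-1)       # (N, 1) -> (N,)
    return np.eye(depth, dtype=np.float32)[labels]

fake_y = np.array([[3], [0], [9]])   # shaped like the raw CIFAR-10 labels
encoded = one_hot(fake_y)
print(encoded.shape)
```

An alternative, if one-hot labels are not needed elsewhere, is to keep the integer labels and train with the 'sparse_categorical_crossentropy' loss.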

3. Build the model

What kind of model should we build?

In [ ]:

# The two kinds of ResNet block

# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer

# ResnetBlock, different dimensions: 3*3 conv layer, 3*3 conv layer, 1*1 conv layer

# ResnetBlock, same dimensions: 3*3 conv layer, 3*3 conv layer

model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3),strides=1,padding='same',use_bias=False,input_shape=(32,32,3))) # convolution layer

model.add(tf.keras.layers.BatchNormalization()) # BN layer

model.add(tf.keras.layers.Activation('relu')) # activation layer

model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3),strides=1,padding='same',use_bias=False)) # convolution layer

model.add(tf.keras.layers.BatchNormalization()) # BN layer

model.add(tf.keras.layers.Activation('relu'))

# ResnetBlock, different dimensions: 3*3 conv layer, 3*3 conv layer, 1*1 conv layer

model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3),strides=2,padding='same',use_bias=False)) # convolution layer

model.add(tf.keras.layers.BatchNormalization()) # BN layer

model.add(tf.keras.layers.Activation('relu')) # activation layer

model.add(tf.keras.layers.Conv2D(filters=64, kernel_size=(3, 3),strides=1,padding='same',use_bias=False