Sending messages with the Redis Stream data type from Java and Python

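The previous post introduced the Redis stream data type, how it is used, where it fits, and what to watch out for. In this post I use a Spring Boot project to produce stream messages, and consume them by consumer group with both Java consumers and a Python consumer.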

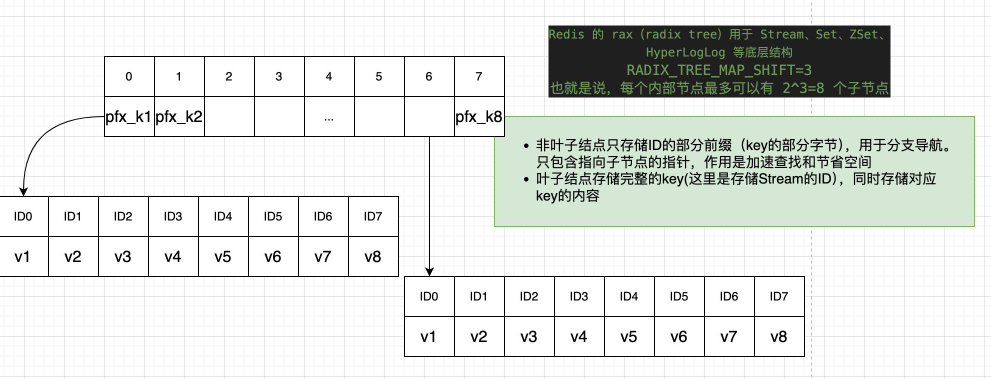

Figure: the basic structure of a stream in Redis, which is implemented with a radix tree.

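Environment requirements

- Redis version: preferably the latest stable release. Streams were introduced in 5.0, but later versions optimized some commands, adjusted some parameters, and added new commands.

- I use Spring Boot 3.4.5 here.

- To inspect the data in Redis from a client, I recommend the official Redis Insight. It makes it easy to see a stream's groups, consumers, PEL (pending entries list), and so on.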

Implementation

1. Maven dependencies

```xml
<?xml version="1.0" encoding="UTF-8"?>
<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
         xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd">
    <modelVersion>4.0.0</modelVersion>
    <parent>
        <groupId>org.springframework.boot</groupId>
        <artifactId>spring-boot-starter-parent</artifactId>
        <version>3.4.5</version>
        <relativePath/> <!-- lookup parent from repository -->
    </parent>
    <groupId>com.example</groupId>
    <artifactId>stream-demo</artifactId>
    <version>0.0.1-SNAPSHOT</version>
    <name>demo2</name>
    <description>Demo project for Spring Boot</description>
    <properties>
        <java.version>21</java.version>
    </properties>
    <dependencies>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-data-redis</artifactId>
        </dependency>
        <dependency>
            <groupId>com.fasterxml.jackson.core</groupId>
            <artifactId>jackson-databind</artifactId> <!-- For JSON serialization -->
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-autoconfigure-processor</artifactId>
            <scope>compile</scope>
        </dependency>
        <dependency>
            <groupId>org.projectlombok</groupId>
            <artifactId>lombok</artifactId>
            <scope>provided</scope>
        </dependency>
        <dependency>
            <groupId>org.springframework.boot</groupId>
            <artifactId>spring-boot-starter-test</artifactId>
            <scope>test</scope>
        </dependency>
    </dependencies>
    <build>
        <plugins>
            <plugin>
                <groupId>org.springframework.boot</groupId>
                <artifactId>spring-boot-maven-plugin</artifactId>
            </plugin>
        </plugins>
    </build>
</project>
```

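2. Configuration file and properties class

- The Redis connection settings are omitted here; there is nothing special about them.

- A custom properties file defines the stream settings:

```properties
# Key of the stream
app.redis.stream.product-key=product-stream
# Name of the consumer group
app.redis.stream.product-group=product-group
# Names of the consumers, comma-separated
app.redis.stream.product-consumers=jconsumer-1,jconsumer-2
```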

- A configuration class reads the properties:

```java
import lombok.Data;
import org.springframework.boot.context.properties.ConfigurationProperties;
import org.springframework.stereotype.Component;

import java.util.List;

@Component
@ConfigurationProperties(prefix = "app.redis.stream", ignoreInvalidFields = true)
@Data
public class StreamProperties {

    private String productKey;

    private String productGroup;

    private List<String> productConsumers;
}
```

3. Redis configuration class

```java
import com.fasterxml.jackson.databind.ObjectMapper;
import lombok.extern.slf4j.Slf4j;
import org.springframework.boot.CommandLineRunner;
import org.springframework.context.annotation.Bean;
import org.springframework.context.annotation.Configuration;
import org.springframework.core.NestedExceptionUtils;
import org.springframework.data.redis.connection.RedisConnection;
import org.springframework.data.redis.connection.RedisConnectionFactory;
import org.springframework.data.redis.connection.stream.*;
import org.springframework.data.redis.core.RedisTemplate;
import org.springframework.data.redis.serializer.Jackson2JsonRedisSerializer;
import org.springframework.data.redis.serializer.StringRedisSerializer;
import org.springframework.data.redis.stream.StreamMessageListenerContainer;

import java.nio.charset.StandardCharsets;
import java.time.Duration;

@Configuration
@Slf4j
public class RedisStreamConfig {

    @Bean
    public RedisTemplate<String, Object> redisTemplate(RedisConnectionFactory factory, ObjectMapper objectMapper) {
        RedisTemplate<String, Object> template = new RedisTemplate<>();
        template.setConnectionFactory(factory);
        // Serialize values with Jackson (the default is JDK serialization)
        Jackson2JsonRedisSerializer<Object> serializer = new Jackson2JsonRedisSerializer<>(objectMapper, Object.class);
        template.setValueSerializer(serializer);
        template.setHashValueSerializer(serializer);
        // Serialize keys as plain strings
        template.setKeySerializer(new StringRedisSerializer());
        template.setHashKeySerializer(new StringRedisSerializer());
        return template;
    }

    @Bean(destroyMethod = "stop")
    public StreamMessageListenerContainer<String, ObjectRecord<String, String>> streamMessageListenerContainer(
            RedisConnectionFactory redisConnectionFactory,
            ProductStreamListener productStreamListener,
            StreamProperties streamProperties) {
        // Each poll fetches up to 10 messages and waits at most 5s when there are none
        StreamMessageListenerContainer.StreamMessageListenerContainerOptions<String, ObjectRecord<String, String>> options =
                StreamMessageListenerContainer.StreamMessageListenerContainerOptions.builder()
                        .pollTimeout(Duration.ofSeconds(5)) // how long a poll blocks waiting for messages
                        .batchSize(10)                      // messages fetched per poll
                        .targetType(String.class)
                        .build();
        // Create the listener container
        StreamMessageListenerContainer<String, ObjectRecord<String, String>> container =
                StreamMessageListenerContainer.create(redisConnectionFactory, options);

        // Create the consumer group if it does not exist yet
        try (RedisConnection connection = redisConnectionFactory.getConnection()) {
            connection.streamCommands().xGroupCreate(
                    streamProperties.getProductKey().getBytes(StandardCharsets.UTF_8),
                    streamProperties.getProductGroup(),
                    ReadOffset.from("0-0"), // start from the beginning of the stream (if the stream does not exist yet, "$" and "0" behave the same)
                    true // MKSTREAM: create the stream if it does not exist
            );
        } catch (Exception e) {
            // XGROUP CREATE fails with BUSYGROUP when the group already exists; that can be ignored
            log.warn("group may already exist on stream:{},error:{}", streamProperties.getProductKey(),
                    NestedExceptionUtils.getMostSpecificCause(e).getMessage());
        }

        // ReadOffset.lastConsumed() maps to the special ID ">": a consumer only receives messages
        // that have never been delivered to any consumer in the group.
        // Reading with an explicit ID such as "0-0" instead returns that consumer's own pending
        // (delivered but not yet acknowledged) messages.
        // Note that XACK only removes a message from the PEL. Redis never deletes stream entries by itself:
        // they stay until they are trimmed (MAXLEN/XTRIM) or deleted (XDEL), which is what makes multiple
        // consumer groups and message replay possible. Unacknowledged messages also stay pending forever.
        try {
            // Register the consumers dynamically
            for (String consumerName : streamProperties.getProductConsumers()) {
                StreamOffset<String> offset = StreamOffset.create(streamProperties.getProductKey(), ReadOffset.lastConsumed());
                StreamMessageListenerContainer.StreamReadRequest<String> streamReadRequest =
                        StreamMessageListenerContainer.StreamReadRequest.builder(offset)
                                .consumer(Consumer.from(streamProperties.getProductGroup(), consumerName))
                                .autoAcknowledge(false)
                                .errorHandler(error -> log.error("stream consumption error:", error))
                                .cancelOnError(e -> false)
                                .build();
                // Register the listener for this consumer
                container.register(streamReadRequest, new ConsumerAwareStreamListener(consumerName, productStreamListener));
            }
        } catch (Exception e) {
            log.error("failed to register stream consumers", e);
        }
        container.start();
        return container;
    }

    @Bean
    public CommandLineRunner streamPendingHandlerRunner(StreamPendingHandler streamPendingHandler) {
        return args -> streamPendingHandler.handlePendingMessages();
    }
}
```

4. Implementing the stream listener

To tell which consumer handled a message, I added a wrapper class that passes the consumer name to the actual listener.

- The listener implementation:

```java
import com.fasterxml.jackson.databind.ObjectMapper;
import com.loo.product.entity.Product;
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.data.redis.connection.stream.ObjectRecord;
import org.springframework.data.redis.connection.stream.RecordId;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.data.redis.stream.StreamListener;
import org.springframework.stereotype.Component;

//@Scope(scopeName =ConfigurableBeanFactory.SCOPE_PROTOTYPE)
@Component
@Slf4j
public class ProductStreamListener implements StreamListener<String, ObjectRecord<String, String>> {

    @Resource
    private ObjectMapper objectMapper;

    @Resource
    private StringRedisTemplate stringRedisTemplate;

    @Override
    public void onMessage(ObjectRecord<String, String> message) {
        try {
            RecordId recordId = message.getId();
            log.info("Received message with ID: {}", recordId.getValue());
            // Deserialize the message body
            Product product = objectMapper.readValue(message.getValue(), Product.class);
            //TODO handle the business logic with product
            // Acknowledge manually; otherwise the message stays pending and its ID remains in the PEL (visible via XPENDING).
            // The group name must match app.redis.stream.product-group.
            stringRedisTemplate.opsForStream().acknowledge("product-group", message);
        } catch (Exception e) {
            log.error("Error processing message", e);
        }
    }

    public void onMessageWithConsumer(ObjectRecord<String, String> message, String consumerName) {
        log.info("Received message with Consumer: {}", consumerName);
        onMessage(message);
    }
}
```

- The wrapper class:

```java
import org.springframework.data.redis.connection.stream.ObjectRecord;
import org.springframework.data.redis.stream.StreamListener;

public class ConsumerAwareStreamListener implements StreamListener<String, ObjectRecord<String, String>> {

    private final String consumerName;

    private final ProductStreamListener delegate;

    public ConsumerAwareStreamListener(String consumerName, ProductStreamListener delegate) {
        this.consumerName = consumerName;
        this.delegate = delegate;
    }

    @Override
    public void onMessage(ObjectRecord<String, String> message) {
        delegate.onMessageWithConsumer(message, consumerName);
    }
}
```

5. Handling PEL data (consumption compensation)

```java
import com.fasterxml.jackson.databind.ObjectMapper;
import com.loo.product.entity.Product;
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.data.domain.Range;
import org.springframework.data.redis.connection.stream.*;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.stereotype.Component;

import java.util.List;

@Slf4j
@Component
public class StreamPendingHandler {

    @Resource
    private StringRedisTemplate stringRedisTemplate;

    @Resource
    private StreamProperties streamProperties;

    @Resource
    private ObjectMapper objectMapper;

    public void handlePendingMessages() {
        int count = 0;
        try {
            for (String consumer : streamProperties.getProductConsumers()) {
                // Query this consumer's pending messages over the full range (- +), i.e. the extended form
                // XPENDING key group [[IDLE min-idle-time] start end count [consumer]]
                PendingMessages pendingMessages = stringRedisTemplate.opsForStream()
                        .pending(streamProperties.getProductKey(),
                                Consumer.from(streamProperties.getProductGroup(), consumer),
                                Range.unbounded(), Integer.MAX_VALUE);
                if (pendingMessages.isEmpty()) {
                    // Nothing pending for this consumer; move on to the next one
                    continue;
                }
                for (PendingMessage pending : pendingMessages) {
                    String messageId = pending.getIdAsString();
                    // XRANGE key start end [COUNT count], e.g. XRANGE product-stream 1750696771676-0 1750696771676-0
                    // fetches exactly this entry from the stream.
                    // recordList may be empty, e.g. when the entry was trimmed away, or evicted because the
                    // producer set a length limit and the stream grew past it.
                    List<MapRecord<String, Object, Object>> recordList = stringRedisTemplate.opsForStream()
                            .range(streamProperties.getProductKey(), Range.just(messageId));
                    for (MapRecord<String, Object, Object> record : recordList) {
                        try {
                            count++;
                            // Business logic
                            log.info("[PENDING compensation] handling id:{}", record.getId());
                            Product product = objectMapper.readValue(record.getValue().get("payload").toString(), Product.class);
                            // Acknowledge manually
                            stringRedisTemplate.opsForStream().acknowledge(streamProperties.getProductGroup(), record);
                        } catch (Exception e) {
                            log.error("[PENDING compensation] failed to handle message", e);
                        }
                    }
                }
            }
            log.info("total pending messages handled:{}", count);
        } catch (Exception e) {
            log.error("[PENDING compensation] scan failed", e);
        }
    }
}
```
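As the comment in the handler notes, a pending ID can outlive its entry: once the entry has been trimmed or deleted, `XRANGE` returns nothing and the ID would otherwise sit in the PEL forever. A minimal sketch of cleaning those up from Python, assuming `r` is a redis-py client created with `decode_responses=True`; the function name and the `batch` parameter are hypothetical:

```python
def ack_orphaned_pending(r, stream, group, consumer, batch=100):
    """Acknowledge pending IDs whose entries no longer exist in the stream.

    Returns the list of IDs that were acknowledged.
    """
    # XPENDING stream group - + batch consumer
    pending = r.xpending_range(stream, group, min="-", max="+",
                               count=batch, consumername=consumer)
    orphaned = []
    for item in pending:
        msg_id = item["message_id"]
        # XRANGE stream id id: empty when the entry was trimmed or deleted
        if not r.xrange(stream, min=msg_id, max=msg_id):
            orphaned.append(msg_id)
    if orphaned:
        # XACK removes the dangling IDs from the PEL
        r.xack(stream, group, *orphaned)
    return orphaned
```

Whether dropping such IDs silently is acceptable depends on the business; logging them or sending them to a dead-letter store first may be the safer choice.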

6. A scheduled task that trims the stream

As mentioned earlier, Redis keeps stream messages permanently whether or not they have been acknowledged, unless a maximum length is set or you run XTRIM yourself. I do not set MAXLEN here, so a scheduled task scans the stream and runs XTRIM.

```java
import jakarta.annotation.Resource;
import lombok.extern.slf4j.Slf4j;
import org.springframework.data.redis.connection.stream.StreamInfo;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

import java.util.concurrent.TimeUnit;

// Note: @Scheduled only fires when scheduling is enabled (@EnableScheduling) in the application.
@Component
@Slf4j
public class StreamTrimTask {

    // Runs periodically and trims the stream. It first sums the pending count of every consumer;
    // the length kept must not be lower than the pending count, to avoid losing messages.
    @Resource
    private StringRedisTemplate stringRedisTemplate;

    @Resource
    private StreamProperties streamProperties;

    @Scheduled(fixedRate = 1, initialDelay = 1, timeUnit = TimeUnit.HOURS)
    public void delStream() {
        StreamInfo.XInfoConsumers consumers = stringRedisTemplate.opsForStream()
                .consumers(streamProperties.getProductKey(), streamProperties.getProductGroup());
        // With no consumers, do not trim: messages added now will be consumed from the start once a consumer joins
        if (consumers.isEmpty()) {
            log.info("no consumers, skipping trim. stream:{},group:{}", streamProperties.getProductKey(), streamProperties.getProductGroup());
            return;
        }
        long pendingCount = consumers.stream()
                .mapToLong(StreamInfo.XInfoConsumer::pendingCount)
                .sum();
        // Alternative: take the PEL length directly from XPENDING.
        //long pendingCount = stringRedisTemplate.opsForStream().pending(streamProperties.getProductKey(), streamProperties.getProductGroup()).getTotalPendingMessages();
        // Approximate trimming for better performance
        stringRedisTemplate.opsForStream().trim(streamProperties.getProductKey(), pendingCount + 10, true);
    }
}
```
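One caveat with a length-based trim: `XTRIM ... MAXLEN` keeps the newest entries, while pending entries tend to be the oldest ones, so a backlog of undelivered messages larger than the margin can still push pending entries out. An alternative is to trim by ID with `XTRIM ... MINID`, which only evicts entries older than a given ID. The sketch below assumes Redis 6.2 or later, a redis-py 4+ client `r` created with `decode_responses=True`, and a single consumer group on the stream; the function name is hypothetical:

```python
def trim_below_oldest_pending(r, stream, group):
    """Trim entries older than everything the group still needs.

    Returns the number of entries removed.
    """
    summary = r.xpending(stream, group)  # XPENDING stream group (summary form)
    if summary["pending"] > 0:
        # Oldest delivered-but-unacknowledged ID: keep it and everything newer
        threshold = summary["min"]
    else:
        # Nothing pending: everything up to the last delivered ID was acknowledged
        info = next(g for g in r.xinfo_groups(stream) if g["name"] == group)
        threshold = info["last-delivered-id"]
    # XTRIM stream MINID threshold: evicts only IDs lower than the threshold
    return r.xtrim(stream, minid=threshold, approximate=False)
```

With several groups on the same stream, the threshold would have to be the smallest value across all of them.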

7. A simulated message producer

```java
import com.fasterxml.jackson.databind.ObjectMapper;
import com.loo.product.entity.Product;
import lombok.extern.slf4j.Slf4j;
import org.springframework.beans.factory.annotation.Autowired;
import org.springframework.data.redis.connection.stream.ObjectRecord;
import org.springframework.data.redis.connection.stream.RecordId;
import org.springframework.data.redis.core.StringRedisTemplate;
import org.springframework.scheduling.annotation.Scheduled;
import org.springframework.stereotype.Component;

@Component
@Slf4j
public class ProductProducer {

    private final ObjectMapper objectMapper;

    private final StringRedisTemplate redisTemplate;

    @Autowired
    public ProductProducer(ObjectMapper objectMapper, StringRedisTemplate redisTemplate) {
        this.objectMapper = objectMapper;
        this.redisTemplate = redisTemplate;
    }

    @Scheduled(fixedRate = 5000)
    public void sendMessage() throws Exception {
        Product product = new Product();
        product.setProductId(1L);
        product.setName("Example Product" + System.currentTimeMillis());
        product.setDescription("我是一只小猫咪,我会非,飞啊飞,要到☁️干🦅");
        ObjectRecord<String, String> objectRecord = ObjectRecord.create("product-stream", objectMapper.writeValueAsString(product));
        // Add the record to the stream. If a maximum length is set here, older entries are evicted
        // automatically once it is exceeded. The length refers to the stream itself, not to the PEL.
        // So with MAXLEN set, if producers keep producing and messages are not XACKed, the PEL can grow
        // larger than MAXLEN. The pending IDs whose entries were evicted are left dangling: their content
        // can no longer be read and processed. Put bluntly, those messages are lost.
        // So do not set MAXLEN; depending on the business, XDEL acknowledged messages or clean up
        // consumed messages periodically instead.
        RecordId recordId = redisTemplate.opsForStream().add(objectRecord);//, RedisStreamCommands.XAddOptions.maxlen(20)
        log.info("Sent message with ID: {}", recordId.getValue());
    }
}
```
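The producer writes each product as one JSON string, and the pending handler above reads it back from a field named `payload`. A consumer in another language can decode an entry the same way. A small sketch, assuming the entry's fields arrive as a dict of strings (as redis-py returns them with `decode_responses=True`); the function name is hypothetical:

```python
import json


def decode_product(fields):
    """Turn the fields of one stream entry into a dict describing the product."""
    raw = fields.get("payload")
    if raw is None:
        raise ValueError(f"entry has no 'payload' field: {sorted(fields)}")
    # The value is the JSON document written by the Java producer
    return json.loads(raw)
```

For example, `decode_product({"payload": '{"productId": 1, "name": "demo"}'})["name"]` evaluates to `"demo"`.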

A consumer written in Python

```python
import redis
import threading
import time
import json

REDIS_HOST = 'localhost'
REDIS_PORT = 16379
STREAM_KEY = 'product-stream'
GROUP = 'product-group'
BLOCK_TIME = 2000  # milliseconds


def process_message(message_id, data, consumer):
    # Business logic goes here
    print(f"[{consumer}] consumed message: id={message_id}, data={data}")


def consumer_loop(consumer):
    r = redis.Redis(host=REDIS_HOST, port=REDIS_PORT, decode_responses=True)
    # Make sure the group exists
    try:
        r.xgroup_create(STREAM_KEY, GROUP, id='0-0', mkstream=True)
    except redis.exceptions.ResponseError as e:
        # BUSYGROUP means the group already exists
        if "BUSYGROUP" not in str(e):
            raise
    while True:
        try:
            # 1. Handle pending messages first
            # handle_pending(r, consumer)
            # 2. Fetch new messages
            resp = r.xreadgroup(GROUP, consumer, {STREAM_KEY: '>'}, count=10, block=BLOCK_TIME)
            for stream, messages in resp:
                for msg_id, fields in messages:
                    process_message(msg_id, fields, consumer)
                    r.xack(STREAM_KEY, GROUP, msg_id)
        except Exception as e:
            print(f"[{consumer}] consumption error: {e}")
            time.sleep(1)


if __name__ == '__main__':
    threads = []
    consumer = input("Enter the consumer name: ")
    if not consumer:
        print("The consumer name must not be empty")
        raise SystemExit(1)
    t = threading.Thread(target=consumer_loop, args=(consumer,), daemon=True)
    t.start()
    threads.append(t)
    print("Consumer started, press Ctrl+C to exit")
    while True:
        time.sleep(10)
```
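The loop above mentions a `handle_pending` step but does not define it. One possible shape is sketched here; it relies on the fact that `XREADGROUP` with an explicit ID instead of `>` returns the calling consumer's own pending entries. The `handler`, `stream`, `group` and `count` parameters and their defaults are assumptions for illustration, and `r` is assumed to be a redis-py client created with `decode_responses=True`:

```python
def handle_pending(r, consumer, handler=print, stream="product-stream",
                   group="product-group", count=10):
    """Re-process entries delivered to `consumer` but never acknowledged.

    Returns the number of entries handled and acknowledged.
    """
    handled = 0
    cursor = "0"  # an explicit ID makes XREADGROUP replay this consumer's PEL
    while True:
        resp = r.xreadgroup(group, consumer, {stream: cursor}, count=count)
        messages = resp[0][1] if resp else []
        if not messages:
            return handled
        for msg_id, fields in messages:
            cursor = msg_id  # move forward even if the handler fails
            try:
                handler(msg_id, fields, consumer)
            except Exception as exc:
                # Leave the entry pending so that a later pass can retry it
                print(f"[{consumer}] pending entry {msg_id} failed: {exc}")
                continue
            r.xack(stream, group, msg_id)
            handled += 1
```

In the consumer loop this could be called as `handle_pending(r, consumer, handler=process_message)` before fetching new messages. Running it on every iteration adds one extra round trip, so calling it once at startup may be enough.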

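Frequently asked questions

- Do the stream and the group have to be created in advance?

Yes. In practice the code usually creates them automatically, as the code above does. Concurrency is not a concern, because the Redis server makes the decision: a stream must have a unique key, and a group name cannot be repeated within a stream (a second attempt to create it fails with BUSYGROUP).

- Do consumers have to be created in advance?

Usually not. When a client reads with a consumer name, the Redis server creates that consumer automatically, whatever language the client is written in. Consumer names should be unique within a group, because Redis treats clients that use the same name as one and the same consumer. Different groups can reuse the same names.

- When the stream is trimmed with MAXLEN or XTRIM, are messages that have not been consumed yet (or for which there are no consumers at all) removed?

Yes. Trimming does not look at the state of a message. It only considers the length of the stream and physically deletes entries. Choose the trimming strategy according to the actual business so that no messages are lost.

- Is it a problem to run the consumers and the trimming task above concurrently in a distributed environment?

No. First, within one group each message of a stream is delivered to only one consumer; the Redis server guarantees this and never hands the same new message to several clients at once. Trimming is not affected by running more than once either: the server works out how much there is to trim, and repeated calls merely return different results.

- In what order do multiple consumers get messages?

Basically FIFO: among several listening consumers, whichever reaches the server first gets the messages first. Different clients can implement other strategies on top of this, such as round-robin or weighting, because a Redis stream is fundamentally pull-based, not push-based.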

