Binary classification with LightGBM

import pandas as pd

import numpy as np

import lightgbm as lgb

from sklearn.model_selection import StratifiedKFold

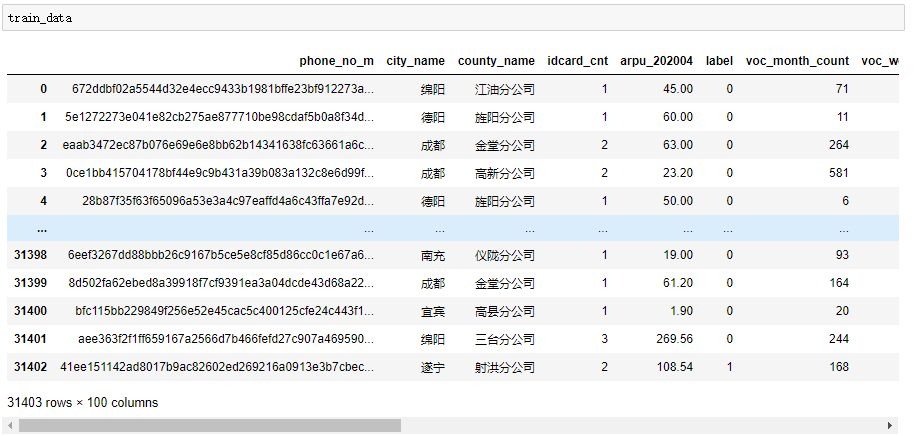

train_data=pd.read_csv(r'C:\Users\win10\Desktop\诈骗电话分月数据\trainfinal.csv',dtype={'city_name': 'category', 'county_name': 'category'})

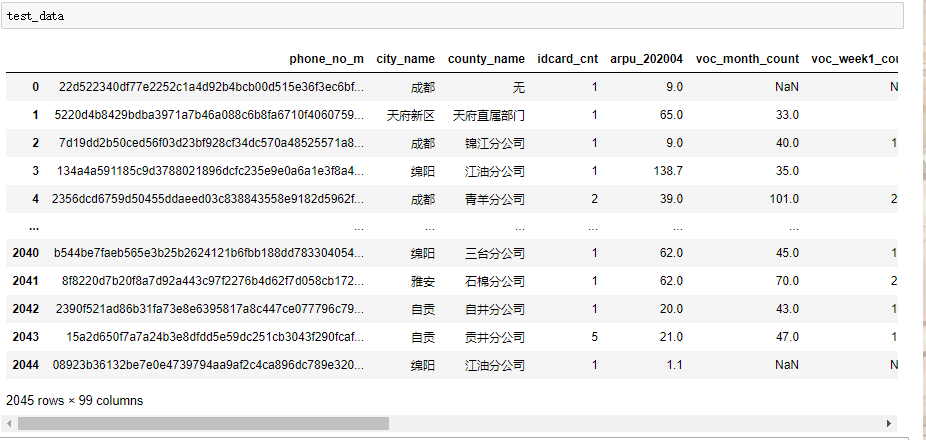

test_data=pd.read_csv(r'C:\Users\win10\Desktop\诈骗电话分月数据\testfinal.csv',encoding='GBK',dtype={'city_name': 'category', 'county_name': 'category'})

Compared with test_data, train_data has an extra label column:

train_label = train_data['label']  # extract the target y
train_data.drop(['phone_no_m', 'label'], axis=1, inplace=True)  # drop y and the irrelevant identifier column

# Evaluation metric for cross-validation (an extra one, in addition to AUC)
from sklearn.metrics import f1_score

def culatescore(predict, real):
    f1 = f1_score(real, predict, average='macro')
    scores.append(f1)
    return scores

# Set the parameters
params = {
    'objective': 'binary',
    'metric': 'auc',
    'num_leaves': 31,
    'max_bin': 50,
    'max_depth': 6,
    "learning_rate": 0.02,
    "colsample_bytree": 0.8,  # fraction of features randomly selected in each iteration
    "bagging_fraction": 0.8,  # fraction of the data used in each iteration (only takes effect when bagging_freq > 0)
    'min_child_samples': 25,
    'n_jobs': -1,
    'silent': True,  # when set to True, no log output is printed
    'seed': 1000,
}

# Re-initialise every list each time this whole block is run
results = []           # 0/1 predictions on the validation fold, used to compute F1
bigtestresults = []    # test-set predictions collected over all folds
smalltestresults = []  # test-set predictions of the current fold
scores = []            # F1 score of each fold

cat = ["city_name", "county_name"]  # categorical features, passed as categorical_feature=cat
kf = StratifiedKFold(n_splits=3, shuffle=True, random_state=123)  # stratified cross-validation
x, y = pd.DataFrame(train_data), pd.DataFrame(train_label)  # make both DataFrames so that iloc can be used below

for i, (train_index, valid_index) in enumerate(kf.split(x, y)):  # y must be passed here for stratification
    print("Fold", i + 1)
    # Select the data of this fold
    x_train, y_train = x.iloc[train_index], y.iloc[train_index]
    x_valid, y_valid = x.iloc[valid_index], y.iloc[valid_index]
    lgb_train = lgb.Dataset(x_train, y_train, categorical_feature=cat, silent=True)
    lgb_eval = lgb.Dataset(x_valid, y_valid, reference=lgb_train, categorical_feature=cat, silent=True)
    # verbose_eval: print the metric every N iterations
    # early_stopping_rounds: stop when the score has not improved for this many rounds
    # (verbose_eval, early_stopping_rounds and silent belong to the LightGBM < 4.0 API)
    gbm = lgb.train(params, lgb_train, num_boost_round=400, valid_sets=[lgb_train, lgb_eval],
                    categorical_feature=cat, verbose_eval=100, early_stopping_rounds=200)
    # categorical_feature: LightGBM can handle nominal (categorical) data directly; this argument tells it
    # which features are categorical, so they do not need to be expanded into one-hot codes.
    # For features with few categories it tries one-vs-others splits to find the best category value;
    # for features with many categories it groups the categories instead.
    # bagging_fraction: use it together with bagging_freq to get results faster.
    vaild_preds = gbm.predict(x_valid, num_iteration=gbm.best_iteration)
    # Predict on the test set
    test_pre = gbm.predict(test_data.iloc[:, 1:], num_iteration=gbm.best_iteration)
    threshold = 0.45  # decision threshold

    # Binarise the test-set predictions of this fold, then collect them in bigtestresults
    smalltestresults = []
    for w in test_pre:
        temp = 1 if w > threshold else 0
        smalltestresults.append(temp)
    bigtestresults.append(smalltestresults)

    # Binarise the validation predictions of this fold, then evaluate F1
    results = []
    for pred in vaild_preds:
        result = 1 if pred > threshold else 0
        results.append(result)
    c = culatescore(results, y_valid)
    print(c)

print('---N-fold cross-validation score---')
print(np.average(c))

# Turn the collected test-set predictions into a DataFrame (one row per fold, one column per sample)
# and take the most frequent class of each sample as the final prediction.
finalpres = pd.DataFrame(bigtestresults)
finaltask = []
lss = []  # the final result
for i in finalpres.columns:
    temp1 = finalpres.iloc[:, i].value_counts().index[0]
    lss.append(temp1)
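The thresholding and majority-vote steps at the end of the code above can be tried in isolation. The following is a minimal sketch in plain Python, not the author's code: the helper names `binarize` and `majority_vote` and the probabilities are made up for illustration, and only the 0.45 threshold is taken from the text.

```python
from collections import Counter

def binarize(probabilities, threshold=0.45):
    """Turn predicted probabilities into 0/1 labels (1 if p > threshold)."""
    return [1 if p > threshold else 0 for p in probabilities]

def majority_vote(fold_labels):
    """fold_labels holds one list of 0/1 labels per fold, all of equal length.

    Returns, for each sample, the label predicted by most folds.
    """
    voted = []
    for sample_votes in zip(*fold_labels):
        # most_common(1) returns [(label, count)] for the most frequent label
        voted.append(Counter(sample_votes).most_common(1)[0][0])
    return voted

# Made-up probabilities from three folds for four test samples
fold_probabilities = [
    [0.90, 0.10, 0.50, 0.30],
    [0.80, 0.20, 0.40, 0.60],
    [0.70, 0.05, 0.46, 0.20],
]
fold_labels = [binarize(p) for p in fold_probabilities]
final_labels = majority_vote(fold_labels)
print(final_labels)
```

With an odd number of folds a binary vote cannot tie, which is one reason to prefer 3 or 5 folds when combining predictions this way.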

---------- The above is a simple binary-classification example, without any parameter tuning yet ----------

Tuning code:

---------- Plain manual grid-search tuning: only suitable for datasets that are fully numeric, with no categorical features ----------

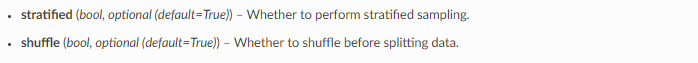

# Set the initial parameters
params = {
    'boosting_type': 'gbdt',
    'objective': 'binary',
    'metric': 'auc',
    'n_jobs': -1,
    'learning_rate': 0.1,  # start with a larger value for tuning; in the end it should be as small as practical
    'num_leaves': 250,
    'max_depth': 8,
    'subsample': 0.8,
    'colsample_bytree': 0.8
}
# First tune n_estimators (the number of boosting iterations / residual trees); set nfold as large as is practical
lgb_train = lgb.Dataset(x, y, categorical_feature=cat, silent=True)
cv_results = lgb.cv(params, lgb_train, num_boost_round=1000, nfold=4, stratified=False, shuffle=True,
                    metrics='auc', early_stopping_rounds=50, seed=0)
print('best n_estimators:', len(cv_results['auc-mean']))
print('best cv score:', pd.Series(cv_results['auc-mean']).max())

best n_estimators: 325
best cv score: 0.9776274857159668
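The early_stopping_rounds setting used in the call above stops boosting once the validation score has gone a given number of rounds without improving. The patience rule itself can be sketched on a plain list of scores. This is an illustration under stated assumptions, not LightGBM's implementation: the function name `early_stopping_round` and the AUC values are hypothetical.

```python
def early_stopping_round(scores, patience):
    """Return (best_round, best_score), with rounds counted from 1.

    Scanning stops once `patience` consecutive rounds fail to beat
    the best score seen so far (higher is better, as with AUC).
    """
    best_round, best_score = 0, float("-inf")
    for round_no, score in enumerate(scores, start=1):
        if score > best_score:
            best_round, best_score = round_no, score
        elif round_no - best_round >= patience:
            break
    return best_round, best_score

# Made-up mean AUC per boosting round
auc_per_round = [0.90, 0.93, 0.95, 0.949, 0.948, 0.96, 0.947]
best_round, best_auc = early_stopping_round(auc_per_round, patience=2)
print(best_round, best_auc)
```

In this toy series a patience of 2 stops before the later peak at round 6 is reached, while a patience of 3 would find it. That is the trade-off behind picking a value such as 50 for a run of up to 1000 rounds.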

Then tune with grid search

# Tune max_depth / num_leaves
from sklearn.model_selection import GridSearchCV
from sklearn.model_selection import KFold

kf = KFold(n_splits=2, shuffle=True, random_state=123)  # KFold used for the grid search and cross-validation below
params_test1 = {'max_depth': range(5, 8, 1), 'num_leaves': range(10, 60, 10)}
gsearch1 = GridSearchCV(
    estimator=lgb.LGBMClassifier(boosting_type='gbdt', objective='binary', metrics='auc', learning_rate=0.1,
                                 n_estimators=325, max_depth=8, bagging_fraction=0.8, feature_fraction=0.8),
    param_grid=params_test1, scoring='roc_auc', cv=kf, n_jobs=-1)
gsearch1.fit(x, y)
gsearch1.best_params_, gsearch1.best_score_

({'max_depth': 7, 'num_leaves': 50}, 0.9643225388549975)
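A binary tree of depth d has at most 2^d leaves, so grid points whose num_leaves is not below 2^max_depth add nothing beyond what the depth limit already allows. A small sketch of pruning such combinations before the search, using the same ranges as the grid above (the variable names are only for illustration):

```python
from itertools import product

max_depths = range(5, 8, 1)
num_leaves_options = range(10, 60, 10)

# Keep only combinations where num_leaves is below 2^max_depth
grid = [
    {"max_depth": depth, "num_leaves": leaves}
    for depth, leaves in product(max_depths, num_leaves_options)
    if leaves < 2 ** depth
]
print(len(grid), "of", len(max_depths) * len(num_leaves_options), "combinations kept")
```

Here only max_depth=5 paired with 40 or 50 leaves is dropped. The saving grows quickly when shallow depths are combined with wide num_leaves ranges.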

Continue in the same way for the remaining parameters.

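Tuning order (parameters with the same number are tuned together):

max_depth: 1
num_leaves: 1
min_data_in_leaf: 2
max_bin: 2
feature_fraction: 3. The fraction of features randomly sampled when building each weak learner; default 1. Recommended candidates: [0.6, 0.7, 0.8, 0.9, 1]
bagging_fraction: 3
bagging_freq: 3
min_child_samples: 4. The minimum number of samples in a leaf (an alias of min_data_in_leaf); default 20. Used to prevent overfitting.
min_child_weight: 4. The minimum sum of instance weights (Hessian) required in a child node; if the sum in a leaf falls below min_child_weight, splitting stops there. The LightGBM default is 1e-3. Recommended candidates: [1, 3, 5, 7]
lambda_l1: 5
lambda_l2: 5
min_gain_to_split: 6. The minimum loss reduction required to split a leaf node; default 0. The larger the value, the more conservative the model. Recommended candidates: [0, 0.05 ~ 0.1, 0.3, 0.5, 0.7, 0.9, 1]

Fixed in the initial parameters: learning_rate, boosting_type, objective, metric, n_jobs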
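The step-by-step order above amounts to a staged search: each step grid-searches its own parameters while the winners of earlier steps stay fixed. A minimal sketch of that control flow follows. `staged_search` and `toy_score` are hypothetical names; `toy_score` is a made-up stand-in for a cross-validated AUC so that the example runs without any data.

```python
from itertools import product

def staged_search(stages, base_params, score):
    """Tune parameters stage by stage.

    stages: list of dicts mapping parameter name -> candidate values.
    score:  callable(params) -> float, higher is better.
    Each stage tries every combination of its own candidates while
    keeping the winners of the earlier stages fixed.
    """
    params = dict(base_params)
    best_score = float("-inf")
    for stage in stages:
        names = list(stage)
        best_combo = None
        for combo in product(*(stage[name] for name in names)):
            trial = {**params, **dict(zip(names, combo))}
            trial_score = score(trial)
            if trial_score > best_score:
                best_score, best_combo = trial_score, combo
        if best_combo is not None:
            # Carry the winners of this stage into the next one
            params.update(zip(names, best_combo))
    return params, best_score

def toy_score(p):
    # Hypothetical objective that peaks at max_depth=6, num_leaves=30,
    # feature_fraction=0.8; a real run would return a CV metric instead.
    return -(abs(p.get("max_depth", 3) - 6)
             + abs(p.get("num_leaves", 10) - 30) / 10
             + abs(p.get("feature_fraction", 1.0) - 0.8))

stages = [
    {"max_depth": [5, 6, 7], "num_leaves": [10, 20, 30, 40]},
    {"feature_fraction": [0.6, 0.7, 0.8, 0.9, 1.0]},
]
best_params, best_score = staged_search(stages, {"learning_rate": 0.1}, toy_score)
print(best_params)
```

A staged search costs the sum of the stage sizes rather than their product, at the price of possibly missing interactions between parameters tuned in different stages.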

---------- Fully automatic tuning, with categorical_feature=cat. Note: if categorical_feature is used, the data must be reloaded in every loop iteration, otherwise the data goes stale. ----------

# This tuning script assumes you have plenty of time to run it
import pandas as pd
import lightgbm as lgb
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split  # optional

canceData = load_breast_cancer()
X = canceData.data
y = canceData.target
X_train, X_test, y_train, y_test = train_test_split(X, y, random_state=0, test_size=0.2)

### Initial parameters (excluding the ones tuned by cross-validation)
print('Setting parameters')
params = {
    'boosting_type': 'gbdt',
    'objective': 'binary',
    'metric': 'auc',
    'n_jobs': -1,
    'learning_rate': 0.1
}

def cv_auc(trial_params):
    ### Data conversion
    # Build a fresh Dataset for every run: dataset-level parameters such as max_bin
    # cannot be changed once a Dataset has been constructed.
    lgb_train = lgb.Dataset(X_train, y_train)
    # early_stopping_rounds and verbose_eval are lgb.cv arguments in LightGBM < 4.0
    cv_results = lgb.cv(trial_params, lgb_train, seed=1, nfold=2, metrics=['auc'],
                        early_stopping_rounds=10, verbose_eval=True)
    return pd.Series(cv_results['auc-mean']).max()

### Cross-validation (tuning)
print('Cross-validation')
max_auc = 0.0
best_params = {}

# Accuracy
print("Tuning 1: improve accuracy")
for num_leaves in range(5, 100, 5):    # number of leaves, default 31; should be less than 2^max_depth
    for max_depth in range(3, 8, 1):   # maximum tree depth, default -1 (no limit); a sensible value helps prevent overfitting
        mean_auc = cv_auc(dict(params, num_leaves=num_leaves, max_depth=max_depth))
        if mean_auc >= max_auc:
            max_auc = mean_auc
            best_params['num_leaves'] = num_leaves
            best_params['max_depth'] = max_depth
params.update(best_params)  # carry the winners found so far into the next stage

# Overfitting
print("Tuning 2: reduce overfitting")
for max_bin in range(5, 256, 10):               # maximum number of bins used to bucket feature values
    for min_data_in_leaf in range(1, 102, 10):  # minimum number of samples in each leaf
        mean_auc = cv_auc(dict(params, max_bin=max_bin, min_data_in_leaf=min_data_in_leaf))
        if mean_auc >= max_auc:
            max_auc = mean_auc
            best_params['max_bin'] = max_bin
            best_params['min_data_in_leaf'] = min_data_in_leaf
params.update(best_params)

print("Tuning 3: reduce overfitting")
for feature_fraction in [0.6, 0.7, 0.8, 0.9, 1.0]:      # default 1; fraction of features used in each iteration
    for bagging_fraction in [0.6, 0.7, 0.8, 0.9, 1.0]:  # default 1; fraction of data used in each iteration, usually to speed up training and avoid overfitting
        for bagging_freq in range(0, 50, 5):
            mean_auc = cv_auc(dict(params, feature_fraction=feature_fraction,
                                   bagging_fraction=bagging_fraction, bagging_freq=bagging_freq))
            if mean_auc >= max_auc:
                max_auc = mean_auc
                best_params['feature_fraction'] = feature_fraction
                best_params['bagging_fraction'] = bagging_fraction
                best_params['bagging_freq'] = bagging_freq
params.update(best_params)

print("Tuning 4: reduce overfitting")
for lambda_l1 in [1e-5, 1e-3, 1e-1, 0.0, 0.1, 0.3, 0.5, 0.7, 0.9, 1.0]:
    for lambda_l2 in [1e-5, 1e-3, 1e-1, 0.0, 0.1, 0.4, 0.6, 0.7, 0.9, 1.0]:
        mean_auc = cv_auc(dict(params, lambda_l1=lambda_l1, lambda_l2=lambda_l2))
        if mean_auc >= max_auc:
            max_auc = mean_auc
            best_params['lambda_l1'] = lambda_l1
            best_params['lambda_l2'] = lambda_l2
params.update(best_params)

print("Tuning 5: reduce overfitting 2")
for min_split_gain in [0.0, 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, 1.0]:
    # minimum loss reduction required to split a leaf node, default 0; the larger the value, the more conservative the model
    mean_auc = cv_auc(dict(params, min_split_gain=min_split_gain))
    if mean_auc >= max_auc:
        max_auc = mean_auc
        best_params['min_split_gain'] = min_split_gain
params.update(best_params)

print(best_params)

{'num_leaves': 5, 'max_depth': 7, 'max_bin': 255, 'min_data_in_leaf': 41, 'feature_fraction': 0.7, 'bagging_fraction': 0.7, 'bagging_freq': 45, 'lambda_l1': 0.5, 'lambda_l2': 0.0, 'min_split_gain': 0.1}
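When the best values are copied from best_params back into params between stages, each key has to be tested on its own. An expression of the form `'a' and 'b' in d` looks as if it checks both keys, but Python reads it as `'a' and ('b' in d)`, so only the last key is tested. A short demonstration with a made-up dictionary:

```python
best_params = {"max_depth": 7}  # note: there is no "num_leaves" key

# Parsed as: "num_leaves" and ("max_depth" in best_params).
# A non-empty string is truthy, so only the last key is actually tested.
loose_check = bool("num_leaves" and "max_depth" in best_params)

# Testing every key explicitly
strict_check = all(key in best_params for key in ("num_leaves", "max_depth"))

print(loose_check, strict_check)
```

The loose form passes even though "num_leaves" is missing, which would lead to a KeyError on the following lookup.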

If you want to go further, you can also single out a few parameters and search them more finely:

### Initial parameters (excluding the ones tuned by cross-validation)
print('Setting parameters')
params = {
    'boosting_type': 'gbdt',
    'objective': 'binary',
    'metric': 'auc',
    'n_jobs': -1,
    'learning_rate': 0.1
}

print('Cross-validation')
max_auc = 0.0
best_params = {}

for num_leaves in range(220, 230, 5):
    for max_depth in range(6, 7, 1):
        ### Data conversion: it must happen here, inside the loop, otherwise an error is raised because the data has gone stale.
        x, y = pd.DataFrame(train_data), pd.DataFrame(train_label)  # make both DataFrames so that iloc can be used later
        lgb_train = lgb.Dataset(x, y, categorical_feature=cat, silent=True)
        params['num_leaves'] = num_leaves
        params['max_depth'] = max_depth
        cv_results = lgb.cv(params, lgb_train, num_boost_round=1000, nfold=2, stratified=False, shuffle=True,
                            metrics='auc', early_stopping_rounds=50, seed=0)
        mean_auc = pd.Series(cv_results['auc-mean']).max()
        boost_rounds = pd.Series(cv_results['auc-mean']).idxmax()
        if mean_auc >= max_auc:
            max_auc = mean_auc
            best_params['num_leaves'] = num_leaves
            best_params['max_depth'] = max_depth

# Test each key on its own before copying the best values back
if 'num_leaves' in best_params and 'max_depth' in best_params:
    params['num_leaves'] = best_params['num_leaves']
    params['max_depth'] = best_params['max_depth']

print('best cv score:', max_auc)  # the best score over all runs, not just the last one
print(best_params)

Setting parameters

Cross-validation

best cv score: 0.9625448274375306

{'num_leaves': 225, 'max_depth': 6}

