CentOS 7.2 + hadoop-3.2.1: standalone and pseudo-distributed setup

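Many tutorials online are disorganized, so I set up hadoop-3.2.1 in standalone and pseudo-distributed mode by hand, following the official documentation. These are my notes on the pitfalls I ran into.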

Environment: CentOS 7.2, JDK 1.8, hadoop-3.2.1

Reference: the official Apache Hadoop documentation

- Download hadoop-3.2.1 from the official site, extract it to /usr/local/, and change the owner of hadoop-3.2.1 to a non-root user:

[root@node001 opt]# tar -zxvf hadoop-3.2.1.tar.gz -C /usr/local/

[root@node001 opt]# chown -R admin:admin /usr/local/hadoop-3.2.1/
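A plain `chown` without `-R` changes only the owner of the directory itself; files extracted from the tarball keep whatever owner they had. A minimal sketch for spotting leftovers, assuming `find` is available; `check_owner` is a hypothetical helper, not part of Hadoop:

```shell
#!/usr/bin/env bash
# Hypothetical helper: list every path under DIR that is NOT owned by USER.
# USER may be a user name or a numeric UID (find -user accepts both).
# Returns 0 when everything is owned by USER, 1 otherwise.
check_owner() {
  local dir="$1" user="$2" bad
  bad=$(find "$dir" ! -user "$user" -print)
  if [ -n "$bad" ]; then
    printf '%s\n' "$bad"
    return 1
  fi
  return 0
}
```

Usage: `check_owner /usr/local/hadoop-3.2.1 admin || echo "some files are not owned by admin"`.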

- Configure JAVA_HOME. I prefer to keep it in a separate java.sh:

[root@node001 opt]# cat /etc/profile.d/java.sh
export JAVA_HOME=/opt/apps/java/jdk1.8.0_92
export JRE_HOME=/opt/apps/java/jdk1.8.0_92/jre
export CLASSPATH=.:$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH
export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

[root@node001 opt]# source /etc/profile.d/java.sh

[root@node001 opt]# java -version

java version "1.8.0_92"

Java(TM) SE Runtime Environment (build 1.8.0_92-b14)

Java HotSpot(TM) 64-Bit Server VM (build 25.92-b14, mixed mode)

[root@node001 opt]#
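Hadoop's start scripts need a `JAVA_HOME` that points at a real JDK. A small sanity check, as a sketch: `check_java_home` is a hypothetical helper, and it only verifies that `$JAVA_HOME/bin/java` exists and is executable, not which Java version it is.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that a JDK directory (default: $JAVA_HOME)
# contains an executable bin/java. Prints "OK: <path>" on success,
# an error message on stderr and status 1 otherwise.
check_java_home() {
  local home="${1:-$JAVA_HOME}"
  if [ -z "$home" ]; then
    echo "JAVA_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$home/bin/java" ]; then
    echo "no executable java under $home/bin" >&2
    return 1
  fi
  echo "OK: $home/bin/java"
}
```

With the java.sh above sourced, `check_java_home` should print `OK: /opt/apps/java/jdk1.8.0_92/bin/java`.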

- Disable SELinux and stop the firewall

[root@node001 opt]# sed -i 's/SELINUX=enforcing/SELINUX=disabled/g' /etc/selinux/config

[root@node001 opt]# setenforce 0

[root@node001 opt]# getenforce

Permissive

[root@node001 opt]#

[root@node001 opt]# systemctl stop firewalld
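`setenforce 0` only lasts until the next reboot; the persistent setting is the `SELINUX=` line in `/etc/selinux/config`. A sketch for reading it back, assuming the stock file layout (`selinux_config_mode` is a hypothetical helper):

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the SELINUX= value from an SELinux config file
# (default /etc/selinux/config). Commented lines and SELINUXTYPE= are ignored
# because the pattern requires "SELINUX=" at the very start of the line.
selinux_config_mode() {
  local cfg="${1:-/etc/selinux/config}"
  sed -n 's/^SELINUX=\(.*\)$/\1/p' "$cfg" | tail -n 1
}
```

After the `sed` above, `selinux_config_mode` should print `disabled`. For the firewall, `systemctl stop` likewise does not survive a reboot: `systemctl disable firewalld` keeps it off at boot, and `systemctl is-enabled firewalld` shows the current boot setting.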

- Configure HADOOP_HOME and the IP-to-hostname mapping

[root@node001 hadoop-3.2.1]# vi /etc/profile

# lines appended to /etc/profile:
export HADOOP_HOME=/usr/local/hadoop-3.2.1
export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

[root@node001 hadoop-3.2.1]# source /etc/profile

[root@node001 hadoop-3.2.1]# hadoop version

Hadoop 3.2.1

Source code repository https://gitbox.apache.org/repos/asf/hadoop.git -r b3cbbb467e22ea829b3808f4b7b01d07e0bf3842

Compiled by rohithsharmaks on 2019-09-10T15:56Z

Compiled with protoc 2.5.0

From source with checksum 776eaf9eee9c0ffc370bcbc1888737

This command was run using /usr/local/hadoop-3.2.1/share/hadoop/common/hadoop-common-3.2.1.jar

[root@node001 hadoop-3.2.1]# vim /etc/hosts

[root@node001 hadoop-3.2.1]# cat /etc/hosts
127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4
::1 localhost localhost.localdomain localhost6 localhost6.localdomain6
192.168.0.110 node001

[root@node001 hadoop-3.2.1]#
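Hadoop's configuration here refers to the machine by the hostname `node001`, so that name must map to its IP in `/etc/hosts`. A minimal lookup sketch; `hosts_lookup` is a hypothetical helper that reads the hosts file directly and ignores DNS and `nsswitch.conf`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the address that a hosts file maps NAME to
# (default file /etc/hosts). Prints nothing if NAME is not listed.
hosts_lookup() {
  local name="$1" file="${2:-/etc/hosts}"
  awk -v n="$name" '
    /^[ \t]*#/ { next }               # skip comment lines
    {
      for (i = 2; i <= NF; i++)       # fields 2..NF are hostnames/aliases
        if ($i == n) { print $1; exit }
    }
  ' "$file"
}
```

With the hosts file above, `hosts_lookup node001` should print `192.168.0.110`; `getent hosts node001` answers the same question through the system resolver.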

- Pseudo-distributed mode: edit the configuration files

- /usr/local/hadoop-3.2.1/../core-site.xml:

<configuration>
    <property>
        <name>fs.defaultFS</name>
        <value>hdfs://node001:9000</value>
    </property>
</configuration>
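A typo in the `fs.defaultFS` hostname is easy to miss and tends to surface only later, when the daemons or `hdfs` commands try to connect. One way to catch it early is to pull the value out of the file and check its host part. This is a rough sketch that assumes the simple one-tag-per-line layout used here (it is not a real XML parser); `get_conf_value` and `uri_host` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the <value> of property KEY from a Hadoop
# *-site.xml file. Assumes <name> and <value> each sit on a single line.
get_conf_value() {
  local file="$1" key="$2"
  awk -v k="$key" '
    index($0, "<name>" k "</name>") { found = 1 }
    found && match($0, /<value>[^<]*<\/value>/) {
      # strip the 7-char "<value>" and 8-char "</value>" tags
      print substr($0, RSTART + 7, RLENGTH - 15)
      exit
    }
  ' "$file"
}

# Hypothetical helper: extract the host from a URI such as hdfs://host:port
uri_host() {
  local rest="${1#*://}"   # drop the scheme
  rest="${rest%%/*}"       # drop any path
  echo "${rest%%:*}"       # drop the port
}
```

Assuming the standard `etc/hadoop/` config directory under `$HADOOP_HOME`: `host=$(uri_host "$(get_conf_value "$HADOOP_HOME/etc/hadoop/core-site.xml" fs.defaultFS)")`, then `getent hosts "$host" || echo "cannot resolve $host"`. A misspelled host such as `nodeoo1` would fail this check, since `/etc/hosts` only lists `node001`.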

- /usr/local/hadoop-3.2.1/../hdfs-site.xml:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

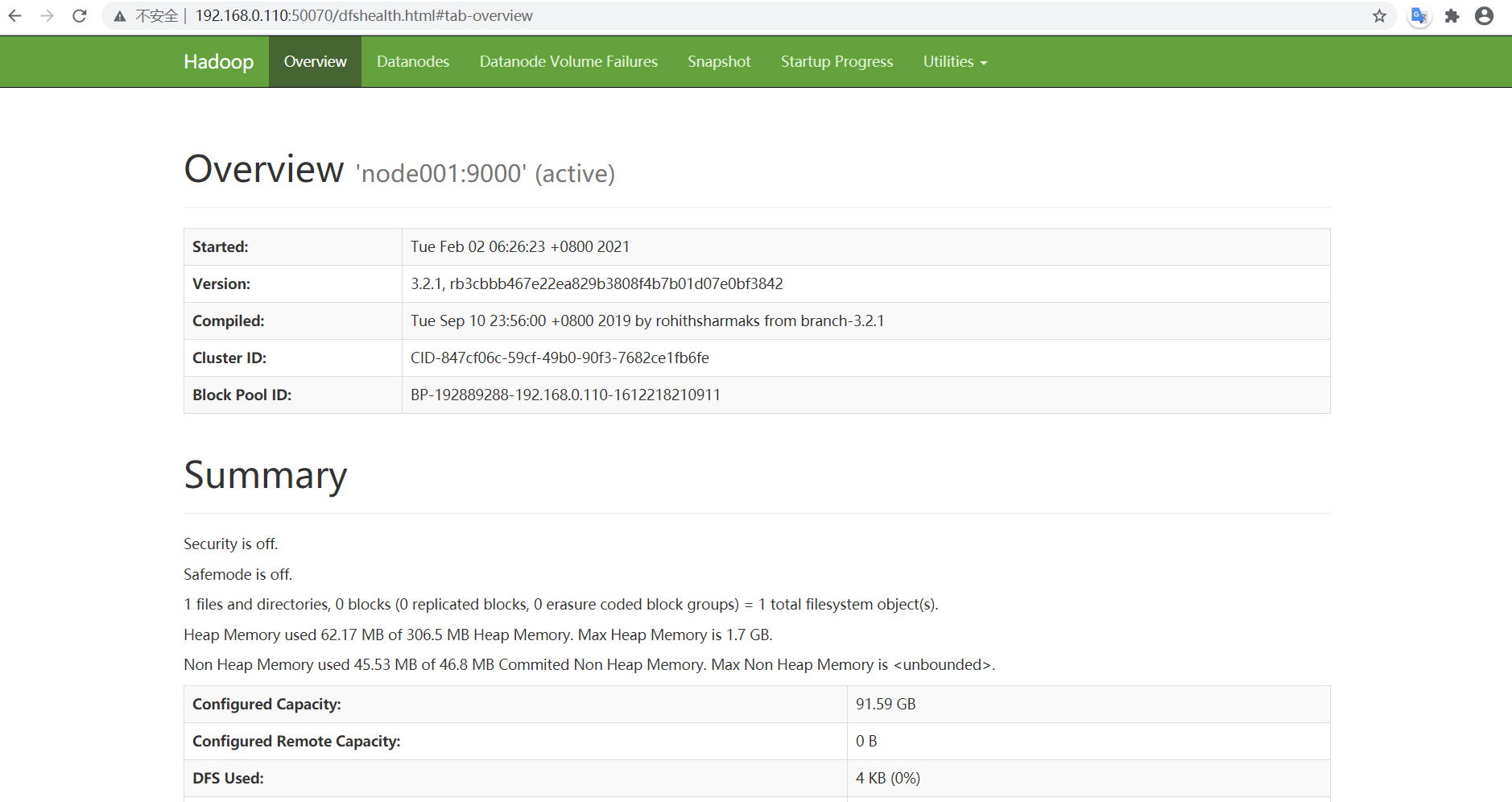

<name>dfs.namenode.http-address</name>

<value>node001:50070</value>

<final>true</final>//网上很多教程没有这个、我使用的hadoop-3.2.1 不加这个 50070页面出不来;主要自己版本

</property>

</configuration>
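Which URL to open follows from `dfs.namenode.http-address`: when it is not set, Hadoop 3.x falls back to `0.0.0.0:9870` (Hadoop 2.x used 50070). A small sketch that turns the configured value into a browser URL; `namenode_web_url` is a hypothetical helper, and the 9870 fallback mirrors the Hadoop 3.x `hdfs-default.xml` default.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the NameNode web UI URL from the value of
# dfs.namenode.http-address. An empty value falls back to the Hadoop 3.x
# default 0.0.0.0:9870. 0.0.0.0 is a bind-all address, not a host to
# browse to, so it is replaced by HOST (default: localhost).
namenode_web_url() {
  local addr="$1" host="${2:-localhost}"
  if [ -z "$addr" ]; then
    addr="0.0.0.0:9870"
  fi
  local h="${addr%%:*}" p="${addr##*:}"
  if [ "$h" = "0.0.0.0" ]; then
    h="$host"
  fi
  echo "http://$h:$p/"
}
```

With the hdfs-site.xml above, `namenode_web_url "$(hdfs getconf -confKey dfs.namenode.http-address)" node001` should print `http://node001:50070/`.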

- /usr/local/hadoop-3.2.1/../mapred-site.xml:

<configuration>
    <property>
        <name>mapreduce.framework.name</name>
        <value>yarn</value>
    </property>
    <property>
        <name>mapreduce.application.classpath</name>
        <value>$HADOOP_HOME/share/hadoop/mapreduce/*:$HADOOP_HOME/share/hadoop/mapreduce/lib/*</value>
    </property>
</configuration>

- /usr/local/hadoop-3.2.1/../yarn-site.xml:

<configuration>
    <property>
        <name>yarn.nodemanager.aux-services</name>
        <value>mapreduce_shuffle</value>
    </property>
</configuration>
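The `mapreduce.application.classpath` entries have to point at existing jar directories, otherwise MapReduce jobs submitted to YARN typically fail to start. A rough check, assuming the value uses the literal `$HADOOP_HOME` form from mapred-site.xml above (`${HADOOP_HOME}` and other variables are not handled); `check_mr_classpath` is a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the literal text $HADOOP_HOME in a
# colon-separated classpath value with HOME_DIR (default: $HADOOP_HOME)
# and report whether each resulting directory exists.
# Returns 1 if any directory is missing.
check_mr_classpath() {
  local value="$1" home="${2:-$HADOOP_HOME}" token='$HADOOP_HOME'
  local entry dir missing=0
  local -a parts
  # read -a splits on ':' without glob-expanding the trailing '*'
  IFS=':' read -r -a parts <<< "$value"
  for entry in "${parts[@]}"; do
    dir=${entry//"$token"/"$home"}   # substitute the install directory
    dir=${dir%/\*}                   # drop a trailing "/*" wildcard
    if [ -d "$dir" ]; then
      echo "ok      $dir"
    else
      echo "missing $dir"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `check_mr_classpath '$HADOOP_HOME/share/hadoop/mapreduce/*:$HADOOP_HOME/share/hadoop/mapreduce/lib/*'`. The single quotes keep `$HADOOP_HOME` literal, exactly as it appears in the XML.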

- Set up passwordless SSH login for admin; otherwise start-dfs.sh reports errors:

[admin@node001 hadoop-3.2.1]$ ssh-keygen

Generating public/private rsa key pair.

Enter file in which to save the key (/home/admin/.ssh/id_rsa):

Enter passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved in /home/admin/.ssh/id_rsa.

Your public key has been saved in /home/admin/.ssh/id_rsa.pub.

The key fingerprint is:

e3:28:7c:00:eb:fe:7a:e2:ef:00:bd:be:b4:5b:e3:3d admin@node001


[admin@node001 hadoop-3.2.1]$ ssh-copy-id node001

/usr/bin/ssh-copy-id: INFO: attempting to log in with the new key(s), to filter out any that are already installed

/usr/bin/ssh-copy-id: INFO: 1 key(s) remain to be installed -- if you are prompted now it is to install the new keys

admin@node001's password:

Number of key(s) added: 1

Now try logging into the machine, with: "ssh 'node001'"

and check to make sure that only the key(s) you wanted were added.

[admin@node001 hadoop-3.2.1]$
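`start-dfs.sh` reaches each daemon host over SSH even on a single node, so the login must work without a password prompt. A sketch of a non-interactive check: with `BatchMode=yes`, ssh exits with an error instead of prompting. `passwordless_ssh_ok` is a hypothetical helper.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if HOST accepts a key-based login.
# BatchMode=yes makes ssh fail instead of asking for a password (or for
# confirmation of an unknown host key); ConnectTimeout keeps the check
# from hanging on an unreachable host.
passwordless_ssh_ok() {
  local host="$1"
  ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true
}
```

Run as admin: `passwordless_ssh_ok node001 && echo ok`. Because BatchMode also suppresses the host-key question, the check fails if node001 is not yet in `~/.ssh/known_hosts`; the `ssh-copy-id` step above normally takes care of that. To skip the interactive prompts of `ssh-keygen`, `ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa` creates the key with an empty passphrase.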

- Start the services

Format the filesystem:

[admin@node001 hadoop-3.2.1]$ bin/hdfs namenode -format

[admin@node001 hadoop-3.2.1]$ sbin/start-dfs.sh

Starting namenodes on [node001]

Starting datanodes

Starting secondary namenodes [node001]

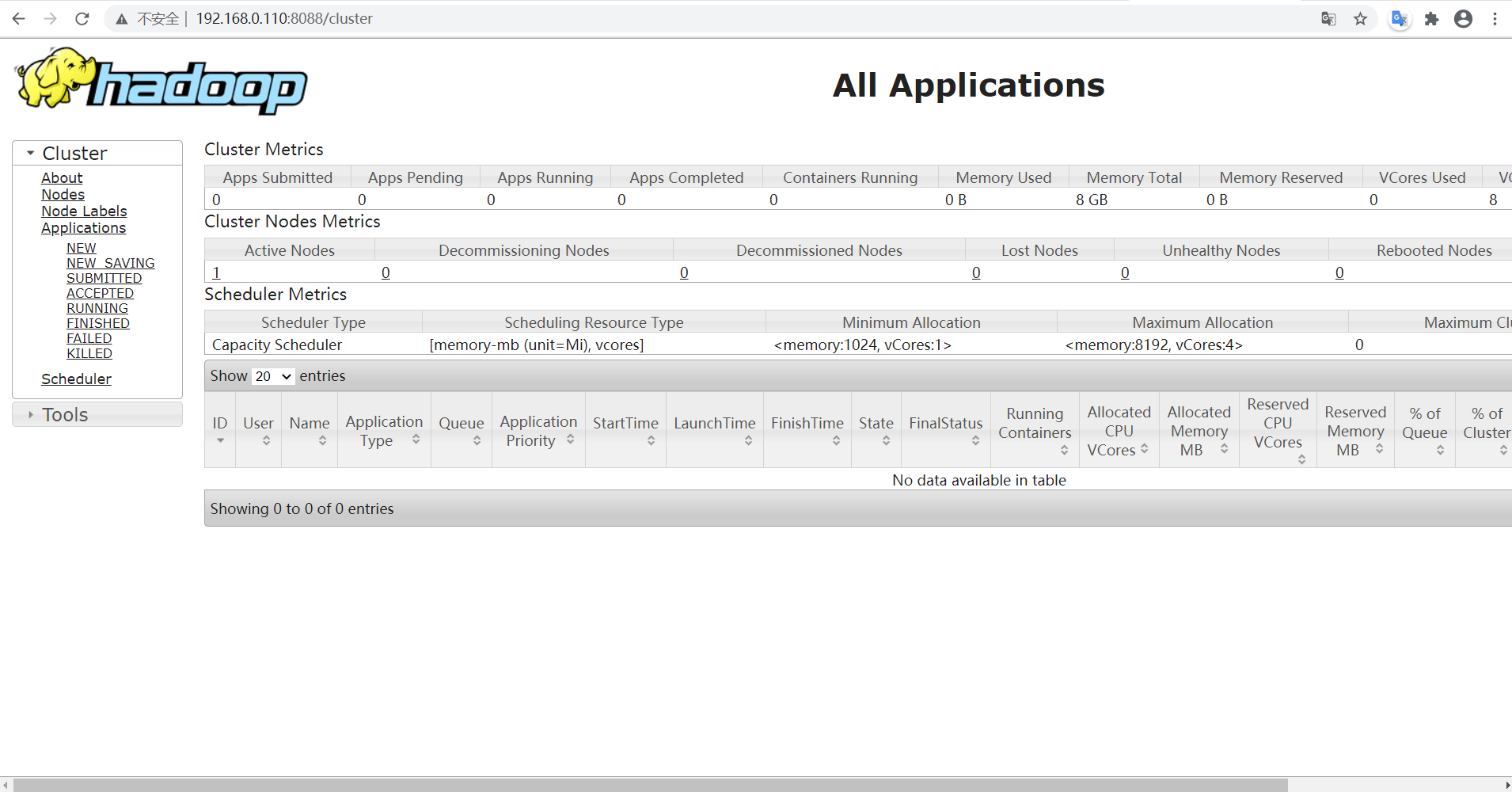

[admin@node001 hadoop-3.2.1]$ sbin/start-yarn.sh

Starting resourcemanager

Starting nodemanagers

[admin@node001 hadoop-3.2.1]$ jps

11664 NameNode

12241 ResourceManager

11781 DataNode

11973 SecondaryNameNode

12519 Jps

12351 NodeManager

[admin@node001 hadoop-3.2.1]$

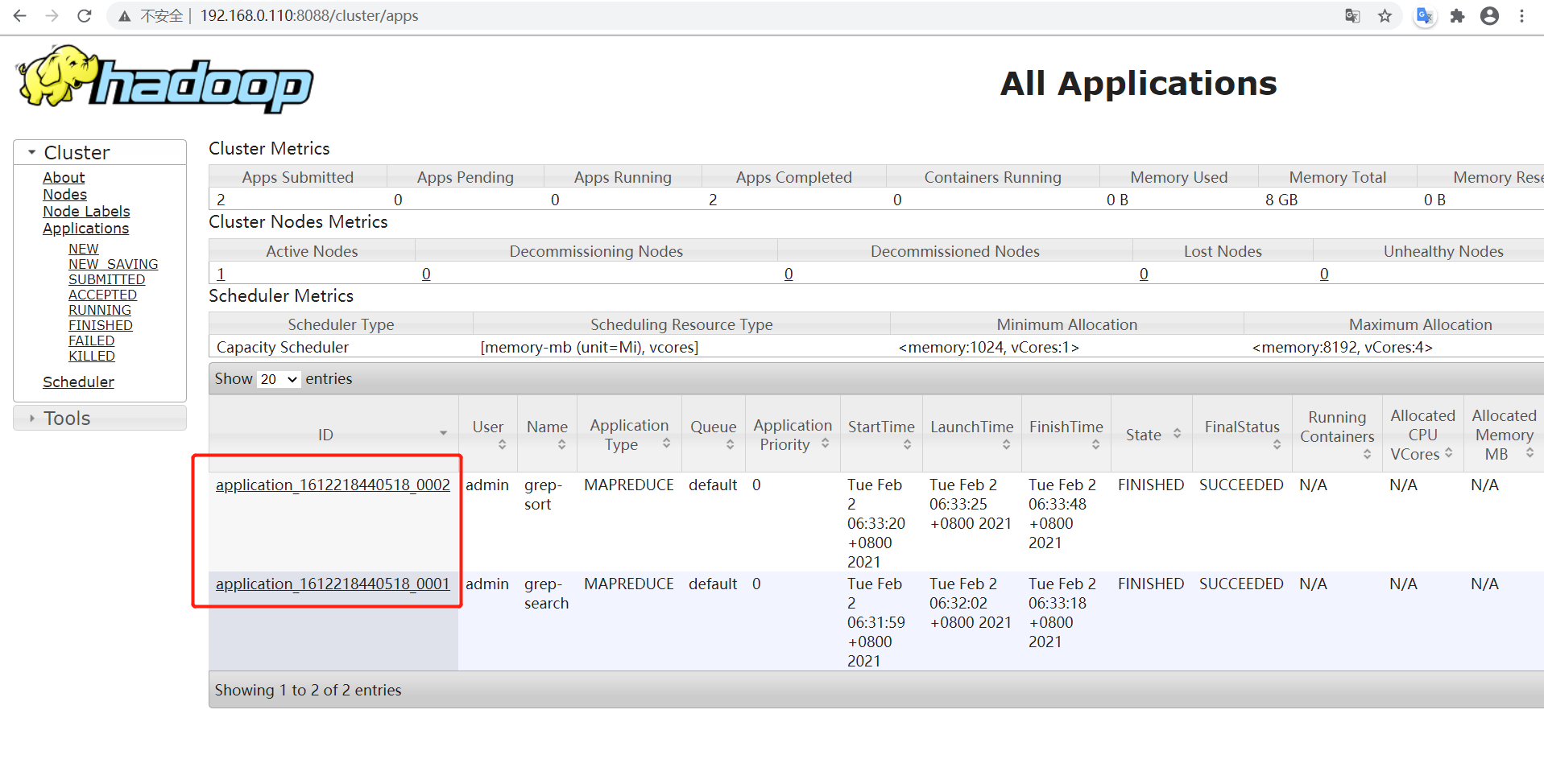
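With HDFS and YARN both up, `jps` should list the five daemons shown above. A sketch that reports which ones are missing; `missing_daemons` is a hypothetical helper that only parses `jps` output and says nothing about whether a daemon is healthy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given the output of `jps`, print the name of every
# expected pseudo-distributed daemon that does not appear in it.
missing_daemons() {
  local jps_out="$1" d
  for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
    # jps prints "<pid> <MainClassName>"; anchor on the whole name so that
    # "NameNode" does not match "SecondaryNameNode"
    if ! printf '%s\n' "$jps_out" | grep -qE "^[0-9]+ ${d}\$"; then
      echo "$d"
    fi
  done
}
```

Usage: `missing_daemons "$(jps)"`. Empty output means all five are running; otherwise the daemon logs under `$HADOOP_HOME/logs` are the place to look.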

- Run the example from the official documentation

[admin@node001 hadoop-3.2.1]$ history

1 exit

2 bin/hdfs namenode -format

3 sbin/start-dfs.sh

4 ssh-keygen

5 ssh-copy-id node001

6 jps

7 sbin/start-dfs.sh

8 sbin/start-yarn.sh

9 jps

10 lsof -i:50070

11 lsof -i:8088

12 bin/hdfs dfs -mkdir /user

13 bin/hdfs dfs -ls /

14 bin/hdfs dfs -mkdir /user/admin

15 bin/hdfs dfs -mkdir input

16 bin/hdfs dfs -put etc/hadoop/*.xml input

17 bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar grep input output 'dfs[a-z.]+'

18 hdfs dfs -cat output/*

19 history

[admin@node001 hadoop-3.2.1]$ hdfs dfs -cat output/*

2021-02-02 06:35:01,598 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

1 dfsadmin

1 dfs.replication

1 dfs.namenode.http

[admin@node001 hadoop-3.2.1]$
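The history above creates `/user`, `/user/admin` and `input` one at a time, and the `grep` job refuses to run if `output` already exists in HDFS. A re-runnable sketch of the same example, assuming HDFS and YARN are already running and the current OS user name is the HDFS user (simple authentication). `run_grep_example` is a hypothetical wrapper; `-mkdir -p`, `-put -f` and `-rm -r -f` are standard `hdfs dfs` options.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: re-run the official grep example from HADOOP_DIR
# (default: $HADOOP_HOME). Each step runs only if the previous one succeeded;
# the subshell keeps the caller's working directory unchanged.
run_grep_example() {
  local hadoop_dir="${1:-$HADOOP_HOME}" user
  user=$(id -un)
  (
    cd "$hadoop_dir" &&
      # -p creates /user/<name>/input and any missing parents in one call
      bin/hdfs dfs -mkdir -p "/user/$user/input" &&
      # relative HDFS paths resolve under /user/<name>; -f overwrites old copies
      bin/hdfs dfs -put -f etc/hadoop/*.xml input &&
      # the job fails if its output directory exists, so remove it first
      bin/hdfs dfs -rm -r -f output &&
      bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar \
        grep input output 'dfs[a-z.]+' &&
      # quote the glob so HDFS, not the local shell, expands it
      bin/hdfs dfs -cat 'output/*'
  )
}
```

Usage, as admin: `run_grep_example /usr/local/hadoop-3.2.1`.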

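- Learning to read the official documentation is important. As a beginner, keep in mind that most problems come from your own environment.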

I'm a happy little rookie~

Zhejiang Public Security Bureau Internet filing No. 33010602011771
