https://colin-scott.github.io/personal_website/research/interactive_latency.html

Tutorial https://commscope1.udemy.com/course/pragmatic-system-design/learn/lecture/23340674#notes

Estimation

| Component | Reads per second | Writes per second |
|---|---|---|
| RDBMS | 10,000 | 5,000 |
| NoSQL | 10,000-50,000 | 10,000-25,000 |
| Distributed cache | 100,000 | 100,000 |
| Queue | 100,000 | 100,000 |
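To turn the throughput figures into a node count, divide the expected peak load by per-node capacity. The sketch below is a back-of-the-envelope calculator that assumes load spreads evenly and capacity grows linearly with node count. For RDBMS writes this in practice means sharding, because read replicas do not add write capacity. The `nodes_needed` helper and the example load are hypothetical, and NoSQL uses the low end of its range to stay conservative.

```python
import math

# Per-node (reads/s, writes/s) taken from the estimation table.
# NoSQL uses the low end of each range to stay conservative.
CAPACITY = {
    "RDBMS": (10_000, 5_000),
    "NoSQL": (10_000, 10_000),
    "Queue": (100_000, 100_000),
}


def nodes_needed(component: str, peak_reads: int, peak_writes: int) -> int:
    """Minimum node count so neither reads nor writes exceed per-node capacity.

    Assumes even load distribution and linear scaling (a simplification).
    """
    max_reads, max_writes = CAPACITY[component]
    by_reads = math.ceil(peak_reads / max_reads)
    by_writes = math.ceil(peak_writes / max_writes)
    return max(by_reads, by_writes, 1)


if __name__ == "__main__":
    # Hypothetical peak load: 45,000 reads/s and 30,000 writes/s.
    for name in CAPACITY:
        print(name, nodes_needed(name, 45_000, 30_000))
```

With this load the RDBMS needs 6 nodes because writes, not reads, are the binding limit, while NoSQL needs 5 and the queue 1.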

| Component | Storage capacity |
|---|---|
| RDBMS | 3 TB |
| NoSQL | |
| Distributed cache | 16 GB-128 GB |
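The storage figures support the same kind of arithmetic: how many cache nodes the hot data set needs, and how long a single RDBMS node lasts before reaching 3 TB. This is a rough sketch that assumes GB/TB mean 2^30/2^40 bytes and reserves 25% of each cache node's memory as headroom (the `usable_fraction` default is an assumption, not from these notes). The function names and example inputs are hypothetical.

```python
import math

GB = 1024 ** 3
TB = 1024 ** 4


def cache_nodes(hot_items: int, bytes_per_item: int, node_memory_gb: int,
                usable_fraction: float = 0.75) -> int:
    """Cache nodes needed to keep the hot data set fully in memory.

    usable_fraction is an assumed headroom factor for overhead/fragmentation.
    """
    total_bytes = hot_items * bytes_per_item
    usable_per_node = node_memory_gb * GB * usable_fraction
    return max(1, math.ceil(total_bytes / usable_per_node))


def days_until_full(capacity_tb: float, new_rows_per_day: int,
                    bytes_per_row: int) -> float:
    """Days before a single RDBMS node fills its storage capacity."""
    return capacity_tb * TB / (new_rows_per_day * bytes_per_row)


if __name__ == "__main__":
    # Hypothetical: 200 million hot items of ~1 KB each.
    print(cache_nodes(200_000_000, 1024, 64))   # 64 GB nodes
    print(cache_nodes(200_000_000, 1024, 16))   # 16 GB nodes
    # Hypothetical: 10 million new ~1 KB rows per day into a 3 TB RDBMS.
    print(round(days_until_full(3, 10_000_000, 1024)))
```

Under these inputs the hot set needs 4 nodes at 64 GB or 16 nodes at 16 GB, and the RDBMS fills up in roughly 322 days, which is the point to plan sharding or archiving.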

Caching

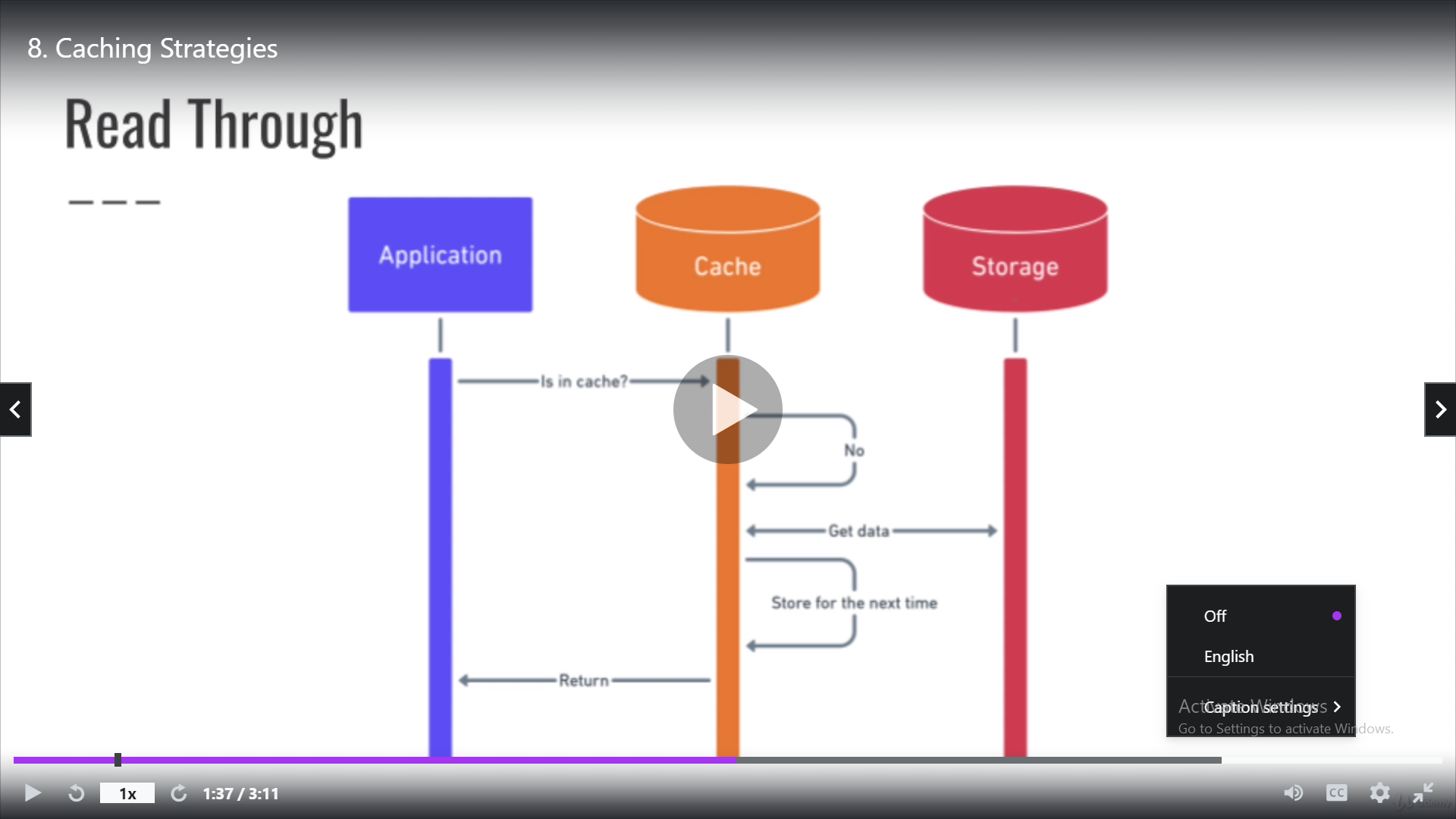

Read-through (commonly used)

Benefits:

1. Reduces CPU consumption by caching the results of complex algorithms.
2. Reduces I/O consumption by caching resources.
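A minimal read-through sketch, assuming a plain dict as the cache and a caller-supplied `loader` standing in for the expensive algorithm or I/O call. The application only talks to the cache; on a miss the cache calls the loader itself and keeps the result, so the cost is paid once per key. `ReadThroughCache` is a hypothetical name, and eviction and TTL are left out for brevity.

```python
from typing import Callable, Dict, Generic, Hashable, TypeVar

K = TypeVar("K", bound=Hashable)
V = TypeVar("V")


class ReadThroughCache(Generic[K, V]):
    """On a miss, the cache itself calls the loader and stores the result."""

    def __init__(self, loader: Callable[[K], V]) -> None:
        self._loader = loader          # e.g. a DB query or a costly computation
        self._store: Dict[K, V] = {}   # no eviction/TTL in this sketch
        self.hits = 0
        self.misses = 0

    def get(self, key: K) -> V:
        if key in self._store:
            self.hits += 1
            return self._store[key]
        self.misses += 1
        value = self._loader(key)      # CPU/I/O cost paid only on a miss
        self._store[key] = value
        return value


if __name__ == "__main__":
    cache = ReadThroughCache(lambda n: n * n)  # stand-in for an expensive call
    print(cache.get(4), cache.get(4), cache.hits, cache.misses)  # 16 16 1 1
```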

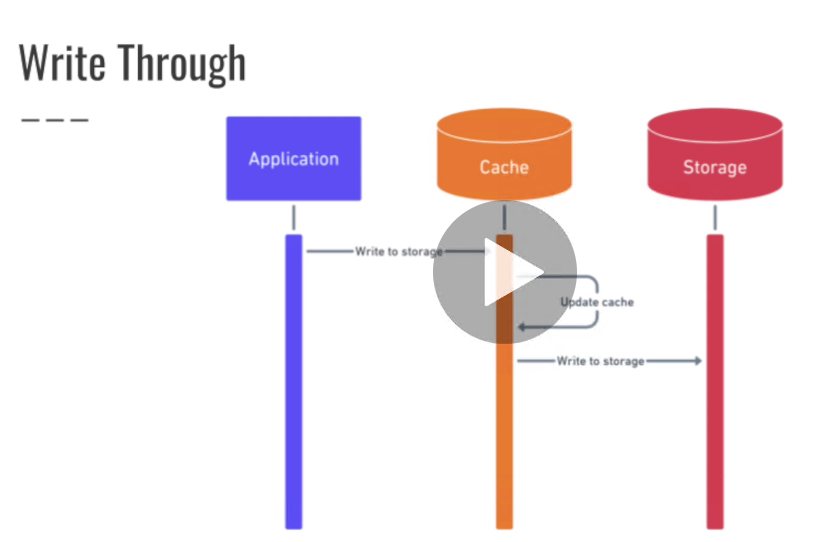

Write-through: the cache is written only when a create, update, or delete (CUD) operation occurs. The cached entry is wasted if the data is not read after the update.
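A minimal write-through sketch, assuming a plain dict stands in for the database. Every create/update/delete goes to the store and the cache together; reads that miss fall back to the store without populating the cache, so entries only enter the cache through writes. That is also where the waste comes from: an entry written on update but never read still occupies memory. `WriteThroughCache` is a hypothetical name.

```python
from typing import Dict, Optional


class WriteThroughCache:
    """Create/update/delete hit the backing store and the cache together."""

    def __init__(self, backing_store: Dict[str, str]) -> None:
        self.db = backing_store        # dict standing in for a database
        self.cache: Dict[str, str] = {}

    def put(self, key: str, value: str) -> None:
        # Create/update: write the source of truth first, then the cache.
        self.db[key] = value
        self.cache[key] = value

    def delete(self, key: str) -> None:
        self.db.pop(key, None)
        self.cache.pop(key, None)

    def get(self, key: str) -> Optional[str]:
        if key in self.cache:
            return self.cache[key]
        # Miss: read the store WITHOUT populating the cache, because in this
        # scheme only CUD operations write to the cache.
        return self.db.get(key)


if __name__ == "__main__":
    db: Dict[str, str] = {}
    cache = WriteThroughCache(db)
    cache.put("user:1", "alice")
    print(db["user:1"], cache.get("user:1"))  # alice alice
```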

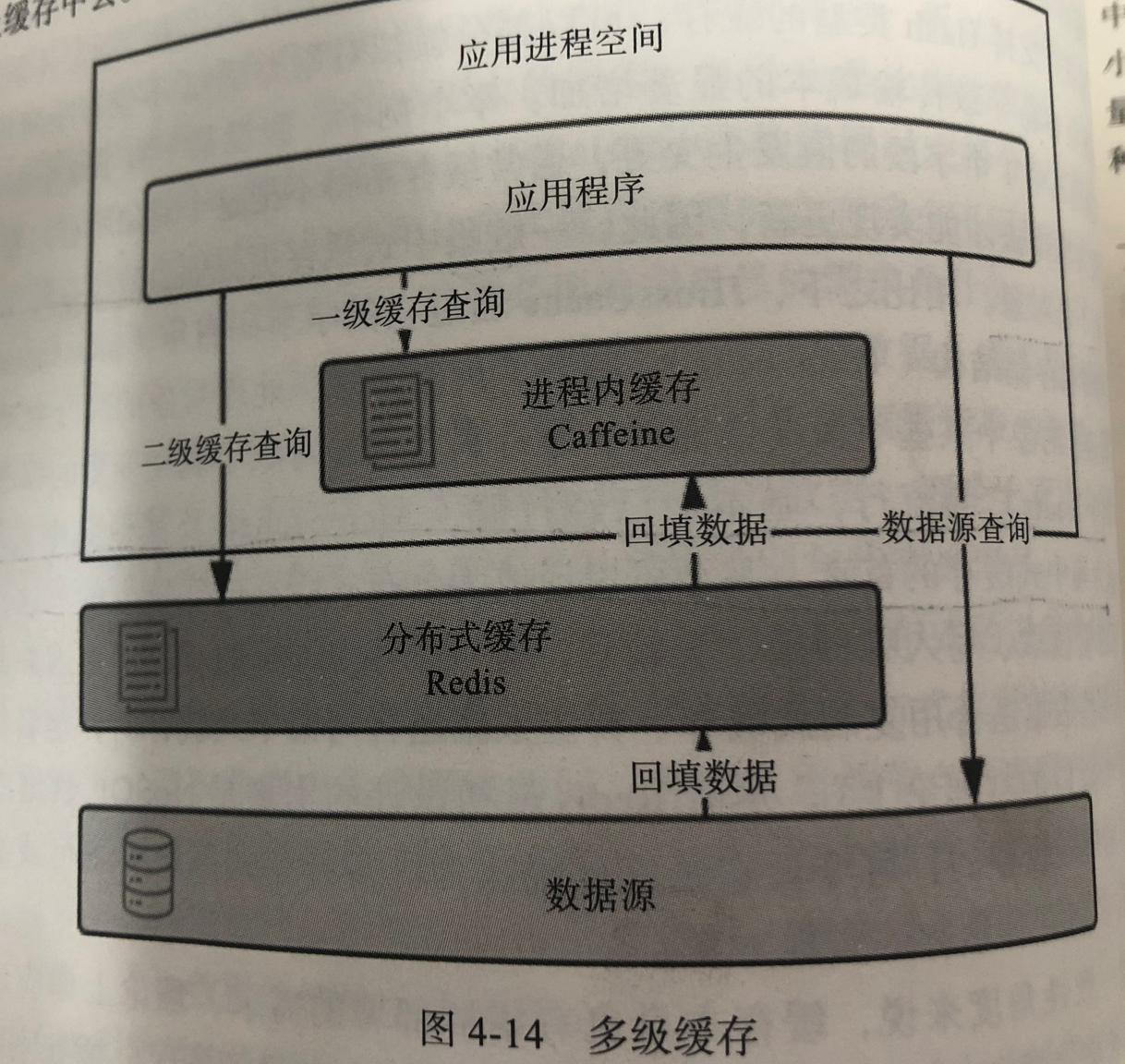

Benefits of an in-memory (local) cache: easy to maintain, fast (low latency), and no network overhead. It satisfies AP (availability and partition tolerance).

Drawback of an in-memory cache: lack of consistency. Each service instance keeps its own copy of the data, so the copies can diverge.
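The consistency problem can be reproduced with two service instances that share a database but each keep a local cache. This is a simplified sketch: dicts stand in for the database and the caches, and `ServiceInstance` is a hypothetical class. A write through instance `a` refreshes only `a`'s cache, so `b` keeps serving the old value.

```python
from typing import Dict


class ServiceInstance:
    """One service process with its own in-memory (local) cache."""

    def __init__(self, db: Dict[str, int]) -> None:
        self.db = db                         # shared database (a dict here)
        self.local_cache: Dict[str, int] = {}

    def read(self, key: str) -> int:
        if key not in self.local_cache:      # load into THIS instance's cache
            self.local_cache[key] = self.db[key]
        return self.local_cache[key]

    def write(self, key: str, value: int) -> None:
        self.db[key] = value
        self.local_cache[key] = value        # other instances are not told


db = {"price": 100}
a, b = ServiceInstance(db), ServiceInstance(db)
a.read("price")
b.read("price")                              # both instances now cache 100
a.write("price", 120)
print(a.read("price"), b.read("price"))      # 120 100: b serves a stale value
```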

Benefit of a distributed cache: high consistency. It satisfies CP (consistency and partition tolerance).
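For contrast, the same two instances can point at one shared cache. In this sketch a single dict stands in for a networked distributed cache such as a Redis cluster; network calls, replication, and failures are not modelled, and `SharedCacheInstance` is a hypothetical class. Because there is only one copy, an update made through `a` is immediately visible to `b`. The cost, not shown here, is a network hop on every access, and during a partition the cache may be unreachable rather than stale, which is the CP trade-off.

```python
from typing import Dict


class SharedCacheInstance:
    """Service instance that reads and writes one cache shared by all instances."""

    def __init__(self, db: Dict[str, int], cache: Dict[str, int]) -> None:
        self.db = db
        self.cache = cache                   # same object for every instance

    def read(self, key: str) -> int:
        if key not in self.cache:
            self.cache[key] = self.db[key]
        return self.cache[key]

    def write(self, key: str, value: int) -> None:
        self.db[key] = value
        self.cache[key] = value              # one update, seen by everyone


db = {"price": 100}
shared_cache: Dict[str, int] = {}
a = SharedCacheInstance(db, shared_cache)
b = SharedCacheInstance(db, shared_cache)
a.read("price")
b.read("price")
a.write("price", 120)
print(a.read("price"), b.read("price"))      # 120 120
```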
