PACT: Parameterized Clipping Activation for Quantized Neural Networks

Overview

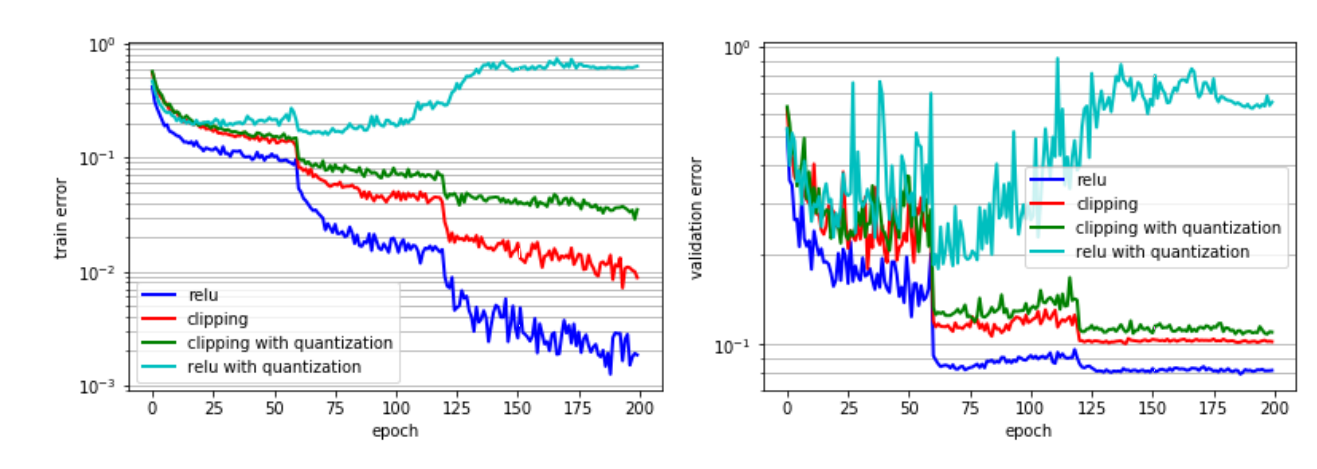

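This paper proposes clipping the activations of a network at a parameterized upper bound \(\alpha\) so that they can be quantized to lower bit-widths.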

Main content

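The idea is simple. A standard ReLU is unbounded on the right, so its outputs can span an arbitrarily large range, which makes quantization difficult. The authors therefore truncate ReLU on the right at a threshold \(\alpha\):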

\[y = \mathrm{PACT}(x) = 0.5 \left( |x| - |x - \alpha| + \alpha \right) = \left \{ \begin{array}{ll} 0, & x \in (-\infty, 0), \\ x, & x \in [0, \alpha), \\ \alpha, & x \in [\alpha, +\infty). \end{array} \right. \]
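As a quick sanity check, the closed form and the piecewise form of the clipping function can be compared numerically. The sketch below is illustrative only: it uses NumPy, and the function names `pact` and `pact_piecewise` as well as the sample values are assumptions made for this example, not taken from the paper.

```python
import numpy as np


def pact(x, alpha):
    """Closed form: 0.5 * (|x| - |x - alpha| + alpha)."""
    return 0.5 * (np.abs(x) - np.abs(x - alpha) + alpha)


def pact_piecewise(x, alpha):
    """Piecewise form: 0 below 0, x on [0, alpha), alpha from alpha upward."""
    return np.clip(x, 0.0, alpha)


# Hypothetical sample inputs covering all three branches.
x = np.array([-2.0, -0.5, 0.0, 0.7, 1.5, 3.0])
alpha = 1.5

closed = pact(x, alpha)
piecewise = pact_piecewise(x, alpha)
print(closed)
print(np.allclose(closed, piecewise))
```

Both forms agree up to floating-point rounding, so in practice the activation amounts to clipping to \([0, \alpha]\).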

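During training, the gradient with respect to \(\alpha\) is obtained with the straight-through estimator (STE), which treats the rounding step as the identity: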

\[\frac{\partial y_q}{\partial \alpha} = \frac{\partial y_q}{\partial y} \frac{\partial y}{\partial \alpha} = \left \{ \begin{array}{ll} 0, & x \in (-\infty, \alpha), \\ 1, & x \in [\alpha, +\infty), \end{array} \right. \]where \(y_q = \mathrm{round}\left(y \cdot \frac{2^k - 1}{\alpha}\right) \cdot \frac{\alpha}{2^k - 1}\).
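The quantizer and the STE gradient above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the names `pact_quantize` and `grad_alpha_ste` are hypothetical, and summing the per-element gradients into a single value assumes one scalar \(\alpha\) shared by all activations passed in.

```python
import numpy as np


def pact_quantize(x, alpha, k):
    """Clip x to [0, alpha], then round onto 2^k - 1 uniform steps."""
    y = np.clip(x, 0.0, alpha)
    scale = (2 ** k - 1) / alpha
    return np.round(y * scale) / scale


def grad_alpha_ste(x, alpha, upstream):
    """STE gradient of the loss w.r.t. alpha.

    Rounding is treated as the identity, so dy_q/dalpha is 1 where
    x >= alpha and 0 elsewhere. Assumption: alpha is a single shared
    scalar, so the per-element contributions are summed.
    """
    return np.sum(upstream * (x >= alpha))


# Hypothetical inputs: 2-bit quantization with alpha = 1.0.
x = np.array([-1.0, 0.2, 0.9, 2.5])
alpha, k = 1.0, 2

y_q = pact_quantize(x, alpha, k)
g = grad_alpha_ste(x, alpha, np.ones_like(x))
print(y_q)
print(g)
```

With \(k = 2\) the outputs fall on the four levels \(0, \alpha/3, 2\alpha/3, \alpha\), and only the input that reaches or exceeds \(\alpha\) contributes to the gradient of \(\alpha\).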

