Deploying a Consul (latest) cluster with Helm on a K8s (v1.21.9) cluster

Environment on all servers

cat /etc/redhat-release

CentOS Linux release 7.6.1810 (Core)

Helm Chart installation

Prerequisites

The Consul Helm chart only supports Helm 3.2+
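The version requirement can be checked before going further. The following is a minimal sketch; the function name `helm_version_ok` is made up for this example, and it assumes GNU `sort -V` is available (it is on CentOS 7).

```shell
#!/usr/bin/env bash
# Return success if the given Helm version is at least 3.2.0.
# Accepts strings such as "v3.8.1" or "v3.8.1+g5cb9af4".
helm_version_ok() {
  local ver="${1#v}"   # drop the leading "v"
  ver="${ver%%+*}"     # drop build metadata after "+"
  local min="3.2.0"
  # sort -V orders version strings; the minimum must come first (or be equal).
  [ "$(printf '%s\n%s\n' "$min" "$ver" | sort -V | head -n1)" = "$min" ]
}

# Usage on a machine with Helm installed:
#   helm_version_ok "$(helm version --short)" || echo "Helm 3.2+ is required"
```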

Install Consul

1. Add the HashiCorp Helm repository:

helm repo add hashicorp https://helm.releases.hashicorp.com

2. Search the added repository for the chart

helm search repo hashicorp/consul

helm search repo consul

NAME CHART VERSION APP VERSION DESCRIPTION

hashicorp/consul 0.44.0 1.12.0 Official HashiCorp Consul Chart

3. Download the chart from the repository and (optionally) unpack it into a local directory

helm pull hashicorp/consul

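consul-server needs a storage provisioner

The Consul deployment creates Pods that use a PVC (PersistentVolumeClaim). The application in each Pod persists its data through the PVC, and the PVC in turn relies on a PV (PersistentVolume) for the actual storage. Storage therefore has to be provisioned beforehand; otherwise consul-server cannot obtain storage and stays in the Pending state. There are two options:

Option 1: create static PVs manually

Option 2: dynamic volume provisioning through a StorageClass

This guide uses option 2, dynamic volume provisioning through a StorageClass. The steps are as follows:

1. On every master node of the k8s cluster, add the flag marked below; otherwise dynamically provisioned PVs fail to bind through the StorageClass: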

vim /etc/kubernetes/manifests/kube-apiserver.yaml

    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-issuer=https://kubernetes.default.svc.cluster.local
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
    - --service-cluster-ip-range=10.96.0.0/12
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    - --feature-gates=RemoveSelfLink=false   # add this flag

After a short while the apiserver restarts automatically.
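The flag is needed because the old nfs-client-provisioner image used later in this post still relies on the selfLink field, which Kubernetes 1.20+ no longer fills in by default. As far as I know the gate can no longer be turned off from Kubernetes 1.24 on, so this workaround only applies to versions like the 1.21.9 used here. A small sketch for confirming that the edit landed on each master; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Return success if the manifest passes RemoveSelfLink=false to kube-apiserver,
# either alone or as part of a comma-separated list of feature gates.
has_removeselflink_off() {
  local manifest="$1"
  grep -Eq -- '--feature-gates=([^[:space:]]*,)?RemoveSelfLink=false' "$manifest"
}

# Usage on a master node:
#   has_removeselflink_off /etc/kubernetes/manifests/kube-apiserver.yaml \
#     && echo "flag present" || echo "flag missing"
```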

2. Install nfs-utils on all worker nodes (the master nodes do not schedule Pods)

yum install -y nfs-utils

3. Prepare one node as the NFS server

yum install -y nfs-utils

# Create the NFS shared directory

mkdir -p /data/nfs_data

# Configure the NFS export and its permissions

cat /etc/exports

/data/nfs_data *(rw,insecure,sync,no_subtree_check,no_root_squash)

# Start the NFS services

systemctl start rpcbind.service

systemctl start nfs-server.service

# Enable them at boot

systemctl enable rpcbind.service

systemctl enable nfs-server.service

# Apply the export configuration

exportfs -r
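The `cat /etc/exports` above only shows the finished file. One way to add the export line without duplicating it on repeated runs is sketched below; `ensure_export` is a name invented for this example.

```shell
#!/usr/bin/env bash
# Append an export line to an exports file unless the same line is already there.
ensure_export() {
  local exports_file="$1" entry="$2"
  touch "$exports_file"
  # -x: match the whole line, -F: treat the entry as a fixed string
  grep -qxF -- "$entry" "$exports_file" || printf '%s\n' "$entry" >> "$exports_file"
}

# Usage on the NFS server (as root), followed by a re-export and a check:
#   ensure_export /etc/exports '/data/nfs_data *(rw,insecure,sync,no_subtree_check,no_root_squash)'
#   exportfs -r
#   showmount -e localhost
```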

4. Install nfs-provisioner in the k8s cluster with Helm

Add the chart repository

helm repo add azure http://mirror.azure.cn/kubernetes/charts/

Search for nfs-client-provisioner

helm search repo nfs-client-provisioner

Download the chart from the repository and (optionally) unpack it into a local directory

helm pull azure/nfs-client-provisioner

nfs-client-provisioner-1.2.10.tgz

tar -zxvf nfs-client-provisioner-1.2.10.tgz

values.yaml after modification

replicaCount: 1
strategyType: Recreate
image:
  repository: quay.io/external_storage/nfs-client-provisioner
  tag: v3.1.0-k8s1.11
  pullPolicy: IfNotPresent
nfs:
  server: 192.168.101.xx   # NFS server host
  path: /data/nfs_data     # directory exported by the NFS server
  mountOptions:
storageClass:
  create: true             # create the StorageClass
  defaultClass: true       # make it the default StorageClass
  name: nfs-client
  allowVolumeExpansion: true
  reclaimPolicy: Delete
  archiveOnDelete: true
  accessModes: ReadWriteOnce
rbac:
  create: true
podSecurityPolicy:
  enabled: false
serviceAccount:
  create: true
  name:
resources: {}
nodeSelector: {}
tolerations: []
affinity: {}

[root@node1 nfs-client-provisioner]# helm install nfs-client . -f values.yaml

Deployment succeeded

kubectl get pods

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-67cd9c9567-kjh5h 1/1 Running 1 18d

Check the default StorageClass that was created

kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client (default) cluster.local/nfs-client-provisioner Delete Immediate true 18d

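5. Use the StorageClass: define a PVC to create a PV dynamically (example)

What does a StorageClass do in the cluster?

A PVC cannot request storage from nfs-client-provisioner directly; it has to go through a StorageClass (SC) object. The core job of an SC is to create PVs dynamically according to what the PVC asks for. This saves administrator time and also lets different kinds of storage be packaged for PVCs to choose from.

Every SC contains the following three important fields, which are used when the SC needs to provision a PV dynamically:

Provisioner: the storage system that supplies the storage.

ReclaimPolicy: the reclaim policy of the PV; valid values are Delete (the default) and Retain.

Parameters: parameters the storage class uses to describe the volumes that belong to it.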
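To make the three fields concrete, the sketch below prints a StorageClass manifest roughly matching what the chart created earlier (name `nfs-client`, provisioner `cluster.local/nfs-client-provisioner`, `archiveOnDelete` as the only parameter). The chart already creates this object, so the function `render_storageclass` is purely illustrative and not part of the original setup.

```shell
#!/usr/bin/env bash
# Print a StorageClass manifest for the given class name and provisioner.
render_storageclass() {
  local name="$1" provisioner="$2"
  cat <<EOF
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ${name}
provisioner: ${provisioner}
reclaimPolicy: Delete
allowVolumeExpansion: true
parameters:
  archiveOnDelete: "true"
EOF
}

# Usage (client-side dry run only, nothing is created):
#   render_storageclass nfs-client cluster.local/nfs-client-provisioner \
#     | kubectl apply --dry-run=client -f -
```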

kubectl create namespace liang

vim pvc_dynamic.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-storage
  namespace: liang
spec:
  storageClassName: "nfs-client"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 300Mi

vim deploy-nginx.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: liang
spec:
  replicas: 2
  selector:
    matchLabels:
      app: nginx
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx
          # mount the volume named wwwroot at nginx's html directory
          volumeMounts:
            - name: wwwroot
              mountPath: /usr/share/nginx/html
          ports:
            - containerPort: 80
      # define the volume wwwroot, backed by the PVC
      volumes:
        - name: wwwroot
          persistentVolumeClaim:
            claimName: pvc-storage

kubectl apply -f pvc_dynamic.yaml

kubectl apply -f deploy-nginx.yaml

kubectl get pv,pvc -n liang

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

persistentvolume/pvc-cca921ba-2daf-4f01-a57a-427856d641da 300Mi RWX Delete Bound liang/pvc-storage nfs-client 90d

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

persistentvolumeclaim/pvc-storage Bound pvc-cca921ba-2daf-4f01-a57a-427856d641da 300Mi RWX nfs-client 90d
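If a claim does not reach Bound, the Pods that use it stay Pending, which is the failure described earlier for consul-server. A small filter can list such claims; this is a sketch that assumes the default column layout of `kubectl get pvc --no-headers` (name first, status second), and `unbound_pvcs` is a made-up name.

```shell
#!/usr/bin/env bash
# Read `kubectl get pvc --no-headers` output on stdin and print the names of
# claims whose STATUS column (2nd field) is not "Bound".
unbound_pvcs() {
  awk '$2 != "Bound" { print $1 }'
}

# Usage:
#   kubectl get pvc -n liang --no-headers | unbound_pvcs
```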

Install Consul from the Helm chart

consul-0.44.0.tgz

tar -zxvf consul-0.44.0.tgz

values.yaml after modification

global:
  enabled: true
  logLevel: "info"
  logJSON: false
  name: null
  domain: consul
  adminPartitions:
    enabled: false
    name: "default"
    service:
      type: "NodePort"   # expose via NodePort
      nodePort:
        rpc: null
        serf: null
        https: null
      annotations: null
  image: "hashicorp/consul:1.12.0"
  imagePullSecrets: []
  imageK8S: "hashicorp/consul-k8s-control-plane:0.44.0"
  datacenter: dc1
  enablePodSecurityPolicies: false
  secretsBackend:
    vault:
      enabled: false
      consulServerRole: ""
      consulClientRole: ""
      consulSnapshotAgentRole: ""
      manageSystemACLsRole: ""
      adminPartitionsRole: ""
      agentAnnotations: null
      consulCARole: ""
      ca:
        secretName: ""
        secretKey: ""
      connectCA:
        address: ""
        authMethodPath: "kubernetes"
        rootPKIPath: ""
        intermediatePKIPath: ""
        additionalConfig: |
          {}
  gossipEncryption:
    autoGenerate: false
    secretName: ""
    secretKey: ""
  recursors: []
  tls:
    enabled: false
    enableAutoEncrypt: false
    serverAdditionalDNSSANs: []
    serverAdditionalIPSANs: []
    verify: true
    httpsOnly: true
    caCert:
      secretName: null
      secretKey: null
    caKey:
      secretName: null
      secretKey: null
  enableConsulNamespaces: false
  acls:
    manageSystemACLs: false
    bootstrapToken:
      secretName: null
      secretKey: null
    createReplicationToken: false
    replicationToken:
      secretName: null
      secretKey: null
    partitionToken:
      secretName: null
      secretKey: null
  enterpriseLicense:
    secretName: null
    secretKey: null
    enableLicenseAutoload: true
  federation:
    enabled: false
    createFederationSecret: false
    primaryDatacenter: null
    primaryGateways: []
    k8sAuthMethodHost: null
  metrics:
    enabled: false
    enableAgentMetrics: false
    agentMetricsRetentionTime: 1m
    enableGatewayMetrics: true
  consulSidecarContainer:
    resources:
      requests:
        memory: "25Mi"
        cpu: "20m"
      limits:
        memory: "50Mi"
        cpu: "20m"
  imageEnvoy: "envoyproxy/envoy:v1.22.0"
  openshift:
    enabled: false
  consulAPITimeout: 5s

server:
  enabled: true
  image: null
  replicas: 3
  bootstrapExpect: null
  serverCert:
    secretName: null
  exposeGossipAndRPCPorts: false
  ports:
    serflan:
      port: 8301
  storage: 10Gi
  storageClass: "nfs-client"   # name of the StorageClass to use
  connect: false
  serviceAccount:
    annotations: null
  resources:
    requests:
      memory: "100Mi"
      cpu: "100m"
    limits:
      memory: "100Mi"
      cpu: "100m"
  securityContext:
    runAsNonRoot: true
    runAsGroup: 1000
    runAsUser: 100
    fsGroup: 1000
  containerSecurityContext:
    server: null
  updatePartition: 0
  disruptionBudget:
    enabled: true
    maxUnavailable: null
  extraConfig: |
    {}
  extraVolumes: []
  extraContainers: []
  affinity: |
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: {{ template "consul.name" . }}
              release: "{{ .Release.Name }}"
              component: server
          topologyKey: kubernetes.io/hostname
  tolerations: ""
  topologySpreadConstraints: ""
  nodeSelector: null
  priorityClassName: ""
  extraLabels: null
  annotations: null
  service:
    annotations: null
  extraEnvironmentVars: {}

externalServers:
  enabled: false
  hosts: []
  httpsPort: 8501
  tlsServerName: null
  useSystemRoots: false
  k8sAuthMethodHost: null

client:
  enabled: "-"
  image: null
  join: null
  dataDirectoryHostPath: null
  grpc: true
  nodeMeta:
    pod-name: ${HOSTNAME}
    host-ip: ${HOST_IP}
  exposeGossipPorts: false
  serviceAccount:
    annotations: null
  resources:
    requests:
      memory: "100Mi"
      cpu: "100m"
    limits:
      memory: "100Mi"
      cpu: "100m"
  securityContext:
    runAsNonRoot: true
    runAsGroup: 1000
    runAsUser: 100
    fsGroup: 1000
  containerSecurityContext:
    client: null
    aclInit: null
    tlsInit: null
  extraConfig: |
    {}
  extraVolumes: []
  extraContainers: []
  tolerations: ""
  nodeSelector: null
  affinity: null
  priorityClassName: ""
  annotations: null
  extraLabels: null
  extraEnvironmentVars: {}
  dnsPolicy: null
  hostNetwork: false
  updateStrategy: null
  snapshotAgent:
    enabled: false
    replicas: 2
    configSecret:
      secretName: null
      secretKey: null
    serviceAccount:
      annotations: null
    resources:
      requests:
        memory: "50Mi"
        cpu: "50m"
      limits:
        memory: "50Mi"
        cpu: "50m"
    caCert: null

dns:
  enabled: "-"
  enableRedirection: false
  type: ClusterIP
  clusterIP: null
  annotations: null
  additionalSpec: null

ui:
  enabled: true
  service:
    enabled: true
    type: "NodePort"   # expose consul-ui via NodePort
    port:
      http: 80
      https: 443
    nodePort:
      http: null
      https: null
    annotations: null
    additionalSpec: null
  ingress:
    enabled: false
    ingressClassName: ""
    pathType: Prefix
    hosts: []
    tls: []
    annotations: null
  metrics:
    enabled: "-"
    provider: "prometheus"
    baseURL: http://prometheus-server
  dashboardURLTemplates:
    service: ""

syncCatalog:
  enabled: false
  image: null
  default: true
  priorityClassName: ""
  toConsul: true
  toK8S: true
  k8sPrefix: null
  k8sAllowNamespaces: ["*"]
  k8sDenyNamespaces: ["kube-system", "kube-public"]
  k8sSourceNamespace: null
  consulNamespaces:
    consulDestinationNamespace: "default"
    mirroringK8S: false
    mirroringK8SPrefix: ""
  addK8SNamespaceSuffix: true
  consulPrefix: null
  k8sTag: null
  consulNodeName: "k8s-sync"
  syncClusterIPServices: true
  nodePortSyncType: ExternalFirst
  aclSyncToken:
    secretName: null
    secretKey: null
  nodeSelector: null
  affinity: null
  tolerations: null
  serviceAccount:
    annotations: null
  resources:
    requests:
      memory: "50Mi"
      cpu: "50m"
    limits:
      memory: "50Mi"
      cpu: "50m"
  logLevel: ""
  consulWriteInterval: null
  extraLabels: null

connectInject:
  enabled: false
  replicas: 2
  image: null
  default: false
  transparentProxy:
    defaultEnabled: true
    defaultOverwriteProbes: true
  metrics:
    defaultEnabled: "-"
    defaultEnableMerging: false
    defaultMergedMetricsPort: 20100
    defaultPrometheusScrapePort: 20200
    defaultPrometheusScrapePath: "/metrics"
  envoyExtraArgs: null
  priorityClassName: ""
  imageConsul: null
  logLevel: ""
  serviceAccount:
    annotations: null
  resources:
    requests:
      memory: "50Mi"
      cpu: "50m"
    limits:
      memory: "50Mi"
      cpu: "50m"
  failurePolicy: "Fail"
  namespaceSelector: |
    matchExpressions:
      - key: "kubernetes.io/metadata.name"
        operator: "NotIn"
        values: ["kube-system","local-path-storage"]
  k8sAllowNamespaces: ["*"]
  k8sDenyNamespaces: []
  consulNamespaces:
    consulDestinationNamespace: "default"
    mirroringK8S: false
    mirroringK8SPrefix: ""
  nodeSelector: null
  affinity: null
  tolerations: null
  aclBindingRuleSelector: "serviceaccount.name!=default"
  overrideAuthMethodName: ""
  aclInjectToken:
    secretName: null
    secretKey: null
  sidecarProxy:
    resources:
      requests:
        memory: null
        cpu: null
      limits:
        memory: null
        cpu: null
  initContainer:
    resources:
      requests:
        memory: "25Mi"
        cpu: "50m"
      limits:
        memory: "150Mi"
        cpu: "50m"

controller:
  enabled: false
  replicas: 1
  logLevel: ""
  serviceAccount:
    annotations: null
  resources:
    limits:
      cpu: 100m
      memory: 50Mi
    requests:
      cpu: 100m
      memory: 50Mi
  nodeSelector: null
  tolerations: null
  affinity: null
  priorityClassName: ""
  aclToken:
    secretName: null
    secretKey: null

meshGateway:
  enabled: false
  replicas: 2
  wanAddress:
    source: "Service"
    port: 443
    static: ""
  service:
    enabled: true
    type: LoadBalancer
    port: 443
    nodePort: null
    annotations: null
    additionalSpec: null
  hostNetwork: false
  dnsPolicy: null
  consulServiceName: "mesh-gateway"
  containerPort: 8443
  hostPort: null
  serviceAccount:
    annotations: null
  resources:
    requests:
      memory: "100Mi"
      cpu: "100m"
    limits:
      memory: "100Mi"
      cpu: "100m"
  initCopyConsulContainer:
    resources:
      requests:
        memory: "25Mi"
        cpu: "50m"
      limits:
        memory: "150Mi"
        cpu: "50m"
  initServiceInitContainer:
    resources:
      requests:
        memory: "50Mi"
        cpu: "50m"
      limits:
        memory: "50Mi"
        cpu: "50m"
  affinity: |
    podAntiAffinity:
      requiredDuringSchedulingIgnoredDuringExecution:
        - labelSelector:
            matchLabels:
              app: {{ template "consul.name" . }}
              release: "{{ .Release.Name }}"
              component: mesh-gateway
          topologyKey: kubernetes.io/hostname
  tolerations: null
  nodeSelector: null
  priorityClassName: ""
  annotations: null

ingressGateways:
  enabled: false
  defaults:
    replicas: 2
    service:
      type: ClusterIP
      ports:
        - port: 8080
          nodePort: null
        - port: 8443
          nodePort: null
      annotations: null
      additionalSpec: null
    serviceAccount:
      annotations: null
    resources:
      requests:
        memory: "100Mi"
        cpu: "100m"
      limits:
        memory: "100Mi"
        cpu: "100m"
    initCopyConsulContainer:
      resources:
        requests:
          memory: "25Mi"
          cpu: "50m"
        limits:
          memory: "150Mi"
          cpu: "50m"
    affinity: |
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                app: {{ template "consul.name" . }}
                release: "{{ .Release.Name }}"
                component: ingress-gateway
            topologyKey: kubernetes.io/hostname
    tolerations: null
    nodeSelector: null
    priorityClassName: ""
    terminationGracePeriodSeconds: 10
    annotations: null
    consulNamespace: "default"
  gateways:
    - name: ingress-gateway

terminatingGateways:
  enabled: false
  defaults:
    replicas: 2
    extraVolumes: []
    resources:
      requests:
        memory: "100Mi"
        cpu: "100m"
      limits:
        memory: "100Mi"
        cpu: "100m"
    initCopyConsulContainer:
      resources:
        requests:
          memory: "25Mi"
          cpu: "50m"
        limits:
          memory: "150Mi"
          cpu: "50m"
    affinity: |
      podAntiAffinity:
        requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchLabels:
                app: {{ template "consul.name" . }}
                release: "{{ .Release.Name }}"
                component: terminating-gateway
            topologyKey: kubernetes.io/hostname
    tolerations: null
    nodeSelector: null
    priorityClassName: ""
    annotations: null
    serviceAccount:
      annotations: null
    consulNamespace: "default"
  gateways:
    - name: terminating-gateway

apiGateway:
  enabled: false
  image: null
  logLevel: info
  managedGatewayClass:
    enabled: true
    nodeSelector: null
    serviceType: LoadBalancer
    useHostPorts: false
    copyAnnotations:
      service: null
  serviceAccount:
    annotations: null
  controller:
    replicas: 1
    annotations: null
    priorityClassName: ""
    nodeSelector: null
    service:
      annotations: null
  resources:
    requests:
      memory: "100Mi"
      cpu: "100m"
    limits:
      memory: "100Mi"
      cpu: "100m"
  initCopyConsulContainer:
    resources:
      requests:
        memory: "25Mi"
        cpu: "50m"
      limits:
        memory: "150Mi"
        cpu: "50m"
webhookCertManager:
  tolerations: null
prometheus:
  enabled: false
tests:
  enabled: true

helm install consul . -f values.yaml -n liang
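Instead of editing the chart's full values.yaml, Helm also accepts a small override file that is merged over the chart defaults. The sketch below writes only the server storage settings and the UI service type that the post annotates; the file name `consul-overrides.yaml` and the function name are made up here, and the result is not guaranteed to match the full file above if other defaults were changed in it.

```shell
#!/usr/bin/env bash
# Write a small override file with the storage and UI settings used in this post.
write_consul_overrides() {
  local out="$1"
  cat > "$out" <<'EOF'
server:
  replicas: 3
  storage: 10Gi
  storageClass: "nfs-client"
ui:
  enabled: true
  service:
    type: "NodePort"
EOF
}

# Usage:
#   write_consul_overrides consul-overrides.yaml
#   helm install consul hashicorp/consul --version 0.44.0 -n liang -f consul-overrides.yaml
```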

kubectl get pods -n liang

NAME READY STATUS RESTARTS AGE

consul-consul-client-m4tw9 0/1 Running 0 15d

consul-consul-client-qj8n9 1/1 Running 0 15d

consul-consul-client-vdm4h 1/1 Running 2 15d

consul-consul-server-0 1/1 Running 2 15d

consul-consul-server-1 1/1 Running 0 15d

consul-consul-server-2 1/1 Running 0 15d
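In the listing above one client Pod reports 0/1 in the READY column even though its status is Running. A quick way to pick out such Pods is to compare the two halves of that column; the helper below is a sketch with a made-up name and assumes the default `kubectl get pods --no-headers` column order.

```shell
#!/usr/bin/env bash
# Read `kubectl get pods --no-headers` output on stdin and print the names of
# Pods whose READY column (2nd field, "ready/total") is not fully ready.
not_ready_pods() {
  awk '{ split($2, r, "/"); if (r[1] != r[2]) print $1 }'
}

# Usage:
#   kubectl get pods -n liang --no-headers | not_ready_pods
# Then inspect a reported Pod with `kubectl describe pod` or `kubectl logs`.
```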

Expose consul-server through a NodePort

cat consul-server-web.yaml

apiVersion: v1
kind: Service
metadata:
  labels:
    component: server
  name: consul-server-web
  namespace: liang
spec:
  externalTrafficPolicy: Cluster
  ports:
    - name: http
      nodePort: 32500
      port: 8500
      protocol: TCP
      targetPort: 8500
  selector:
    component: server
  sessionAffinity: None
  type: NodePort

kubectl get svc -n liang

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

consul-consul-dns ClusterIP 10.108.151.28 <none> 53/TCP,53/UDP 15d

consul-consul-server ClusterIP None <none> 8500/TCP,8301/TCP,8301/UDP,8302/TCP,8302/UDP,8300/TCP,8600/TCP,8600/UDP 15d

consul-consul-ui NodePort 10.102.149.211 <none> 80:31652/TCP 15d

consul-server-web NodePort 10.99.43.57 <none> 8500:32500/TCP 15d

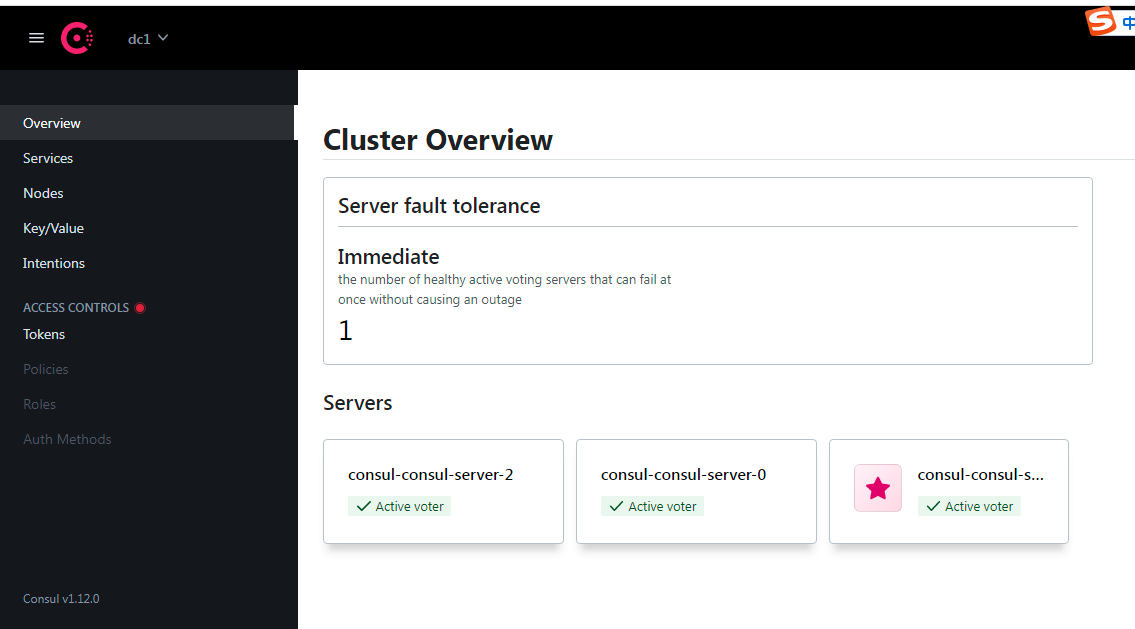

Open the NodePort exposed for consul-server in a browser
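The same port can also be checked from the command line. The sketch below pulls the NodePort out of the service listing; `node_port_of` is a hypothetical helper, and it assumes the default `kubectl get svc --no-headers` columns, where the fifth field holds mappings such as `8500:32500/TCP`.

```shell
#!/usr/bin/env bash
# Read `kubectl get svc --no-headers` output on stdin and print the NodePort
# mapped to the given service port of the named service.
node_port_of() {
  local svc="$1" port="$2"
  awk -v svc="$svc" -v port="$port" '
    $1 == svc {
      n = split($5, maps, ",")
      for (i = 1; i <= n; i++) {
        # each NodePort mapping looks like 8500:32500/TCP
        split(maps[i], parts, "[:/]")
        if (parts[1] == port && parts[2] ~ /^[0-9]+$/) print parts[2]
      }
    }'
}

# Usage (replace NODE_IP with the address of any cluster node):
#   port=$(kubectl get svc -n liang --no-headers | node_port_of consul-server-web 8500)
#   curl "http://NODE_IP:${port}/v1/status/leader"
```

The `/v1/status/leader` endpoint of the Consul HTTP API returns the address of the current Raft leader, which makes it a convenient smoke test.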

References

Consul:

https://www.consul.io/docs/k8s/installation/install#helm-chart-installation

https://www.consul.io/docs/k8s/helm

https://www.modb.pro/db/74448

nfs-client-provisioner:

https://blog.csdn.net/weixin_45029822/article/details/108147371

https://blog.csdn.net/wzy_168/article/details/103123345

https://www.modb.pro/db/74448
