Fetching the HTML of Tieba pages and writing it to files

```python
#!/usr/bin/env python
# -*- coding:utf-8 -*-
from urllib import request
from random import choice
import urllib.parse


def loadPage(url, filename):
    '''
    Purpose: fetch the response body for the given url
    url: the address to crawl
    filename: name of the file being processed
    '''
    USER_AGENTS = [
        "Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1; AcooBrowser; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        "Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.0; Acoo Browser; SLCC1; .NET CLR 2.0.50727; Media Center PC 5.0; .NET CLR 3.0.04506)",
        "Mozilla/4.0 (compatible; MSIE 7.0; AOL 9.5; AOLBuild 4337.35; Windows NT 5.1; .NET CLR 1.1.4322; .NET CLR 2.0.50727)",
        "Mozilla/5.0 (Windows; U; MSIE 9.0; Windows NT 9.0; en-US)",
        "Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; Win64; x64; Trident/5.0; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 2.0.50727; Media Center PC 6.0)",
        "Mozilla/5.0 (compatible; MSIE 8.0; Windows NT 6.0; Trident/4.0; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; .NET CLR 1.0.3705; .NET CLR 1.1.4322)",
        "Mozilla/4.0 (compatible; MSIE 7.0b; Windows NT 5.2; .NET CLR 1.1.4322; .NET CLR 2.0.50727; InfoPath.2; .NET CLR 3.0.04506.30)",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN) AppleWebKit/523.15 (KHTML, like Gecko, Safari/419.3) Arora/0.3 (Change: 287 c9dfb30)",
        "Mozilla/5.0 (X11; U; Linux; en-US) AppleWebKit/527+ (KHTML, like Gecko, Safari/419.3) Arora/0.6",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-US; rv:1.8.1.2pre) Gecko/20070215 K-Ninja/2.1.1",
        "Mozilla/5.0 (Windows; U; Windows NT 5.1; zh-CN; rv:1.9) Gecko/20080705 Firefox/3.0 Kapiko/3.0",
        "Mozilla/5.0 (X11; Linux i686; U;) Gecko/20070322 Kazehakase/0.4.5",
        "Mozilla/5.0 (X11; U; Linux i686; en-US; rv:1.9.0.8) Gecko Fedora/1.9.0.8-1.fc10 Kazehakase/0.5.6",
        "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.11 (KHTML, like Gecko) Chrome/17.0.963.56 Safari/535.11",
        "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_7_3) AppleWebKit/535.20 (KHTML, like Gecko) Chrome/19.0.1036.7 Safari/535.20",
        "Opera/9.80 (Macintosh; Intel Mac OS X 10.6.8; U; fr) Presto/2.9.168 Version/11.52"
    ]
    # Pick a random User-Agent for this request
    user_agent = choice(USER_AGENTS)
    print('Downloading ' + filename)
    req = request.Request(url)
    req.add_header('User-Agent', user_agent)
    # Read the raw bytes and decode them as UTF-8
    with request.urlopen(req) as response:
        return response.read().decode('utf-8')


def writePage(html, filename):
    '''
    Purpose: write the HTML to a file
    html: content of the server response
    filename: name of the file to write
    '''
    print('Saving ' + filename)

    # The with statement closes the file automatically;
    # it is equivalent to open / write / close
    with open(filename, 'w', encoding='UTF-8') as f:
        f.write(html)
    print('-' * 30)


def tiebaSpider(url, start, end):
    '''
    Purpose: Tieba spider scheduler, builds and processes the url of each page
    url: the leading part of the Tieba url
    start: first page
    end: last page
    '''
    for page in range(start, end + 1):
        # Each list page holds 50 posts, so page N starts at offset (N-1)*50
        pn = (page - 1) * 50

        filename = 'page_' + str(page) + '.html'
        # URL of this particular page of the forum
        fulurl = url + "&pn=" + str(pn)

        # print(fulurl)
        html = loadPage(fulurl, filename)
        writePage(html, filename)

    print('Thank you')


# The block under this if runs only when the .py file is executed directly
if __name__ == '__main__':
    url = 'http://tieba.baidu.com/f?'
    key = input('Please input keywords:')
    beginPage = int(input('Please input start pageNum:'))
    endPage = int(input('Please input end pageNum:'))

    # URL-encode the keyword
    m = {'kw': key}
    keyword = urllib.parse.urlencode(m)
    # print(keyword)
    fulurl = url + keyword

    tiebaSpider(fulurl, beginPage, endPage)
```
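The URL handling in the script can be checked without touching the network. The following is a minimal sketch, assuming the same base URL and the same 50-posts-per-page offset the script uses; `build_page_url`, `BASE_URL`, and `PAGE_SIZE` are hypothetical names introduced here for illustration and are not part of the original script.

```python
from urllib.parse import urlencode

BASE_URL = 'http://tieba.baidu.com/f?'  # same base URL as the script
PAGE_SIZE = 50  # assumption taken from the script's (page - 1) * 50


def build_page_url(keyword, page, base_url=BASE_URL, page_size=PAGE_SIZE):
    """Return the list-page URL for a 1-based page number (hypothetical helper)."""
    if page < 1:
        raise ValueError('page numbers start at 1')
    # urlencode percent-encodes the keyword (UTF-8 by default) and joins
    # the two parameters with "&", so no manual string concatenation is needed.
    query = urlencode({'kw': keyword, 'pn': (page - 1) * page_size})
    return base_url + query


if __name__ == '__main__':
    for page in range(1, 4):
        print(build_page_url('python', page))
```

Building both `kw` and `pn` in a single `urlencode` call keeps the encoding in one place, which makes the generated URLs easy to print and inspect before any page is downloaded.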

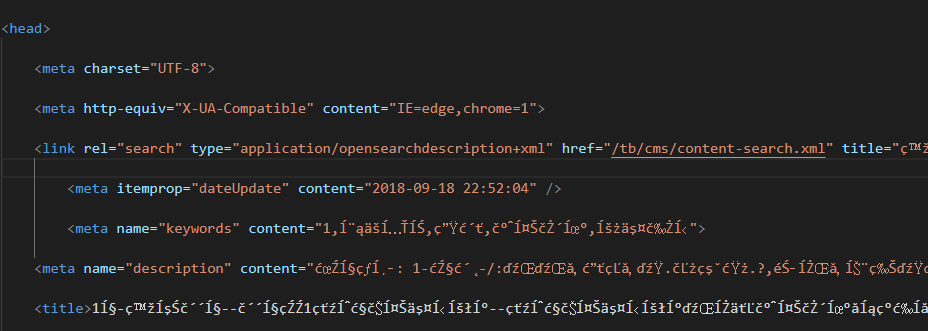

Running the script produces the following data in the saved file:

The saved text comes out garbled!
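The post does not say what causes the garbling. One possible cause, offered here only as an assumption, is a mismatch between the encoding the server actually sent and the encoding used to decode or view the text; opening the UTF-8 file in a viewer that expects another encoding would look similar. The sketch below shows one way to decode the raw bytes using the charset declared in the `Content-Type` header, falling back to a short list of guesses. `decode_body` and its `fallbacks` parameter are hypothetical names, not part of the original script.

```python
def decode_body(raw, content_type='', fallbacks=('utf-8', 'gbk')):
    """Decode response bytes with the declared charset, then with fallbacks.

    Hypothetical helper: `raw` is the bytes returned by read(), and
    `content_type` is the value of the Content-Type response header.
    """
    candidates = []
    # Pull "charset=..." out of a header such as "text/html; charset=GBK".
    for part in content_type.split(';'):
        name, _, value = part.strip().partition('=')
        if name.lower() == 'charset' and value:
            candidates.append(value.strip('"\' '))
    candidates.extend(fallbacks)

    for encoding in candidates:
        try:
            return raw.decode(encoding)
        except (UnicodeDecodeError, LookupError):
            # Wrong guess or unknown codec name: try the next candidate.
            continue
    # Last resort: keep what can be decoded and mark the rest.
    return raw.decode('utf-8', errors='replace')


if __name__ == '__main__':
    sample = '贴吧'.encode('gbk')
    print(decode_body(sample, 'text/html; charset=GBK'))
```

With `urllib`, the header value can be read from the response object with `response.headers.get('Content-Type', '')` before calling `read()`. The fallback order is a guess: a permissive codec such as GBK can decode bytes that were never GBK, so the declared charset should be preferred whenever the server provides one.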

