package demo;

import java.io.FileOutputStream;
import java.io.InputStream;
import java.io.OutputStream;
import java.util.Arrays;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.BlockLocation;
import org.apache.hadoop.fs.FileStatus;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.IOUtils;
import org.junit.Test;

public class TestMetaData {

@Test

public void test1() throws Exception{

Configuration conf = new Configuration();

conf.set("fs.defaultFS","hdfs://bigdata11:9000");

FileSystem client = FileSystem.get(conf);

FileStatus[] fsList = client.listStatus(new Path("/folder1"));

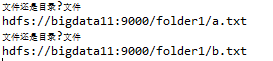

for(FileStatus s:fsList) {

System.out.println("文件还是目录?"+(s.isDirectory()? "目录" : "文件"));

System.out.println(s.getPath().toString());

}

}

    // Get the block information for a file
    @Test
    public void test2() throws Exception {
        Configuration conf = new Configuration();
        conf.set("fs.defaultFS", "hdfs://bigdata11:9000");

        // Create the HDFS client
        FileSystem client = FileSystem.get(conf);

        // Get the file's status
        FileStatus fs = client.getFileStatus(new Path("/folder1/a.txt"));

        // Get the locations of every block in the byte range [0, file length)
        BlockLocation[] blkLocations = client.getFileBlockLocations(fs, 0, fs.getLen());
        for (BlockLocation b : blkLocations) {
            // Hosts holding this block: an array, because the replicas (redundant copies)
            // of one block are stored on different hosts
            System.out.println(Arrays.toString(b.getHosts()));

            // Names of the datanodes hosting this block, in IP:xferPort form
            System.out.println(Arrays.toString(b.getNames()));
        }
    }

}
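
The loop in `test2` runs once per block, so the number of lines it prints depends on how the file length divides into blocks. The following is a minimal sketch of that arithmetic only, using plain Java with no Hadoop dependency. The names `BlockRangeSketch`, `BlockRange`, and `split` are hypothetical, and the 128 MB block size is an assumption (the cluster's `dfs.blocksize` setting may differ). On a real cluster the actual values come from `BlockLocation.getOffset()` and `BlockLocation.getLength()`.

```java
import java.util.ArrayList;
import java.util.List;

public class BlockRangeSketch {

    /** Offset and length of one block within a file (illustrative type, not a Hadoop class). */
    static final class BlockRange {
        final long offset;
        final long length;

        BlockRange(long offset, long length) {
            this.offset = offset;
            this.length = length;
        }
    }

    /** Splits a file of fileLen bytes into consecutive ranges of at most blockSize bytes. */
    static List<BlockRange> split(long fileLen, long blockSize) {
        if (fileLen < 0 || blockSize <= 0) {
            throw new IllegalArgumentException("fileLen must be >= 0 and blockSize must be > 0");
        }
        List<BlockRange> ranges = new ArrayList<>();
        for (long offset = 0; offset < fileLen; offset += blockSize) {
            // The last block may be shorter than blockSize
            ranges.add(new BlockRange(offset, Math.min(blockSize, fileLen - offset)));
        }
        return ranges;
    }

    public static void main(String[] args) {
        long blockSize = 128L * 1024 * 1024; // assumed block size, not read from any cluster
        long fileLen = 300L * 1024 * 1024;   // hypothetical file length
        for (BlockRange r : split(fileLen, blockSize)) {
            System.out.println("offset=" + r.offset + " length=" + r.length);
        }
    }
}
```

Under these assumptions a 300 MB file yields three ranges, the last one 44 MB long, which would correspond to three iterations of the loop in `test2`.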

