Terraform: Automating AWS EC2 Provisioning

1. Requirements:

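When the DevOps team is large, or when EC2 instance requests from external teams have become part of the daily workload, creating instances by hand stops scaling. We decided to automate the process with Terraform so that every instance is traceable and the blind spots of manual operation are reduced.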

2. Goals:

1. Increase automation coverage

2. Standardize the team's instance creation process

3. Implementation: Terraform, wrapped by Terragrunt and orchestrated by Terramate from a GitHub Actions workflow

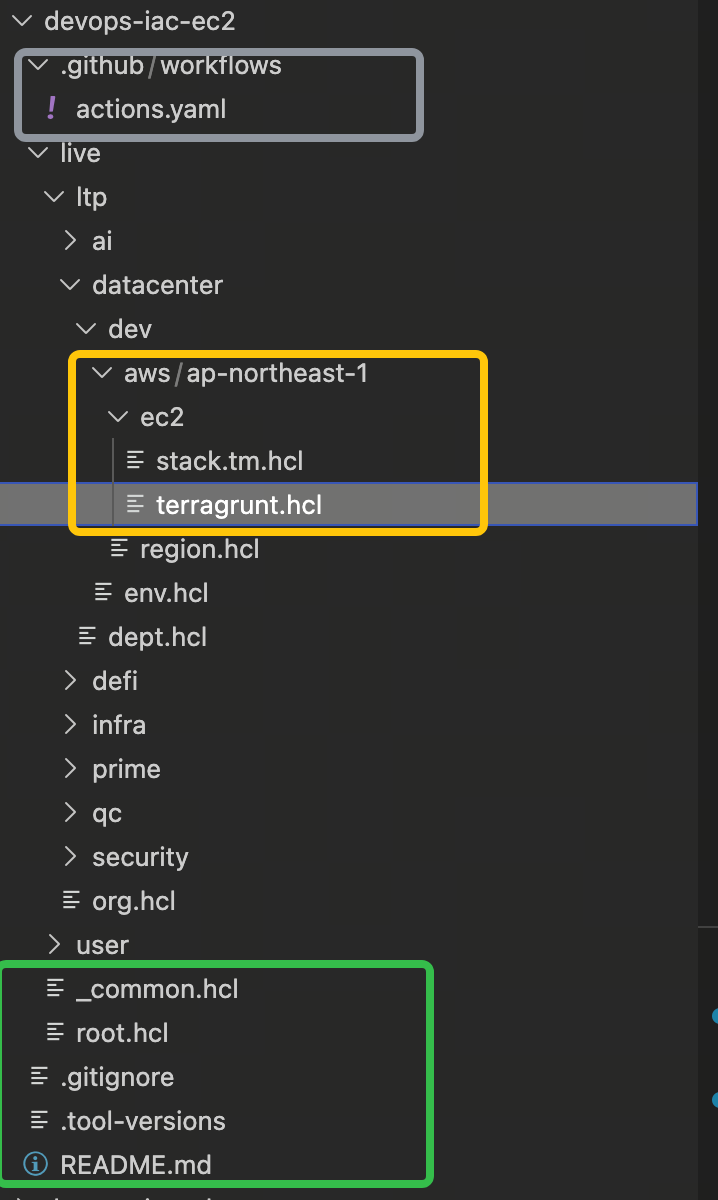

4. Directory structure

5. Implementation code

    name: Terragrunt Workflow

    on:
      push:
        branches:
          - main

    jobs:
      plan:
        name: Plan Terragrunt changes in changed Terramate stacks
        runs-on: [self-hosted, ltp]

        env:
          GITHUB_TOKEN: ${{ github.token }}
          TERRAGRUNT_LOG_FORMAT: bare

        permissions:
          id-token: write
          contents: read
          pull-requests: write
          checks: read
        outputs:
          changed-stack: ${{ steps.list.outputs.stdout }}
        steps:

          - name: Configure git credentials
            run: |
              git config --global url."https://x-access-token:${{ secrets.SRE_TOKEN }}@github.com/".insteadOf "https://github.com/"

          ### Check out the code

          - name: Checkout
            uses: actions/checkout@v4
            with:
              fetch-depth: 0

          ### Install tooling

          # - name: Install Terramate
          #   uses: terramate-io/terramate-action@v2

          - name: Install asdf
            uses: asdf-vm/actions/setup@8bf48566456f7655b13be1c8dd6118862bee7aaf

          - name: Install Terraform and Terragrunt with asdf
            run: |
              asdf plugin add terramate
              asdf plugin add terraform
              asdf plugin add terragrunt
              asdf install terramate
              asdf install terraform
              asdf install terragrunt

          ### Linting

          - name: Check Terramate formatting
            run: terramate fmt --check

          ### Check for changed stacks

          - name: List changed stacks
            id: list
            run: |
              output=$(terramate list --changed)
              # Several changed stacks produce a multi-line value, which
              # needs the delimiter form of GITHUB_OUTPUT.
              {
                echo "stdout<<EOF"
                echo "$output"
                echo "EOF"
              } >> "$GITHUB_OUTPUT"
              echo "$output"

          ### Run the Terragrunt preview via Terramate in each changed stack

          - name: Initialize Terragrunt in changed stacks
            if: steps.list.outputs.stdout
            run: terramate run --parallel 1 --changed -- terragrunt init -lock-timeout=5m

          - name: Plan changed stacks
            if: steps.list.outputs.stdout
            run: |
              terramate run \
                --parallel 5 \
                --changed \
                --continue-on-error \
                --terragrunt \
                -- \
                terragrunt plan -out out.tfplan \
                -lock=false

      deploy:
        name: Deploy Terragrunt changes in changed Terramate stacks

        env:
          GITHUB_TOKEN: ${{ github.token }}
          JUMPSERVER_TOKEN: ${{ secrets.JUMPSERVER_TOKEN }}
          JUMPSERVER_TOKEN_NEW: ${{ secrets.JUMPSERVER_TOKEN_NEW }}
          TERRAGRUNT_LOG_FORMAT: bare

        permissions:
          id-token: write
          contents: read
          pull-requests: read
          checks: read

        runs-on: [self-hosted, ltp]

        needs: [ plan ]
        environment: 'production'
        if: github.ref == 'refs/heads/main' && needs.plan.outputs.changed-stack
        steps:
          ### Check out the code

          - name: Checkout
            uses: actions/checkout@v4
            with:
              fetch-depth: 0

          ### Install tooling

          # - name: Install Terramate
          #   uses: terramate-io/terramate-action@v2

          - name: Install asdf
            uses: asdf-vm/actions/setup@8bf48566456f7655b13be1c8dd6118862bee7aaf

          - name: Install Terraform and Terragrunt with asdf
            run: |
              asdf plugin add terramate
              asdf plugin add terraform
              asdf plugin add terragrunt
              asdf install terramate
              asdf install terraform
              asdf install terragrunt

          ### Check for changed stacks

          - name: List changed stacks
            id: list
            run: |
              output=$(terramate list --changed)
              # Same delimiter form as in the plan job, for multi-line values.
              {
                echo "stdout<<EOF"
                echo "$output"
                echo "EOF"
              } >> "$GITHUB_OUTPUT"
              echo "$output"

          ### Run the Terragrunt deployment via Terramate in each changed stack

          - name: Run Terragrunt init on changed stacks
            if: steps.list.outputs.stdout
            id: init
            run: |
              terramate run --changed -- terragrunt init

          - name: Plan changes on changed stacks
            if: steps.list.outputs.stdout
            id: plan
            run: terramate run --changed -- terragrunt plan -lock-timeout=5m -out out.tfplan

          - name: Pause for 15 seconds
            run: sleep 15

          - name: Apply planned changes on changed stacks
            id: apply
            if: steps.list.outputs.stdout
            run: |
              terramate run \
                --changed \
                --terragrunt \
                -- \
                terragrunt apply -input=false -auto-approve -lock-timeout=5m out.tfplan

          - name: Run drift detection
            if: steps.list.outputs.stdout && !cancelled() && steps.apply.outcome != 'skipped'
            run: |
              terramate run \
                --changed \
                --terragrunt \
                -- \
                terragrunt plan -out drift.tfplan -detailed-exitcode

    # Terraform files
    *.tfstate
    *.tfstate.*
    *.tfvars
    *.tfvars.json
    *.tfplan
    *.tfplan.*
    *.terraform.lock.hcl
    **/.terraform/*

    # Terragrunt files
    *.terragrunt-cache/
    *.terragrunt-debug

    # General
    .crash
    *.log
    *.bak
    *.tmp
    *.swp
    *.backup
    *.old
    *.orig
    .DS_Store
    .idea/
    .vscode/
    *.iml
    terramate.tm.hcl

    locals {
      # account_vars = read_terragrunt_config(find_in_parent_folders("account.hcl"))
      # account_name = local.account_vars.locals.account_name
      # account_id   = local.account_vars.locals.aws_account_id
      region_vars = read_terragrunt_config(find_in_parent_folders("region.hcl"))
      aws_region  = local.region_vars.locals.aws_region
    }

    generate "backend" {
      path      = "backend.tf"
      if_exists = "overwrite_terragrunt"
      contents  = <<EOF
    terraform {
      backend "s3" {
        bucket  = "ltp-iac-terraform-state"
        key     = "live/${path_relative_to_include()}/terraform.tfstate"
        region  = "ap-northeast-1"
        encrypt = true
      }
    }
    EOF
    }

    generate "provider" {
      path      = "provider.tf"
      if_exists = "overwrite_terragrunt"
      contents  = <<EOF
    terraform {
      required_providers {
        aws = {
          source  = "hashicorp/aws"
          version = ">= 6.0.0"
        }
        null = {
          source  = "hashicorp/null"
          version = ">= 3.2.3"
        }
      }
    }
    provider "aws" {
      region = "${local.aws_region}"
    }
    EOF
    }
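To make the `key` expression in the backend block concrete, here is roughly what the generated `backend.tf` could look like for one stack. The stack path `datacenter/dev/ap-northeast-1/ec2` is a hypothetical example, since the post does not spell out the directory layout; `path_relative_to_include()` resolves to the stack's path relative to the included root file.

```hcl
# Sketch of the rendered backend.tf, assuming the stack's terragrunt.hcl lives
# at the HYPOTHETICAL path datacenter/dev/ap-northeast-1/ec2 below root.hcl.
terraform {
  backend "s3" {
    bucket  = "ltp-iac-terraform-state"
    # "live/" + path_relative_to_include() + "/terraform.tfstate"
    key     = "live/datacenter/dev/ap-northeast-1/ec2/terraform.tfstate"
    region  = "ap-northeast-1"
    encrypt = true
  }
}
```

Because the key follows the directory path, each stack gets its own state object in the shared bucket, and moving a stack to a different directory changes where its state is looked up.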

    terragrunt 0.72.4
    terramate 0.13.0
    terraform 1.8.2

    locals {
      org_vars    = read_terragrunt_config(find_in_parent_folders("org.hcl"))
      dept_vars   = read_terragrunt_config(find_in_parent_folders("dept.hcl"))
      env_vars    = read_terragrunt_config(find_in_parent_folders("env.hcl"))
      region_vars = read_terragrunt_config(find_in_parent_folders("region.hcl"))

      aws_region = local.region_vars.locals.aws_region

      default_tags = {
        "iac/managed_by" = "terraform"
        org              = local.org_vars.locals.org
        dept             = local.dept_vars.locals.dept
        env              = local.env_vars.locals.env
      }
    }
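The locals above read an `org.hcl` file, which the post does not list alongside `region.hcl`, `env.hcl` and `dept.hcl`. A minimal sketch of what it might contain, assuming it follows the same pattern as the other files and sits in a parent directory of the stack; the value is a placeholder, not taken from the source:

```hcl
# org.hcl (hypothetical; this file is not shown in the post)
locals {
  # Placeholder organisation name; read as local.org_vars.locals.org
  org = "example-org"
}
```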

---stack.tm.hcl

    stack {
      name        = "ec2"
      description = "ec2"
      id          = "0c0abb8c-60b9-4f54-bb3e-0420a48694a8"
    }

---terragrunt.hcl

    terraform {
      source = "git::https://github.com/xxx/devops-iac-terraform-modules.git//modules/aws/ec2?ref=main"
    }

    include "root" {
      path = find_in_parent_folders("root.hcl")
    }

    include "common" {
      path   = "${dirname(find_in_parent_folders("root.hcl"))}/_common.hcl"
      expose = true
    }

    locals {
      default_tags = include.common.locals.default_tags
    }

    inputs = {
      instance_list = {
        "aws-jp-dev-data-realtime-kafka-producer-pressure-test-01" = {
          ami_id          = "ami-02b2ab9f421c24176"
          instance_type   = "c6a.xlarge"
          key_name        = "ltp-server"
          subnet_id       = "subnet-00d37642a7b0a1cea" // ap-northeast-1a, ltp-2
          security_groups = ["sg-056b7dab6c6a5b5e1", "sg-0ac036112b06adad5"] // feilian & devops-base

          create_eip        = true
          add_to_jumpserver = true

          root_block_device = {
            delete_on_termination = true
            volume_size           = 20
            volume_type           = "gp3"
            iops                  = 3000
            throughput            = 128
          }

          data_block_device = {
            "data" = {
              device_name = "/dev/sdf"
              volume_size = 50
              volume_type = "gp3"
              iops        = 3000
              throughput  = 128
            }
          }

          jumpserver_admin_user = "ltp-server-root"

          tags = merge(local.default_tags, {
            team = "datacenter"
          })
        },
      }
    }

---region.hcl

    locals {
      aws_region = "ap-northeast-1"
    }

---env.hcl

    locals {
      env = "dev"
    }

---dept.hcl

    locals {
      dept = "datacenter"
    }
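The `modules/aws/ec2` module that `terragrunt.hcl` sources is not shown in the post. As a rough idea of how such a module could consume `instance_list`, here is a minimal sketch. It is an assumption, not the author's module: the resource names and the `Name` tag are illustrative, and it covers only a subset of the inputs (data volumes and JumpServer registration are left out).

```hcl
# Hypothetical sketch of a module consuming instance_list; the real
# modules/aws/ec2 implementation is not shown in the post.
variable "instance_list" {
  description = "Map of instance name to its settings."
  type = map(object({
    ami_id          = string
    instance_type   = string
    key_name        = string
    subnet_id       = string
    security_groups = list(string)
    create_eip      = optional(bool, false)
    root_block_device = object({
      delete_on_termination = bool
      volume_size           = number
      volume_type           = string
      iops                  = number
      throughput            = number
    })
    tags = optional(map(string), {})
  }))
}

# One EC2 instance per map entry, keyed by the instance name.
resource "aws_instance" "this" {
  for_each = var.instance_list

  ami                    = each.value.ami_id
  instance_type          = each.value.instance_type
  key_name               = each.value.key_name
  subnet_id              = each.value.subnet_id
  vpc_security_group_ids = each.value.security_groups

  root_block_device {
    delete_on_termination = each.value.root_block_device.delete_on_termination
    volume_size           = each.value.root_block_device.volume_size
    volume_type           = each.value.root_block_device.volume_type
    iops                  = each.value.root_block_device.iops
    throughput            = each.value.root_block_device.throughput
  }

  # Using the map key as the Name tag is an assumption of this sketch.
  tags = merge(each.value.tags, { Name = each.key })
}

# Elastic IP only for entries that set create_eip = true.
resource "aws_eip" "this" {
  for_each = { for name, cfg in var.instance_list : name => cfg if cfg.create_eip }

  domain   = "vpc"
  instance = aws_instance.this[each.key].id
}
```

Keying both resources by instance name means adding or removing one entry in `instance_list` touches only that instance in the plan, which fits the request-driven workflow described above.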

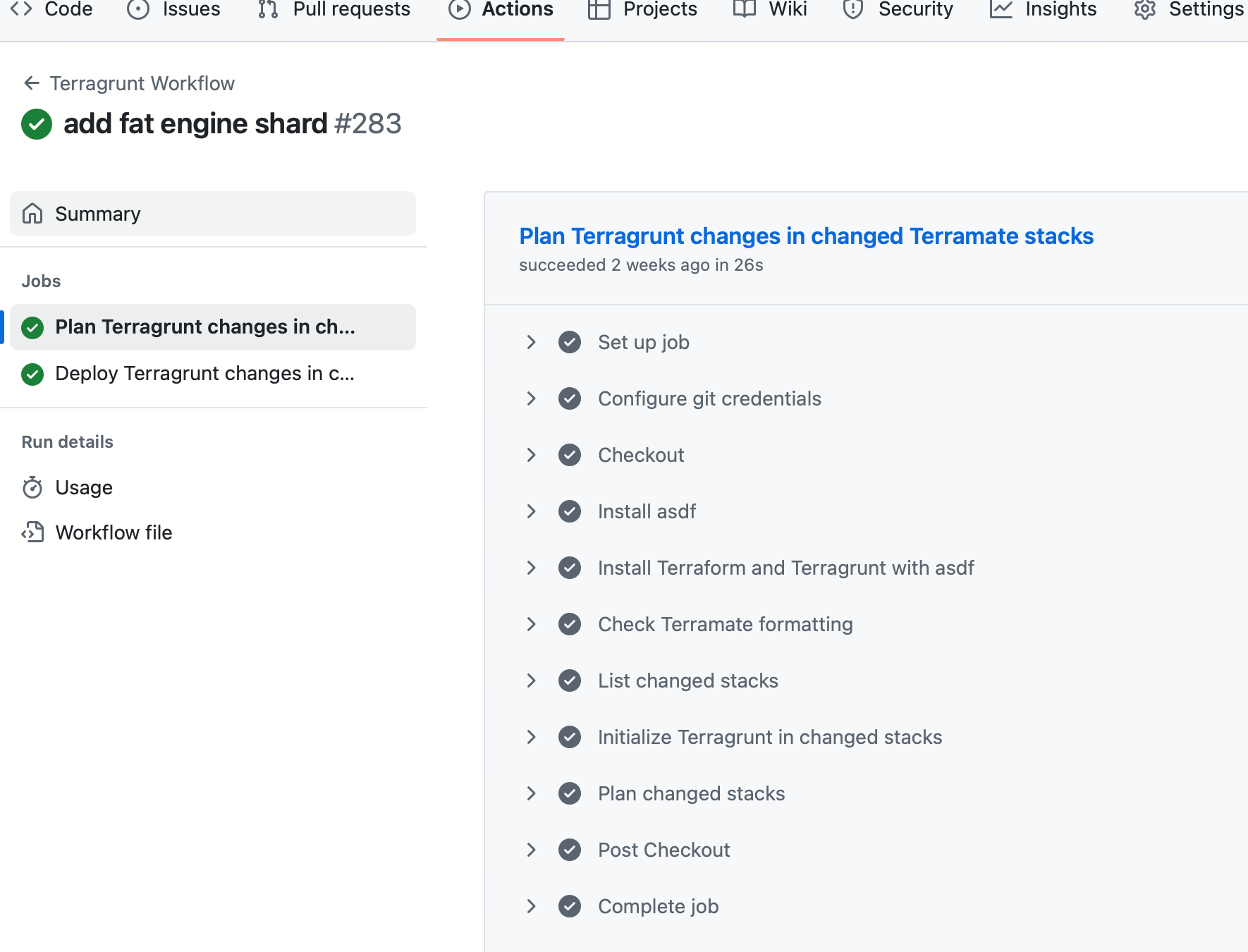

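Once the change is committed and pushed, the workflow creates the EC2 instance automatically, ready for use in automated builds.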

http://www.cnblogs.com/Jame-mei

Zhejiang Public Network Security Registration No. 33010602011771