Prometheus + Mimir + N9e: aggregating monitoring metrics from all clusters and alerting via Lark and phone calls

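- Requirement 1: metrics from the Prometheus instances in different clusters are siloed from one another and cannot be aggregated into a single dashboard.

- Requirement 2: alerting rules are scattered across multiple Prometheus instances and need to be managed centrally.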


Deploy kube-prometheus-stack



```yaml
nameOverride: ""
namespaceOverride: ""
kubeTargetVersionOverride: ""
kubeVersionOverride: ""
fullnameOverride: ""
commonLabels: {}
crds:
  enabled: true
  upgradeJob:
    enabled: false
    forceConflicts: false
    image:
      busybox:
        registry: docker.io
        repository: busybox
        tag: "latest"
        sha: ""
        pullPolicy: IfNotPresent
      kubectl:
        registry: registry.k8s.io
        repository: kubectl
        tag: ""  # defaults to the Kubernetes version
        sha: ""
        pullPolicy: IfNotPresent
    env: {}
    resources: {}
    extraVolumes: []
    extraVolumeMounts: []
    nodeSelector: {}
    affinity: {}
    tolerations: []
    topologySpreadConstraints: []
    labels: {}
    annotations: {}
    podLabels: {}
    podAnnotations: {}
    serviceAccount:
      create: false
      name: "prometheus-k8s"
      annotations:
        eks.amazonaws.com/role-arn: arn:aws:iam::123456789:role/infra-prometheus-role
      labels: {}
      automountServiceAccountToken: true
    containerSecurityContext:
      allowPrivilegeEscalation: false
      readOnlyRootFilesystem: true
      capabilities:
        drop:
          - ALL
    podSecurityContext:
      fsGroup: 65534
      runAsGroup: 65534
      runAsNonRoot: true
      runAsUser: 65534
      seccompProfile:
        type: RuntimeDefault
customRules: {}
defaultRules:
  create: true
  rules:
    alertmanager: false
    etcd: true
    configReloaders: true
    general: true
    k8sContainerCpuUsageSecondsTotal: true
    k8sContainerMemoryCache: true
    k8sContainerMemoryRss: true
    k8sContainerMemorySwap: true
    k8sContainerResource: true
    k8sContainerMemoryWorkingSetBytes: true
    k8sPodOwner: true
    kubeApiserverAvailability: true
    kubeApiserverBurnrate: true
    kubeApiserverHistogram: true
    kubeApiserverSlos: true
    kubeControllerManager: true
    kubelet: true
    kubeProxy: true
    kubePrometheusGeneral: true
    kubePrometheusNodeRecording: true
    kubernetesApps: true
    kubernetesResources: true
    kubernetesStorage: true
    kubernetesSystem: true
    kubeSchedulerAlerting: true
    kubeSchedulerRecording: true
    kubeStateMetrics: true
    network: true
    node: true
    nodeExporterAlerting: true
    nodeExporterRecording: true
    prometheus: true
    prometheusOperator: true
    windows: true
  appNamespacesOperator: "=~"
  appNamespacesTarget: ".*"
  keepFiringFor: ""
  labels: {}
  annotations: {}
  additionalRuleLabels: {}
  additionalRuleAnnotations: {}
  additionalRuleGroupLabels:
    alertmanager: {}
    etcd: {}
    configReloaders: {}
    general: {}
    k8sContainerCpuUsageSecondsTotal: {}
    k8sContainerMemoryCache: {}
    k8sContainerMemoryRss: {}
    k8sContainerMemorySwap: {}
    k8sContainerResource: {}
    k8sPodOwner: {}
    kubeApiserverAvailability: {}
    kubeApiserverBurnrate: {}
    kubeApiserverHistogram: {}
    kubeApiserverSlos: {}
    kubeControllerManager: {}
    kubelet: {}
    kubeProxy: {}
    kubePrometheusGeneral: {}
    kubePrometheusNodeRecording: {}
    kubernetesApps: {}
    kubernetesResources: {}
    kubernetesStorage: {}
    kubernetesSystem: {}
    kubeSchedulerAlerting: {}
    kubeSchedulerRecording: {}
    kubeStateMetrics: {}
    network: {}
    node: {}
    nodeExporterAlerting: {}
    nodeExporterRecording: {}
    prometheus: {}
    prometheusOperator: {}
  additionalRuleGroupAnnotations:
    alertmanager: {}
    etcd: {}
    configReloaders: {}
    general: {}
    k8sContainerCpuUsageSecondsTotal: {}
    k8sContainerMemoryCache: {}
    k8sContainerMemoryRss: {}
    k8sContainerMemorySwap: {}
    k8sContainerResource: {}
    k8sPodOwner: {}
    kubeApiserverAvailability: {}
    kubeApiserverBurnrate: {}
    kubeApiserverHistogram: {}
    kubeApiserverSlos: {}
    kubeControllerManager: {}
    kubelet: {}
    kubeProxy: {}
    kubePrometheusGeneral: {}
    kubePrometheusNodeRecording: {}
    kubernetesApps: {}
    kubernetesResources: {}
    kubernetesStorage: {}
    kubernetesSystem: {}
    kubeSchedulerAlerting: {}
    kubeSchedulerRecording: {}
    kubeStateMetrics: {}
    network: {}
    node: {}
    nodeExporterAlerting: {}
    nodeExporterRecording: {}
    prometheus: {}
    prometheusOperator: {}
  additionalAggregationLabels: []
  runbookUrl: "https://runbooks.prometheus-operator.dev/runbooks"
  node:
    fsSelector: 'fstype!=""'
  disabled: {}
additionalPrometheusRulesMap: {}
global:
  rbac:
    create: true
    pspEnabled: false
    createAggregateClusterRoles: false
  imageRegistry: ""
  imagePullSecrets: []
windowsMonitoring:
  enabled: false
prometheus-windows-exporter:
  prometheus:
    monitor:
      enabled: true
      jobLabel: jobLabel
  releaseLabel: true
  podLabels:
    jobLabel: windows-exporter
  config: |-
    collectors:
      enabled: '[defaults],memory,container'
alertmanager:
  enabled: false
  namespaceOverride: ""
  annotations: {}
  additionalLabels: {}
  apiVersion: v2
  enableFeatures: []
  forceDeployDashboards: false
  networkPolicy:
    enabled: false
    policyTypes:
      - Ingress
    gateway:
      namespace: ""
      podLabels: {}
    additionalIngress: []
    egress:
      enabled: false
      rules: []
    enableClusterRules: true
    monitoringRules:
      prometheus: true
      configReloader: true
  serviceAccount:
    create: true
    name: "prometheus-k8s"
    annotations:
      eks.amazonaws.com/role-arn: arn:aws:iam::123456789:role/infra-prometheus-role
    automountServiceAccountToken: true
  podDisruptionBudget:
    enabled: false
    minAvailable: 1
    unhealthyPodEvictionPolicy: AlwaysAllow
  config:
    global:
      resolve_timeout: 5m
    inhibit_rules:
      - source_matchers:
          - 'severity = critical'
        target_matchers:
          - 'severity =~ warning|info'
        equal:
          - 'namespace'
          - 'alertname'
      - source_matchers:
          - 'severity = warning'
        target_matchers:
          - 'severity = info'
        equal:
          - 'namespace'
          - 'alertname'
      - source_matchers:
          - 'alertname = InfoInhibitor'
        target_matchers:
          - 'severity = info'
        equal:
          - 'namespace'
      - target_matchers:
          - 'alertname = InfoInhibitor'
    route:
      group_by: ['namespace']
      group_wait: 30s
      group_interval: 5m
      repeat_interval: 12h
      receiver: 'null'
      routes:
        - receiver: 'null'
          matchers:
            - alertname = "Watchdog"
    receivers:
      - name: 'null'
    templates:
      - '/etc/alertmanager/config/*.tmpl'
  stringConfig: ""
  tplConfig: false
  templateFiles: {}
  ingress:
    enabled: false
    ingressClassName: ""
    annotations: {}
    labels: {}
    hosts: []
    paths: []
    tls: []
  route:
    main:
      enabled: false
      apiVersion: gateway.networking.k8s.io/v1
      kind: HTTPRoute
      annotations: {}
      labels: {}
      hostnames: []
      parentRefs: []
      httpsRedirect: false
      matches:
        - path:
            type: PathPrefix
            value: /
      filters: []
      additionalRules: []
  secret:
    annotations: {}
  ingressPerReplica:
    enabled: false
    ingressClassName: ""
    annotations: {}
    labels: {}
    hostPrefix: ""
    hostDomain: ""
    paths: []
    tlsSecretName: ""
    tlsSecretPerReplica:
      enabled: false
      prefix: "alertmanager"
  service:
    enabled: true
    annotations: {}
    labels: {}
    clusterIP: ""
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
    port: 9093
    targetPort: 9093
    nodePort: 30903
    additionalPorts: []
    externalIPs: []
    loadBalancerIP: ""
    loadBalancerSourceRanges: []
    externalTrafficPolicy: Cluster
    sessionAffinity: None
    sessionAffinityConfig:
      clientIP:
        timeoutSeconds: 10800
    type: ClusterIP
  servicePerReplica:
    enabled: false
    annotations: {}
    port: 9093
    targetPort: 9093
    nodePort: 30904
    loadBalancerSourceRanges: []
    externalTrafficPolicy: Cluster
    type: ClusterIP
  serviceMonitor:
    selfMonitor: true
    interval: ""
    additionalLabels: {}
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    scheme: ""
    enableHttp2: true
    tlsConfig: {}
    bearerTokenFile:
    metricRelabelings: []
    relabelings: []
    additionalEndpoints: []
  alertmanagerSpec:
    persistentVolumeClaimRetentionPolicy: {}
    podMetadata: {}
    serviceName:
    image:
      registry: quay.io
      repository: prometheus/alertmanager
      tag: v0.28.1
      sha: ""
      pullPolicy: IfNotPresent
    useExistingSecret: false
    secrets: []
    automountServiceAccountToken: true
    configMaps: []
    web: {}
    alertmanagerConfigSelector: {}
    alertmanagerConfigNamespaceSelector: {}
    alertmanagerConfiguration: {}
    alertmanagerConfigMatcherStrategy: {}
    additionalArgs: []
    logFormat: logfmt
    logLevel: info
    replicas: 1
    retention: 7d
    storage:
      volumeClaimTemplate:
        spec:
          storageClassName: "efs-sc"
          accessModes: ["ReadWriteMany"]
          resources:
            requests:
              storage: 200Gi
    externalUrl:
    routePrefix: /
    scheme: ""
    tlsConfig: {}
    paused: false
    nodeSelector: {}
    resources: {}
    podAntiAffinity: "soft"
    podAntiAffinityTopologyKey: kubernetes.io/hostname
    affinity: {}
    tolerations: []
    topologySpreadConstraints: []
    securityContext:
      runAsGroup: 2000
      runAsNonRoot: true
      runAsUser: 1000
      fsGroup: 2000
      seccompProfile:
        type: RuntimeDefault
    listenLocal: false
    containers: []
    volumes: []
    volumeMounts: []
    initContainers: []
    priorityClassName: ""
    additionalPeers: []
    portName: "http-web"
    clusterAdvertiseAddress: false
    clusterGossipInterval: ""
    clusterPeerTimeout: ""
    clusterPushpullInterval: ""
    clusterLabel: ""
    forceEnableClusterMode: false
    minReadySeconds: 0
    additionalConfig: {}
    additionalConfigString: ""
  extraSecret:
    annotations: {}
    data: {}
grafana:
  enabled: false
  namespaceOverride: ""
  forceDeployDatasources: false
  forceDeployDashboards: false
  defaultDashboardsEnabled: true
  operator:
    dashboardsConfigMapRefEnabled: false
    annotations: {}
    matchLabels: {}
    resyncPeriod: 10m
    folder: General
  defaultDashboardsTimezone: utc
  defaultDashboardsEditable: true
  defaultDashboardsInterval: 1m
  adminUser: admin
  adminPassword: prom-operator
  rbac:
    pspEnabled: false
  ingress:
    enabled: false
    annotations: {}
    labels: {}
    hosts: []
    path: /
    tls: []
  serviceAccount:
    create: true
    autoMount: true
  sidecar:
    dashboards:
      enabled: true
      label: grafana_dashboard
      labelValue: "1"
      searchNamespace: ALL
      enableNewTablePanelSyntax: false
      annotations: {}
      multicluster:
        global:
          enabled: false
        etcd:
          enabled: false
      provider:
        allowUiUpdates: false
    datasources:
      enabled: true
      defaultDatasourceEnabled: true
      isDefaultDatasource: true
      name: Prometheus
      uid: prometheus
      annotations: {}
      httpMethod: POST
      createPrometheusReplicasDatasources: false
      prometheusServiceName: prometheus-operated
      label: grafana_datasource
      labelValue: "1"
      exemplarTraceIdDestinations: {}
      alertmanager:
        enabled: true
        name: Alertmanager
        uid: alertmanager
        handleGrafanaManagedAlerts: false
        implementation: prometheus
  extraConfigmapMounts: []
  deleteDatasources: []
  additionalDataSources: []
  prune: false
  service:
    portName: http-web
    ipFamilies: []
    ipFamilyPolicy: ""
  serviceMonitor:
    enabled: true
    path: "/metrics"
    labels: {}
    interval: ""
    scheme: http
    tlsConfig: {}
    scrapeTimeout: 30s
    relabelings: []
kubernetesServiceMonitors:
  enabled: true
kubeApiServer:
  enabled: true
  tlsConfig:
    serverName: kubernetes
    insecureSkipVerify: false
  serviceMonitor:
    enabled: true
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    jobLabel: component
    selector:
      matchLabels:
        component: apiserver
        provider: kubernetes
    metricRelabelings:
      - action: drop
        regex: (etcd_request|apiserver_request_slo|apiserver_request_sli|apiserver_request)_duration_seconds_bucket;(0\.15|0\.2|0\.3|0\.35|0\.4|0\.45|0\.6|0\.7|0\.8|0\.9|1\.25|1\.5|1\.75|2|3|3\.5|4|4\.5|6|7|8|9|15|20|40|45|50)(\.0)?
        sourceLabels:
          - __name__
          - le
    relabelings: []
    additionalLabels: {}
    targetLabels: []
kubelet:
  enabled: true
  namespace: kube-system
  serviceMonitor:
    enabled: true
    kubelet: true
    attachMetadata:
      node: false
    interval: ""
    honorLabels: true
    honorTimestamps: true
    trackTimestampsStaleness: true
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    https: true
    insecureSkipVerify: true
    probes: true
    resource: false
    resourcePath: "/metrics/resource/v1alpha1"
    resourceInterval: 10s
    cAdvisor: true
    cAdvisorInterval: 10s
    cAdvisorMetricRelabelings:
      - sourceLabels: [__name__]
        action: drop
        regex: 'container_cpu_(cfs_throttled_seconds_total|load_average_10s|system_seconds_total|user_seconds_total)'
      - sourceLabels: [__name__]
        action: drop
        regex: 'container_fs_(io_current|io_time_seconds_total|io_time_weighted_seconds_total|reads_merged_total|sector_reads_total|sector_writes_total|writes_merged_total)'
      - sourceLabels: [__name__]
        action: drop
        regex: 'container_memory_(mapped_file|swap)'
      - sourceLabels: [__name__]
        action: drop
        regex: 'container_(file_descriptors|tasks_state|threads_max)'
      - sourceLabels: [__name__]
        action: drop
        regex: 'container_spec.*'
      - sourceLabels: [id, pod]
        action: drop
        regex: '.+;'
    probesMetricRelabelings: []
    cAdvisorRelabelings:
      - action: replace
        sourceLabels: [__metrics_path__]
        targetLabel: metrics_path
    probesRelabelings:
      - action: replace
        sourceLabels: [__metrics_path__]
        targetLabel: metrics_path
    resourceRelabelings:
      - action: replace
        sourceLabels: [__metrics_path__]
        targetLabel: metrics_path
    metricRelabelings:
      - action: drop
        sourceLabels: [__name__, le]
        regex: (csi_operations|storage_operation_duration)_seconds_bucket;(0.25|2.5|15|25|120|600)(\.0)?
    relabelings:
      - action: replace
        sourceLabels: [__metrics_path__]
        targetLabel: metrics_path
    additionalLabels: {}
    targetLabels: []
kubeControllerManager:
  enabled: true
  endpoints: []
  service:
    enabled: true
    port: null
    targetPort: null
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
  serviceMonitor:
    enabled: true
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    port: http-metrics
    jobLabel: jobLabel
    selector: {}
    https: null
    insecureSkipVerify: null
    serverName: null
    metricRelabelings: []
    relabelings: []
    additionalLabels: {}
    targetLabels: []
coreDns:
  enabled: true
  service:
    enabled: true
    port: 9153
    targetPort: 9153
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
  serviceMonitor:
    enabled: true
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    port: http-metrics
    jobLabel: jobLabel
    selector: {}
    metricRelabelings: []
    relabelings: []
    additionalLabels: {}
    targetLabels: []
kubeDns:
  enabled: false
  service:
    dnsmasq:
      port: 10054
      targetPort: 10054
    skydns:
      port: 10055
      targetPort: 10055
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
  serviceMonitor:
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    jobLabel: jobLabel
    selector: {}
    metricRelabelings: []
    relabelings: []
    dnsmasqMetricRelabelings: []
    dnsmasqRelabelings: []
    additionalLabels: {}
    targetLabels: []
kubeEtcd:
  enabled: true
  endpoints: []
  service:
    enabled: true
    port: 2381
    targetPort: 2381
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
  serviceMonitor:
    enabled: true
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    scheme: http
    insecureSkipVerify: false
    serverName: ""
    caFile: ""
    certFile: ""
    keyFile: ""
    port: http-metrics
    jobLabel: jobLabel
    selector: {}
    metricRelabelings: []
    relabelings: []
    additionalLabels: {}
    targetLabels: []
kubeScheduler:
  enabled: true
  endpoints: []
  service:
    enabled: true
    port: null
    targetPort: null
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
  serviceMonitor:
    enabled: true
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    https: null
    port: http-metrics
    jobLabel: jobLabel
    selector: {}
    insecureSkipVerify: null
    serverName: null
    metricRelabelings: []
    relabelings: []
    additionalLabels: {}
    targetLabels: []
kubeProxy:
  enabled: true
  endpoints: []
  service:
    enabled: true
    port: 10249
    targetPort: 10249
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
  serviceMonitor:
    enabled: true
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    port: http-metrics
    jobLabel: jobLabel
    selector: {}
    https: false
    metricRelabelings: []
    relabelings: []
    additionalLabels: {}
    targetLabels: []
kubeStateMetrics:
  enabled: true
kube-state-metrics:
  namespaceOverride: ""
  rbac:
    create: true
  releaseLabel: true
  prometheusScrape: false
  prometheus:
    monitor:
      enabled: true
      interval: ""
      sampleLimit: 0
      targetLimit: 0
      labelLimit: 0
      labelNameLengthLimit: 0
      labelValueLengthLimit: 0
      scrapeTimeout: ""
      proxyUrl: ""
      honorLabels: true
      metricRelabelings: []
      relabelings: []
  selfMonitor:
    enabled: false
nodeExporter:
  enabled: true
  operatingSystems:
    linux:
      enabled: true
    aix:
      enabled: true
    darwin:
      enabled: true
  forceDeployDashboards: false
prometheus-node-exporter:
  namespaceOverride: ""
  podLabels:
    jobLabel: node-exporter
  releaseLabel: true
  extraArgs:
    - --collector.filesystem.mount-points-exclude=^/(dev|proc|sys|var/lib/docker/.+|var/lib/kubelet/.+)($|/)
    - --collector.filesystem.fs-types-exclude=^(autofs|binfmt_misc|bpf|cgroup2?|configfs|debugfs|devpts|devtmpfs|fusectl|hugetlbfs|iso9660|mqueue|nsfs|overlay|proc|procfs|pstore|rpc_pipefs|securityfs|selinuxfs|squashfs|sysfs|tracefs|erofs)$
  service:
    portName: http-metrics
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
    labels:
      jobLabel: node-exporter
  prometheus:
    monitor:
      enabled: true
      jobLabel: jobLabel
      interval: ""
      sampleLimit: 0
      targetLimit: 0
      labelLimit: 0
      labelNameLengthLimit: 0
      labelValueLengthLimit: 0
      scrapeTimeout: ""
      proxyUrl: ""
      metricRelabelings: []
      relabelings: []
  rbac:
    pspEnabled: false
prometheusOperator:
  enabled: true
  fullnameOverride: ""
  revisionHistoryLimit: 10
  strategy: {}
  tls:
    enabled: true
    tlsMinVersion: VersionTLS13
    internalPort: 10250
  livenessProbe:
    enabled: true
    failureThreshold: 10
    initialDelaySeconds: 60
    periodSeconds: 30
    successThreshold: 1
    timeoutSeconds: 30
  readinessProbe:
    enabled: true
    failureThreshold: 10
    initialDelaySeconds: 60
    periodSeconds: 30
    successThreshold: 1
    timeoutSeconds: 30
  admissionWebhooks:
    failurePolicy: ""
    timeoutSeconds: 30
    enabled: true
    serviceAccount:
      create: true
      name: "kube-prom-stack-kube-prome-admission"
      annotations: {}
    resources:
      requests:
        cpu: 500m
        memory: 500Mi
      limits:
        cpu: 2048m
        memory: 4096Mi
    caBundle: ""
    annotations: {}
    namespaceSelector: {}
    objectSelector: {}
    matchConditions: {}
    mutatingWebhookConfiguration:
      annotations: {}
    validatingWebhookConfiguration:
      annotations: {}
    deployment:
      enabled: false
      replicas: 1
      strategy: {}
      podDisruptionBudget:
        enabled: false
        minAvailable: 1
        unhealthyPodEvictionPolicy: AlwaysAllow
      revisionHistoryLimit: 10
      tls:
        enabled: true
        tlsMinVersion: VersionTLS13
        internalPort: 10250
      serviceAccount:
        annotations:
          eks.amazonaws.com/role-arn: arn:aws:iam::123456789:role/infra-prometheus-role
        automountServiceAccountToken: true
        create: true
        name: "prometheus-k8s"
      service:
        annotations: {}
        labels: {}
        clusterIP: ""
        ipDualStack:
          enabled: false
          ipFamilies: ["IPv6", "IPv4"]
          ipFamilyPolicy: "PreferDualStack"
        nodePort: 31080
        nodePortTls: 31443
        additionalPorts: []
        loadBalancerIP: ""
        loadBalancerSourceRanges: []
        externalTrafficPolicy: Cluster
        type: ClusterIP
        externalIPs: []
      labels: {}
      annotations: {}
      podLabels: {}
      podAnnotations: {}
      image:
        registry: quay.io
        repository: prometheus-operator/admission-webhook
        tag: ""
        sha: ""
        pullPolicy: IfNotPresent
      resources:
        limits:
          cpu: 200m
          memory: 200Mi
        requests:
          cpu: 100m
          memory: 100Mi
      hostNetwork: false
      nodeSelector: {}
      tolerations: []
      affinity: {}
      dnsConfig: {}
      securityContext:
        fsGroup: 65534
        runAsGroup: 65534
        runAsNonRoot: true
        runAsUser: 65534
        seccompProfile:
          type: RuntimeDefault
      containerSecurityContext:
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
        capabilities:
          drop:
            - ALL
      automountServiceAccountToken: true
    patch:
      enabled: true
      image:
        registry: registry.k8s.io
        repository: ingress-nginx/kube-webhook-certgen
        tag: v1.6.0  # latest tag: https://github.com/kubernetes/ingress-nginx/blob/main/images/kube-webhook-certgen/TAG
        sha: ""
        pullPolicy: IfNotPresent
      resources: {}
      priorityClassName: ""
      ttlSecondsAfterFinished: 60
      annotations: {}
      podAnnotations: {}
      nodeSelector: {}
      affinity: {}
      tolerations: []
      securityContext:
        runAsGroup: 2000
        runAsNonRoot: true
        runAsUser: 2000
        seccompProfile:
          type: RuntimeDefault
      serviceAccount:
        create: true
        name: "prometheus-k8s"
        annotations:
          eks.amazonaws.com/role-arn: arn:aws:iam::123456789:role/infra-prometheus-role
        automountServiceAccountToken: true
    createSecretJob:
      securityContext:
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
        capabilities:
          drop:
            - ALL
    patchWebhookJob:
      securityContext:
        allowPrivilegeEscalation: false
        readOnlyRootFilesystem: true
        capabilities:
          drop:
            - ALL
    certManager:
      enabled: false
      rootCert:
        duration: ""  # default to be 5y
        revisionHistoryLimit:
      admissionCert:
        duration: ""  # default to be 1y
        revisionHistoryLimit:
  namespaces: {}
  denyNamespaces: []
  alertmanagerInstanceNamespaces: []
  alertmanagerConfigNamespaces: []
  prometheusInstanceNamespaces: []
  thanosRulerInstanceNamespaces: []
  networkPolicy:
    enabled: false
    flavor: kubernetes
  serviceAccount:
    create: true
    name: "prometheus-k8s"
    automountServiceAccountToken: true
    annotations:
      eks.amazonaws.com/role-arn: arn:aws:iam::123456789:role/infra-prometheus-role
  terminationGracePeriodSeconds: 60
  lifecycle:
    preStop:
      exec:
        command:
          - "/bin/sh"
          - "-c"
          - "kill -TERM $(pidof prometheus); while [ -f /data/lock ]; do sleep 1; done"
  service:
    annotations: {}
    labels: {}
    clusterIP: ""
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
    nodePort: 30080
    nodePortTls: 30443
    additionalPorts: []
    loadBalancerIP: ""
    loadBalancerSourceRanges: []
    externalTrafficPolicy: Cluster
    type: ClusterIP
    externalIPs: []
  labels: {}
  annotations: {}
  podLabels: {}
  podAnnotations: {}
  podDisruptionBudget:
    enabled: false
    minAvailable: 1
    unhealthyPodEvictionPolicy: AlwaysAllow
  kubeletService:
    enabled: true
    namespace: kube-system
    selector: ""
    name: ""
  kubeletEndpointsEnabled: true
  kubeletEndpointSliceEnabled: false
  extraArgs: []
  serviceMonitor:
    selfMonitor: true
    additionalLabels: {}
    interval: ""
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    scrapeTimeout: ""
    metricRelabelings: []
    relabelings: []
  resources: {}
  env:
    GOGC: "30"
  hostNetwork: false
  nodeSelector: {}
  tolerations: []
  affinity: {}
  dnsConfig: {}
  securityContext:
    fsGroup: 65534
    runAsGroup: 65534
    runAsNonRoot: true
    runAsUser: 65534
    seccompProfile:
      type: RuntimeDefault
  containerSecurityContext:
    allowPrivilegeEscalation: false
    readOnlyRootFilesystem: true
    capabilities:
      drop:
        - ALL
  verticalPodAutoscaler:
    enabled: false
    controlledResources: []
    maxAllowed: {}
    minAllowed: {}
    updatePolicy:
      updateMode: Auto
  image:
    registry: quay.io
    repository: prometheus-operator/prometheus-operator
    tag: ""
    sha: ""
    pullPolicy: IfNotPresent
  prometheusConfigReloader:
    image:
      registry: quay.io
      repository: prometheus-operator/prometheus-config-reloader
      tag: ""
      sha: ""
    enableProbe: false
    resources: {}
  thanosImage:
    registry: quay.io
    repository: thanos/thanos
    tag: v0.39.2
    sha: ""
  prometheusInstanceSelector: ""
  alertmanagerInstanceSelector: ""
  thanosRulerInstanceSelector: ""
  secretFieldSelector: "type!=kubernetes.io/dockercfg,type!=kubernetes.io/service-account-token,type!=helm.sh/release.v1"
  automountServiceAccountToken: true
  extraVolumes: []
  extraVolumeMounts: []
prometheus:
  enabled: true
  livenessProbe:
    httpGet:
      path: /-/healthy
      port: web
    initialDelaySeconds: 60
    timeoutSeconds: 30
    periodSeconds: 30
    failureThreshold: 10
    successThreshold: 1
  readinessProbe:
    httpGet:
      path: /-/ready
      port: web
    initialDelaySeconds: 60
    timeoutSeconds: 30
    periodSeconds: 30
    failureThreshold: 10
    successThreshold: 1
  agentMode: false
  annotations: {}
  additionalLabels: {}
  networkPolicy:
    enabled: false
    flavor: kubernetes
  serviceAccount:
    create: true
    name: prometheus-k8s
    annotations:
      eks.amazonaws.com/role-arn: arn:aws:iam::123456789:role/infra-prometheus-role
    automountServiceAccountToken: true
  thanosService:
    enabled: false
    annotations: {}
    labels: {}
    externalTrafficPolicy: Cluster
    type: ClusterIP
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
    portName: grpc
    port: 10901
    targetPort: "grpc"
    httpPortName: http
    httpPort: 10902
    targetHttpPort: "http"
    clusterIP: "None"
    nodePort: 30901
    httpNodePort: 30902
  thanosServiceMonitor:
    enabled: false
    interval: ""
    additionalLabels: {}
    scheme: ""
    tlsConfig: {}
    bearerTokenFile:
    metricRelabelings: []
    relabelings: []
  thanosServiceExternal:
    enabled: false
    annotations: {}
    labels: {}
    loadBalancerIP: ""
    loadBalancerSourceRanges: []
    portName: grpc
    port: 10901
    targetPort: "grpc"
    httpPortName: http
    httpPort: 10902
    targetHttpPort: "http"
    externalTrafficPolicy: Cluster
    type: LoadBalancer
    nodePort: 30901
    httpNodePort: 30902
  service:
    enabled: true
    annotations: {}
    labels: {}
    clusterIP: ""
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
    port: 9090
    targetPort: 9090
    reloaderWebPort: 8080
    externalIPs: []
    nodePort: 30090
    loadBalancerIP: ""
    loadBalancerSourceRanges: []
    externalTrafficPolicy: Cluster
    type: ClusterIP
    additionalPorts: []
    publishNotReadyAddresses: false
    sessionAffinity: None
    sessionAffinityConfig:
      clientIP:
        timeoutSeconds: 10800
  servicePerReplica:
    enabled: false
    annotations: {}
    port: 9090
    targetPort: 9090
    nodePort: 30091
    loadBalancerSourceRanges: []
    externalTrafficPolicy: Cluster
    type: ClusterIP
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
  podDisruptionBudget:
    enabled: false
    minAvailable: 1
    unhealthyPodEvictionPolicy: AlwaysAllow
  thanosIngress:
    enabled: false
    ingressClassName: ""
    annotations: {}
    labels: {}
    servicePort: 10901
    nodePort: 30901
    hosts: []
    paths: []
    tls: []
  extraSecret:
    annotations: {}
    data: {}
  ingress:
    enabled: false
    ingressClassName: ""
    annotations: {}
    labels: {}
    hosts: []
    paths: []
    tls: []
  route:
    main:
      enabled: false
      apiVersion: gateway.networking.k8s.io/v1
      kind: HTTPRoute
      annotations: {}
      labels: {}
      hostnames: []
      parentRefs: []
      httpsRedirect: false
      matches:
        - path:
            type: PathPrefix
            value: /
      filters: []
      additionalRules: []
  ingressPerReplica:
    enabled: false
    ingressClassName: ""
    annotations: {}
    labels: {}
    hostPrefix: ""
    hostDomain: ""
    paths: []
    tlsSecretName: ""
    tlsSecretPerReplica:
      enabled: false
      prefix: "prometheus"
  serviceMonitor:
    selfMonitor: true
    interval: ""
    additionalLabels: {}
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    scheme: ""
    tlsConfig: {}
    bearerTokenFile:
    metricRelabelings: []
    relabelings: []
    additionalEndpoints: []
  prometheusSpec:
    persistentVolumeClaimRetentionPolicy: {}
    # Caution (unverified): the Prometheus CR takes extra CLI flags through additionalArgs
    # (name/value pairs), and Prometheus v3 no longer accepts --storage.tsdb.allow-overlapping-blocks.
    # Check that these flags actually reach the Prometheus container.
    extraArgs:
      - "--storage.tsdb.wal-replay-concurrency=16"         # increase WAL replay concurrency
      - "--storage.tsdb.allow-overlapping-blocks"          # allow blocks with overlapping time ranges
      - "--storage.tsdb.wal-compression"                   # enable WAL compression
      - "--storage.tsdb.head-chunks-write-queue-size=300000"  # enlarge the head-chunks write queue
      - "--storage.tsdb.wal-segment-size=500mb"            # increase the WAL segment size
    disableCompaction: false
    automountServiceAccountToken: true
    apiserverConfig: {}
    additionalArgs: []
    scrapeFailureLogFile: ""
    scrapeInterval: ""
    scrapeTimeout: ""
    scrapeClasses: []
    podTargetLabels: []
    evaluationInterval: ""
    listenLocal: false
    enableOTLPReceiver: false
    enableAdminAPI: false
    version: ""
    web: {}
    exemplars: {}
    enableFeatures: []
    otlp: {}
    serviceName:
    image:
      registry: quay.io
      repository: prometheus/prometheus
      tag: v3.5.0
      sha: ""
      pullPolicy: IfNotPresent
    tolerations: []
    topologySpreadConstraints: []
    alertingEndpoints: []
    externalLabels:
      prometheus_replica: "aws-jp-prod-ltp-infra-eks-prome"
      cluster: "aws-jp-prod-ltp-infra-eks"
      prometheus_instance: "aws-jp-prod-ltp-infra-eks-prome"
    enableRemoteWriteReceiver: false
    replicaExternalLabelName: ""
    replicaExternalLabelNameClear: false
    prometheusExternalLabelName: ""
    prometheusExternalLabelNameClear: false
    externalUrl: ""
    nodeSelector: {}
    secrets: []
    configMaps: []
    query: {}
    ruleNamespaceSelector: {}
    ruleSelectorNilUsesHelmValues: true
    ruleSelector: {}
    serviceMonitorSelectorNilUsesHelmValues: false
    serviceMonitorSelector: {}
    serviceMonitorNamespaceSelector: {}
    podMonitorSelectorNilUsesHelmValues: true
    podMonitorSelector: {}
    podMonitorNamespaceSelector: {}
    probeSelectorNilUsesHelmValues: true
    probeSelector: {}
    probeNamespaceSelector: {}
    scrapeConfigSelectorNilUsesHelmValues: true
    scrapeConfigSelector: {}
    scrapeConfigNamespaceSelector: {}
    retention: 30d
    retentionSize: "500GB"
    tsdb:
      outOfOrderTimeWindow: 0s
    walCompression: true
    paused: false
    replicas: 1
    shards: 1
    logLevel: info
    logFormat: logfmt
    routePrefix: /
    podMetadata: {}
    podAntiAffinity: "soft"
    podAntiAffinityTopologyKey: kubernetes.io/hostname
    affinity: {}
    remoteRead: []
    additionalRemoteRead: []
    remoteWrite:
      - url: "https://mimir.abc.com/api/v1/push"
        writeRelabelConfigs:
          - targetLabel: k8s
            replacement: "aws-jp-prod-ltp-infra-eks"  # overwrite the k8s label
          - targetLabel: cluster
            replacement: "aws-jp-prod-ltp-infra-eks"  # overwrite the cluster label
          - targetLabel: prometheus_replica
            replacement: "aws-jp-prod-ltp-infra-eks-prome"
          - targetLabel: prometheus
            replacement: "aws-jp-prod-ltp-infra-eks-prome"
        queueConfig:
          maxSamplesPerSend: 5000
          maxShards: 300
          capacity: 1000000
          minShards: 50
          batchSendDeadline: 5s
          minBackoff: 200ms
          maxBackoff: 5s
          retryOnRateLimit: true
    additionalRemoteWrite: []
    remoteWriteDashboards: false
    resources:
      requests:
        memory: 1200Mi
        cpu: 500m
      limits:
        memory: 4096Mi
        cpu: 4096m
    storageSpec:
      volumeClaimTemplate:
        spec:
          storageClassName: "gp3"
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 500Gi
    volumes: []
    volumeMounts: []
    additionalScrapeConfigs:
      - job_name: 'flink-pushgateway'
        honor_labels: true  # key setting: keep the job/instance labels pushed to the Pushgateway
        static_configs:
          - targets: ['pushgateway:9091']
            labels:
              env: prod
      - job_name: 'aws-ec2-nodes'
        ec2_sd_configs:
          - region: ap-northeast-1
            port: 9100
          - region: ap-southeast-1
            port: 9100
          - region: ap-east-1
            port: 9100
        relabel_configs:
          - source_labels: [__meta_ec2_tag_aws_eks_cluster_name]
            regex: .+
            action: drop
          - source_labels: [__meta_ec2_tag_eks_cluster_name]
            regex: .+
            action: drop
          - source_labels: [__meta_ec2_tag_ec2_sd]
            regex: "0"
            action: drop
          - source_labels: [__meta_ec2_instance_id]
            target_label: instance
          - source_labels: [__meta_ec2_private_ip]
            target_label: PrivateIpAddress   # private IP
          - source_labels: [__meta_ec2_public_ip]
            target_label: PublicIp           # public IP
          - source_labels: [__meta_ec2_instance_type]
            target_label: InstanceType       # instance type
          - source_labels: [__meta_ec2_availability_zone]
            target_label: AvailabilityZone   # availability zone
          - source_labels: [__meta_ec2_region]
            target_label: Region             # region
          - source_labels: [__meta_ec2_state]
            target_label: Status             # instance state
          - action: labelmap
            regex: __meta_ec2_tag_(.+)
    additionalScrapeConfigsSecret: {}
    additionalPrometheusSecretsAnnotations: {}
    additionalAlertManagerConfigs: []
    additionalAlertManagerConfigsSecret: {}
    additionalAlertRelabelConfigs: []
    additionalAlertRelabelConfigsSecret: {}
    securityContext:
      runAsGroup: 2000
      runAsNonRoot: true
      runAsUser: 1000
      fsGroup: 2000
      seccompProfile:
        type: RuntimeDefault
    priorityClassName: ""
    thanos: {}
    containers: []
    initContainers: []
    portName: "http-web"
    arbitraryFSAccessThroughSMs: false
    overrideHonorLabels: false
    overrideHonorTimestamps: false
    ignoreNamespaceSelectors: false
    enforcedNamespaceLabel: ""
    prometheusRulesExcludedFromEnforce: []
    excludedFromEnforcement: []
    queryLogFile: false
    sampleLimit: false
    enforcedKeepDroppedTargets: 0
    enforcedSampleLimit: false
    enforcedTargetLimit: false
    enforcedLabelLimit: false
    enforcedLabelNameLengthLimit: false
    enforcedLabelValueLengthLimit: false
    allowOverlappingBlocks: false
    nameValidationScheme: ""
    minReadySeconds: 0
    hostNetwork: false
    hostAliases: []
    tracingConfig: {}
    serviceDiscoveryRole: ""
    additionalConfig: {}
    additionalConfigString: ""
    maximumStartupDurationSeconds: 0
    scrapeProtocols: []
  additionalRulesForClusterRole: []
  additionalServiceMonitors: []
  additionalPodMonitors: []
thanosRuler:
  enabled: false
  annotations: {}
  serviceAccount:
    create: true
    name: ""
    annotations: {}
  podDisruptionBudget:
    enabled: false
    minAvailable: 1
    unhealthyPodEvictionPolicy: AlwaysAllow
  ingress:
    enabled: false
    ingressClassName: ""
    annotations: {}
    labels: {}
    hosts: []
    paths: []
    tls: []
  route:
    main:
      enabled: false
      apiVersion: gateway.networking.k8s.io/v1
      kind: HTTPRoute
      annotations: {}
      labels: {}
      hostnames: []
      parentRefs: []
      httpsRedirect: false
      matches:
        - path:
            type: PathPrefix
            value: /
      filters: []
      additionalRules: []
  service:
    enabled: true
    annotations: {}
    labels: {}
    clusterIP: ""
    ipDualStack:
      enabled: false
      ipFamilies: ["IPv6", "IPv4"]
      ipFamilyPolicy: "PreferDualStack"
    port: 10902
    targetPort: 10902
    nodePort: 30905
    additionalPorts: []
    externalIPs: []
    loadBalancerIP: ""
    loadBalancerSourceRanges: []
    externalTrafficPolicy: Cluster
    type: ClusterIP
  serviceMonitor:
    selfMonitor: true
    interval: ""
    additionalLabels: {}
    sampleLimit: 0
    targetLimit: 0
    labelLimit: 0
    labelNameLengthLimit: 0
    labelValueLengthLimit: 0
    proxyUrl: ""
    scheme: ""
    tlsConfig: {}
    bearerTokenFile:
    metricRelabelings: []
    relabelings: []
    additionalEndpoints: []
  thanosRulerSpec:
    podMetadata: {}
    serviceName:
    image:
      registry: quay.io
      repository: thanos/thanos
      tag: v0.39.2
      sha: ""
    ruleNamespaceSelector: {}
    ruleSelectorNilUsesHelmValues: true
    ruleSelector: {}
    logFormat: logfmt
    logLevel: info
    replicas: 1
    retention: 720h
    evaluationInterval: ""
    storage: {}
    alertmanagersConfig:
      existingSecret: {}
      secret: {}
    externalPrefix:
    externalPrefixNilUsesHelmValues: true
    routePrefix: /
    objectStorageConfig:
      existingSecret: {}
      secret: {}
    alertDropLabels: []
    queryEndpoints: []
    queryConfig:
      existingSecret: {}
      secret: {}
    labels: {}
    paused: false
    additionalArgs: []
    nodeSelector: {}
    resources: {}
    podAntiAffinity: "soft"
    podAntiAffinityTopologyKey: kubernetes.io/hostname
    affinity: {}
    tolerations: []
    topologySpreadConstraints: []
    securityContext:
      runAsGroup: 2000
      runAsNonRoot: true
      runAsUser: 1000
      fsGroup: 2000
      seccompProfile:
        type: RuntimeDefault
    listenLocal: false
    containers: []
    volumes: []
    volumeMounts: []
    initContainers: []
    priorityClassName: ""
    portName: "web"
    web: {}
    additionalConfig: {}
    additionalConfigString: ""
  extraSecret:
    annotations: {}
    data: {}
cleanPrometheusOperatorObjectNames: false
extraManifests: null
```
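Two sets of rules in these values decide what Mimir will see. The `writeRelabelConfigs` on remote write stamp the cluster identity. The `relabel_configs` of the `aws-ec2-nodes` job decide which EC2 instances are scraped at all. Both follow Prometheus relabeling semantics: regexes are fully anchored and source label values are joined with `;`. A `replace` rule with no source labels matches the empty string, so `targetLabel` plus `replacement` simply writes a fixed value.

The Python below is a minimal model of only the `replace`, `drop` and `labelmap` actions used here, for reasoning about the rules offline. It is not the Prometheus implementation. It uses Prometheus's snake_case field names, which the operator generates from the CR's camelCase fields. The sample label values in the usage are made up.

```python
import re
from typing import Dict, List, Optional

Labels = Dict[str, str]


def _anchored(pattern: str) -> "re.Pattern[str]":
    # Prometheus anchors every relabel regex at both ends.
    return re.compile("^(?:" + pattern + ")$")


def _template(replacement: str) -> str:
    # Turn Prometheus "$1" references into Python "\g<1>"; only $N is handled in this sketch.
    return re.sub(r"\$(\d+)", r"\\g<\1>", replacement)


def relabel(labels: Labels, rules: List[dict]) -> Optional[Labels]:
    """Apply rules in order; return None if a drop rule discards the target."""
    out = dict(labels)
    for rule in rules:
        action = rule.get("action", "replace")
        regex = _anchored(rule.get("regex", "(.*)"))
        replacement = _template(rule.get("replacement", "$1"))
        # Source values are joined with ";"; missing labels count as "".
        value = ";".join(out.get(name, "") for name in rule.get("source_labels", []))
        if action == "drop":
            if regex.match(value):
                return None
        elif action == "replace":
            match = regex.match(value)
            if match:
                new_value = match.expand(replacement)
                if new_value:
                    out[rule["target_label"]] = new_value
                else:
                    # An empty result deletes the target label.
                    out.pop(rule["target_label"], None)
        elif action == "labelmap":
            # Copy every label whose *name* matches, renamed via the replacement.
            for name, val in list(out.items()):
                if regex.match(name):
                    out[regex.sub(replacement, name)] = val
        else:
            raise ValueError(f"action not modelled in this sketch: {action}")
    return out


def drop_meta_labels(labels: Labels) -> Labels:
    # After target relabeling, Prometheus discards labels that start with "__".
    return {k: v for k, v in labels.items() if not k.startswith("__")}


# Subset of the aws-ec2-nodes relabel_configs above.
EC2_RULES = [
    {"source_labels": ["__meta_ec2_tag_aws_eks_cluster_name"], "regex": ".+", "action": "drop"},
    {"source_labels": ["__meta_ec2_tag_eks_cluster_name"], "regex": ".+", "action": "drop"},
    {"source_labels": ["__meta_ec2_tag_ec2_sd"], "regex": "0", "action": "drop"},
    {"source_labels": ["__meta_ec2_instance_id"], "target_label": "instance"},
    {"source_labels": ["__meta_ec2_private_ip"], "target_label": "PrivateIpAddress"},
    {"source_labels": ["__meta_ec2_public_ip"], "target_label": "PublicIp"},
    {"action": "labelmap", "regex": "__meta_ec2_tag_(.+)"},
]

# Two of the remoteWrite writeRelabelConfigs: no source labels, so each rule stamps a fixed value.
WRITE_RULES = [
    {"target_label": "k8s", "replacement": "aws-jp-prod-ltp-infra-eks"},
    {"target_label": "cluster", "replacement": "aws-jp-prod-ltp-infra-eks"},
]
```

Running `relabel` on a target carrying `__meta_ec2_tag_aws_eks_cluster_name` returns `None`. EC2 service discovery exposes an `aws:eks:cluster-name` tag under that sanitized label name, so EKS worker nodes are skipped here; they are already covered by the in-cluster node-exporter. A series arriving with any other `cluster` value leaves with `cluster="aws-jp-prod-ltp-infra-eks"`, which is what keeps clusters distinguishable once they share one Mimir.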

serviceaccount.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-prom-stack-kube-prome-admission
  namespace: monitoring
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: kube-prom-stack-admission-role
  namespace: monitoring
rules:
  - apiGroups: [""]
    resources: ["secrets"]
    verbs: ["create", "get", "update"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kube-prom-stack-admission-binding
  namespace: monitoring
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kube-prom-stack-admission-role
subjects:
  - kind: ServiceAccount
    name: kube-prom-stack-kube-prome-admission
    namespace: monitoring
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-prom-stack-kube-prome-admission
  namespace: monitoring
  labels:
    app.kubernetes.io/managed-by: Helm
  annotations:
    meta.helm.sh/release-name: kube-prom-stack
    meta.helm.sh/release-namespace: monitoring
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: prometheus-k8s
  namespace: monitoring
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::123456789:role/infra-prometheus-role
automountServiceAccountToken: true
```
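`kube-prom-stack-kube-prome-admission` is declared twice in this file. The second copy adds `app.kubernetes.io/managed-by: Helm` plus the `meta.helm.sh/release-name` and `meta.helm.sh/release-namespace` annotations. Since Helm 3.2, that label and those two annotations, matching the release, are what let Helm adopt a pre-created object instead of failing with an ownership error. `kubectl apply` processes the documents in order, so the live object ends up carrying the second copy's Helm metadata. Below is a small sketch of both checks on plain dicts. The function names are hypothetical helpers, not a Helm or Kubernetes API.

```python
from __future__ import annotations

from collections import Counter

# The five documents of serviceaccount.yaml, reduced to the fields the checks use.
DOCS = [
    {"kind": "ServiceAccount", "metadata": {
        "name": "kube-prom-stack-kube-prome-admission", "namespace": "monitoring"}},
    {"kind": "Role", "metadata": {
        "name": "kube-prom-stack-admission-role", "namespace": "monitoring"}},
    {"kind": "RoleBinding", "metadata": {
        "name": "kube-prom-stack-admission-binding", "namespace": "monitoring"}},
    {"kind": "ServiceAccount", "metadata": {
        "name": "kube-prom-stack-kube-prome-admission", "namespace": "monitoring",
        "labels": {"app.kubernetes.io/managed-by": "Helm"},
        "annotations": {"meta.helm.sh/release-name": "kube-prom-stack",
                        "meta.helm.sh/release-namespace": "monitoring"}}},
    {"kind": "ServiceAccount", "metadata": {
        "name": "prometheus-k8s", "namespace": "monitoring",
        "annotations": {"eks.amazonaws.com/role-arn":
                        "arn:aws:iam::123456789:role/infra-prometheus-role"}}},
]


def duplicate_objects(docs: list[dict]) -> list[tuple[str, str, str]]:
    """(kind, namespace, name) keys declared more than once in one manifest."""
    keys = Counter((d["kind"], d["metadata"].get("namespace", ""), d["metadata"]["name"])
                   for d in docs)
    return [key for key, count in keys.items() if count > 1]


def helm_adoption_problems(metadata: dict, release: str, namespace: str) -> list[str]:
    """Ownership metadata Helm (3.2+) requires before adopting an existing object."""
    labels = metadata.get("labels") or {}
    annotations = metadata.get("annotations") or {}
    problems = []
    if labels.get("app.kubernetes.io/managed-by") != "Helm":
        problems.append("label app.kubernetes.io/managed-by != Helm")
    if annotations.get("meta.helm.sh/release-name") != release:
        problems.append(f"annotation meta.helm.sh/release-name != {release}")
    if annotations.get("meta.helm.sh/release-namespace") != namespace:
        problems.append(f"annotation meta.helm.sh/release-namespace != {namespace}")
    return problems


if __name__ == "__main__":
    print(duplicate_objects(DOCS))
    for doc in DOCS:
        if doc["kind"] == "ServiceAccount":
            print(doc["metadata"]["name"],
                  helm_adoption_problems(doc["metadata"], "kube-prom-stack", "monitoring"))
```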

Deploy Grafana Mimir


```yaml
global:
  serviceAccountName: mimir-serviceaccount
  namespace: mimir # replace with the actual namespace
serviceAccount:
  create: true
  name: mimir-serviceaccount
  annotations:
    eks.amazonaws.com/role-arn: arn:aws:iam::122345678:role/infra-mimir-role
minio:
  enabled: false
mimir:
  disableCachingValidation: true
  structuredConfig:
    multitenancy_enabled: false # disable multi-tenancy
    common:
      storage:
        backend: s3
        s3:
          endpoint: s3.ap-northeast-1.amazonaws.com
          bucket_name: aws-jp-prod-mimir
          region: ap-northeast-1
          signature_version: v4 # AWS S3-compatible signature version
    blocks_storage:
      backend: s3
      tsdb:
        # How long ingesters keep blocks on local disk. Long-term retention in
        # object storage is set by limits.compactor_blocks_retention_period.
        retention_period: 8760h
        block_ranges_period: [2h, 12h, 24h, 168h, 672h] # block aggregation periods
        wal_compression_enabled: true
    usage_stats:
      enabled: false
    compactor:
      compaction_concurrency: 4 # compaction concurrency
      block_ranges: [2h, 12h, 24h, 168h, 672h] # matches the block storage periods
    limits:
      ingestion_rate: 400000 # ingestion rate limit (samples per second)
      ingestion_burst_size: 2000000 # ingestion burst limit
      max_global_series_per_user: 5000000 # global series limit
      max_global_series_per_metric: 800000 # per-metric series limit
      max_query_lookback: 744h # 31 days: queries cannot reach further back than this
    ingester:
      ring:
        heartbeat_timeout: 1m
        heartbeat_period: 15s
runtimeConfig:
  overrides:
    anonymous: # the "anonymous" tenant (single-tenant mode)
      ingestion_rate: 400000
      ingestion_burst_size: 2000000
      max_global_series_per_user: 5000000
      max_global_series_per_metric: 800000
      max_query_lookback: 744h
  ingester_limits:
    max_ingestion_rate: 400000
    max_series: 500000 # maximum series per ingester
  distributor_limits:
    max_ingestion_rate: 300000
    max_inflight_push_requests: 30000 # maximum concurrent push requests
alertmanager:
  enabled: false
distributor:
  enabled: true
  replicas: 2 # replicas for redundancy
  resources:
    requests: { cpu: 2024m, memory: 4096Mi }
    limits: { cpu: 4000m, memory: 8Gi }
  podDisruptionBudget:
    maxUnavailable: 1
ingester:
  enabled: true
  replicas: 6 # greater than replication_factor (3)
  podManagementPolicy: Parallel
  resources:
    requests: { cpu: 500m, memory: 8Gi }
    limits: { cpu: 4096m, memory: 16Gi }
  zone:
    enabled: true
    zones:
      - name: zone-a
        nodeSelector:
          topology.kubernetes.io/zone: ap-northeast-1a
        replicas: 2
      - name: zone-b
        nodeSelector:
          topology.kubernetes.io/zone: ap-northeast-1b
        replicas: 2
      - name: zone-c
        nodeSelector:
          topology.kubernetes.io/zone: ap-northeast-1c
        replicas: 2
  podAntiAffinity:
    requiredDuringSchedulingIgnoredDuringExecution: # changed from preferred to required
      - labelSelector:
          matchExpressions:
            - key: app.kubernetes.io/component
              operator: In
              values: ["ingester"]
        topologyKey: "kubernetes.io/hostname"
  extraPorts:
    - name: http-metrics
      containerPort: 8080
      protocol: TCP
  startupProbe:
    httpGet:
      path: /ready
      port: http-metrics
    initialDelaySeconds: 300
    periodSeconds: 15
    timeoutSeconds: 30
    failureThreshold: 10
  livenessProbe:
    httpGet:
      path: /ready
      port: http-metrics
    initialDelaySeconds: 600
    periodSeconds: 15
    timeoutSeconds: 30
    failureThreshold: 3
    successThreshold: 1
  readinessProbe:
    httpGet:
      path: /ready
      port: http-metrics
    initialDelaySeconds: 600
    periodSeconds: 15
    timeoutSeconds: 30
    failureThreshold: 3
    successThreshold: 1
  podDisruptionBudget:
    minAvailable: 5 # minAvailable instead of maxUnavailable
querier:
  enabled: true
  replicas: 3
  resources:
    requests: { cpu: 500m, memory: 2048Mi }
    limits: { cpu: 2000m, memory: 4Gi }
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
              - key: app.kubernetes.io/component
                operator: In
                values: ["querier"]
          topologyKey: "kubernetes.io/hostname"
  podDisruptionBudget:
    maxUnavailable: 1
query-frontend:
  enabled: true
  replicas: 3
  resources:
    requests: { cpu: 500m, memory: 2048Mi }
    limits: { cpu: 4000m, memory: 16Gi }
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
              - key: app.kubernetes.io/component
                operator: In
                values: ["query-frontend"]
          topologyKey: "kubernetes.io/hostname"
  podDisruptionBudget:
    maxUnavailable: 1
compactor:
  enabled: true
  replicas: 3
  podDisruptionBudget:
    maxUnavailable: 1
  podManagementPolicy: Parallel
  strategy:
    type: RollingUpdate
  resources:
    requests: { cpu: 500m, memory: 2048Mi }
    limits: { cpu: 4000m, memory: 4Gi }
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
              - key: app.kubernetes.io/component
                operator: In
                values: ["compactor"]
          topologyKey: "kubernetes.io/hostname"
store_gateway:
  replicas: 3
  podManagementPolicy: Parallel
  resources:
    requests: { cpu: 1000m, memory: 4Gi } # enough memory to load historical blocks
    limits: { cpu: 4000m, memory: 8Gi }
  podAntiAffinity:
    preferredDuringSchedulingIgnoredDuringExecution:
      - weight: 100
        podAffinityTerm:
          labelSelector:
            matchExpressions:
              - key: app.kubernetes.io/component
                operator: In
                values: ["store-gateway"]
          topologyKey: "kubernetes.io/hostname"
nginx:
  enabled: false
  image:
    registry: public.ecr.aws
    repository: nginx/nginx-unprivileged
    tag: 1.27-alpine
    pullPolicy: IfNotPresent
  resources:
    requests: { cpu: 100m, memory: 128Mi }
    limits: { cpu: 500m, memory: 512Mi }
gateway:
  enabled: true
  enabledNonEnterprise: true
  replicas: 2
  autoscaling:
    enabled: true
    minReplicas: 2
    maxReplicas: 4
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 2 # with 2 replicas this allows both to be down during a rollout
      maxSurge: 15%
  resources:
    requests: { cpu: 1000m, memory: 2048Mi }
    limits: { cpu: 2000m, memory: 4096Mi }
ruler:
  enabled: false
memcached:
  image:
    repository: memcached
    tag: 1.6.38-alpine
    pullPolicy: IfNotPresent
  podSecurityContext: {}
  priorityClassName: null
  containerSecurityContext:
    readOnlyRootFilesystem: true
    capabilities:
      drop: [ALL]
    allowPrivilegeEscalation: false
index-cache:
  enabled: true
  replicas: 3
  port: 11211
  allocatedMemory: 2048
  maxItemMemory: 5
  connectionLimit: 16384
  podDisruptionBudget:
    maxUnavailable: 1
  podManagementPolicy: Parallel
  terminationGracePeriodSeconds: 30
  statefulStrategy:
    type: RollingUpdate
  extraArgs: {}
  resources:
    requests: { cpu: 100m, memory: 2048Mi }
    limits: { cpu: 1000m, memory: 3096Mi }
metadata-cache:
  enabled: true
  replicas: 3
  port: 11211
  allocatedMemory: 1024
  maxItemMemory: 5
  connectionLimit: 16384
  podDisruptionBudget:
    maxUnavailable: 1
  podManagementPolicy: Parallel
  terminationGracePeriodSeconds: 30
  statefulStrategy:
    type: RollingUpdate
  extraArgs: {}
  resources:
    requests: { cpu: 100m, memory: 1024Mi }
    limits: { cpu: 1000m, memory: 3096Mi }
results-cache:
  enabled: true
  replicas: 3
  port: 11211
  allocatedMemory: 2048
  maxItemMemory: 5
  connectionLimit: 16384
  podDisruptionBudget:
    maxUnavailable: 1
  podManagementPolicy: Parallel
  terminationGracePeriodSeconds: 30
  statefulStrategy:
    type: RollingUpdate
  extraArgs: {}
  resources:
    requests: { cpu: 100m, memory: 2048Mi }
    limits: { cpu: 1000m, memory: 3096Mi }
```
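Several of these limits interact, and the arithmetic is easy to get wrong. The sketch below is a back-of-the-envelope check. It assumes Mimir's default replication factor of 3 (the comment on `ingester.replicas` refers to it) and series spread evenly over the ingesters.

- **Series:** at the tenant limit of 5,000,000 series, each ingester would hold about 2.5 million, five times `ingester_limits.max_series`. The per-instance cap is what binds first, at roughly 1 million unique series for the whole cluster.
- **Ingestion:** the two distributors accept 600,000 samples/s combined, but with one of them down the remaining 300,000 is below the 400,000 tenant rate.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MimirSizing:
    # Values copied from the Helm values above, except replication_factor,
    # which is assumed to be Mimir's default of 3.
    replication_factor: int = 3
    ingesters: int = 6                      # ingester.replicas (3 zones x 2)
    distributors: int = 2                   # distributor.replicas
    tenant_series: int = 5_000_000          # limits.max_global_series_per_user
    ingester_max_series: int = 500_000      # runtimeConfig.ingester_limits.max_series
    tenant_rate: int = 400_000              # limits.ingestion_rate (samples/s)
    distributor_max_rate: int = 300_000     # runtimeConfig.distributor_limits.max_ingestion_rate
    ingester_max_rate: int = 400_000        # runtimeConfig.ingester_limits.max_ingestion_rate
    query_lookback_hours: int = 744         # limits.max_query_lookback

    def series_per_ingester_at_tenant_limit(self) -> float:
        # Every series is written to replication_factor ingesters.
        return self.tenant_series * self.replication_factor / self.ingesters

    def effective_series_ceiling(self) -> float:
        # Unique series the cluster holds before any ingester hits its own cap.
        by_ingesters = self.ingester_max_series * self.ingesters / self.replication_factor
        return min(self.tenant_series, by_ingesters)

    def samples_per_ingester(self) -> float:
        return self.tenant_rate * self.replication_factor / self.ingesters

    def distributor_headroom(self, distributors_down: int = 0) -> int:
        # Negative means the remaining distributors cannot absorb the tenant rate.
        alive = self.distributors - distributors_down
        return alive * self.distributor_max_rate - self.tenant_rate

    def query_lookback_days(self) -> float:
        return self.query_lookback_hours / 24


if __name__ == "__main__":
    s = MimirSizing()
    print("series/ingester at tenant limit:", s.series_per_ingester_at_tenant_limit())
    print("effective series ceiling:", s.effective_series_ceiling())
    print("samples/s per ingester:", s.samples_per_ingester(), "limit:", s.ingester_max_rate)
    print("distributor headroom (all up / one down):",
          s.distributor_headroom(), s.distributor_headroom(1))
    print("query lookback (days):", s.query_lookback_days())
```

If 5 million series is the real target, `ingester_limits.max_series` or the ingester count has to grow accordingly. Actual per-ingester load depends on sharding and replication in practice, so treat these figures as estimates.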

ingress-grafana.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    # Listeners: HTTP 80 and HTTPS 443
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
    # Redirect HTTP traffic to HTTPS automatically
    alb.ingress.kubernetes.io/ssl-redirect: '443'
    # ARN of the AWS (ACM) certificate
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-northeast-1:1111111:certificate/1111111
  name: grafana
  namespace: grafana
spec:
  ingressClassName: alb
  rules:
    - host: grafana.ltpin.com # updated domain
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: grafana-release
                port:
                  number: 80 # backend Service port (assuming Grafana still serves plain HTTP)
```
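The three ALB annotations have to agree with each other. `ssl-redirect` names the port HTTP requests are redirected to, and according to the AWS Load Balancer Controller documentation that port must be an HTTPS listener declared in `listen-ports`. An HTTPS listener also needs a certificate. The sketch below is a simplified cross-check of those rules, run against this Ingress's annotations. It is a hypothetical helper, not part of the controller.

```python
from __future__ import annotations

import json

LISTEN_PORTS = "alb.ingress.kubernetes.io/listen-ports"
SSL_REDIRECT = "alb.ingress.kubernetes.io/ssl-redirect"
CERT_ARN = "alb.ingress.kubernetes.io/certificate-arn"


def alb_annotation_problems(annotations: dict[str, str]) -> list[str]:
    """Cross-check listen-ports, ssl-redirect and certificate-arn (simplified)."""
    problems = []
    # listen-ports is a JSON string: a list of one-key objects such as {"HTTPS": 443}.
    # If the annotation is absent the controller applies its own default (not modeled).
    listeners = json.loads(annotations.get(LISTEN_PORTS, "[]"))
    https_ports = {port for item in listeners
                   for proto, port in item.items() if proto == "HTTPS"}
    redirect = annotations.get(SSL_REDIRECT)
    if redirect is not None and int(redirect) not in https_ports:
        problems.append(f"ssl-redirect port {redirect} is not an HTTPS listener")
    if https_ports and CERT_ARN not in annotations:
        # The controller can also discover ACM certificates by host name;
        # this sketch only flags the missing explicit ARN.
        problems.append("HTTPS listener without certificate-arn")
    return problems


GRAFANA_ANNOTATIONS = {
    LISTEN_PORTS: '[{"HTTP": 80}, {"HTTPS": 443}]',
    SSL_REDIRECT: "443",
    CERT_ARN: "arn:aws:acm:ap-northeast-1:1111111:certificate/1111111",
}

if __name__ == "__main__":
    print(alb_annotation_problems(GRAFANA_ANNOTATIONS))  # prints [] for the values above
```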

Deploy N9e (Nightingale)


values-n9e.yaml

```yaml
expose:
  type: clusterIP
  tls:
    enabled: false
    certSource: auto
    auto:
      commonName: ""
    secret:
      secretName: ""
  ingress:
    hosts:
      web: n9e.ltpin.com
    controller: default
    kubeVersionOverride: ""
    annotations: {}
    nightingale:
      annotations: {}
  clusterIP:
    name: n9e
    annotations: {}
    ports:
      httpPort: 80
      httpsPort: 443
  nodePort:
    name: nightingale
    ports:
      http:
        port: 80
        nodePort: 30007
      https:
        port: 443
        nodePort: 30009
  loadBalancer:
    name: nightingale
    IP: ""
    ports:
      httpPort: 80
      httpsPort: 443
    annotations: {}
    sourceRanges: []
externalURL: http://hello.n9e.info
ipFamily:
  ipv6:
    enabled: false
  ipv4:
    enabled: true
persistence:
  enabled: true
  resourcePolicy: "keep"
  persistentVolumeClaim:
    database:
      existingClaim: ""
      storageClass: "efs-sc"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 50Gi
    redis:
      existingClaim: ""
      storageClass: "efs-sc"
      subPath: ""
      accessMode: ReadWriteOnce
      size: 50Gi
    prometheus:
      existingClaim: ""
      storageClass: ""
      subPath: ""
      accessMode: ReadWriteOnce
      size: 4Gi
imagePullPolicy: IfNotPresent
imagePullSecrets:
updateStrategy:
  type: RollingUpdate
logLevel: info
caSecretName: ""
secretKey: "not-a-secure-key"
nginx:
  image:
    repository: docker.io/library/nginx
    tag: stable-alpine
  serviceAccountName: ""
  automountServiceAccountToken: false
  replicas: 2
  resources:
    requests:
      memory: 200Mi
      cpu: 100m
    limits:
      memory: 512Mi
      cpu: 1000m
  nodeSelector: {}
  tolerations: []
  affinity: {}
  podAnnotations: {}
  priorityClassName:
database:
  external:
    host: "infra-mysql.prod.internal.123.com"
    port: "3306"
    name: "n9e_v6"
    username: "root"
    password: "123456789"
    sslmode: "disable"
  maxIdleConns: 100
  maxOpenConns: 900
  podAnnotations: {}
redis:
  type: internal
  internal:
    serviceAccountName: ""
    automountServiceAccountToken: false
    image:
      repository: 123456789.dkr.ecr.ap-northeast-1.amazonaws.com/sretools
      tag: redis6.2
    resources:
      requests:
        memory: 200Mi
        cpu: 100m
      limits:
        memory: 512Mi
        cpu: 1000m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    priorityClassName:
  external:
    addr: "192.168.0.2:6379"
    sentinelMasterSet: ""
    username: ""
    password: ""
    mode: "standalone"
  podAnnotations: {}
prometheus:
  type: external
  external:
    host: "kube-prom-stack-kube-prome-prometheus.monitoring"
    port: "9090"
categraf:
  type: external
n9e:
  type: internal
  internal:
    replicas: 1
    serviceAccountName: ""
    automountServiceAccountToken: false
    image:
      repository: flashcatcloud/nightingale
      tag: 8.2.2
    resources:
      requests:
        memory: 500Mi
        cpu: 200m
      limits:
        memory: 2048Mi
        cpu: 2000m
    nodeSelector: {}
    tolerations: []
    affinity: {}
    priorityClassName:
    ibexEnable: false
    ibexPort: "20090"
  external:
    port: "8080"
    ibexEnable: false
    ibexPort: "20090"
  podAnnotations: {}
```
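`database.maxOpenConns` and `maxIdleConns` are connection-pool limits that, presumably, apply per N9e process (N9e is written in Go, and Go's `database/sql` pools are per process). The worst case on the MySQL side is therefore replicas × `maxOpenConns`. At 900 this already exceeds MySQL's default `max_connections` of 151. Whether that matters depends on the server's real setting, which managed services such as RDS derive from instance memory. Below is a sketch of the arithmetic, with the server limit and the reserved-connection count as labeled assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DbPool:
    replicas: int        # n9e.internal.replicas
    max_open: int        # database.maxOpenConns (assumed per process)
    max_idle: int        # database.maxIdleConns (assumed per process)

    def effective_idle(self) -> int:
        # Go's database/sql lowers MaxIdleConns to MaxOpenConns when the
        # latter is positive and smaller.
        if 0 < self.max_open < self.max_idle:
            return self.max_open
        return self.max_idle

    def worst_case_connections(self) -> int:
        return self.replicas * self.max_open


def per_replica_budget(server_max_connections: int, replicas: int, reserved: int = 0) -> int:
    """Largest maxOpenConns per replica that still fits under the server limit."""
    return (server_max_connections - reserved) // replicas


# Assumption: MySQL's default max_connections; check the real value with
# SHOW VARIABLES LIKE 'max_connections'.
SERVER_MAX_CONNECTIONS = 151

if __name__ == "__main__":
    pool = DbPool(replicas=1, max_open=900, max_idle=100)
    print("worst case:", pool.worst_case_connections(), "server limit:", SERVER_MAX_CONNECTIONS)
    # Hypothetical: keep 10 connections free for administrators and other clients.
    print("budget per replica:",
          per_replica_budget(SERVER_MAX_CONNECTIONS, pool.replicas, reserved=10))
```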

ingress-n9e.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  annotations:
    # Listeners: HTTP 80 and HTTPS 443
    alb.ingress.kubernetes.io/listen-ports: '[{"HTTP": 80}, {"HTTPS": 443}]'
    # Redirect HTTP traffic to HTTPS automatically
    alb.ingress.kubernetes.io/ssl-redirect: '443'
    # ARN of the AWS (ACM) certificate
    alb.ingress.kubernetes.io/certificate-arn: arn:aws:acm:ap-northeast-1:123456789:certificate/11111111111
  name: n9e-ingress
  namespace: n9e
spec:
  ingressClassName: alb
  rules:
    - host: n9e.123.com
      http:
        paths:
          - backend:
              service:
                name: n9e-nightingale-center
                port:
                  number: 80
            path: /
            pathType: Prefix
```
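This Ingress exposes N9e at `n9e.123.com`, the same domain the Lark notification template further down hard-codes for its links. That template writes `&tags={{$key}}%3D{{$value}}` for each tag, so a tag value containing `&` or a space could break the query string unless it is escaped somewhere. The sketch below builds the same links with Python's `urllib.parse.urlencode`, which does the escaping, and mirrors the template's duration logic. The event dict keys and values are hypothetical stand-ins for the `$event.*` fields.

```python
from urllib.parse import urlencode

N9E_DOMAIN = "https://n9e.123.com"  # the host served by ingress-n9e.yaml


def event_detail_url(domain: str, event_id: int) -> str:
    return f"{domain}/alert-his-events/{event_id}"


def mute_url(domain: str, event: dict) -> str:
    """Same query parameters as the template's mute link, but percent-encoded."""
    params = [
        ("busiGroup", event["group_id"]),
        ("cate", event["cate"]),
        ("datasource_ids", event["datasource_id"]),
        ("prod", event["rule_prod"]),
    ]
    # One tags=<key>=<value> pair per event tag; urlencode turns "=" into %3D
    # and escapes "&", spaces, etc. inside keys and values.
    params += [("tags", f"{k}={v}") for k, v in sorted(event["tags"].items())]
    return f"{domain}/alert-mutes/add?{urlencode(params)}"


def duration_seconds(event: dict, now: int) -> int:
    # Mirrors the template: time since the first trigger, or the
    # trigger-to-recovery span once the event has recovered.
    end = event["last_eval_time"] if event["is_recovered"] else now
    return end - event["first_trigger_time"]


if __name__ == "__main__":
    sample = {  # hypothetical event
        "id": 42, "group_id": 1, "cate": "prometheus", "datasource_id": 2,
        "rule_prod": "metric", "tags": {"cluster": "eks-prod", "pod": "api 0"},
        "is_recovered": False, "first_trigger_time": 1_700_000_000,
        "last_eval_time": 1_700_000_300,
    }
    print(event_detail_url(N9E_DOMAIN, sample["id"]))
    print(mute_url(N9E_DOMAIN, sample))
    print(duration_seconds(sample, now=1_700_000_900))
```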

Lark notification template

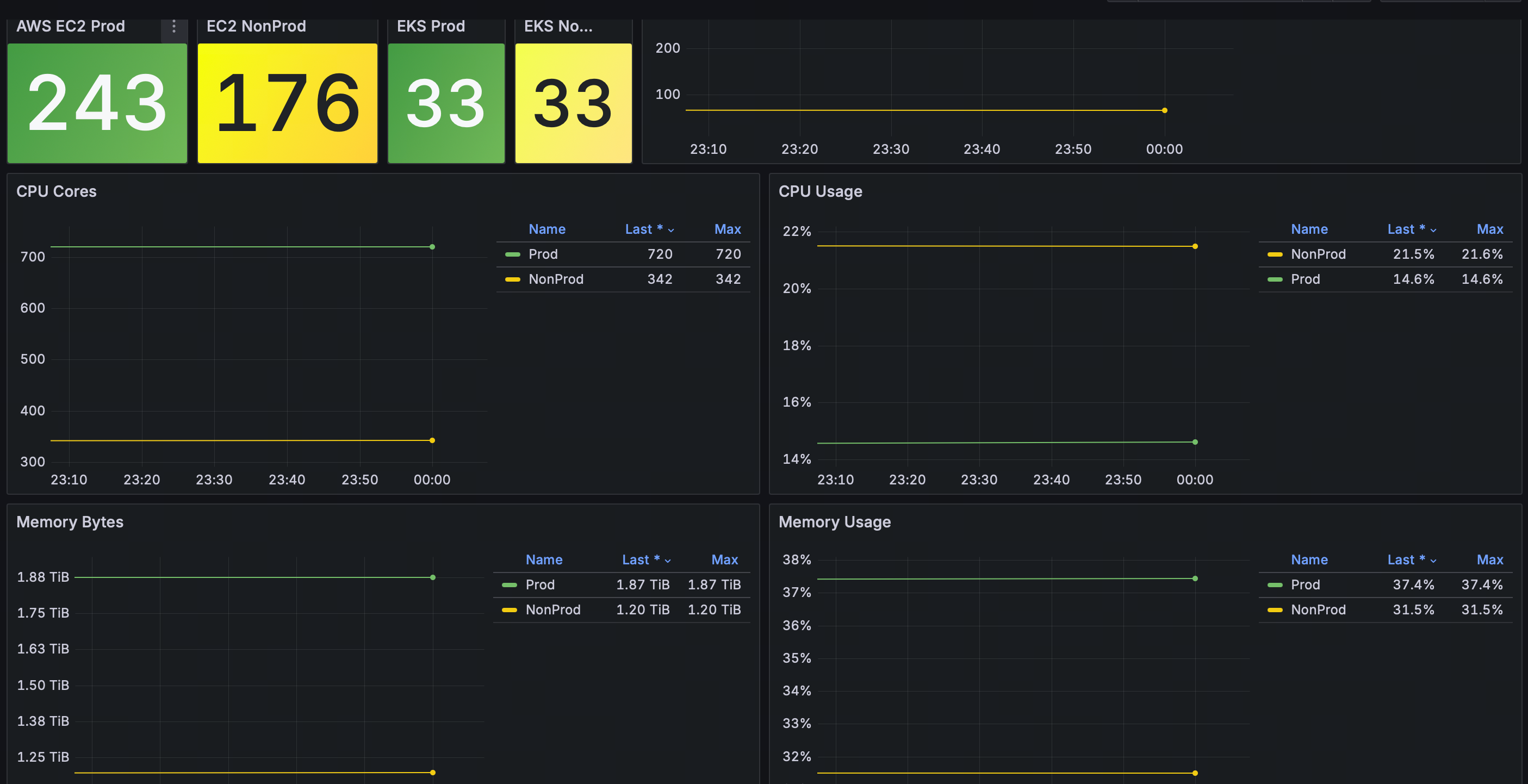

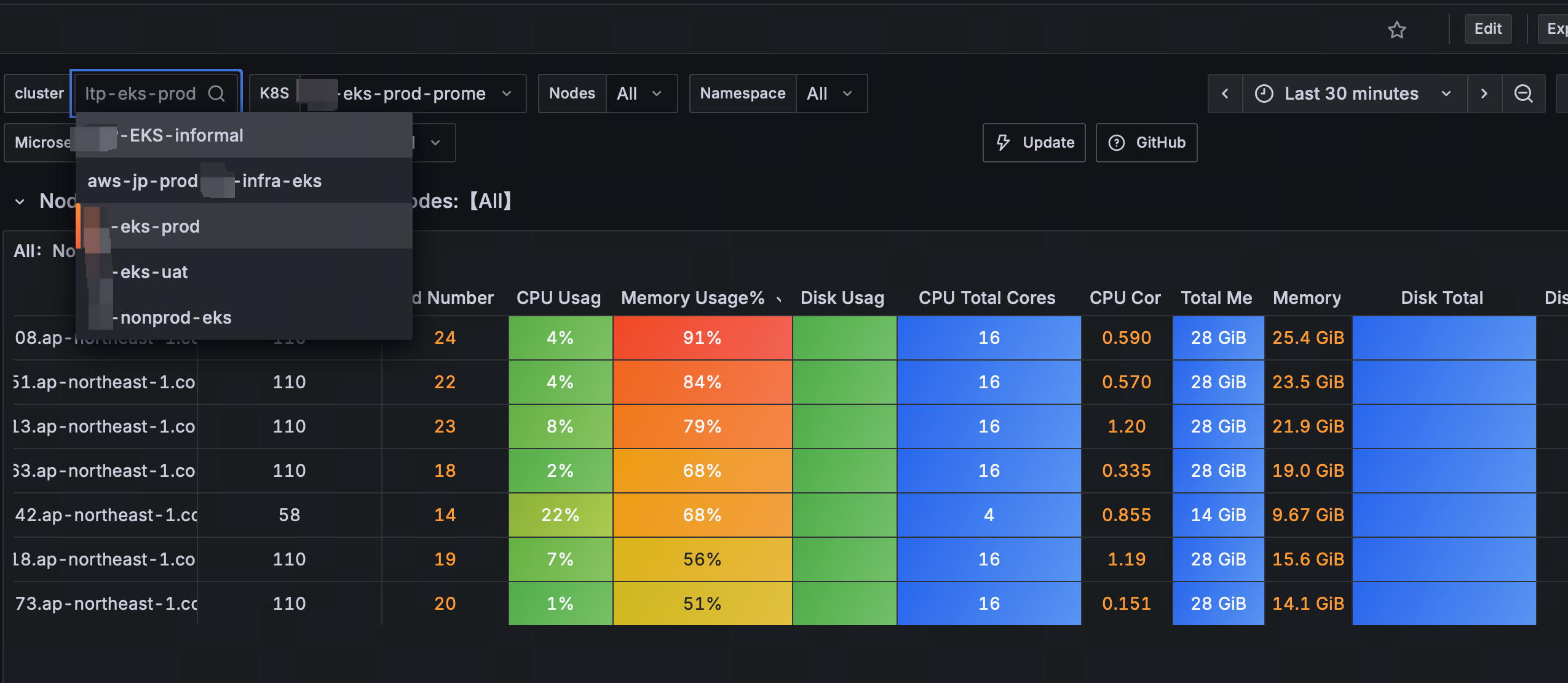

Message body:

```
{{ if $event.IsRecovered }}
{{- if ne $event.Cate "host"}}
**Cluster:** {{$event.Cluster}}{{end}}
**Severity/Status:** S{{$event.Severity}} Recovered
**Alert name:** {{$event.RuleName}}
**Event tags:**
{{range $i, $tag := $event.TagsJSON}}
- {{$tag}}
{{end}}
**Recovered at:** {{timeformat $event.LastEvalTime}}
{{$time_duration := sub now.Unix $event.FirstTriggerTime }}{{if $event.IsRecovered}}{{$time_duration = sub $event.LastEvalTime $event.FirstTriggerTime }}{{end}}**Duration**: {{humanizeDurationInterface $time_duration}}
**Description:** **Service recovered**
{{- else }}
{{- if ne $event.Cate "host"}}
**Cluster:** {{$event.Cluster}}{{end}}
**Severity/Status:** S{{$event.Severity}} Triggered
**Alert name:** {{$event.RuleName}}
**Event tags:**
{{range $i, $tag := $event.TagsJSON}}
- {{$tag}}
{{end}}
**Triggered at:** {{timeformat $event.TriggerTime}}
**Sent at:** {{timestamp}}
**Value at trigger:** {{$event.TriggerValue}}
{{$time_duration := sub now.Unix $event.FirstTriggerTime }}{{if $event.IsRecovered}}{{$time_duration = sub $event.LastEvalTime $event.FirstTriggerTime }}{{end}}**Duration**: {{humanizeDurationInterface $time_duration}}
{{if $event.RuleNote }}**Description:** **{{$event.RuleNote}}**{{end}}
{{- end -}}
{{$domain := "https://n9e.123.com" }}
[Event details]({{$domain}}/alert-his-events/{{$event.Id}})|[Mute for 1 hour]({{$domain}}/alert-mutes/add?busiGroup={{$event.GroupId}}&cate={{$event.Cate}}&datasource_ids={{$event.DatasourceId}}&prod={{$event.RuleProd}}{{range $key, $value := $event.TagsMap}}&tags={{$key}}%3D{{$value}}{{end}})|[View graph]({{$domain}}/metric/explorer?data_source_id={{$event.DatasourceId}}&data_source_name=prometheus&mode=graph&prom_ql={{$event.PromQl|escape}})
```

Title:

```
{{if $event.IsRecovered}}✅ Recovered{{else}}⚠️ Alert{{end}} - {{$event.RuleName}}
```

- With EC2 monitored via auto-discovery and EKS via DaemonSet mode, all resources of both kinds can be collected and their overall utilization calculated to inform resource decisions.


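- With data from multiple Prometheus instances aggregated, resources from every cluster can be viewed on a single dashboard without switching back and forth, and alert rules can be written against, and cover, a single set of metrics.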
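For an overall utilization figure across EC2 and EKS, the pooled ratio (total used / total capacity) is the fleet-wide number. Averaging per-cluster percentages instead gives a small, busy cluster the same weight as a large, idle one. In PromQL against Mimir that means `sum(...) / sum(...)` across all clusters rather than `avg()` over per-cluster ratios. Below is a minimal sketch of the difference, using made-up numbers for illustration only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    name: str
    used_cores: float
    capacity_cores: float


def pooled_utilization(pools: list) -> float:
    """Fleet-wide ratio: total used over total capacity."""
    capacity = sum(p.capacity_cores for p in pools)
    if capacity <= 0:
        raise ValueError("total capacity must be positive")
    return sum(p.used_cores for p in pools) / capacity


def mean_of_ratios(pools: list) -> float:
    """Unweighted average of per-pool ratios (overweights small pools)."""
    return sum(p.used_cores / p.capacity_cores for p in pools) / len(pools)


if __name__ == "__main__":
    fleet = [Pool("ec2", 40, 100), Pool("eks", 9, 10)]  # made-up numbers
    print(f"pooled: {pooled_utilization(fleet):.1%}, mean of ratios: {mean_of_ratios(fleet):.1%}")
```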


http://www.cnblogs.com/Jame-mei

浙公网安备 33010602011771号
