Deep Learning & Neural Style Transfer (VGG), by 何子辰

This week I worked on an assignment that applies deep learning to Neural Style Transfer. The algorithm follows this paper:

Gatys et al. (2015) (https://arxiv.org/abs/1508.06576).
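For reference, here are the costs that the code below minimizes. They use the normalization constants from the assignment code (`compute_content_cost`, `compute_layer_style_cost`, `compute_style_cost`, `total_cost`). The style constant matches the paper's. The content constant is the assignment's own choice, not the paper's factor of 1/2.

In the equations:
- $a^{(C)}$ and $a^{(G)}$ are one layer's activations for the content and generated images.
- $A$ is a layer's activation tensor unrolled to one row per channel.
- $\mathcal{G}$ is its Gram matrix.
- $\lambda^{[l]}$ are the `STYLE_LAYERS` coefficients.
- $\alpha = 10$ and $\beta = 40$ are the defaults of `total_cost`.

$$
\begin{aligned}
J_{content}(C,G) &= \frac{1}{4\, n_H n_W n_C} \sum_{h,w,c} \left(a^{(C)}_{hwc} - a^{(G)}_{hwc}\right)^2 \\
\mathcal{G}^{[l]} &= A A^{\top}, \qquad A \in \mathbb{R}^{n_C \times n_H n_W} \\
J^{[l]}_{style}(S,G) &= \frac{1}{4\, n_C^2 (n_H n_W)^2} \sum_{i,j} \left(\mathcal{G}^{[l](S)}_{ij} - \mathcal{G}^{[l](G)}_{ij}\right)^2 \\
J_{style}(S,G) &= \sum_{l} \lambda^{[l]} J^{[l]}_{style}(S,G) \\
J(G) &= \alpha\, J_{content}(C,G) + \beta\, J_{style}(S,G)
\end{aligned}
$$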

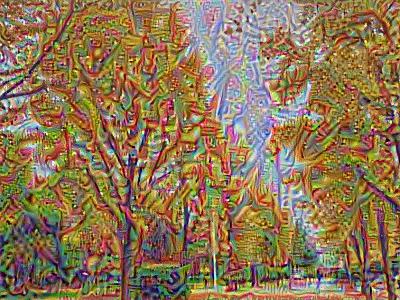

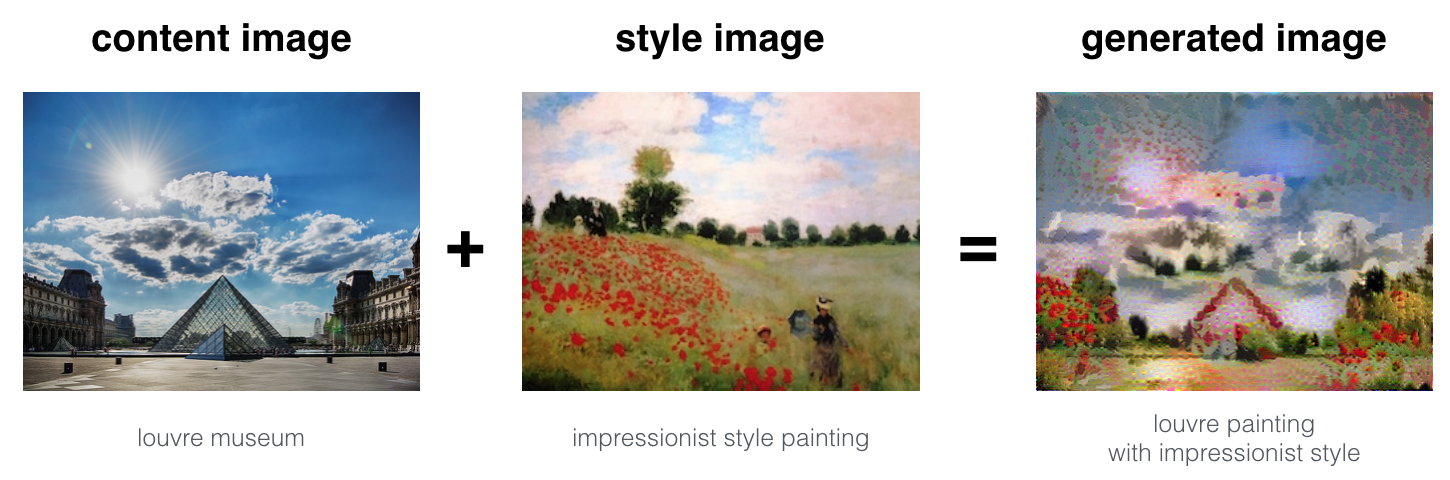

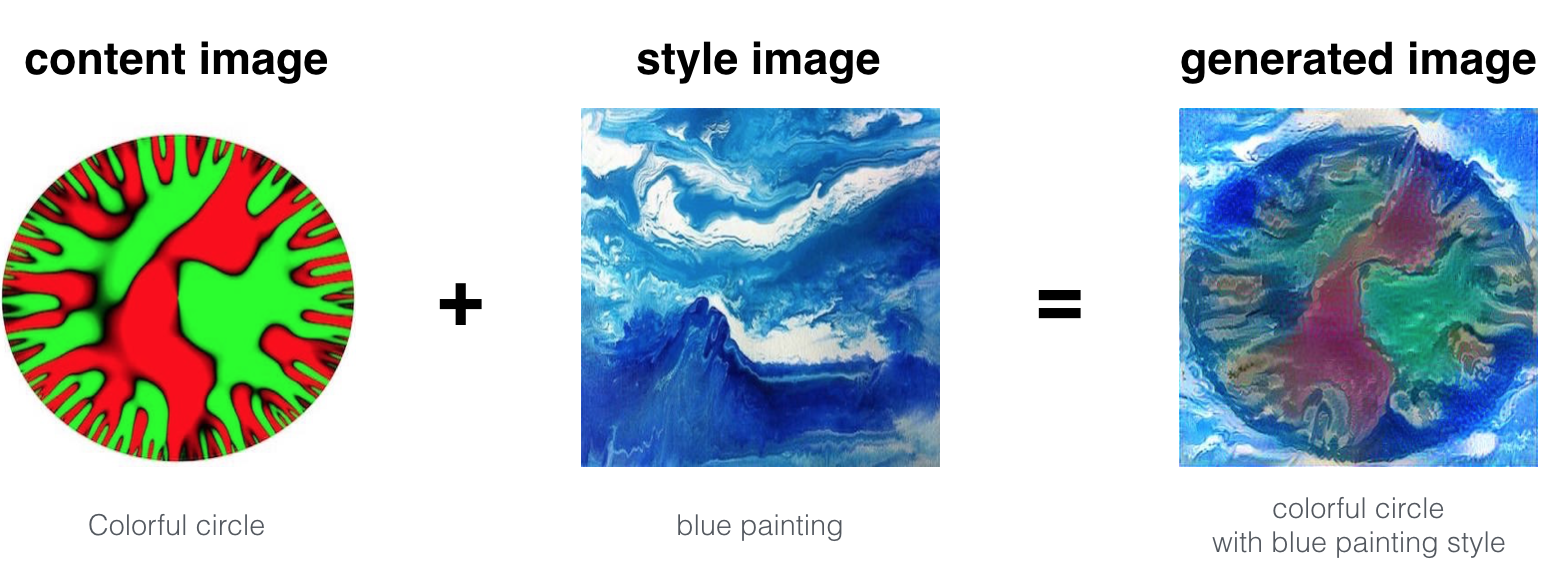

First, the results:

① The beautiful China University of Petroleum (Beijing) + a Picasso-style image (all images were preprocessed to 400x300):

② A few more:

※※ The procedure is as follows:

- Create an Interactive Session

- Load the content image

- Load the style image

- Randomly initialize the image to be generated

- Load the pretrained VGG19 model

- Build the TensorFlow graph:

  - Run the content image through the VGG19 model and compute the content cost

  - Run the style image through the VGG19 model and compute the style cost

  - Compute the total cost

  - Define the optimizer and the learning rate

- Initialize the TensorFlow graph and run it for a large number of iterations, updating the generated image at every step
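As the header comment of the main script says, Neural Style Transfer optimizes a cost function to get pixel values rather than parameter values. The toy NumPy sketch below illustrates only that idea, under heavy simplifying assumptions:
- The "activations" are the pixels themselves (there is no VGG).
- Only a squared-error content cost is used.
- Plain gradient descent with a hand-derived gradient stands in for Adam.

All function names are hypothetical.

```python
import numpy as np

def content_cost(C, G):
    # Same normalization as compute_content_cost: 1 / (4 * n_H * n_W * n_C)
    n_H, n_W, n_C = C.shape
    return np.sum((C - G) ** 2) / (4 * n_H * n_W * n_C)

def content_cost_grad(C, G):
    # Analytic gradient of content_cost with respect to the pixels of G
    n_H, n_W, n_C = C.shape
    return -2.0 * (C - G) / (4 * n_H * n_W * n_C)

def optimize_pixels(C, G0, learning_rate, num_iterations):
    """Gradient descent where the optimized 'parameters' are the image pixels."""
    G = G0.copy()
    history = []
    for _ in range(num_iterations):
        history.append(content_cost(C, G))             # cost before this update
        G -= learning_rate * content_cost_grad(C, G)   # update the image itself
    return G, history

rng = np.random.default_rng(0)
C = rng.uniform(0, 255, size=(3, 4, 3))      # toy "content" image: n_H=3, n_W=4, n_C=3
G0 = rng.uniform(-20, 20, size=C.shape)      # noisy starting image
G, history = optimize_pixels(C, G0, learning_rate=10.0, num_iterations=50)
print(history[0], history[-1])
```

Each step moves every pixel of G a fixed fraction of the way toward C. Here that fraction is lr/(2·n_H·n_W·n_C) = 10/72, so the cost shrinks geometrically. In the real assignment, the gradient instead flows back through the frozen VGG layers into `model['input']`, the only trainable `tf.Variable`.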

Code for the VGG network (nst_utils.py):

```python
### Part of this code is due to the MatConvNet team and is used to load the parameters of the pretrained VGG19 model in the notebook ###

import os
import sys
import scipy.io
import matplotlib.pyplot as plt
from matplotlib.pyplot import imshow
from PIL import Image

import numpy as np
import tensorflow as tf  # TensorFlow 1.x API; on TF 2.x use: import tensorflow.compat.v1 as tf; tf.disable_v2_behavior()

class CONFIG:
    IMAGE_WIDTH = 400
    IMAGE_HEIGHT = 300
    COLOR_CHANNELS = 3
    NOISE_RATIO = 0.6
    MEANS = np.array([123.68, 116.779, 103.939]).reshape((1,1,1,3))
    VGG_MODEL = 'pretrained-model/imagenet-vgg-verydeep-19.mat' # Pick the VGG 19-layer model from the paper "Very Deep Convolutional Networks for Large-Scale Image Recognition".
    STYLE_IMAGE = 'images/stone_style.jpg' # Style image to use.
    CONTENT_IMAGE = 'images/content300.jpg' # Content image to use.
    OUTPUT_DIR = 'output/'

def load_vgg_model(path):
    """
    Returns a model for the purpose of 'painting' the picture.
    Takes only the convolution layer weights and wraps them using the TensorFlow
    Conv2d, Relu and AveragePooling layers. VGG actually uses maxpool but
    the paper indicates that using AveragePooling yields better results.
    The last few fully connected layers are not used.
    Here is the detailed configuration of the VGG model:
        0 is conv1_1 (3, 3, 3, 64)
        1 is relu
        2 is conv1_2 (3, 3, 64, 64)
        3 is relu
        4 is maxpool
        5 is conv2_1 (3, 3, 64, 128)
        6 is relu
        7 is conv2_2 (3, 3, 128, 128)
        8 is relu
        9 is maxpool
        10 is conv3_1 (3, 3, 128, 256)
        11 is relu
        12 is conv3_2 (3, 3, 256, 256)
        13 is relu
        14 is conv3_3 (3, 3, 256, 256)
        15 is relu
        16 is conv3_4 (3, 3, 256, 256)
        17 is relu
        18 is maxpool
        19 is conv4_1 (3, 3, 256, 512)
        20 is relu
        21 is conv4_2 (3, 3, 512, 512)
        22 is relu
        23 is conv4_3 (3, 3, 512, 512)
        24 is relu
        25 is conv4_4 (3, 3, 512, 512)
        26 is relu
        27 is maxpool
        28 is conv5_1 (3, 3, 512, 512)
        29 is relu
        30 is conv5_2 (3, 3, 512, 512)
        31 is relu
        32 is conv5_3 (3, 3, 512, 512)
        33 is relu
        34 is conv5_4 (3, 3, 512, 512)
        35 is relu
        36 is maxpool
        37 is fullyconnected (7, 7, 512, 4096)
        38 is relu
        39 is fullyconnected (1, 1, 4096, 4096)
        40 is relu
        41 is fullyconnected (1, 1, 4096, 1000)
        42 is softmax
    """

    vgg = scipy.io.loadmat(path)

    vgg_layers = vgg['layers']

    def _weights(layer, expected_layer_name):
        """
        Return the weights and bias from the VGG model for a given layer.
        """
        wb = vgg_layers[0][layer][0][0][2]
        W = wb[0][0]
        b = wb[0][1]
        layer_name = vgg_layers[0][layer][0][0][0][0]
        assert layer_name == expected_layer_name
        return W, b

    def _relu(conv2d_layer):
        """
        Return the RELU function wrapped over a TensorFlow layer. Expects a
        Conv2d layer input.
        """
        return tf.nn.relu(conv2d_layer)

    def _conv2d(prev_layer, layer, layer_name):
        """
        Return the Conv2D layer using the weights, biases from the VGG
        model at 'layer'.
        """
        W, b = _weights(layer, layer_name)
        W = tf.constant(W)
        b = tf.constant(np.reshape(b, (b.size)))
        return tf.nn.conv2d(prev_layer, filter=W, strides=[1, 1, 1, 1], padding='SAME') + b

    def _conv2d_relu(prev_layer, layer, layer_name):
        """
        Return the Conv2D + RELU layer using the weights, biases from the VGG
        model at 'layer'.
        """
        return _relu(_conv2d(prev_layer, layer, layer_name))

    def _avgpool(prev_layer):
        """
        Return the AveragePooling layer.
        """
        return tf.nn.avg_pool(prev_layer, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME')

    # Constructs the graph model.
    graph = {}
    graph['input']    = tf.Variable(np.zeros((1, CONFIG.IMAGE_HEIGHT, CONFIG.IMAGE_WIDTH, CONFIG.COLOR_CHANNELS)), dtype = 'float32')
    graph['conv1_1']  = _conv2d_relu(graph['input'], 0, 'conv1_1')
    graph['conv1_2']  = _conv2d_relu(graph['conv1_1'], 2, 'conv1_2')
    graph['avgpool1'] = _avgpool(graph['conv1_2'])
    graph['conv2_1']  = _conv2d_relu(graph['avgpool1'], 5, 'conv2_1')
    graph['conv2_2']  = _conv2d_relu(graph['conv2_1'], 7, 'conv2_2')
    graph['avgpool2'] = _avgpool(graph['conv2_2'])
    graph['conv3_1']  = _conv2d_relu(graph['avgpool2'], 10, 'conv3_1')
    graph['conv3_2']  = _conv2d_relu(graph['conv3_1'], 12, 'conv3_2')
    graph['conv3_3']  = _conv2d_relu(graph['conv3_2'], 14, 'conv3_3')
    graph['conv3_4']  = _conv2d_relu(graph['conv3_3'], 16, 'conv3_4')
    graph['avgpool3'] = _avgpool(graph['conv3_4'])
    graph['conv4_1']  = _conv2d_relu(graph['avgpool3'], 19, 'conv4_1')
    graph['conv4_2']  = _conv2d_relu(graph['conv4_1'], 21, 'conv4_2')
    graph['conv4_3']  = _conv2d_relu(graph['conv4_2'], 23, 'conv4_3')
    graph['conv4_4']  = _conv2d_relu(graph['conv4_3'], 25, 'conv4_4')
    graph['avgpool4'] = _avgpool(graph['conv4_4'])
    graph['conv5_1']  = _conv2d_relu(graph['avgpool4'], 28, 'conv5_1')
    graph['conv5_2']  = _conv2d_relu(graph['conv5_1'], 30, 'conv5_2')
    graph['conv5_3']  = _conv2d_relu(graph['conv5_2'], 32, 'conv5_3')
    graph['conv5_4']  = _conv2d_relu(graph['conv5_3'], 34, 'conv5_4')
    graph['avgpool5'] = _avgpool(graph['conv5_4'])

    return graph

def generate_noise_image(content_image, noise_ratio = CONFIG.NOISE_RATIO):
    """
    Generates a noisy image by adding random noise to the content_image
    """

    # Generate a random noise_image
    noise_image = np.random.uniform(-20, 20, (1, CONFIG.IMAGE_HEIGHT, CONFIG.IMAGE_WIDTH, CONFIG.COLOR_CHANNELS)).astype('float32')

    # Set the input_image to be a weighted average of the content_image and a noise_image
    input_image = noise_image * noise_ratio + content_image * (1 - noise_ratio)

    return input_image


def reshape_and_normalize_image(image):
    """
    Reshape and normalize the input image (content or style)
    """

    # Reshape image to match the expected input of VGG19: (1, n_H, n_W, n_C)
    image = np.reshape(image, ((1,) + image.shape))

    # Subtract the mean to match the expected input of VGG19
    image = image - CONFIG.MEANS

    return image


def save_image(path, image):

    # Un-normalize the image so that it looks good
    image = image + CONFIG.MEANS

    # Clip and save the image (scipy.misc.imsave was removed in SciPy 1.2, so PIL is used)
    image = np.clip(image[0], 0, 255).astype('uint8')
    Image.fromarray(image).save(path)
```
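The three helpers at the end of nst_utils.py form a round trip:
1. Subtract the ImageNet channel means before feeding VGG.
2. Blend with uniform noise to initialize G.
3. Add the means back and clip to [0, 255] when saving.

Below is a NumPy-only sketch of that round trip, with the file I/O left out. The function names are hypothetical stand-ins for the originals, and the 3x4 image size is only for the check. One deliberate deviation: `denormalize` rounds before casting, because `astype('uint8')` truncates, so a value such as 254.9999 would otherwise become 254.

```python
import numpy as np

# Per-channel ImageNet means, shaped to broadcast over (1, H, W, 3)
MEANS = np.array([123.68, 116.779, 103.939]).reshape((1, 1, 1, 3))

def reshape_and_normalize(image):
    # (H, W, 3) uint8 -> (1, H, W, 3) float, mean-subtracted per channel
    return image.reshape((1,) + image.shape) - MEANS

def noise_init(content, noise_ratio=0.6, rng=None):
    # Weighted average of uniform noise in [-20, 20) and the normalized content image
    rng = np.random.default_rng() if rng is None else rng
    noise = rng.uniform(-20, 20, content.shape).astype('float32')
    return noise * noise_ratio + content * (1 - noise_ratio)

def denormalize(image):
    # Add the means back, drop the batch axis, round, clip to [0, 255], cast to uint8
    return np.clip(np.rint(image[0] + MEANS[0]), 0, 255).astype('uint8')

rng = np.random.default_rng(0)
img = rng.integers(0, 256, size=(3, 4, 3), dtype=np.uint8)
x = reshape_and_normalize(img)
g = noise_init(x, rng=rng)
print(x.shape, g.shape)
```

Because the noise is added after mean subtraction, it lives in the same normalized space that VGG sees. With `noise_ratio=0.6`, every value of G stays within ±12 of 0.4 times the normalized content.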

Next comes the main script:


```python
# Deep Learning & Art: Neural Style Transfer
# This assignment:
# - Implement the neural style transfer algorithm
# - Generate novel artistic images using your algorithm
#
# - Most of the algorithms you've studied optimize a cost function to get a set of parameter
#   values. In Neural Style Transfer, you'll optimize a cost function to get pixel values.

import os
import sys
import scipy.io
import matplotlib.pyplot as plt
from matplotlib.pyplot import imshow
from PIL import Image
from nst_utils import *
import numpy as np
import tensorflow as tf  # TensorFlow 1.x API (tf.InteractiveSession, tf.train.AdamOptimizer)

# Essential params
class CONFIG:
    IMAGE_WIDTH = 400
    IMAGE_HEIGHT = 300
    COLOR_CHANNELS = 3
    NOISE_RATIO = 0.6
    MEANS = np.array([123.68, 116.779, 103.939]).reshape((1,1,1,3))
    VGG_MODEL = 'pretrained-model/imagenet-vgg-verydeep-19.mat' # Pick the VGG 19-layer model from the paper "Very Deep Convolutional Networks for Large-Scale Image Recognition".
    STYLE_IMAGE = 'HZC_test_image/2.jpg' # Style image to use.
    CONTENT_IMAGE = 'HZC_test_image/bjs.jpg' # Content image to use.
    OUTPUT_DIR = 'output/'

# STYLE weights
# When you complete the assignment, come back and experiment with different weights to see
# how they change the generated image G.
# default value:
# Different weights give the final generated image a different style.
STYLE_LAYERS = [
    ('conv1_1', 0.2),
    ('conv2_1', 0.2),
    ('conv3_1', 0.4),
    ('conv4_1', 0.4),
    ('conv5_1', 0.4)
]

# - use a previously trained convolutional network, and build on top of that.
# - model: vgg-19; a 19-layer version of VGG network.
# - this model has already been trained on the very large ImageNet database.

# step 1: run the following line to load the parameters of the VGG model.
# Uses the load_vgg_model function in nst_utils.py
model = load_vgg_model("pretrained-model/imagenet-vgg-verydeep-19.mat")
# print(model)

# CONTENT image (scipy.misc.imread was removed in SciPy 1.2; PIL is used instead)
content_image = np.array(Image.open("images/louvre.jpg").convert('RGB'))
imshow(content_image)

# STYLE image
style_image = np.array(Image.open("images/monet_800600.jpg").convert('RGB'))
imshow(style_image)

# Helper functions (the same as in nst_utils.py)

# Reshape and normalize the input image (content or style)
def reshape_and_normalize_image(image):

    # Reshape image to match the expected input of VGG19: (1, n_H, n_W, n_C)
    # image = np.reshape(image,(300,400,3))
    image = np.reshape(image, ((1,) + image.shape))

    # Subtract the mean to match the expected input of VGG19
    image = image - CONFIG.MEANS
    return image

# Generate a noisy image by adding random noise to the content_image
def generate_noise_image(content_image, noise_ratio=CONFIG.NOISE_RATIO):

    # Generate a random noise_image
    noise_image = np.random.uniform(-20, 20, (1, CONFIG.IMAGE_HEIGHT, CONFIG.IMAGE_WIDTH, CONFIG.COLOR_CHANNELS)).astype('float32')

    # Set the input_image to be a weighted average of the content image and a noise image
    input_image = noise_image*noise_ratio + content_image*(1 - noise_ratio)

    return input_image

# Save image
def save_image(path, image):

    # Un-normalize the image so that it looks good
    image = image + CONFIG.MEANS

    # Clip and save the image (PIL replaces the removed scipy.misc.imsave)
    image = np.clip(image[0], 0, 255).astype('uint8')
    Image.fromarray(image).save(path)

# Compute the content cost using TensorFlow
def compute_content_cost(a_C, a_G):
    """
    Compute the content cost

    Arguments:
    a_C -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations of the content image C
    a_G -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations of the generated image G

    Returns:
    J_content -- scalar computed with the content-cost equation
    """
    # Retrieve dimensions from a_G
    m, n_H, n_W, n_C = a_G.get_shape().as_list()

    # Unroll a_C and a_G to shape (n_H*n_W, n_C)
    a_C_unrolled = tf.reshape(a_C, [n_H*n_W, n_C])
    a_G_unrolled = tf.reshape(a_G, [n_H*n_W, n_C])

    # Compute the cost with TensorFlow
    params = 1/(4*n_H*n_W*n_C)
    J_content = params*(tf.reduce_sum(tf.square(tf.subtract(a_C_unrolled, a_G_unrolled))))

    return J_content


# Gram matrix (style matrix)
def gram_matrix(A):
    """
    Argument:
    A -- matrix of shape (n_C, n_H*n_W)

    Returns:
    GA -- Gram matrix of A, of shape (n_C, n_C)
    """
    GA = tf.matmul(A, tf.transpose(A))

    return GA

# Style cost
# This computes the cost for a single layer l only
def compute_layer_style_cost(a_S, a_G):
    """
    Arguments:
    a_S -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations representing the style of image S
    a_G -- tensor of dimension (1, n_H, n_W, n_C), hidden layer activations representing the style of image G

    Returns:
    J_style_layer -- tensor representing a scalar value, style cost
    """

    # Retrieve dimensions from a_G
    m, n_H, n_W, n_C = a_G.get_shape().as_list()

    # Reshape the activations to shape (n_C, n_H*n_W): unroll to (n_H*n_W, n_C) first, then
    # transpose. Reshaping straight to (n_C, n_H*n_W) would mix different channels in one row.
    a_S = tf.transpose(tf.reshape(a_S, [n_H*n_W, n_C]))
    a_G = tf.transpose(tf.reshape(a_G, [n_H*n_W, n_C]))

    # Compute Gram matrices for both images S and G
    GS = gram_matrix(a_S)
    GG = gram_matrix(a_G)

    # Compute the loss
    params = 1/(4*(n_C**2)*((n_H*n_W)**2))
    J_style_layer = params*(tf.reduce_sum(tf.square(tf.subtract(GS, GG))))

    return J_style_layer

# Combine the style costs of several layers
def compute_style_cost(model, STYLE_LAYERS):
    """
    Computes the overall style cost from several chosen layers.

    Arguments:
    model -- our TensorFlow model (dict of layer tensors)
    STYLE_LAYERS -- a Python list containing:
                        - the names of the layers we would like to extract style from
                        - a coefficient for each of them

    Returns:
    J_style -- tensor representing a scalar value
    """
    # The overall style cost
    J_style = 0

    for layer_name, coeff in STYLE_LAYERS:
        # Select the output tensor of the current layer
        out = model[layer_name]

        # Set a_S to the activation of this layer. This uses the global `sess`,
        # and model['input'] must currently hold the style image.
        a_S = sess.run(out)

        # Set a_G to the same (not yet evaluated) tensor; it is evaluated later,
        # once model['input'] holds the generated image
        a_G = out

        # Compute the style cost for the current layer
        J_style_layer = compute_layer_style_cost(a_S, a_G)

        # Add coeff * J_style_layer to the overall style cost
        J_style += coeff * J_style_layer

    return J_style

# Define the total cost to optimize
def total_cost(J_content, J_style, alpha=10, beta=40):
    """
    Compute the total cost function

    Arguments:
    J_content -- content cost
    J_style -- style cost
    alpha -- hyperparameter weighting the importance of the content cost
    beta -- hyperparameter weighting the importance of the style cost

    Returns:
    J -- total cost, J = alpha * J_content + beta * J_style
    """
    J = alpha*J_content + beta*J_style
    return J

# Solving the optimization problem
# STEP1: Create an interactive session
tf.reset_default_graph()

sess = tf.InteractiveSession()

# STEP2: Load the content & style images (paths from CONFIG; both must already be 400x300)
content_image = np.array(Image.open(CONFIG.CONTENT_IMAGE).convert('RGB'))
content_image = reshape_and_normalize_image(content_image)
print(content_image.shape)

style_image = np.array(Image.open(CONFIG.STYLE_IMAGE).convert('RGB'))
style_image = reshape_and_normalize_image(style_image)
print(style_image.shape)

# STEP3: Randomly initialize the image to be generated
generated_image = generate_noise_image(content_image)
# print(generated_image.shape)  # (1, 300, 400, 3)
imshow(generated_image[0])

# STEP4: Load the VGG19 model
model = load_vgg_model("pretrained-model/imagenet-vgg-verydeep-19.mat")

# STEP5: Build the TensorFlow graph

# Run the content image through the VGG19 model and compute the content cost
# Assign the content image to be the input of the VGG model
sess.run(model['input'].assign(content_image))
# Select the output tensor of layer conv4_2
out = model['conv4_2']
# Set a_C to be the hidden layer activation from the layer we have selected
a_C = sess.run(out)
# a_G refers to the same tensor; it is evaluated later with the generated image as input
a_G = out
J_content = compute_content_cost(a_C, a_G)

# Run the style image through the VGG19 model and compute the style cost
# Assign the input of the model to be the "style" image
sess.run(model['input'].assign(style_image))
J_style = compute_style_cost(model, STYLE_LAYERS)

# Compute the total cost
J = total_cost(J_content, J_style, alpha=10, beta=40)

# Define the optimizer and the learning rate
optimizer = tf.train.AdamOptimizer(2.0)
# train_step
train_step = optimizer.minimize(J)

# STEP6: Initialize the TensorFlow graph and run it for a large number of iterations,
# updating the generated image at every step.
def model_nn(sess, input_image, num_iterations=200):

    # Initialize the global variables
    sess.run(tf.global_variables_initializer())

    # Assign the noisy input image as the starting generated image
    sess.run(model['input'].assign(input_image))

    # Make sure the output directory exists before saving
    os.makedirs(CONFIG.OUTPUT_DIR, exist_ok=True)

    for i in range(num_iterations):

        # Run the session on the train_step to minimize the total cost
        sess.run(train_step)

        # Compute the generated image by running the session on the current model['input']
        generated_image = sess.run(model['input'])

        # Print the costs and save a snapshot every 20 iterations
        if i % 20 == 0:
            Jt, Jc, Js = sess.run([J, J_content, J_style])
            print("Iteration " + str(i) + ":")
            print("total cost = " + str(Jt))
            print("content cost = " + str(Jc))
            print("style cost = " + str(Js))

            save_image(CONFIG.OUTPUT_DIR + str(i) + ".png", generated_image)

    # Save the last generated image
    save_image(CONFIG.OUTPUT_DIR + "generated_image_cup.jpg", generated_image)

    return generated_image

model_nn(sess, generated_image, num_iterations=200)

# plt.show()

# Test code for "compute_content_cost"
# tf.reset_default_graph()
#
# with tf.Session() as test1:
#     tf.set_random_seed(1)
#     a_C = tf.random_normal([1,4,4,3], mean=1, stddev=4)
#     a_G = tf.random_normal([1,4,4,3], mean=1, stddev=4)
#     J_content = compute_content_cost(a_C, a_G)
#     print("J_content = " + str(J_content.eval()))

# Test code for "gram_matrix"
# tf.reset_default_graph()
#
# with tf.Session() as test2:
#     tf.set_random_seed(1)
#     A = tf.random_normal([3, 2*1], mean=1, stddev=4)
#     GA = gram_matrix(A)
#     print("GA = " + str(GA.eval()))

# Test code for "compute_layer_style_cost"
# tf.reset_default_graph()
#
# with tf.Session() as test3:
#     tf.set_random_seed(1)
#     a_S = tf.random_normal([1,4,4,3], mean=1, stddev=4)
#     a_G = tf.random_normal([1,4,4,3], mean=1, stddev=4)
#     J_style_layer = compute_layer_style_cost(a_S, a_G)
#     print("J_style_layer = " + str(J_style_layer.eval()))

# Test code for "total_cost"
# tf.reset_default_graph()
#
# with tf.Session() as test:
#     np.random.seed(3)
#     J_content = np.random.randn()
#     J_style = np.random.randn()
#     J = total_cost(J_content, J_style)
#     print("J = " + str(J))
```
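To check the TensorFlow cost functions independently of a session, here is a NumPy sketch of the same formulas. The `np_`-prefixed helper names are hypothetical.

The sketch also shows why `compute_layer_style_cost` must transpose the (n_H*n_W, n_C) matrix instead of reshaping straight to (n_C, n_H*n_W). A direct reshape packs values from different channels into each row. The resulting "Gram matrix" is then no longer a channel-by-channel correlation, even though its shape is correct.

```python
import numpy as np

def np_content_cost(a_C, a_G):
    # a_C, a_G: (1, n_H, n_W, n_C) activations
    _, n_H, n_W, n_C = a_G.shape
    return np.sum((a_C - a_G) ** 2) / (4 * n_H * n_W * n_C)

def unroll_channels(a):
    # (1, n_H, n_W, n_C) -> (n_C, n_H*n_W): one row per channel
    _, n_H, n_W, n_C = a.shape
    return a.reshape(n_H * n_W, n_C).T

def np_gram(A):
    # A: (n_C, n_H*n_W) -> (n_C, n_C); entry (i, j) is the dot product of channels i and j
    return A @ A.T

def np_layer_style_cost(a_S, a_G):
    _, n_H, n_W, n_C = a_G.shape
    GS = np_gram(unroll_channels(a_S))
    GG = np_gram(unroll_channels(a_G))
    return np.sum((GS - GG) ** 2) / (4 * n_C ** 2 * (n_H * n_W) ** 2)

def np_total_cost(J_content, J_style, alpha=10, beta=40):
    return alpha * J_content + beta * J_style

rng = np.random.default_rng(1)
a_S = rng.normal(1, 4, size=(1, 4, 4, 3))
a_G = rng.normal(1, 4, size=(1, 4, 4, 3))

# With the transpose, row 0 holds exactly channel 0...
assert np.array_equal(unroll_channels(a_S)[0], a_S[0, :, :, 0].ravel())
# ...whereas a direct reshape to (n_C, n_H*n_W) interleaves the channels
wrong = a_S.reshape(3, 16)
print(np.array_equal(wrong[0], a_S[0, :, :, 0].ravel()))
print(np_total_cost(np_content_cost(a_S, a_G), np_layer_style_cost(a_S, a_G)))
```

Feeding the same random activations to the TensorFlow functions and to these helpers is a quick way to confirm that the graph version computes the intended values.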

浙公网安备 33010602011771号