【Program】PyTorch Getting Started ④: TORCHVISION OBJECT DETECTION FINETUNING TUTORIAL

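Before starting, there is one preparation step: installing the COCO API (pycocotools), mainly for its IoU computation code, which is used to evaluate the model's performance. I spent an evening plus a morning on this on my old laptop, which I bought in 2012. I tried many of the fixes suggested online and still could not get it working. I got stuck on pycocotools, and the final error was: e:\anaconda3\include\pyconfig.h(59): fatal error C1083: Cannot open include file: 'io.h': No such file or directory.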

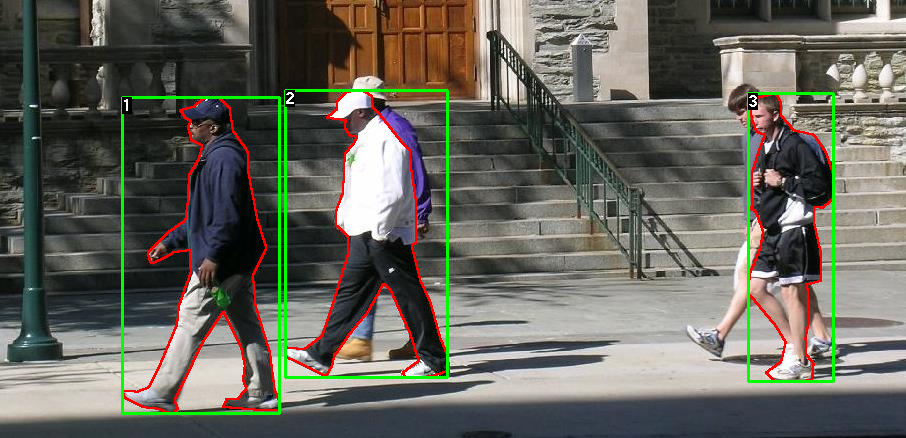

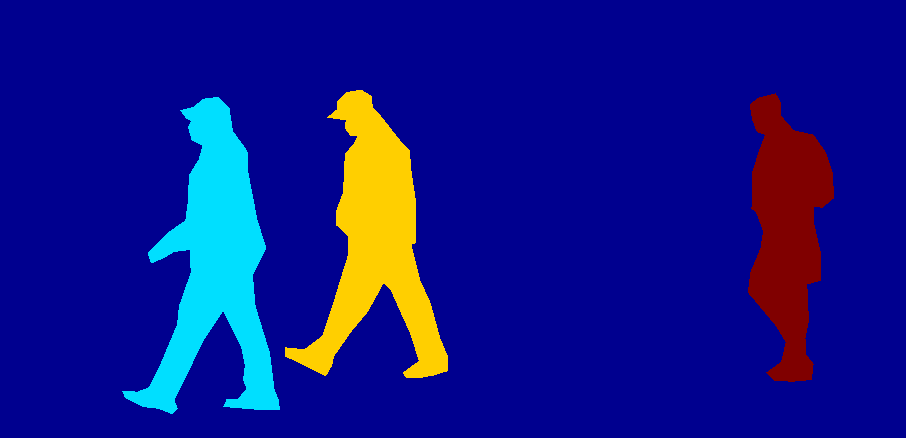
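pycocotools' COCO evaluation matches predictions to ground truth by IoU (intersection over union) between predicted and ground-truth boxes or masks. The sketch below makes that quantity concrete for two boxes in the `[xmin, ymin, xmax, ymax]` format that the dataset class below produces. It is minimal and dependency-free. `box_iou` is a hypothetical helper written for illustration, not pycocotools' own code, and it treats coordinates as continuous (no +1 pixel convention).

```python
def box_iou(box_a, box_b):
    """IoU of two boxes given as (xmin, ymin, xmax, ymax)."""
    # corners of the intersection rectangle
    ix_min = max(box_a[0], box_b[0])
    iy_min = max(box_a[1], box_b[1])
    ix_max = min(box_a[2], box_b[2])
    iy_max = min(box_a[3], box_b[3])
    # clamp at zero so that non-overlapping boxes give zero intersection
    inter = max(0.0, ix_max - ix_min) * max(0.0, iy_max - iy_min)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


# two 10x10 boxes overlapping in a 5x5 square: 25 / (100 + 100 - 25)
print(box_iou((0, 0, 10, 10), (5, 5, 15, 15)))
```

IoU is 1 for identical boxes and 0 for disjoint ones. COCO-style mAP averages precision over IoU thresholds from 0.5 to 0.95.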

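This tutorial uses the Penn-Fudan pedestrian detection and segmentation dataset to train a Mask R-CNN instance segmentation model. The dataset contains 170 images with 345 pedestrian instances. The scenes are mostly campus and urban streets, and every image contains at least one pedestrian.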

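This post is based on the article by 一个菜鸟的奋斗, "A step-by-step guide to training your own Mask R-CNN image instance segmentation model (official PyTorch tutorial)".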

Data processing

After downloading the dataset as described in the official tutorial, you can see its directory structure. PennFudanPed/readme explains it in detail.

PennFudanPed/
  PedMasks/
    FudanPed00001_mask.png
    FudanPed00002_mask.png
    FudanPed00003_mask.png
    FudanPed00004_mask.png
    ...
  PNGImages/
    FudanPed00001.png
    FudanPed00002.png
    FudanPed00003.png
    FudanPed00004.png

Sample images can be previewed with the method given by 一个菜鸟的奋斗:

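from PIL import Image

# view an image (in a Jupyter notebook, evaluating the Image object also displays it inline)
img = Image.open('PennFudanPed/PNGImages/FudanPed00001.png')
img.show()

# each mask pixel stores an instance index; attach a palette so the instances get distinct colours
mask = Image.open('PennFudanPed/PedMasks/FudanPed00001_mask.png')
mask.putpalette([
    0, 0, 0,      # black background
    255, 0, 0,    # index 1 is red
    255, 255, 0,  # index 2 is yellow
    255, 153, 0,  # index 3 is orange
])
mask.show()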
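This is why `putpalette` is needed. PedMasks images store an instance index in each pixel (0 for background) rather than a display colour. A palette is simply a lookup table from index to RGB. Below is a minimal NumPy sketch of that lookup on a made-up 3×4 mask; the array values are illustrative and not taken from the dataset.

```python
import numpy as np

# hypothetical 3x4 instance mask: 0 = background, 1 and 2 = two pedestrians
mask = np.array([
    [0, 1, 1, 0],
    [0, 1, 1, 2],
    [0, 0, 2, 2],
], dtype=np.uint8)

# same colours as the putpalette() call above, one RGB triple per index
palette = np.array([
    [0, 0, 0],      # index 0: black background
    [255, 0, 0],    # index 1: red
    [255, 255, 0],  # index 2: yellow
    [255, 153, 0],  # index 3: orange
], dtype=np.uint8)

# fancy indexing replaces every index with its colour: (H, W) -> (H, W, 3)
rgb = palette[mask]
print(rgb.shape)          # (3, 4, 3)
print(rgb[1, 3].tolist()) # [255, 255, 0]
```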

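Before training the model, we need to write the dataset loading interface. Every new problem starts with this step, and building the model and processing the data go hand in hand. Here is my code with brief comments: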

# -*- coding:utf-8 -*-
# @Time  : 2020/2/14 9:13
# @Author: zhangqingbo
# @File  : 1_torchvision_object_detection_finetuning.py
import os

import numpy as np
import torch
from PIL import Image


class PennFudanDataset(object):
    def __init__(self, root, transforms):
        self.root = root
        self.transforms = transforms
        # load all image files, sorting them to ensure that
        # they are aligned
        self.imgs = list(sorted(os.listdir(os.path.join(root, "PNGImages"))))
        self.masks = list(sorted(os.listdir(os.path.join(root, "PedMasks"))))

    def __getitem__(self, idx):
        # load images and masks
        img_path = os.path.join(self.root, "PNGImages", self.imgs[idx])
        mask_path = os.path.join(self.root, "PedMasks", self.masks[idx])
        img = Image.open(img_path).convert("RGB")
        # note that we haven't converted the mask to RGB,
        # because each color corresponds to a different instance
        # with 0 being background
        mask = Image.open(mask_path)
        # convert the PIL Image into a numpy array
        mask = np.array(mask)
        # instances are encoded as different colors
        obj_ids = np.unique(mask)
        # first id is the background, so remove it
        obj_ids = obj_ids[1:]

        # split the color-encoded mask into a set of binary masks.
        # How this line works, using FudanPed00001 (two pedestrians) as an example:
        # FudanPed00001_mask uses pixel value 0 for background, 1 for object 1,
        # 2 for object 2, and so on. So mask is a 559*536 2-D array in which every
        # pixel of object 1 is 1 and every pixel of object 2 is 2. np.unique gives
        # [0, 1, 2], and "obj_ids = obj_ids[1:]" has already dropped the 0, so obj_ids = [1, 2].
        # Broadcasting then builds masks (2*559*536): two 559*536 masks, one per object.
        # In the first mask, the pixels of object 1 are True and all others False;
        # in the second, the pixels of object 2 are True and all others False.
        # A really slick line of code!
        masks = mask == obj_ids[:, None, None]

        # get bounding box coordinates for each mask
        num_objs = len(obj_ids)
        boxes = []
        for i in range(num_objs):
            pos = np.where(masks[i])
            # pos[0] holds row indices, i.e. y coordinates
            # pos[1] holds column indices, i.e. x coordinates
            xmin = np.min(pos[1])
            xmax = np.max(pos[1])
            ymin = np.min(pos[0])
            ymax = np.max(pos[0])
            boxes.append([xmin, ymin, xmax, ymax])

        # convert everything into a torch.Tensor
        boxes = torch.as_tensor(boxes, dtype=torch.float32)
        # there is only one class
        labels = torch.ones((num_objs,), dtype=torch.int64)
        masks = torch.as_tensor(masks, dtype=torch.uint8)

        image_id = torch.tensor([idx])
        area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])
        # assume no instance is a crowd region
        iscrowd = torch.zeros((num_objs,), dtype=torch.int64)

        target = {}
        target["boxes"] = boxes
        target["labels"] = labels
        target["masks"] = masks
        target["image_id"] = image_id
        target["area"] = area
        target["iscrowd"] = iscrowd

        if self.transforms is not None:
            img, target = self.transforms(img, target)

        return img, target

    def __len__(self):
        return len(self.imgs)
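The mask-to-target steps in `__getitem__` can be checked on a tiny array without downloading anything. The following NumPy sketch runs the same three steps on a made-up 4×5 mask with two instances (the values are illustrative, not from PennFudan): the broadcasting comparison, the `np.where` box extraction, and the area formula.

```python
import numpy as np

# hypothetical 4x5 mask with two instances (ids 1 and 2), 0 = background
mask = np.array([
    [0, 1, 1, 0, 0],
    [0, 1, 1, 0, 2],
    [0, 0, 0, 0, 2],
    [0, 0, 0, 2, 2],
], dtype=np.uint8)

obj_ids = np.unique(mask)[1:]  # drop the background id -> [1, 2]

# broadcasting: (H, W) == (N, 1, 1) -> (N, H, W) boolean stack, one mask per instance
masks = mask == obj_ids[:, None, None]

boxes = []
for m in masks:
    rows, cols = np.where(m)  # rows are y coordinates, cols are x coordinates
    boxes.append([cols.min(), rows.min(), cols.max(), rows.max()])
boxes = np.array(boxes, dtype=np.float32)

# same area formula as the dataset class: (ymax - ymin) * (xmax - xmin)
area = (boxes[:, 3] - boxes[:, 1]) * (boxes[:, 2] - boxes[:, 0])

print(masks.shape)     # (2, 4, 5)
print(boxes.tolist())  # [[1.0, 0.0, 2.0, 1.0], [3.0, 1.0, 4.0, 3.0]]
print(area.tolist())   # [1.0, 2.0]
```

Note that the first instance covers 4 pixels, but its box area comes out as 1. This is because xmax and ymax are the indices of the last pixel rather than one past it. The dataset class computes `area` the same way.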

Building the model

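Mask R-CNN is built on top of Faster R-CNN. Faster R-CNN predicts bounding boxes and class scores for the potential objects in an image. Mask R-CNN adds an extra branch that predicts a segmentation mask for each instance.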

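There are two ways to modify the models in the torchvision model zoo for your own purpose:
- Start from a pretrained model, replace the last layer, and finetune.
- Replace the model's backbone as needed, for example swapping ResNet for MobileNet.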

1. Finetuning from a pretrained model

# If you want to start from a model pre-trained on COCO and finetune it for your particular classes,
# here is a possible way of doing it:
import torchvision
from torchvision.models.detection.faster_rcnn import FastRCNNPredictor

# load a model pre-trained on COCO
# (torchvision >= 0.13 prefers weights="DEFAULT" over the deprecated pretrained=True)
model = torchvision.models.detection.fasterrcnn_resnet50_fpn(pretrained=True)

# replace the classifier with a new one that has
# num_classes, which is user-defined
num_classes = 2  # 1 class (person) + background
# get the number of input features for the classifier
in_features = model.roi_heads.box_predictor.cls_score.in_features
# replace the pre-trained head with a new one
model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)
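The head has to be replaced rather than reused because the output sizes of `FastRCNNPredictor` depend on `num_classes`. As I understand torchvision's implementation, it holds two Linear layers:
- `cls_score`, with `num_classes` outputs.
- `bbox_pred`, with `num_classes * 4` outputs.

The pure-Python sketch below only computes those weight shapes and parameter counts. It assumes `in_features = 1024` (the box-head width of `fasterrcnn_resnet50_fpn`) and 91 COCO classes for the pretrained head. The helper names are hypothetical.

```python
def fastrcnn_predictor_shapes(in_features, num_classes):
    """Weight shapes (out, in) of a FastRCNNPredictor-style head made of two Linear layers."""
    return {
        # one score per class, background included
        "cls_score": (num_classes, in_features),
        # one 4-number box regression per class
        "bbox_pred": (num_classes * 4, in_features),
    }


def num_params(shapes):
    # weights plus one bias per output unit
    return sum(out * inp + out for out, inp in shapes.values())


coco = fastrcnn_predictor_shapes(1024, 91)  # head shipped with the COCO checkpoint (assumed 91 classes)
ours = fastrcnn_predictor_shapes(1024, 2)   # background + person
print(coco["cls_score"], ours["cls_score"])  # (91, 1024) (2, 1024)
print(num_params(coco), num_params(ours))    # 466375 10250
```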

2. Modifying the model to add a different backbone

# If you want to replace the backbone of the model with a different one.
# For example, the default backbone (ResNet-50) may have too many parameters to deploy easily
# in some applications, so you might replace it with a lighter network such as MobileNet.
# Here is a possible way of doing it:
import torchvision
from torchvision.models.detection import FasterRCNN
from torchvision.models.detection.rpn import AnchorGenerator

# load a pre-trained model for classification and return only the features
backbone = torchvision.models.mobilenet_v2(pretrained=True).features
# FasterRCNN needs to know the number of output channels in a backbone.
# For mobilenet_v2, it's 1280, so we need to add it here
backbone.out_channels = 1280

# let's make the RPN generate 5 x 3 anchors per spatial
# location, with 5 different sizes and 3 different aspect
# ratios. We have a Tuple[Tuple[int]] because each feature
# map could potentially have different sizes and
# aspect ratios
anchor_generator = AnchorGenerator(sizes=((32, 64, 128, 256, 512),),
                                   aspect_ratios=((0.5, 1.0, 2.0),))

# let's define what are the feature maps that we will
# use to perform the region of interest cropping, as well as
# the size of the crop after rescaling.
# if your backbone returns a Tensor, featmap_names is expected to
# be [0] (newer torchvision releases use the string key ['0']).
# More generally, the backbone should return an OrderedDict[Tensor],
# and in featmap_names you can choose which feature maps to use.
roi_pooler = torchvision.ops.MultiScaleRoIAlign(featmap_names=[0],
                                                output_size=7,
                                                sampling_ratio=2)

# put the pieces together inside a FasterRCNN model
model = FasterRCNN(backbone,
                   num_classes=2,
                   rpn_anchor_generator=anchor_generator,
                   box_roi_pool=roi_pooler)
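The 5 × 3 = 15 anchors per location come from combining every size with every aspect ratio. As far as I know, torchvision's `AnchorGenerator` treats the aspect ratio as height / width. It keeps each anchor's area at about size² by scaling the height by sqrt(ratio) and the width by 1/sqrt(ratio). Below is a pure-Python sketch of that calculation. The function name is hypothetical, and torchvision additionally rounds the resulting corner coordinates.

```python
import math


def base_anchor_shapes(sizes, aspect_ratios):
    """(width, height) of each base anchor, taking aspect ratio as height / width."""
    shapes = []
    for ratio in aspect_ratios:
        h_ratio = math.sqrt(ratio)
        w_ratio = 1.0 / h_ratio
        for size in sizes:
            # w * h == size ** 2 and h / w == ratio
            shapes.append((w_ratio * size, h_ratio * size))
    return shapes


anchors = base_anchor_shapes((32, 64, 128, 256, 512), (0.5, 1.0, 2.0))
print(len(anchors))  # 15
print(anchors[0])    # 32-pixel anchor with ratio 0.5: wider than tall
```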

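The goal of this post is to train a Mask R-CNN instance segmentation model on the PennFudan dataset, i.e. the first case above. torchvision.models.detection provides the official network definitions and interfaces, which can be used directly.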

import torchvision
from torchvision.models.detection.faster_rcnn import FastRCNNPredictor
from torchvision.models.detection.mask_rcnn import MaskRCNNPredictor


def get_model_instance_segmentation(num_classes):
    # load an instance segmentation model pre-trained on COCO
    model = torchvision.models.detection.maskrcnn_resnet50_fpn(pretrained=True)

    # get number of input features for the classifier
    in_features = model.roi_heads.box_predictor.cls_score.in_features
    # replace the pre-trained head with a new one
    model.roi_heads.box_predictor = FastRCNNPredictor(in_features, num_classes)

    # now get the number of input features for the mask classifier
    in_features_mask = model.roi_heads.mask_predictor.conv5_mask.in_channels
    hidden_layer = 256
    # for PennFudan, num_classes = 2: 1 class (person) + background
    # and replace the mask predictor with a new one
    model.roi_heads.mask_predictor = MaskRCNNPredictor(in_features_mask,
                                                       hidden_layer,
                                                       num_classes)
    return model
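This is what the replacement mask predictor computes, shape-wise, as I read torchvision's `MaskRCNNPredictor`:
- `conv5_mask` is a 2×2 transposed convolution with stride 2 that maps `in_features_mask` channels to `hidden_layer` channels.
- A ReLU follows.
- A 1×1 convolution (`mask_fcn_logits`) then produces one mask logit map per class.

The sketch below assumes 14×14 mask RoI features (which I believe is torchvision's default mask RoIAlign size) and 256 input channels. Under those assumptions, the standard transposed-convolution size formula gives 28×28 masks. `conv_transpose_out` is a hypothetical helper.

```python
def conv_transpose_out(size, kernel, stride, padding=0):
    """Output length of a transposed convolution along one axis (dilation 1, no output_padding)."""
    return (size - 1) * stride - 2 * padding + kernel


roi_size = 14          # assumed mask RoIAlign output size
in_features_mask = 256 # assumed channel count entering conv5_mask
hidden_layer = 256     # as in get_model_instance_segmentation
num_classes = 2        # background + person

side = conv_transpose_out(roi_size, kernel=2, stride=2)  # conv5_mask doubles 14 -> 28
after_conv5 = (hidden_layer, side, side)
# mask_fcn_logits is a 1x1 convolution: it changes channels but not spatial size
logits = (num_classes, side, side)
print(after_conv5, logits)  # (256, 28, 28) (2, 28, 28)
```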

"""

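PyTorch's official references/detection/ directory contains ready-made helper functions for training and evaluating models.
Of these, we need references/detection/engine.py, references/detection/utils.py and references/detection/transforms.py.
engine.py in turn imports coco_eval.py and coco_utils.py, so those two are copied as well. coco_eval.py is where pycocotools is required.
First, copy these files over. This step also took me a long time, because the official tutorial does not explain it clearly.
It turns out that the pytorch/vision repository on GitHub contains utils.py, transforms.py and engine.py.

# Download TorchVision repo to use some files from references/detection
>>git clone https://github.com/pytorch/vision.git
>>cd vision
>>git checkout v0.4.0
>>cp references/detection/utils.py ../
>>cp references/detection/transforms.py ../
>>cp references/detection/coco_eval.py ../
>>cp references/detection/engine.py ../
>>cp references/detection/coco_utils.py ../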

"""

3. Data augmentation / transforms

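# transforms is the references/detection/transforms.py copied above, not torchvision.transforms:
# its transforms take (image, target) pairs so that boxes and masks are transformed together
import transforms as T


def get_transform(train):
    transforms = []
    # convert the PIL image into a tensor
    transforms.append(T.ToTensor())
    if train:
        # during training, flip images (and their targets) horizontally with probability 0.5
        transforms.append(T.RandomHorizontalFlip(0.5))
    return T.Compose(transforms)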
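In a detection pipeline, `RandomHorizontalFlip` cannot flip only the image: the boxes and masks must be flipped with it. This is why the dataset class calls `self.transforms(img, target)`. As far as I can tell, the reference flip mirrors the box x-coordinates with `width - x` and swaps xmin and xmax. Below is a NumPy sketch of the same idea. `hflip` and `random_hflip` are hypothetical names, and the real transform operates on tensors and on the target dict.

```python
import numpy as np


def hflip(image, boxes, masks):
    """Flip a (C, H, W) image, its (N, 4) xyxy boxes and (N, H, W) masks left-right."""
    width = image.shape[-1]
    image = image[..., ::-1].copy()
    masks = masks[..., ::-1].copy()
    boxes = boxes.copy()
    # the new xmin comes from the old xmax and vice versa, mirrored about the image width
    boxes[:, [0, 2]] = width - boxes[:, [2, 0]]
    return image, boxes, masks


def random_hflip(image, boxes, masks, prob, rng):
    # apply the flip with probability `prob`, like RandomHorizontalFlip(0.5)
    if rng.random() < prob:
        return hflip(image, boxes, masks)
    return image, boxes, masks


image = np.zeros((3, 4, 10), dtype=np.float32)
image[:, :, 0] = 1.0  # mark the leftmost column
masks = np.zeros((1, 4, 10), dtype=bool)
boxes = np.array([[2.0, 1.0, 5.0, 3.0]])
flipped_image, flipped_boxes, flipped_masks = hflip(image, boxes, masks)
print(flipped_boxes.tolist())  # [[5.0, 1.0, 8.0, 3.0]]
```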

4. Training (I did not manage to get training to work)

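The dataset, model and data augmentation parts are now written. Once the model is initialized and the optimizer and learning-rate schedule are chosen, training can start.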

Here the model is trained for 10 epochs, and after each epoch its performance is evaluated on the test set.
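The training code sets up a `StepLR` scheduler. `StepLR` multiplies the learning rate by `gamma` once every `step_size` epochs, provided `lr_scheduler.step()` is called once per epoch. With base lr 0.005 and `step_size=3` over 10 epochs, the rate therefore decays three times, at 0-based epochs 3, 6 and 9. The value of `gamma` is cut off in this copy of the code, so the sketch below assumes `gamma=0.1` purely for illustration. `step_lr` is a hypothetical helper, not a PyTorch function.

```python
def step_lr(base_lr, epoch, step_size, gamma):
    """Learning rate during `epoch` (0-based) under a StepLR-style schedule."""
    return base_lr * gamma ** (epoch // step_size)


# base_lr and step_size as in the training code; gamma=0.1 is an assumed value
for epoch in range(10):
    print(epoch, step_lr(0.005, epoch, step_size=3, gamma=0.1))
```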

from engine import train_one_epoch, evaluate
import utils


def main():
    # train on the GPU or on the CPU, if a GPU is not available
    device = torch.device('cuda') if torch.cuda.is_available() else torch.device('cpu')

    # our dataset has two classes only - background and person
    num_classes = 2
    # use our dataset and defined transformations
    dataset = PennFudanDataset('PennFudanPed', get_transform(train=True))
    dataset_test = PennFudanDataset('PennFudanPed', get_transform(train=False))

    # split the dataset into train and test sets: the last 50 shuffled images are for testing
    indices = torch.randperm(len(dataset)).tolist()
    dataset = torch.utils.data.Subset(dataset, indices[:-50])
    dataset_test = torch.utils.data.Subset(dataset_test, indices[-50:])

    # define training and validation data loaders
    # (on Windows, num_workers > 0 requires main() to be called under if __name__ == '__main__':)
    data_loader = torch.utils.data.DataLoader(
        dataset, batch_size=2, shuffle=True, num_workers=4,
        collate_fn=utils.collate_fn)

    data_loader_test = torch.utils.data.DataLoader(
        dataset_test, batch_size=1, shuffle=False, num_workers=4,
        collate_fn=utils.collate_fn)

    # get the model using our helper function
    model = get_model_instance_segmentation(num_classes)
    # move model to the right device
    model.to(device)

    # construct an optimizer
    params = [p for p in model.parameters() if p.requires_grad]
    optimizer = torch.optim.SGD(params, lr=0.005,
                                momentum=0.9, weight_decay=0.0005)
    # and a learning rate scheduler
    lr_scheduler = torch.optim.lr_scheduler.StepLR(optimizer,
                                                   step_size=3,
                                                   gamma=