[Code Skim] Simultaneously Self-Attending to All Mentions for Full-Abstract Biological Relation Extraction

Code: https://github.com/patverga/bran

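I downloaded the code for this paper today and was honestly taken aback: the project is large, there are so many script files that it is hard to keep track of them, and the code is written in Python 2, which does not match my Python 3 environment.

First, a record of the pitfalls I hit while getting it to run.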

Setup Environment Variables

From this directory call: source set_environment.sh

Note: this will only set the paths for this session.

    #!/usr/bin/env bash
    export PYTHONPATH=$PYTHONPATH:`pwd`
    export CDR_IE_ROOT=`pwd`

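The script mainly uses `pwd` to get the path of the current directory and stores it in the shell variable CDR_IE_ROOT (the same path is also appended to PYTHONPATH).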
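On the Python side, a script can read the variable back from the environment. A minimal sketch, assuming a hypothetical helper `get_cdr_root` (not part of the repository) that falls back to the current working directory when the variable is not set:

```python
import os


def get_cdr_root(environ=None):
    """Return CDR_IE_ROOT if it is set, else the current working directory.

    Hypothetical helper for illustration only; the repository itself relies
    on `source set_environment.sh` having been run in the current shell.
    """
    environ = os.environ if environ is None else environ
    return environ.get("CDR_IE_ROOT") or os.getcwd()


# Example with an explicit mapping instead of the real environment.
root = get_cdr_root({"CDR_IE_ROOT": "/tmp/bran-master"})
print(os.path.join(root, "data", "cdr"))
```

Because the variable only lives in the current shell session, a fallback like this avoids a hard failure when the script is started from an IDE instead.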

Processing Data

CDR

Process the CDR dataset:

    ${CDR_IE_ROOT}/bin/process_CDR/process_CDR.sh

Process the CDR dataset including additional weakly labeled data:

    ${CDR_IE_ROOT}/bin/process_CDR/process_CDR_extra_data.sh

These scripts will use byte-pair encoding (BPE) tokenization. There are also scripts to tokenize using the Genia tokenizer.

    #!/usr/bin/env bash

    #CDR_IE_ROOT = 'D:/python/wgy_jupyter/bran-master'
    word_piece_vocab=data/cdr/word_piece_vocabs/just_train_2500/word_pieces.txt
    input_dir=${CDR_IE_ROOT}/data/cdr
    processed_dir=${input_dir}/processed/just_train_2500
    proto_dir=${processed_dir}/protos
    max_len=500000
    # replace infrequent tokens with <UNK>
    min_count=5

    # process train, dev, and test data
    mkdir -p ${processed_dir}
    echo "Processing Training data"
    python ${CDR_IE_ROOT}/src/processing/utils/process_CDR_data.py \
        --input_file ${input_dir}/CDR_TrainingSet.PubTator.txt.gz \
        --output_dir ${processed_dir} --output_file_suffix CDR_train.txt \
        --max_seq ${max_len} \
        --full_abstract True --word_piece_codes ${word_piece_vocab}

    for f in CDR_dev CDR_train CDR_test; do
        python ${CDR_IE_ROOT}/src/processing/utils/filter_hypernyms.py \
            -p ${processed_dir}/positive_0_${f}.txt \
            -n ${processed_dir}/negative_0_${f}.txt \
            -m data/2017MeshTree.txt \
            -o ${processed_dir}/negative_0_${f}_filtered.txt
    done

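Taking the training set as an example: following the commands in the sh script, we load CDR_TrainingSet.PubTator.txt.gz and tokenize it with BPE, which yields the named-entity data for the training set plus the positive samples and the negative samples (filtered and unfiltered).

word_pieces.txt holds the learned subword merges: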

    #version: 0.2
    i n
    t i
    r e
    t h
    a n
    e n
    e r
    e d</w>
    o n
    o f</w>
    a t
    th e</w>
    r o
    i n</w>
    a ti
    i t
    an d</w>
    a r
    o n</w>
    s t
    s i
    a l
    a l</w>
    o r
    i c
    a s
    e c
    d i
    a c
    en t
    l o
    in g</w>
    t o</w>
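Each entry after the version header is one merge rule, listed in priority order. A rough sketch of how such rules could be applied to a single word, following the usual BPE procedure rather than the repository's own tokenizer; `apply_bpe` and the three-rule subset are illustrative assumptions:

```python
def apply_bpe(word, merges):
    """Greedily apply BPE merge rules (earlier rules have higher priority)."""
    if not word:
        return []
    rank = {pair: i for i, pair in enumerate(merges)}
    # Start from single characters; mark the last one as word-final.
    symbols = list(word[:-1]) + [word[-1] + "</w>"]
    while len(symbols) > 1:
        pairs = [(symbols[i], symbols[i + 1]) for i in range(len(symbols) - 1)]
        best = min(pairs, key=lambda p: rank.get(p, float("inf")))
        if best not in rank:
            break  # no applicable rule left
        merged, i = [], 0
        while i < len(symbols):
            if i + 1 < len(symbols) and (symbols[i], symbols[i + 1]) == best:
                merged.append(symbols[i] + symbols[i + 1])
                i += 2
            else:
                merged.append(symbols[i])
                i += 1
        symbols = merged
    return symbols


# Only three of the rules from the word-piece file: "t h", "th e</w>", "e d</w>".
merges = [("t", "h"), ("th", "e</w>"), ("e", "d</w>")]
print(apply_bpe("the", merges))
print(apply_bpe("red", merges))
```

With the full rule list the segmentation of a given word can differ, since every rule in the file takes part in the ranking.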

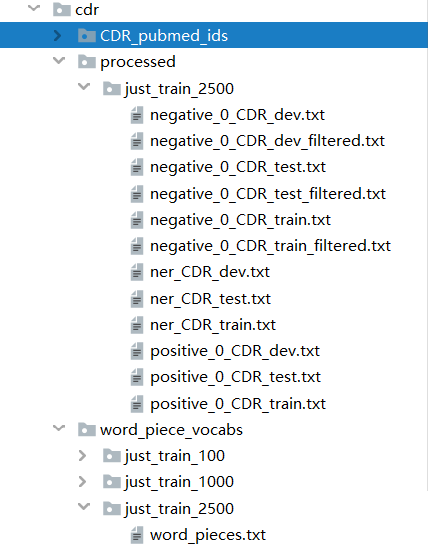

生成的数据集文件目录:

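Along the way, a Python 2 vs. Python 3 difference produced this error: TypeError: a bytes-like object is required, not 'str'

The root cause is that Python 3.5 and Python 2.7 differ in how data read from a socket (or, as here, from a file opened in binary mode) is decoded: Python 3 hands back bytes, not str.

In Python 3, bytes and str can be converted into each other with encode() and decode():

str→bytes: encode(). Calling encode() on a str produces bytes.

bytes→str: decode(). Anything read as a byte stream from the network or from disk arrives as bytes; to turn it into str, call decode().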
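A quick round trip shows the two conversions; the sample string is arbitrary:

```python
text = "chemical-induced disease"
raw = text.encode("utf-8")      # str -> bytes
restored = raw.decode("utf-8")  # bytes -> str

print(type(raw).__name__, type(restored).__name__)
print(restored == text)
```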

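Fix:

Change line.strip().split(",") to line.decode().strip().split(",") [At first glance this looks like the exact opposite of what the error message says!] It is consistent, though: line is a bytes object, so bytes.split() expects a bytes separator, and the str "," is the argument being rejected. Decoding line to str first makes both sides str.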
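The error and the fix can be reproduced without the dataset. The sketch below builds a tiny gzip payload in memory as a stand-in for the .txt.gz file; the line content is made-up dummy data, not the real file format:

```python
import gzip
import io

# Build a tiny gzip payload in memory (stand-in for the .txt.gz data file).
buffer = io.BytesIO()
with gzip.open(buffer, "wb") as f:
    f.write(b"D000001,D000002,CID\n")  # dummy identifiers
buffer.seek(0)

with gzip.open(buffer, "rb") as f:
    line = f.readline()  # bytes in Python 3

try:
    line.strip().split(",")  # str separator on a bytes object
except TypeError as err:
    error_message = str(err)

fields = line.decode("utf-8").strip().split(",")  # the fix: decode first
print(error_message)
print(fields)
```

An alternative with the same effect would be to open the file in text mode (`gzip.open(path, "rt")`), so that every line is already a str.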

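I also could not work out why, for a module in the same directory, the statement

    from word_piece_tokenizer import WordPieceTokenizer

is flagged with a red underline. It does not affect execution, but PyCharm cannot ctrl+click through to the definition. By contrast,

    from .word_piece_tokenizer import WordPieceTokenizer

is not flagged and PyCharm can ctrl+click through to it, but the code no longer runs. A likely explanation: the scripts are launched directly as files, so their own directory is on sys.path and the plain import resolves at run time, whereas a relative import only works when the module is loaded as part of a package (for example with python -m).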

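The code is written in TensorFlow 1.x, so I did not follow all of it. I only located the parts that correspond to the model in the paper to see how the authors implemented them: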

2.1 Inputs

    def get_token_embeddings(self, token_embeddings, position_embeddings, token_attention=None):
        selected_words = tf.nn.embedding_lookup(token_embeddings, self.text_batch)
        if self.project_inputs:
            params = {"inputs": selected_words, "filters": self.embed_dim, "kernel_size": 1,
                      "activation": tf.nn.relu, "use_bias": True}
            selected_words = tf.layers.conv1d(**params)
        if self.position_dim > 0:
            selected_e1_dists = tf.nn.embedding_lookup(position_embeddings, self.e1_dist_batch)
            selected_e2_dists = tf.nn.embedding_lookup(position_embeddings, self.e2_dist_batch)
            token_embeds = tf.concat(axis=2, values=[selected_words, selected_e1_dists, selected_e2_dists])
        else:
            token_embeds = selected_words

        if self.encode_position:
            pad_mask = tf.expand_dims(tf.cast(tf.not_equal(self.text_batch, self.pad_idx), tf.float32), [2])
            pos_encoding = pad_mask * tf.nn.embedding_lookup(self.pos_encoding, self.pos_encode_batch)
            token_embeds = tf.add(token_embeds, pos_encoding)

        dropped_embeddings = tf.nn.dropout(token_embeds, self.word_dropout_keep)
        # keep pad tokens as 0 vectors
        if self.filter_pad:
            print('Filtering pad tokens')
            dropped_embeddings = tf.multiply(dropped_embeddings,
                                             tf.expand_dims(tf.cast(tf.not_equal(self.text_batch, self.pad_idx),
                                                                    tf.float32), [2]))

        return dropped_embeddings
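The last step of the method zeroes out the embeddings of pad tokens by multiplying with a 0/1 mask. A framework-free sketch of that one step for a single sequence; `mask_pad_embeddings` and the toy values are assumptions for illustration, not code from the repository:

```python
def mask_pad_embeddings(token_ids, embeddings, pad_idx=0):
    """Zero out the embedding vector of every pad position in one sequence."""
    return [
        [x * float(tok != pad_idx) for x in vec]
        for tok, vec in zip(token_ids, embeddings)
    ]


token_ids = [5, 9, 0, 0]  # two real tokens followed by two pads (pad_idx=0)
embeddings = [[0.1, 0.2], [0.3, 0.4], [0.5, 0.6], [0.7, 0.8]]
masked = mask_pad_embeddings(token_ids, embeddings)
print(masked)
```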

2.2 Transformer

    def forward(self, input_feats, middle_dropout_keep_prob, hidden_dropout_keep_prob, batch_size, max_seq_len,
                reuse, block_num=0):
        initial_in_dim = self.embed_dim if self.project_inputs else self.token_dim+(2*self.position_dim)
        input_feats *= (initial_in_dim ** 0.5)  # scale by the square root of the input dimension
        self.attention_weights = []
        for i in range(self.block_repeats):  # B transformer blocks
            with tf.variable_scope("num_blocks_{}".format(i)):
                ### Multihead Attention
                in_dim = self.embed_dim if i > 0 else initial_in_dim
                input_feats = self.multihead_attention(queries=input_feats,
                                                       keys=input_feats,
                                                       num_units=in_dim,
                                                       num_heads=self.num_heads,
                                                       dropout_rate=middle_dropout_keep_prob,
                                                       causality=False,
                                                       reuse=reuse)

                ### Feed Forward
                input_feats = self.feedforward(input_feats, num_units=[in_dim*self.ff_scale, in_dim], reuse=reuse)
        return input_feats

2.2.1 Multi-head Attention

    def multihead_attention(self, queries,
                            keys,
                            num_units=None,
                            num_heads=8,
                            dropout_rate=0,
                            is_training=True,
                            causality=False,
                            scope="multihead_attention",
                            reuse=None):
        '''
        June 2017 by kyubyong park.
        kbpark.linguist@gmail.com.
        https://www.github.com/kyubyong/transformer
        '''
        '''Applies multihead attention.

        Args:
          queries: A 3d tensor with shape of [N, T_q, C_q].
          keys: A 3d tensor with shape of [N, T_k, C_k].
          num_units: A scalar. Attention size.
          dropout_rate: A floating point number.
          is_training: Boolean. Controller of mechanism for dropout.
          causality: Boolean. If true, units that reference the future are masked.
          num_heads: An int. Number of heads.
          scope: Optional scope for `variable_scope`.
          reuse: Boolean, whether to reuse the weights of a previous layer
            by the same name.

        Returns
          A 3d tensor with shape of (N, T_q, C)
        '''
        with tf.variable_scope(scope, reuse=reuse):
            # Set the fall back option for num_units
            if num_units is None:
                # as_list is a method, so it has to be called before indexing
                num_units = queries.get_shape().as_list()[-1]

            # Linear projections
            Q = tf.layers.dense(queries, num_units, activation=tf.nn.relu)  # (N, T_q, C)
            K = tf.layers.dense(keys, num_units, activation=tf.nn.relu)  # (N, T_k, C)
            V = tf.layers.dense(keys, num_units, activation=tf.nn.relu)  # (N, T_k, C)

            # Split and concat
            Q_ = tf.concat(tf.split(Q, num_heads, axis=2), axis=0)  # (h*N, T_q, C/h)
            K_ = tf.concat(tf.split(K, num_heads, axis=2), axis=0)  # (h*N, T_k, C/h)
            V_ = tf.concat(tf.split(V, num_heads, axis=2), axis=0)  # (h*N, T_k, C/h)

            # Multiplication
            outputs = tf.matmul(Q_, tf.transpose(K_, [0, 2, 1]))  # (h*N, T_q, T_k)

            # Scale
            outputs = outputs / (K_.get_shape().as_list()[-1] ** 0.5)

            # Key Masking
            key_masks = tf.sign(tf.abs(tf.reduce_sum(keys, axis=-1)))  # (N, T_k)
            key_masks = tf.tile(key_masks, [num_heads, 1])  # (h*N, T_k)
            key_masks = tf.tile(tf.expand_dims(key_masks, 1), [1, tf.shape(queries)[1], 1])  # (h*N, T_q, T_k)

            paddings = tf.ones_like(outputs)*(-1e8)
            outputs = tf.where(tf.equal(key_masks, 0), paddings, outputs)  # (h*N, T_q, T_k)

            # Activation
            attention_weights = tf.nn.softmax(outputs)  # (h*N, T_q, T_k)
            # store the attention weights for analysis
            batch_size = tf.shape(queries)[0]
            seq_len = tf.shape(queries)[1]
            save_attention = tf.reshape(attention_weights, [self.num_heads, batch_size, seq_len, seq_len])
            save_attention = tf.transpose(save_attention, [1, 0, 2, 3])
            self.attention_weights.append(save_attention)

            # Query Masking
            query_masks = tf.sign(tf.abs(tf.reduce_sum(queries, axis=-1)))  # (N, T_q)
            query_masks = tf.tile(query_masks, [num_heads, 1])  # (h*N, T_q)
            query_masks = tf.tile(tf.expand_dims(query_masks, -1), [1, 1, tf.shape(keys)[1]])  # (h*N, T_q, T_k)
            outputs = attention_weights * query_masks  # broadcasting. (N, T_q, C)

            # Dropouts
            outputs = tf.nn.dropout(outputs, dropout_rate)

            # Weighted sum
            outputs = tf.matmul(outputs, V_)  # ( h*N, T_q, C/h)

            # Restore shape
            outputs = tf.concat(tf.split(outputs, num_heads, axis=0), axis=-1)  # (N, T_q, C)

            # Residual connection
            outputs += tf.nn.dropout(queries, dropout_rate)

            # Normalize
            outputs = self.normalize(outputs)  # (N, T_q, C)

        return outputs
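To make the core of the method easier to follow, here is a stripped-down, single-head version in plain Python: scaled dot products, key masking with a large negative score, softmax, and the weighted sum of values. It deliberately leaves out the ReLU projections, the head split, query masking, dropout, the residual connection and normalization; `masked_attention` and the toy inputs are assumptions for illustration:

```python
import math


def masked_attention(queries, keys, values, key_is_pad):
    """Single-head scaled dot-product attention with key masking."""
    d = len(keys[0])
    outputs = []
    for q in queries:
        # Scaled dot products; padded keys get a large negative score.
        scores = [
            -1e8 if pad else sum(a * b for a, b in zip(q, k)) / math.sqrt(d)
            for k, pad in zip(keys, key_is_pad)
        ]
        # Numerically stable softmax over the key axis.
        top = max(scores)
        exps = [math.exp(s - top) for s in scores]
        total = sum(exps)
        weights = [e / total for e in exps]
        # Weighted sum of the value vectors.
        outputs.append([
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ])
    return outputs


tokens = [[1.0, 0.0], [0.0, 1.0], [0.0, 0.0]]   # last position is padding
values = [[1.0, 0.0], [0.0, 1.0], [9.0, 9.0]]   # the pad value must never leak
attended = masked_attention(tokens, tokens, values, [False, False, True])
print(attended)
```

Since the padded key receives a weight of zero, the value 9.0 does not contribute to any output row.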

2.2.2 Convolutions

    def feedforward(self, inputs,
                    num_units=[2048, 512],
                    scope="multihead_attention",
                    reuse=None):

        '''Point-wise feed forward net.

        Args:
          inputs: A 3d tensor with shape of [N, T, C].
          num_units: A list of two integers.
          scope: Optional scope for `variable_scope`.
          reuse: Boolean, whether to reuse the weights of a previous layer
            by the same name.

        Returns:
          A 3d tensor with the same shape and dtype as inputs
        '''
        with tf.variable_scope(scope, reuse=reuse):
            outputs = inputs
            # layer_str is a comma-separated list of "width:dilation" entries
            layer_params = self.layer_str.split(',')
            for i, l_params in enumerate(layer_params):
                width, dilation = [int(x) for x in l_params.split(':')]
                dim = num_units[1] if i == (len(layer_params)-1) else num_units[0]

                print('dimension: %d  width: %d  dilation: %d' % (dim, width, dilation))
                params = {"inputs": outputs, "filters": dim, "kernel_size": width,
                          "activation": tf.nn.relu, "use_bias": True, "padding": "same", "dilation_rate": dilation}
                outputs = tf.layers.conv1d(**params)
            # mask padding
            outputs *= tf.expand_dims(tf.cast(tf.not_equal(self.text_batch, self.pad_idx), tf.float32), [2])
            # Residual connection
            # NOTE: as transcribed here, the sum is stored in `inputs`, which is not used afterwards
            inputs += outputs

            # Normalize
            outputs = self.normalize(outputs)

        return outputs
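The convolution stack is driven by `self.layer_str`, which the loop parses as comma-separated `width:dilation` entries. A small sketch of that parsing and of the per-layer output dimension rule; the helper names and the example string are hypothetical and not taken from the repository's configuration:

```python
def parse_layer_str(layer_str):
    """Parse 'width:dilation,width:dilation,...' into (width, dilation) pairs."""
    return [tuple(int(x) for x in spec.split(":")) for spec in layer_str.split(",")]


def layer_dims(layer_str, num_units):
    """Output dimension per layer: num_units[0], except num_units[1] for the last."""
    layers = parse_layer_str(layer_str)
    last = len(layers) - 1
    return [num_units[1] if i == last else num_units[0] for i in range(len(layers))]


example = "1:1,5:1,1:1"  # hypothetical value for illustration
print(parse_layer_str(example))
print(layer_dims(example, [2048, 512]))
```

So every layer except the last widens to `num_units[0]`, and the last one projects back to `num_units[1]`.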

2.3 Bi-affine Pairwise Scores

    def aggregate_tokens(self, encoded_tokens, batch_size, max_seq_len,
                         attention_vector, e1_dist_batch, e2_dist_batch, seq_lens,
                         middle_dropout_keep_prob, hidden_dropout_keep_prob, final_dropout_keep,
                         scope_name='text', reuse=False, aggregation='attention'):

        reduction = tf.reduce_logsumexp
        # # aggregation='attention'
        with tf.variable_scope(scope_name, reuse=reuse):
            input_feats = encoded_tokens

            e1_mask = tf.cast(tf.expand_dims(tf.equal(self.e1_dist_batch, self.entity_index), 2), tf.float32)
            e2_mask = tf.cast(tf.expand_dims(tf.equal(self.e2_dist_batch, self.entity_index), 2), tf.float32)

            # # b x s x (d*l)
            e1 = tf.layers.dense(tf.layers.dense(input_feats, self.embed_dim, activation=tf.nn.relu), self.embed_dim)
            e2 = tf.layers.dense(tf.layers.dense(input_feats, self.embed_dim, activation=tf.nn.relu), self.embed_dim)

            e1 = tf.nn.dropout(e1, final_dropout_keep)
            e2 = tf.nn.dropout(e2, final_dropout_keep)

            # result = self.diagonal_bilinear(e1, e2, num_labels)
            pairwise_scores = self.bilinear(e1, e2, self.num_labels)
            # self.attention_weights = tf.split(self.bilinear_scores, self.num_labels, 2)[1]
            self.pairwise_scores = tf.nn.softmax(pairwise_scores, dim=2)
            result = tf.transpose(pairwise_scores, [0, 1, 3, 2])
            # mask result
            result += tf.expand_dims(self.ep_dist_batch, 3)
            outputs = reduction(result, [1, 2])
            print(outputs.get_shape())

        return outputs
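The final two steps add a mask to the pairwise scores and pool over both token axes with log-sum-exp. A plain-Python sketch of that pooling for a single relation label, assuming the additive mask holds 0 for valid mention pairs and a large negative value elsewhere (the "# mask result" comment suggests this, but it is not verified here); the function names and numbers are illustrative:

```python
import math


def logsumexp(values):
    """Numerically stable log(sum(exp(v))) over a list of floats."""
    top = max(values)
    if top == float("-inf"):
        return top
    return top + math.log(sum(math.exp(v - top) for v in values))


def entity_level_score(pair_scores, pair_mask):
    """Pool token-pair scores for one label into one entity-pair score.

    pair_mask[i][j] is assumed to be 0.0 for a valid (e1 mention, e2 mention)
    position and a large negative number everywhere else.
    """
    flat = [s + m
            for row_s, row_m in zip(pair_scores, pair_mask)
            for s, m in zip(row_s, row_m)]
    return logsumexp(flat)


pair_scores = [[2.0, -1.0], [0.5, 3.0]]
pair_mask = [[0.0, -1e8], [-1e8, 0.0]]  # only the diagonal pairs are valid
score = entity_level_score(pair_scores, pair_mask)
print(score)
```

Log-sum-exp behaves like a smooth maximum: the result is dominated by the highest-scoring valid pair while the other valid pairs still contribute.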

2.4 Entity Level Prediction

    def bilinear(self, inputs1, inputs2, output_size, add_bias2=True, add_bias1=True, add_bias=False,
                 initializer=None, scope=None, moving_params=None):
        """"""
        with tf.variable_scope(scope or 'Bilinear'):
            # Reformat the inputs
            ndims = len(inputs1.get_shape().as_list())
            inputs1_shape = tf.shape(inputs1)
            inputs1_bucket_size = inputs1_shape[ndims-2]
            inputs1_size = inputs1.get_shape().as_list()[-1]

            inputs2_shape = tf.shape(inputs2)
            inputs2_bucket_size = inputs2_shape[ndims-2]
            inputs2_size = inputs2.get_shape().as_list()[-1]
            output_shape = []
            batch_size = 1
            for i in range(ndims-2):
                batch_size *= inputs1_shape[i]
                output_shape.append(inputs1_shape[i])
            output_shape.append(inputs1_bucket_size)
            output_shape.append(output_size)
            output_shape.append(inputs2_bucket_size)
            inputs1 = tf.reshape(inputs1, [batch_size, inputs1_bucket_size, inputs1_size])
            inputs2 = tf.reshape(inputs2, [batch_size, inputs2_bucket_size, inputs2_size])
            if add_bias1:
                inputs1 = tf.concat([inputs1, tf.ones([batch_size, inputs1_bucket_size, 1])], 2)
            if add_bias2:
                inputs2 = tf.concat([inputs2, tf.ones([batch_size, inputs2_bucket_size, 1])], 2)

            # Get the matrix
            if initializer is None and moving_params is None:
                mat = orthonormal_initializer(inputs1_size+add_bias1, inputs2_size+add_bias2)[:,None,:]
                mat = np.concatenate([mat]*output_size, axis=1)
                initializer = tf.constant_initializer(mat)
            weights = tf.get_variable('Weights', [inputs1_size+add_bias1, output_size, inputs2_size+add_bias2], initializer=initializer)
            if moving_params is not None:
                weights = moving_params.average(weights)
            else:
                tf.add_to_collection('Weights', weights)

            # Do the multiplications
            # (bn x d) (d x rd) -> (bn x rd)
            lin = tf.matmul(tf.reshape(inputs1, [-1, inputs1_size+add_bias1]),
                            tf.reshape(weights, [inputs1_size+add_bias1, -1]))
            # (b x nr x d) (b x n x d)T -> (b x nr x n)
            bilin = tf.matmul(tf.reshape(lin, [batch_size, inputs1_bucket_size*output_size, inputs2_size+add_bias2]),
                              inputs2, adjoint_b=True)
            # (bn x r x n)
            bilin = tf.reshape(bilin, [-1, output_size, inputs2_bucket_size])
            # (b x n x r x n)
            bilin = tf.reshape(bilin, output_shape)

            # Get the bias
            if add_bias:
                bias = tf.get_variable('Biases', [output_size], initializer=tf.zeros_initializer)
                if moving_params is not None:
                    bias = moving_params.average(bias)
                bilin += tf.expand_dims(bias, 1)

            return bilin
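The reshapes and matmuls above amount to computing, for every token pair (i, j) and every label r, the score [x_i; 1]^T W[:, r, :] [y_j; 1]. A loop-based sketch for a single example (no batch axis) that spells this out; `biaffine_scores` and the toy weights are assumptions for illustration:

```python
def biaffine_scores(inputs1, inputs2, weights):
    """scores[i][r][j] = [x_i; 1]^T  W[:, r, :]  [y_j; 1].

    inputs1: n x d, inputs2: m x d, weights: (d+1) x r x (d+1), nested lists.
    """
    xs = [x + [1.0] for x in inputs1]  # append the bias feature
    ys = [y + [1.0] for y in inputs2]
    num_labels = len(weights[0])
    return [
        [
            [
                sum(x[a] * weights[a][r][b] * y[b]
                    for a in range(len(x)) for b in range(len(y)))
                for y in ys
            ]
            for r in range(num_labels)
        ]
        for x in xs
    ]


# d = 1, two labels: label 0 scores x*y, label 1 only picks up the bias term.
weights = [
    [[1.0, 0.0], [0.0, 0.0]],  # a = 0 (the feature row)
    [[0.0, 0.0], [0.0, 1.0]],  # a = 1 (the bias row)
]
scores = biaffine_scores([[2.0], [3.0]], [[4.0]], weights)
print(scores)
```

The output is indexed as n x r x m, which matches the `(b x n x r x n)` layout noted in the comments once the batch axis is dropped.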

aggregate_tokens() produces the entity-pair scores. It is invoked at the `encoded_seq = self.aggregate_tokens(...)` call in the following method:

    def embed_text_from_tokens(self, selected_col_embeddings, attention_vector, e1_dist_batch, e2_dist_batch, seq_lens,
                               middle_dropout_keep_prob, hidden_dropout_keep_prob, final_dropout_keep,
                               scope_name='text', reuse=False, aggregation='piecewise', return_tokens=False):
        batch_size = tf.shape(selected_col_embeddings)[0]
        max_seq_len = tf.shape(selected_col_embeddings)[1]

        output = []
        last_output = selected_col_embeddings
        if not reuse:
            print('___aggregation type:  %s filter %d  block repeats: %d___'
                  % (aggregation, self.filter_width, self.block_repeats))
        for i in range(1):
            block_reuse = (reuse if i == 0 else True)
            encoded_tokens = self.forward(last_output, middle_dropout_keep_prob,
                                          hidden_dropout_keep_prob, batch_size, max_seq_len, block_reuse, i)
            if return_tokens:
                output.append(encoded_tokens)
            else:
                encoded_seq = self.aggregate_tokens(encoded_tokens, batch_size, max_seq_len,
                                                    attention_vector, e1_dist_batch, e2_dist_batch, seq_lens,
                                                    middle_dropout_keep_prob, hidden_dropout_keep_prob, final_dropout_keep,
                                                    scope_name=scope_name, reuse=block_reuse, aggregation=aggregation)
                output.append(encoded_seq)
            last_output = encoded_tokens
        return output

That result is returned through embed_text_from_tokens(), which embed_text() calls in turn:

    def embed_text(self, token_embeddings, position_embeddings, attention_vector,  # final result
                   scope_name='text', reuse=False, aggregation='attention', no_dropout=False,
                   return_tokens=False, token_attention=None,):
        selected_col_embeddings = self.get_token_embeddings(token_embeddings, position_embeddings, token_attention)
        if no_dropout:
            middle_dropout_keep_prob, hidden_dropout_keep_prob, final_dropout_keep = 1.0, 1.0, 1.0
        else:
            middle_dropout_keep_prob = self.middle_dropout_keep_prob
            hidden_dropout_keep_prob = self.hidden_dropout_keep_prob
            final_dropout_keep = self.final_dropout_keep

        output = self.embed_text_from_tokens(
            selected_col_embeddings, attention_vector,
            self.e1_dist_batch, self.e2_dist_batch, self.seq_len_batch,
            middle_dropout_keep_prob, hidden_dropout_keep_prob, final_dropout_keep,
            scope_name, reuse, aggregation, return_tokens=return_tokens)
        return output

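The output of embed_text() is compared with the labels to compute the loss, which is then minimized by gradient descent to train the model: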

    encoded_text_list = self.text_encoder.embed_text(self.token_embeddings, self.position_embeddings,
                                                     self.attention_vector, token_attention=token_attention)

    self.loss = self.calculate_loss(encoded_text_list, predictions_list, self.label_batch,
                                    FLAGS.l2_weight, FLAGS.dropout_loss_weight, no_drop_output_list)
