WordCount with Hive on Hadoop

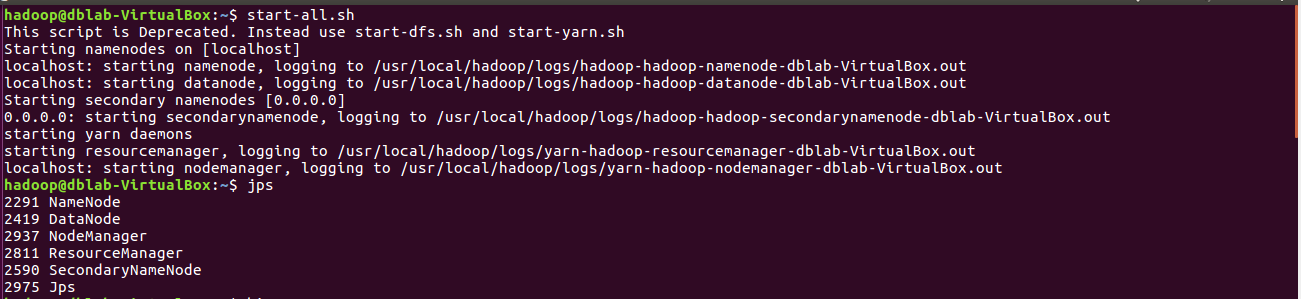

Start Hadoop

```shell
start-all.sh
```

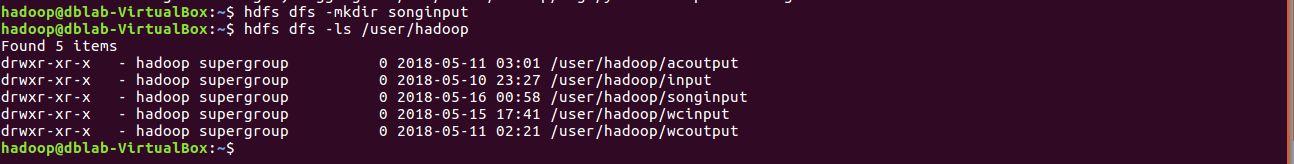

Create a directory on HDFS

```shell
# a relative path is created under the user's HDFS home directory (/user/hadoop here)
hdfs dfs -mkdir songinput
hdfs dfs -ls /user/hadoop
```

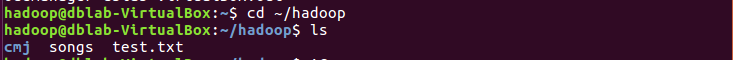

Upload the file to HDFS:

Download the lyrics file songs.txt, save it in ~/hadoop, and list the directory

```shell
cd ~/hadoop
ls
```

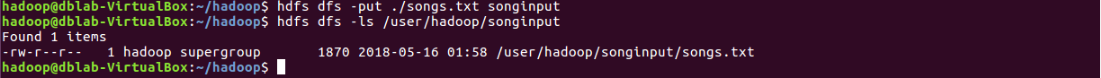

Upload it to HDFS

```shell
hdfs dfs -put ./songs.txt songinput
hdfs dfs -ls /user/hadoop/songinput
```

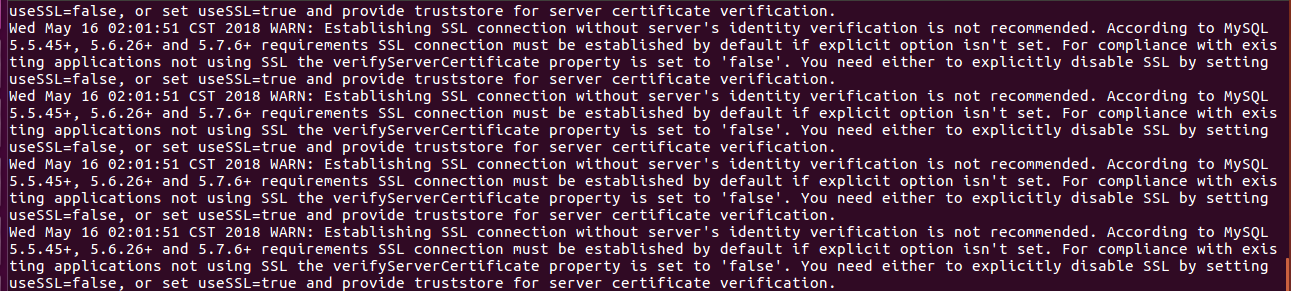

Start Hive

```shell
hive
```

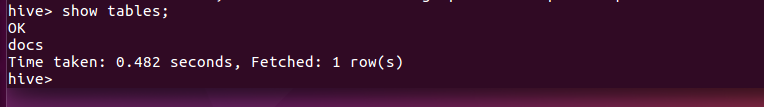

Create a table docs to hold the raw text

```sql
create table docs(line string);
show tables;
```

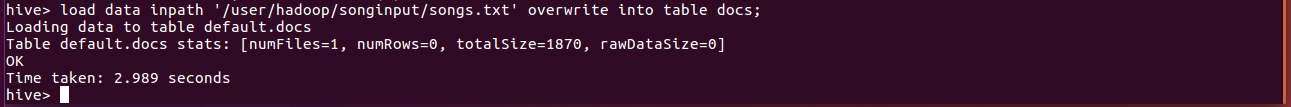

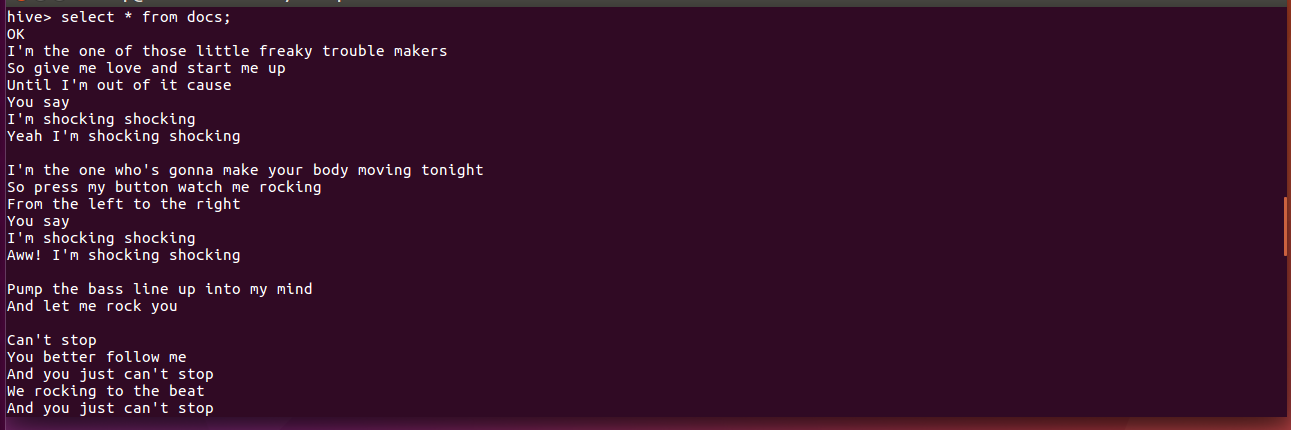

Load the file contents into table docs and view them

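```sql
-- without LOCAL, LOAD DATA INPATH moves (rather than copies) the HDFS file
-- into the table's warehouse directory
load data inpath '/user/hadoop/songinput/songs.txt' overwrite into table docs;
select * from docs;
```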
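A quick sanity check at this point: with Hive's default text format, each newline-terminated line of songs.txt becomes one row of docs, so `select count(*) from docs;` should return the same number as a line count of the local file. The sketch below assumes the local copy is still at ~/hadoop/songs.txt (`hdfs dfs -put` copies the file rather than moving it); the function name `count_lines` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: print the number of lines in a text file.
# awk's NR also counts a final line that lacks a trailing newline,
# which Hive likewise reads as a row; empty lines count too.
count_lines() {
  awk 'END { print NR }' "$1"
}

# Assumed path of the local copy from the earlier step.
songs="$HOME/hadoop/songs.txt"
if [ -f "$songs" ]; then
  count_lines "$songs"
fi
```

If the two numbers differ, check the file for Windows (CRLF) or other non-Unix line endings.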

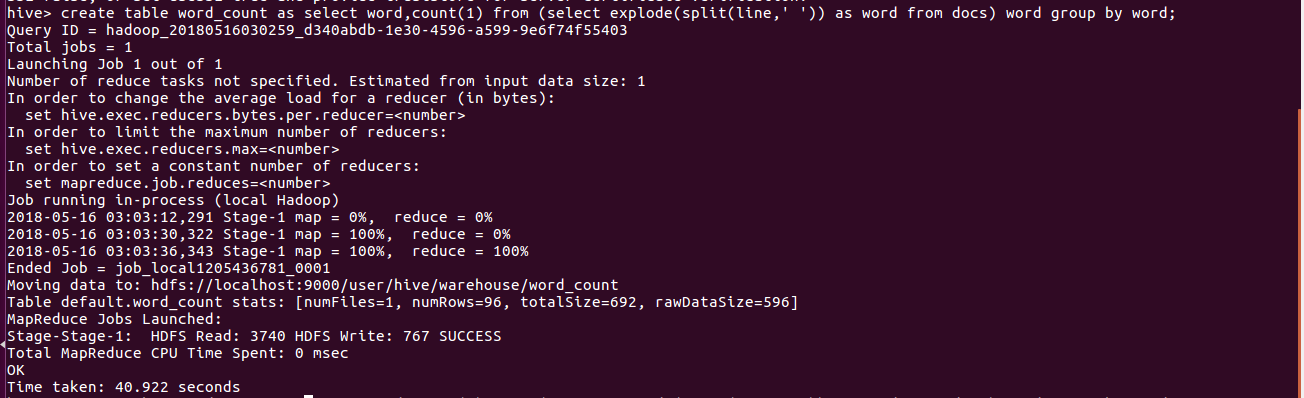

Count word frequencies with HQL and store the result in table word_count

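```sql
-- split each line on single spaces, explode the array into one row per word,
-- then group identical words and count them; the unaliased count(1)
-- column gets Hive's auto-generated name _c1
create table word_count as
select word, count(1)
from (select explode(split(line, ' ')) as word from docs) word
group by word;
```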
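To double-check the Hive result without Hadoop, the same logic can be approximated with standard Unix tools on the local copy of songs.txt. This is a sketch under stated assumptions: Unix line endings, the local file at ~/hadoop/songs.txt, and the hypothetical function name `word_count_local`. Because `split(line, ' ')` splits on every single space, two adjacent spaces produce an empty string that Hive also counts as a "word"; translating each space into a newline reproduces that behavior.

```shell
#!/bin/sh
# Hypothetical local equivalent of the HQL query, printing "word<TAB>count":
#   split(line, ' ') + explode -> tr puts every space-separated token on its own line
#   group by word, count(1)    -> sort | uniq -c
# LC_ALL=C makes sort and uniq compare raw bytes; the final awk moves the
# count after the word without re-splitting the word itself.
word_count_local() {
  tr ' ' '\n' < "$1" \
    | LC_ALL=C sort \
    | LC_ALL=C uniq -c \
    | awk '{ n = $1; sub(/^ *[0-9]+ /, ""); print $0 "\t" n }'
}

# Assumed path of the local copy from the earlier step.
songs="$HOME/hadoop/songs.txt"
if [ -f "$songs" ]; then
  word_count_local "$songs"
fi
```

Hive returns the rows of word_count in no particular order, so compare the counts word by word rather than line by line.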

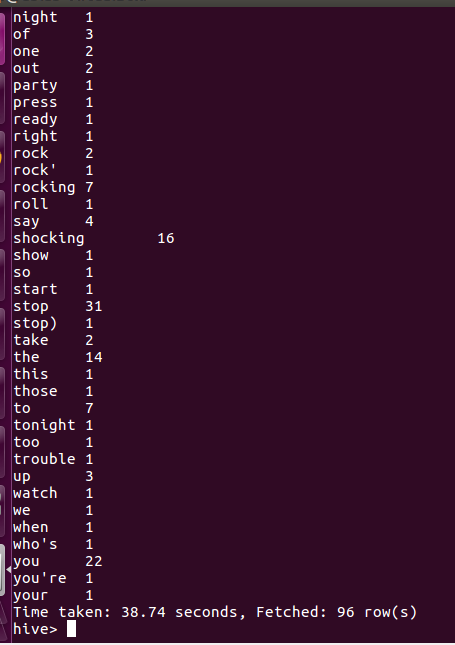

View the results

```sql
select * from word_count;
```

Zhejiang Public Security Bureau registration No. 33010602011771
