Environment Setup

I. Create the database tables

1. Create the database and tables

    /*
    SQLyog Ultimate v12.08 (64 bit)
    MySQL - 5.6.42 : Database - zghPortrait
    *********************************************************************
    */
    /*!40101 SET NAMES utf8 */;
    /*!40101 SET SQL_MODE=''*/;
    /*!40014 SET @OLD_UNIQUE_CHECKS=@@UNIQUE_CHECKS, UNIQUE_CHECKS=0 */;
    /*!40014 SET @OLD_FOREIGN_KEY_CHECKS=@@FOREIGN_KEY_CHECKS, FOREIGN_KEY_CHECKS=0 */;
    /*!40101 SET @OLD_SQL_MODE=@@SQL_MODE, SQL_MODE='NO_AUTO_VALUE_ON_ZERO' */;
    /*!40111 SET @OLD_SQL_NOTES=@@SQL_NOTES, SQL_NOTES=0 */;

    CREATE DATABASE /*!32312 IF NOT EXISTS*/`zghPortrait` /*!40100 DEFAULT CHARACTER SET utf8 COLLATE utf8_bin */;

    USE `zghPortrait`;

    /*Table structure for table `userInfo` */

    DROP TABLE IF EXISTS `userInfo`;

    -- registerTime is declared without ON UPDATE CURRENT_TIMESTAMP so that
    -- later updates to a row do not overwrite the registration time.
    CREATE TABLE `userInfo` (
      `userid` int(20) DEFAULT NULL COMMENT 'user id',
      `username` varchar(50) COLLATE utf8_bin DEFAULT NULL COMMENT 'name',
      `password` varchar(50) COLLATE utf8_bin DEFAULT NULL COMMENT 'password',
      `sex` int(1) DEFAULT NULL COMMENT 'sex',
      `telphone` varchar(50) COLLATE utf8_bin DEFAULT NULL COMMENT 'mobile number',
      `email` varchar(50) COLLATE utf8_bin DEFAULT NULL COMMENT 'email',
      `age` int(20) DEFAULT NULL COMMENT 'age',
      `idCard` varchar(50) COLLATE utf8_bin DEFAULT NULL COMMENT 'ID card number',
      `registerTime` timestamp NOT NULL DEFAULT CURRENT_TIMESTAMP COMMENT 'registration time',
      `usetype` int(1) DEFAULT NULL COMMENT 'client type: 0 PC; 1 mobile; 2 mini program'
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

    /*Data for the table `userInfo` */

    /*Table structure for table `userdetail` */

    DROP TABLE IF EXISTS `userdetail`;

    CREATE TABLE `userdetail` (
      `userdetailid` int(20) NOT NULL COMMENT 'detail id',
      `userid` int(20) DEFAULT NULL COMMENT 'user id',
      `edu` int(1) DEFAULT NULL COMMENT 'education level',
      `profession` varchar(20) COLLATE utf8_bin DEFAULT NULL COMMENT 'profession',
      `marriage` int(1) DEFAULT NULL COMMENT 'marital status: 1 unmarried; 2 married; 3 divorced',
      `haschild` int(1) DEFAULT NULL COMMENT '1 no children; 2 has children; 3 unknown',
      `hascar` int(1) DEFAULT NULL COMMENT '1 has a car; 2 no car; 3 unknown',
      `hashourse` int(1) DEFAULT NULL COMMENT '1 owns a home; 2 no home; 3 unknown',
      `telphonebrand` varchar(50) COLLATE utf8_bin DEFAULT NULL COMMENT 'phone brand',
      PRIMARY KEY (`userdetailid`)
    ) ENGINE=InnoDB DEFAULT CHARSET=utf8 COLLATE=utf8_bin;

    /*Data for the table `userdetail` */

    /*!40101 SET SQL_MODE=@OLD_SQL_MODE */;
    /*!40014 SET FOREIGN_KEY_CHECKS=@OLD_FOREIGN_KEY_CHECKS */;
    /*!40014 SET UNIQUE_CHECKS=@OLD_UNIQUE_CHECKS */;
    /*!40111 SET SQL_NOTES=@OLD_SQL_NOTES */;

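II. Create the Maven project

1. Create the parent project zghPortraitMain, then create the module analySrv under it. zghPortraitMain aggregates analySrv through its modules section. Note that the analySrv POM in step 2 declares org.apache.flink:flink-examples as its parent, so analySrv takes its version (and the value of ${project.version}) from the Flink parent rather than from zghPortraitMain.

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.zgh.portrait</groupId>
        <artifactId>zghPortraitMain</artifactId>
        <packaging>pom</packaging>
        <version>1.0-SNAPSHOT</version>

        <modules>
            <module>analySrv</module>
        </modules>
    </project>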

2. Add the dependencies to analySrv. If a dependency download makes no progress, stop the task, delete the corresponding artifact directory from the local repository, then reimport or refresh the project to download it again.

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
        <modelVersion>4.0.0</modelVersion>

        <groupId>com.zgh.portrait</groupId>
        <!-- the version is inherited from the parent -->
        <!--<version>1.0-SNAPSHOT</version>-->
        <artifactId>analySrv</artifactId>

        <parent>
            <groupId>org.apache.flink</groupId>
            <artifactId>flink-examples</artifactId>
            <version>1.7.0</version>
        </parent>

        <properties>
            <scala.version>2.11.12</scala.version>
            <scala.binary.version>2.11</scala.binary.version>
        </properties>

        <dependencies>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-streaming-java_${scala.binary.version}</artifactId>
                <version>${project.version}</version>
            </dependency>
            <dependency>
                <groupId>org.apache.flink</groupId>
                <artifactId>flink-scala_2.11</artifactId>
                <version>${project.version}</version>
            </dependency>
        </dependencies>
    </project>

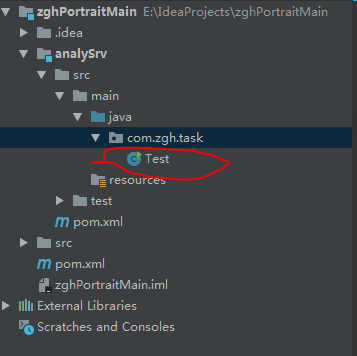
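The cleanup described in step 2 can be scripted. The following is a minimal sketch, not part of the original project: it assumes the stalled downloads left Maven's `*.lastUpdated` marker files behind, and the function name `purge_stalled_artifacts` is hypothetical.

```shell
#!/bin/sh
# purge_stalled_artifacts REPO_DIR
# Removes every directory under REPO_DIR that contains a *.lastUpdated marker
# (left behind by an incomplete download), so Maven fetches the artifact again.
purge_stalled_artifacts() {
    repo="$1"
    [ -d "$repo" ] || { echo "no such repository: $repo" >&2; return 1; }

    # Collect the affected directories first, then delete them,
    # so find is not traversing directories while they disappear.
    dirs=$(find "$repo" -type f -name '*.lastUpdated' -exec dirname {} \; | sort -u)

    printf '%s\n' "$dirs" | while IFS= read -r dir; do
        if [ -n "$dir" ]; then
            rm -rf -- "$dir"
            echo "removed $dir"
        fi
    done
}

# Typical call (the default local repository location is an assumption):
# purge_stalled_artifacts "$HOME/.m2/repository"
```

After running it, reimport or refresh the project in the IDE so the removed artifacts are downloaded again.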

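3. Create the package com.zgh.task under analySrv and add a Test class. The original skeleton passed null to flatMap and reduce and had no sink, so it could not run; the word-count logic below is only a placeholder that makes the skeleton executable.

    package com.zgh.task;

    import org.apache.flink.api.common.functions.FlatMapFunction;
    import org.apache.flink.api.common.functions.ReduceFunction;
    import org.apache.flink.api.java.DataSet;
    import org.apache.flink.api.java.ExecutionEnvironment;
    import org.apache.flink.api.java.tuple.Tuple2;
    import org.apache.flink.api.java.utils.ParameterTool;
    import org.apache.flink.util.Collector;

    public class Test {
        public static void main(String[] args) {
            final ParameterTool params = ParameterTool.fromArgs(args);

            // set up the execution environment
            final ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

            // make parameters available in the web interface
            env.getConfig().setGlobalJobParameters(params);

            // read the input data (for example from HDFS); pass the path as --input <path>
            DataSet<String> text = env.readTextFile(params.getRequired("input"));

            // placeholder logic: emit (word, 1) for every whitespace-separated token
            DataSet<Tuple2<String, Integer>> map = text.flatMap(
                new FlatMapFunction<String, Tuple2<String, Integer>>() {
                    @Override
                    public void flatMap(String line, Collector<Tuple2<String, Integer>> out) {
                        for (String word : line.split("\\s+")) {
                            if (!word.isEmpty()) {
                                out.collect(new Tuple2<>(word, 1));
                            }
                        }
                    }
                });

            // group by the word (tuple field 0) and sum the counts
            DataSet<Tuple2<String, Integer>> reduce = map.groupBy(0).reduce(
                new ReduceFunction<Tuple2<String, Integer>>() {
                    @Override
                    public Tuple2<String, Integer> reduce(Tuple2<String, Integer> a,
                                                          Tuple2<String, Integer> b) {
                        return new Tuple2<>(a.f0, a.f1 + b.f1);
                    }
                });

            // a DataSet job needs at least one sink before execute() is called
            reduce.printOnTaskManager("test");

            try {
                env.execute("test");
            } catch (Exception e) {
                e.printStackTrace();
            }
        }
    }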

III. Set up Hadoop

1. Change the hostname to master, map it to the machine's IP address in /etc/hosts, then log in again.

    [root@localhost ~]# vim /etc/hostname
    [root@localhost ~]# cat /etc/hostname
    master
    [root@localhost ~]# cat /etc/hosts
    127.0.0.1   localhost localhost.localdomain localhost4 localhost4.localdomain4
    ::1         localhost localhost.localdomain localhost6 localhost6.localdomain6
    10.20.0.129 master
    [root@localhost ~]#
    Last login: Fri Mar 1 10:16:35 2019
    [root@master ~]#
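Before moving on, it can help to confirm that the hosts file really maps master to the expected address. A minimal sketch, assuming a plain hosts-file layout (address first, names after it); the function name `host_ip` is hypothetical.

```shell
#!/bin/sh
# host_ip HOSTS_FILE NAME
# Prints the first address that HOSTS_FILE maps NAME to.
# Returns non-zero if NAME is not listed.
host_ip() {
    awk -v name="$2" '
        /^[[:space:]]*#/ { next }    # skip comment lines
        {
            # field 1 is the address, the remaining fields are host names
            for (i = 2; i <= NF; i++)
                if ($i == name) { print $1; found = 1; exit }
        }
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Typical call on the machine configured above:
# host_ip /etc/hosts master
```

On the machine from this walkthrough the call should print the address entered in /etc/hosts for master.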

2. Generate an SSH key pair (press Enter to accept the defaults), then set up key-based login.

    [root@master ~]# ssh-keygen -t rsa
    Generating public/private rsa key pair.
    Enter file in which to save the key (/root/.ssh/id_rsa):
    [root@master ~]# ssh-copy-id master
    /usr/bin/ssh-copy-id: INFO: Source of key(s) to be installed: "/root/.ssh/id_rsa.pub"
    [root@master ~]# ls ~/.ssh/
    authorized_keys  id_rsa  id_rsa.pub  known_hosts
    [root@master ~]# cat ~/.ssh/authorized_keys
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAABAQDDmisZgUIF36e10nYnff1YqoqXFabUW7FTEZ1uO2w6W2Xq9R95ywaRqElMUccNxaruI8T5NFJ0uGpD3Bbv7TOHr42DTalA7MYU3gLUCs5rFXQATKbRA3veQa9IN2KYSxwrvqfmJCVbJbLMEsTPvobWDaOvDAacWdwh5ppliY3ngI8tgevttXblFWPrfbThXdIws3/vu04plRSEYxUn0Dvp9y9s4fOBClSCCvAmo2y2D3wK3ut2qnwyM8jVL1Fy58tke/w4ZMtRb83Tt7lRFpY5InxGmxNH68sB1ql2YXzKrIPP3ShuLFldQkp8qK/uvLC8GwcHT7trL+X3wyDBrkX9 root@master

Verify that the login now works without a password:

    [root@master ~]# ssh master
    Last login: Fri Mar 1 10:17:55 2019 from 10.20.0.1
    [root@master ~]#

3. Download the JDK, extract it, and move it to /usr/local/jdk

    [root@master hadoop]# ls
    jdk-8u201-linux-x64.tar.gz
    [root@master hadoop]# tar -zxvf jdk-8u201-linux-x64.tar.gz
    [root@master hadoop]# ls
    jdk1.8.0_201  jdk-8u201-linux-x64.tar.gz
    [root@master hadoop]# mv jdk1.8.0_201/ /usr/local/jdk

4. Download Hadoop: http://archive.apache.org/dist/hadoop/core/

    [root@master hadoop]# tar -zxvf hadoop-2.6.0.tar.gz
    [root@master hadoop]# mv hadoop-2.6.0/ /usr/local/hadoop

5. Point Hadoop at the JDK by setting JAVA_HOME in hadoop-env.sh

    [root@master ~]# cd /usr/local/hadoop/etc/hadoop/
    [root@master hadoop]# vim hadoop-env.sh

    export JAVA_HOME=/usr/local/jdk

6. Configure core-site.xml

    [root@master hadoop]# vim core-site.xml

    <configuration>
        <property>
            <name>fs.default.name</name>
            <value>hdfs://master:9000</value>
        </property>
        <property>
            <name>hadoop.tmp.dir</name>
            <value>/usr/local/hadoop/tmp</value>
        </property>
    </configuration>
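Typos in the site files are a common cause of start-up failures, so it can be useful to read a value back after editing. The sketch below is an illustration only: `hadoop_prop` is a hypothetical helper that uses plain text matching rather than a real XML parser, and it assumes that each name element precedes its value element and that no XML comments sit between them.

```shell
#!/bin/sh
# hadoop_prop FILE NAME
# Prints the <value> of the property called NAME in a Hadoop-style *-site.xml.
# Returns non-zero if the property is not present.
hadoop_prop() {
    # Join the file into one line, then split it into per-property chunks.
    tr -d '\n' < "$1" | awk -v name="$2" '
        {
            n = split($0, props, "</property>")
            for (i = 1; i <= n; i++) {
                if (index(props[i], "<name>" name "</name>") == 0) continue
                s = props[i]
                sub(/.*<value>/, "", s)      # drop everything up to the value
                sub(/<\/value>.*/, "", s)    # drop everything after it
                print s
                found = 1
                exit
            }
        }
        END { exit found ? 0 : 1 }'
}

# Typical call on the machine configured above:
# hadoop_prop /usr/local/hadoop/etc/hadoop/core-site.xml fs.default.name
```

Once Hadoop is on the PATH, `hdfs getconf -confKey fs.default.name` reports the value that Hadoop itself resolves, which is the more authoritative check.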

7. Configure hdfs-site.xml

    [root@master hadoop]# vim hdfs-site.xml

    <configuration>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
        <property>
            <name>dfs.permissions</name>
            <value>true</value>
        </property>
    </configuration>

8. Configure mapred-site.xml (copy it from the bundled template first)

    [root@master hadoop]# cp mapred-site.xml.template mapred-site.xml
    [root@master hadoop]# vim mapred-site.xml

    <configuration>
        <property>
            <name>mapreduce.framework.name</name>
            <value>yarn</value>
        </property>
        <property>
            <name>mapreduce.jobhistory.address</name>
            <value>master:10020</value>
        </property>
    </configuration>

9. Configure yarn-site.xml

    [root@master hadoop]# vim yarn-site.xml

    <configuration>
        <!-- Site specific YARN configuration properties -->
        <property>
            <name>yarn.resourcemanager.hostname</name>
            <value>master</value>
        </property>
        <property>
            <name>yarn.nodemanager.aux-services</name>
            <value>mapreduce_shuffle</value>
        </property>
        <property>
            <name>mapreduce.job.ubertask.enable</name>
            <value>true</value>
        </property>
    </configuration>

10. Configure the environment variables

    [root@master hadoop]# vim /etc/profile

Append the following to the end of the file:

    export JAVA_HOME=/usr/local/jdk
    export HADOOP_HOME=/usr/local/hadoop
    export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$PATH

Apply the changes to the current shell and confirm that the JDK tools are found:

    [root@master hadoop]# source /etc/profile
    [root@master hadoop]# jps
    31134 Jps
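If a command such as jps or start-dfs.sh is still not found after sourcing the profile, a quick check of the PATH entries narrows it down. A minimal sketch; `path_has` is a hypothetical helper, and its optional second argument exists only so the check can be run against any PATH-style string.

```shell
#!/bin/sh
# path_has DIR [PATH_STRING]
# Succeeds if DIR is one of the colon-separated entries of PATH_STRING
# (defaults to the current $PATH).
path_has() {
    p="${2-$PATH}"
    case ":$p:" in
        *":$1:"*) return 0 ;;
        *)        return 1 ;;
    esac
}

# Typical check for the directories added above:
# for d in /usr/local/jdk/bin /usr/local/hadoop/bin /usr/local/hadoop/sbin; do
#     path_has "$d" || echo "missing from PATH: $d"
# done
```

Wrapping the string in colons on both sides makes the match exact, so /usr/local/hadoop/bin is not mistaken for /usr/local/hadoop/bin2.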

11. Start Hadoop. Format the NameNode (needed only before the first start), then start HDFS and YARN; start-all.sh starts both in one step. Finally stop the firewall so the web consoles can be reached from another machine.

    [root@master hadoop]# hadoop namenode -format
    [root@master hadoop]# start-dfs.sh
    [root@master hadoop]# start-yarn.sh
    [root@master ~]# jps
    2518 DataNode
    2886 NodeManager
    2791 ResourceManager
    2648 SecondaryNameNode
    3180 Jps
    2429 NameNode
    [root@master hadoop]# systemctl stop firewalld.service
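Reading the jps listing by eye gets error-prone once more services are added. The check can be sketched as a small function, assuming the usual two-column jps output (pid, then process name); `missing_daemons` is a hypothetical name.

```shell
#!/bin/sh
# missing_daemons "JPS_OUTPUT" NAME...
# Prints each NAME that does not appear as a process name in the given
# jps output. Returns non-zero if at least one is missing.
missing_daemons() {
    out="$1"; shift
    rc=0
    for name in "$@"; do
        # compare the second column exactly, so "Node" does not match "NameNode"
        if ! printf '%s\n' "$out" |
             awk -v n="$name" '$2 == n { ok = 1 } END { exit ok ? 0 : 1 }'; then
            echo "$name"
            rc=1
        fi
    done
    return $rc
}

# Typical call on the node itself:
# missing_daemons "$(jps)" NameNode DataNode SecondaryNameNode ResourceManager NodeManager
```

An empty result means all five Hadoop daemons from the listing above are running; any name printed points at the log file to inspect under /usr/local/hadoop/logs.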

12. Open the Hadoop web console in a browser: http://10.20.0.129:50070/

IV. Install HBase

1. Download ZooKeeper: http://mirror.bit.edu.cn/apache/zookeeper/

    [root@master hadoop]# tar -zxvf zookeeper-3.4.10.tar.gz
    [root@master hadoop]# mv zookeeper-3.4.10/ /usr/local/zk

2. Configure the ZooKeeper data directory. Copy the sample configuration to zoo.cfg and set dataDir:

    [root@master hadoop]# cd /usr/local/zk/conf/
    [root@master conf]# cp zoo_sample.cfg zoo.cfg
    [root@master conf]# vim zoo.cfg

    dataDir=/usr/local/zk/data

3. Download HBase: http://archive.cloudera.com/cdh5/cdh/5/

    [root@master hadoop]# tar -zxvf hbase-1.0.0-cdh5.5.1.tar.gz
    [root@master hadoop]# mv hbase-1.0.0-cdh5.5.1/ /usr/local/hbase

4. Point HBase at the JDK by setting JAVA_HOME in hbase-env.sh

    [root@master hadoop]# cd /usr/local/hbase/conf/
    [root@master conf]# vim hbase-env.sh

    export JAVA_HOME=/usr/local/jdk

5. Configure hbase-site.xml

    [root@master conf]# vim hbase-site.xml

    <configuration>
        <property>
            <name>hbase.rootdir</name>
            <value>hdfs://master:9000/hbase</value>
        </property>
        <property>
            <name>hbase.cluster.distributed</name>
            <value>true</value>
        </property>
        <property>
            <name>hbase.zookeeper.quorum</name>
            <value>master</value>
        </property>
        <property>
            <name>dfs.replication</name>
            <value>1</value>
        </property>
    </configuration>

6. Set the regionservers file so that it contains the single host name master

    [root@master conf]# vim regionservers

    master

7. Configure the environment variables. Extend the entries in /etc/profile with the ZooKeeper and HBase paths, then apply the changes:

    [root@master conf]# vim /etc/profile

    export JAVA_HOME=/usr/local/jdk
    export HADOOP_HOME=/usr/local/hadoop
    export ZK_HOME=/usr/local/zk
    export HBASE_HOME=/usr/local/hbase
    export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZK_HOME/bin:$HBASE_HOME/bin:$PATH

    [root@master conf]# source /etc/profile

8. Start HBase. Start ZooKeeper first, check its status, then start HBase:

    [root@master conf]# zkServer.sh start
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zk/bin/../conf/zoo.cfg
    Starting zookeeper ... STARTED
    [root@master conf]# zkServer.sh status
    ZooKeeper JMX enabled by default
    Using config: /usr/local/zk/bin/../conf/zoo.cfg
    Mode: standalone
    [root@master conf]# start-hbase.sh
    master: starting zookeeper, logging to /usr/local/hbase/bin/../logs/hbase-root-zookeeper-master.out
    starting master, logging to /usr/local/hbase/logs/hbase-root-master-master.out
    master: starting regionserver, logging to /usr/local/hbase/bin/../logs/hbase-root-regionserver-master.out

Check the running Java processes:

    [root@master conf]# jps
    4545 HMaster
    5010 Jps
    4197 QuorumPeerMain
    2518 DataNode
    2886 NodeManager
    4678 HRegionServer
    2791 ResourceManager
    2648 SecondaryNameNode
    4472 HQuorumPeer
    2429 NameNode

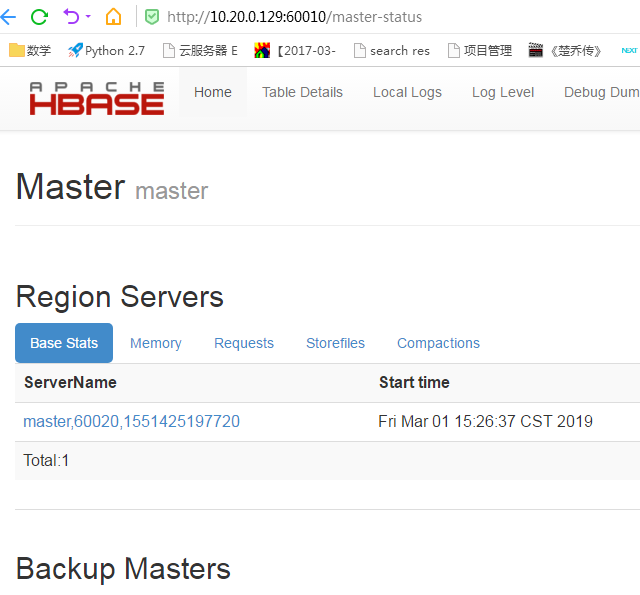

9. Open the HBase console in a browser: http://10.20.0.129:60010/

10. Open the Hadoop console in a browser: http://10.20.0.129:50070

V. Install MongoDB

1. Download MongoDB: https://www.mongodb.com/download-center/community

    [root@master hadoop]# tar -zxvf mongodb-linux-x86_64-3.2.22.tgz
    [root@master hadoop]# mv mongodb-linux-x86_64-3.2.22/ /usr/local/mongodb

2. Configure MongoDB. Create the data, conf, and log directories, then write the configuration file:

    [root@master hadoop]# cd /usr/local/mongodb/
    [root@master mongodb]# mkdir data
    [root@master mongodb]# mkdir conf
    [root@master mongodb]# mkdir log
    [root@master mongodb]# vim conf/mongod.conf
    [root@master mongodb]# cat conf/mongod.conf
    #port = 12345
    dbpath = /usr/local/mongodb/data
    logpath = /usr/local/mongodb/log/mongod.log
    fork = true
    logappend = true
    #bind_ip = 127.0.0.1
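The directory and configuration steps above can also be done in one go, which helps when the setup is repeated on another machine. A minimal sketch under the same layout; `init_mongo_dirs` is a hypothetical helper and the base directory is passed in rather than hard-coded.

```shell
#!/bin/sh
# init_mongo_dirs BASE
# Creates BASE/data, BASE/conf and BASE/log, then writes BASE/conf/mongod.conf
# with the same settings as the listing above, using BASE as the install path.
init_mongo_dirs() {
    base="$1"
    mkdir -p "$base/data" "$base/conf" "$base/log" || return 1

    # Unquoted heredoc delimiter: $base is expanded while writing the file.
    cat > "$base/conf/mongod.conf" <<EOF
#port = 12345
dbpath = $base/data
logpath = $base/log/mongod.log
fork = true
logappend = true
#bind_ip = 127.0.0.1
EOF
}

# Typical call for the layout used in this walkthrough:
# init_mongo_dirs /usr/local/mongodb
```

Because the paths in the file are absolute, mongod can then be started with that configuration from any working directory.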

3. Configure the environment variables. Add the MongoDB path to /etc/profile, then apply the changes:

    [root@master mongodb]# vim /etc/profile

    export JAVA_HOME=/usr/local/jdk
    export HADOOP_HOME=/usr/local/hadoop
    export ZK_HOME=/usr/local/zk
    export HBASE_HOME=/usr/local/hbase
    export MONGO_HOME=/usr/local/mongodb
    export PATH=.:$JAVA_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZK_HOME/bin:$HBASE_HOME/bin:$MONGO_HOME/bin:$PATH

    [root@master mongodb]# source /etc/profile

4. Start MongoDB

    [root@master mongodb]# mongod -f conf/mongod.conf
    about to fork child process, waiting until server is ready for connections.
    forked process: 5662
    child process started successfully, parent exiting

5. Log in with the mongo shell

    [root@master mongodb]# mongo
    > show dbs
    local  0.000GB
    > exit
    bye
    [root@master mongodb]#
