HY-MT1.5-1.8B&7B翻译模型,1.8B参数量实现33语种高效互译

HY-MT1.5-1.8B&7B翻译模型,1.8B参数量实现33语种高效互译

阅读原文

建议阅读原文,始终查看最新文档版本,获得最佳阅读体验:《HY-MT1.5-1.8B&7B翻译模型,1.8B参数量实现33语种高效互译》

https://docs.dingtalk.com/i/nodes/G53mjyd80pAor2A7SvBx3gLL86zbX04v

介绍

模型介绍

混元翻译模型1.5版本,包含一个1.8B翻译模型HY-MT1.5-1.8B和7B翻译模型HY-MT1.5-7B。两个模型均重点支持33语种互译,支持5种民汉/方言。其中HY-MT1.5-7B是WMT25冠军模型的升级版,优化解释性翻译和语种混杂情况,新增支持术语干预、上下文翻译、带格式翻译。HY-MT1.5-1.8B在参数量只有不到HY-MT-7B的三分之一情况下,翻译效果跟HY-MT1.5-7B相近,真正做到的速度又快效果又好。1.8B这个尺寸在经过量化后,能够支持端侧部署和实时翻译场景,应用面广泛。

HY-MT1.5-1.8B是腾讯开源的一个翻译模型,在常用的中外互译和英外互译上,HY-MT1.5-1.8B凭借仅1.8B参数量,全面超越中等尺寸开源模型(如Tower-Plus-72B、Qwen3-32B等)和主流商用翻译API(微软翻译、豆包翻译等),达到Gemini-3.0-Pro这种超大尺寸闭源模型的90分位水平。在WMT25和民汉翻译测试集上,效果仅略微差于Gemini-3.0-Pro,远超其他模型。

核心特性与优势

HY-MT1.5-1.8B同尺寸业界效果最优,超过大部分商用翻译API

HY-MT1.5-1.8B支持端侧部署和实时翻译场景,应用面广泛

HY-MT1.5-7B相比9月份开源版本,优化注释和语种混杂情况

两个模型均支持术语干预、上下文翻译、带格式翻译

新闻

2025.12.30 在Hugging Face开源了 HY-MT1.5-1.8B和HY-MT1.5-7B

2025.9.1 在Hugging Face开源了 Hunyuan-MT-7B和Hunyuan-MT-Chimera-7B。

支持的语种

| Languages | Abbr. | Chinese Names |

|---|---|---|

| Chinese | zh | 中文 |

| English | en | 英语 |

| French | fr | 法语 |

| Portuguese | pt | 葡萄牙语 |

| Spanish | es | 西班牙语 |

| Japanese | ja | 日语 |

| Turkish | tr | 土耳其语 |

| Russian | ru | 俄语 |

| Arabic | ar | 阿拉伯语 |

| Korean | ko | 韩语 |

| Thai | th | 泰语 |

| Italian | it | 意大利语 |

| German | de | 德语 |

| Vietnamese | vi | 越南语 |

| Malay | ms | 马来语 |

| Indonesian | id | 印尼语 |

| Filipino | tl | 菲律宾语 |

| Hindi | hi | 印地语 |

| Traditional Chinese | zh-Hant | 繁体中文 |

| Polish | pl | 波兰语 |

| Czech | cs | 捷克语 |

| Dutch | nl | 荷兰语 |

| Khmer | km | 高棉语 |

| Burmese | my | 缅甸语 |

| Persian | fa | 波斯语 |

| Gujarati | gu | 古吉拉特语 |

| Urdu | ur | 乌尔都语 |

| Telugu | te | 泰卢固语 |

| Marathi | mr | 马拉地语 |

| Hebrew | he | 希伯来语 |

| Bengali | bn | 孟加拉语 |

| Tamil | ta | 泰米尔语 |

| Ukrainian | uk | 乌克兰语 |

| Tibetan | bo | 藏语 |

| Kazakh | kk | 哈萨克语 |

| Mongolian | mn | 蒙古语 |

| Uyghur | ug | 维吾尔语 |

| Cantonese | yue | 粤语 |

官方网站

腾讯混元模型广场:

github:

huggingface:

tencent/HY-MT1.5-1.8B · Hugging Face

推理(HY-MT1.5-1.8B)

使用vllm推理

官方文档:HY-MT/README_CN.md at main · Tencent-Hunyuan/HY-MT

升级vLLM到最新版本:

uv run pip install --upgrade vllm

需要安装指定版本的transformers,腾讯混用团队将在不久后完成对transformers主分支的合入

uv pip install git+https://github.com/huggingface/transformers@4970b23cedaf745f963779b4eae68da281e8c6ca

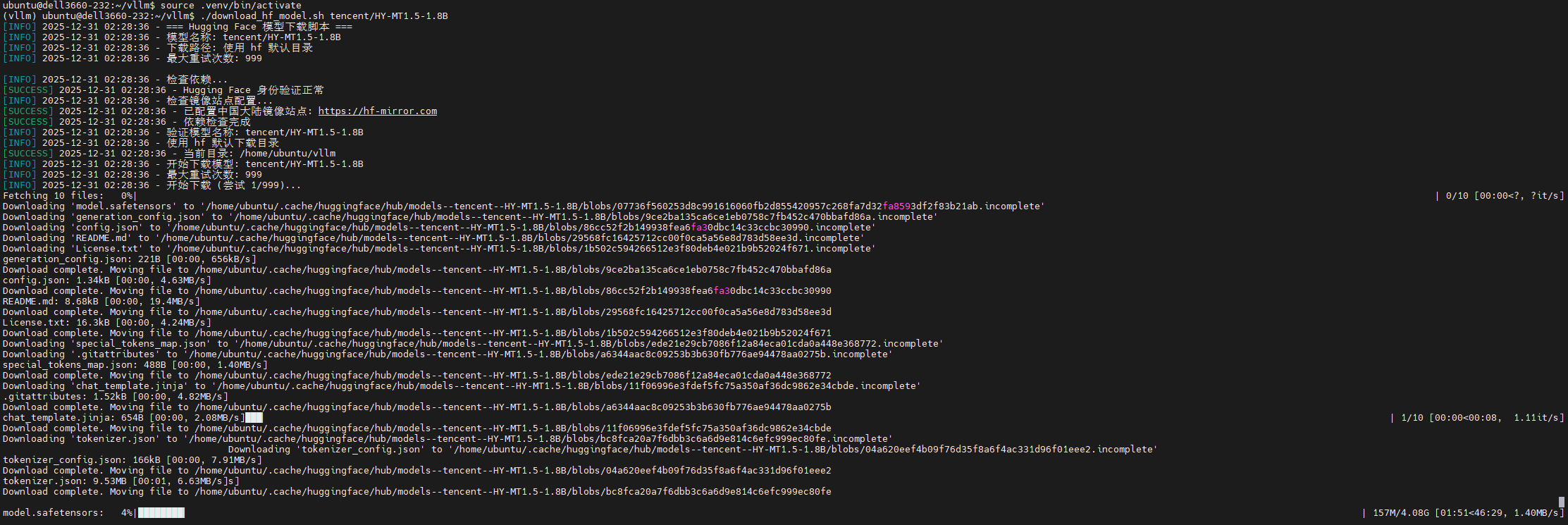

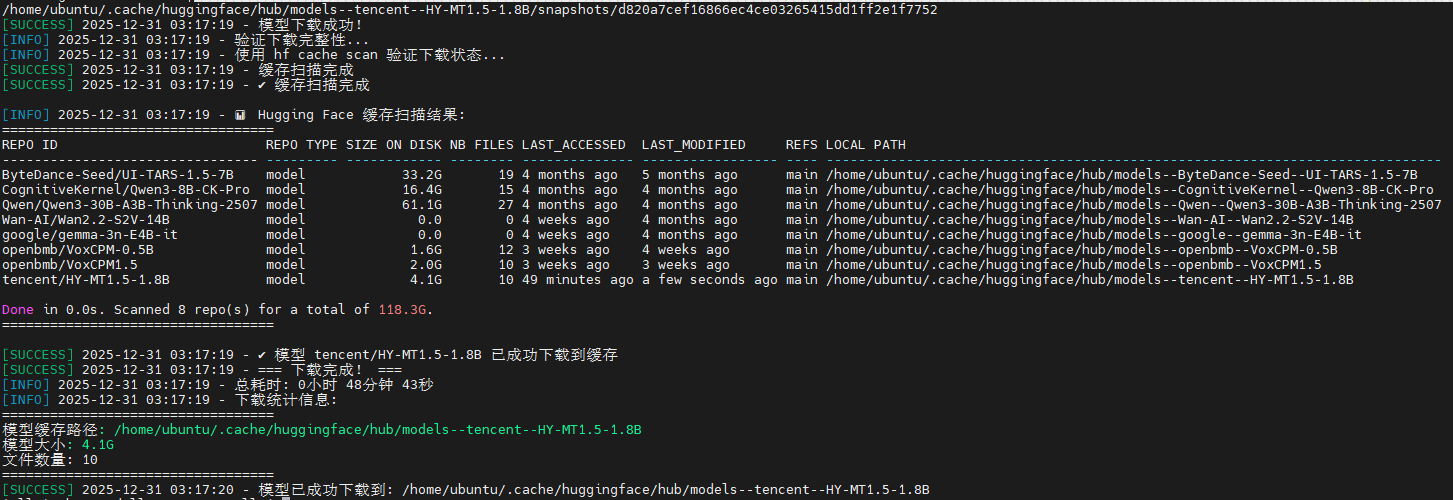

下载模型,我是直接用我自己编写的一个脚本下载的,具体可以看此文:https://docs.dingtalk.com/i/nodes/1R7q3QmWeePQ6rPdUGvG4KgrWxkXOEP2?corpId=

./download_hf_model.sh tencent/HY-MT1.5-1.8B

export MODEL_PATH=/home/ubuntu/.cache/huggingface/hub/models--tencent--HY-MT1.5-1.8B/snapshots/d820a7cef16866ec4ce03265415dd1ff2e1f7752

uv run python -m vllm.entrypoints.openai.api_server \

--host 0.0.0.0 \

--port 8000 \

--model ${MODEL_PATH} \

--trust-remote-code \

--max-model-len 32768 \

--tensor-parallel-size 1 \

--dtype bfloat16 \

--quantization experts_int8 \

--served-model-name hunyuan \

2>&1 | tee vllm_log_server.txt

(vllm) ubuntu@dell3660-232:~/vllm$ uv run python -m vllm.entrypoints.openai.api_server \

--host 0.0.0.0 \

--port 8000 \

--model ${MODEL_PATH} \

--trust-remote-code \

--max-model-len 32768 \

--tensor-parallel-size 1 \

--dtype bfloat16 \

--quantization experts_int8 \

--served-model-name hunyuan \

2>&1 | tee vllm_log_server.txt

(APIServer pid=3201434) INFO 01-04 04:01:36 [api_server.py:1351] vLLM API server version 0.13.0

(APIServer pid=3201434) INFO 01-04 04:01:36 [utils.py:253] non-default args: {'host': '0.0.0.0', 'model': '/home/ubuntu/.cache/huggingface/hub/models--tencent--HY-MT1.5-1.8B/snapshots/d820a7cef16866ec4ce03265415dd1ff2e1f7752', 'trust_remote_code': True, 'dtype': 'bfloat16', 'max_model_len': 32768, 'quantization': 'experts_int8', 'served_model_name': ['hunyuan']}

(APIServer pid=3201434) The argument `trust_remote_code` is to be used with Auto classes. It has no effect here and is ignored.

(APIServer pid=3201434) INFO 01-04 04:01:36 [config.py:373] Replacing legacy 'type' key with 'rope_type'

(APIServer pid=3201434) INFO 01-04 04:01:36 [model.py:514] Resolved architecture: HunYuanDenseV1ForCausalLM

(APIServer pid=3201434) WARNING 01-04 04:01:36 [model.py:2005] Casting bfloat16 to torch.bfloat16.

(APIServer pid=3201434) INFO 01-04 04:01:36 [model.py:1661] Using max model len 32768

(APIServer pid=3201434) INFO 01-04 04:01:36 [scheduler.py:230] Chunked prefill is enabled with max_num_batched_tokens=2048.

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:40 [core.py:93] Initializing a V1 LLM engine (v0.13.0) with config: model='/home/ubuntu/.cache/huggingface/hub/models--tencent--HY-MT1.5-1.8B/snapshots/d820a7cef16866ec4ce03265415dd1ff2e1f7752', speculative_config=None, tokenizer='/home/ubuntu/.cache/huggingface/hub/models--tencent--HY-MT1.5-1.8B/snapshots/d820a7cef16866ec4ce03265415dd1ff2e1f7752', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, tokenizer_revision=None, trust_remote_code=True, dtype=torch.bfloat16, max_seq_len=32768, download_dir=None, load_format=auto, tensor_parallel_size=1, pipeline_parallel_size=1, data_parallel_size=1, disable_custom_all_reduce=False, quantization=experts_int8, enforce_eager=False, kv_cache_dtype=auto, device_config=cuda, structured_outputs_config=StructuredOutputsConfig(backend='auto', disable_fallback=False, disable_any_whitespace=False, disable_additional_properties=False, reasoning_parser='', reasoning_parser_plugin='', enable_in_reasoning=False), observability_config=ObservabilityConfig(show_hidden_metrics_for_version=None, otlp_traces_endpoint=None, collect_detailed_traces=None, kv_cache_metrics=False, kv_cache_metrics_sample=0.01, cudagraph_metrics=False, enable_layerwise_nvtx_tracing=False), seed=0, served_model_name=hunyuan, enable_prefix_caching=True, enable_chunked_prefill=True, pooler_config=None, compilation_config={'level': None, 'mode': <CompilationMode.VLLM_COMPILE: 3>, 'debug_dump_path': None, 'cache_dir': '', 'compile_cache_save_format': 'binary', 'backend': 'inductor', 'custom_ops': ['none'], 'splitting_ops': ['vllm::unified_attention', 'vllm::unified_attention_with_output', 'vllm::unified_mla_attention', 'vllm::unified_mla_attention_with_output', 'vllm::mamba_mixer2', 'vllm::mamba_mixer', 'vllm::short_conv', 'vllm::linear_attention', 'vllm::plamo2_mamba_mixer', 'vllm::gdn_attention_core', 'vllm::kda_attention', 'vllm::sparse_attn_indexer'], 'compile_mm_encoder': False, 'compile_sizes': [], 'compile_ranges_split_points': [2048], 'inductor_compile_config': {'enable_auto_functionalized_v2': False, 'combo_kernels': True, 'benchmark_combo_kernel': True}, 'inductor_passes': {}, 'cudagraph_mode': <CUDAGraphMode.FULL_AND_PIECEWISE: (2, 1)>, 'cudagraph_num_of_warmups': 1, 'cudagraph_capture_sizes': [1, 2, 4, 8, 16, 24, 32, 40, 48, 56, 64, 72, 80, 88, 96, 104, 112, 120, 128, 136, 144, 152, 160, 168, 176, 184, 192, 200, 208, 216, 224, 232, 240, 248, 256, 272, 288, 304, 320, 336, 352, 368, 384, 400, 416, 432, 448, 464, 480, 496, 512], 'cudagraph_copy_inputs': False, 'cudagraph_specialize_lora': True, 'use_inductor_graph_partition': False, 'pass_config': {'fuse_norm_quant': False, 'fuse_act_quant': False, 'fuse_attn_quant': False, 'eliminate_noops': True, 'enable_sp': False, 'fuse_gemm_comms': False, 'fuse_allreduce_rms': False}, 'max_cudagraph_capture_size': 512, 'dynamic_shapes_config': {'type': <DynamicShapesType.BACKED: 'backed'>, 'evaluate_guards': False}, 'local_cache_dir': None}

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:40 [parallel_state.py:1203] world_size=1 rank=0 local_rank=0 distributed_init_method=tcp://10.65.37.233:48023 backend=nccl

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:41 [parallel_state.py:1411] rank 0 in world size 1 is assigned as DP rank 0, PP rank 0, PCP rank 0, TP rank 0, EP rank 0

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:41 [gpu_model_runner.py:3562] Starting to load model /home/ubuntu/.cache/huggingface/hub/models--tencent--HY-MT1.5-1.8B/snapshots/d820a7cef16866ec4ce03265415dd1ff2e1f7752...

(EngineCore_DP0 pid=3201521) /home/ubuntu/vllm/.venv/lib/python3.12/site-packages/tvm_ffi/_optional_torch_c_dlpack.py:174: UserWarning: Failed to JIT torch c dlpack extension, EnvTensorAllocator will not be enabled.

(EngineCore_DP0 pid=3201521) We recommend installing via `pip install torch-c-dlpack-ext`

(EngineCore_DP0 pid=3201521) warnings.warn(

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:42 [cuda.py:351] Using FLASH_ATTN attention backend out of potential backends: ('FLASH_ATTN', 'FLASHINFER', 'TRITON_ATTN', 'FLEX_ATTENTION')

Loading safetensors checkpoint shards: 0% Completed | 0/1 [00:00<?, ?it/s]

Loading safetensors checkpoint shards: 100% Completed | 1/1 [00:00<00:00, 3.25it/s]

Loading safetensors checkpoint shards: 100% Completed | 1/1 [00:00<00:00, 3.25it/s]

(EngineCore_DP0 pid=3201521)

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:42 [default_loader.py:308] Loading weights took 0.34 seconds

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:43 [gpu_model_runner.py:3659] Model loading took 3.3988 GiB memory and 1.394396 seconds

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:46 [backends.py:643] Using cache directory: /home/ubuntu/.cache/vllm/torch_compile_cache/499824d105/rank_0_0/backbone for vLLM's torch.compile

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:46 [backends.py:703] Dynamo bytecode transform time: 3.61 s

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:49 [backends.py:261] Cache the graph of compile range (1, 2048) for later use

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:50 [backends.py:278] Compiling a graph for compile range (1, 2048) takes 1.33 s

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:50 [monitor.py:34] torch.compile takes 4.94 s in total

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:51 [gpu_worker.py:375] Available KV cache memory: 5.91 GiB

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:51 [kv_cache_utils.py:1291] GPU KV cache size: 96,752 tokens

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:51 [kv_cache_utils.py:1296] Maximum concurrency for 32,768 tokens per request: 2.95x

Capturing CUDA graphs (mixed prefill-decode, PIECEWISE): 100%|██████████| 51/51 [00:02<00:00, 22.64it/s]

Capturing CUDA graphs (decode, FULL): 100%|██████████| 35/35 [00:01<00:00, 29.64it/s]

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:55 [gpu_model_runner.py:4587] Graph capturing finished in 4 secs, took 0.47 GiB

(EngineCore_DP0 pid=3201521) INFO 01-04 04:01:55 [core.py:259] init engine (profile, create kv cache, warmup model) took 12.25 seconds

(APIServer pid=3201434) INFO 01-04 04:01:55 [api_server.py:1099] Supported tasks: ['generate']

(APIServer pid=3201434) WARNING 01-04 04:01:55 [model.py:1487] Default sampling parameters have been overridden by the model's Hugging Face generation config recommended from the model creator. If this is not intended, please relaunch vLLM instance with `--generation-config vllm`.

(APIServer pid=3201434) INFO 01-04 04:01:55 [serving_responses.py:201] Using default chat sampling params from model: {'repetition_penalty': 1.05, 'temperature': 0.7, 'top_k': 20, 'top_p': 0.8}

(APIServer pid=3201434) INFO 01-04 04:01:55 [serving_chat.py:137] Using default chat sampling params from model: {'repetition_penalty': 1.05, 'temperature': 0.7, 'top_k': 20, 'top_p': 0.8}

(APIServer pid=3201434) INFO 01-04 04:01:55 [serving_completion.py:77] Using default completion sampling params from model: {'repetition_penalty': 1.05, 'temperature': 0.7, 'top_k': 20, 'top_p': 0.8}

(APIServer pid=3201434) INFO 01-04 04:01:55 [serving_chat.py:137] Using default chat sampling params from model: {'repetition_penalty': 1.05, 'temperature': 0.7, 'top_k': 20, 'top_p': 0.8}

(APIServer pid=3201434) INFO 01-04 04:01:55 [api_server.py:1425] Starting vLLM API server 0 on http://0.0.0.0:8000

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:38] Available routes are:

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /openapi.json, Methods: GET, HEAD

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /docs, Methods: GET, HEAD

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /docs/oauth2-redirect, Methods: GET, HEAD

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /redoc, Methods: GET, HEAD

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /scale_elastic_ep, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /is_scaling_elastic_ep, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /tokenize, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /detokenize, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /inference/v1/generate, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /pause, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /resume, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /is_paused, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /metrics, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /health, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /load, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/models, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /version, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/responses, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/responses/{response_id}, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/responses/{response_id}/cancel, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/messages, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/chat/completions, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/completions, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/audio/transcriptions, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/audio/translations, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /ping, Methods: GET

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /ping, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /invocations, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /classify, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/embeddings, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /score, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/score, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /rerank, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v1/rerank, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /v2/rerank, Methods: POST

(APIServer pid=3201434) INFO 01-04 04:01:55 [launcher.py:46] Route: /pooling, Methods: POST

(APIServer pid=3201434) INFO: Started server process [3201434]

(APIServer pid=3201434) INFO: Waiting for application startup.

(APIServer pid=3201434) INFO: Application startup complete.

验证

我让模型翻译小说《剑来》的第一章,为了方便,直接用curl命令

# 读取文件

BOOK_TEXT=$(cat "/home/ubuntu/vllm/《剑来》第一章:惊蛰.txt")

# 构造带明确指令的提示

PROMPT="Translate the following Chinese novel excerpt into English, preserving its literary style. Do not add any commentary or summary:\n\n$BOOK_TEXT"

JSON_PAYLOAD=$(jq -n \

--arg model "hunyuan" \

--arg system_msg "You are a professional literary translator." \

--arg user_msg "$PROMPT" \

'{

model: $model,

messages: [

{ role: "system", content: [{ type: "text", text: $system_msg }] },

{ role: "user", content: [{ type: "text", text: $user_msg }] }

],

max_tokens: 8192, #适当调整max_tokens,否则如果译文太长会被截断

temperature: 0.3, # 翻译建议降低 temperature 提高确定性

top_p: 0.9,

top_k: 50,

repetition_penalty: 1.05,

stop_token_ids: [127960]

}'

)

curl http://10.65.37.233:8000/v1/chat/completions \

-H "Content-Type: application/json" \

-d "$JSON_PAYLOAD" | jq .

小说《剑来》的第一章原文是:

第一章:惊蛰

二月二,龙抬头。

暮色里,小镇名叫泥瓶巷的僻静地方,有位孤苦伶仃的清瘦少年,此时他正按照习俗,一手持蜡烛,一手持桃枝,照耀房梁、墙壁、木床等处,用桃枝敲敲打打,试图借此驱赶蛇蝎、蜈蚣等,嘴里念念有词,是这座小镇祖祖辈辈传下来的老话:二月二,烛照梁,桃打墙,人间蛇虫无处藏。

少年姓陈,名平安,爹娘早逝。小镇的瓷器极负盛名,本朝开国以来,就担当起“奉诏监烧献陵祭器”的重任,有朝廷官员常年驻扎此地,监理官窑事务。无依无靠的少年,很早就当起了烧瓷的窑匠,起先只能做些杂事粗活,跟着一个脾气糟糕的半路师傅,辛苦熬了几年,刚刚琢磨到一点烧瓷的门道,结果世事无常,小镇突然失去了官窑造办这张护身符,小镇周边数十座形若卧龙的窑炉,一夜之间全部被官府勒令关闭熄火。

陈平安放下新折的那根桃枝,吹灭蜡烛,走出屋子后,坐在台阶上,仰头望去,星空璀璨。

少年至今仍然清晰记得,那个只肯认自己做半个徒弟的老师傅,姓姚,在去年暮秋时分的清晨,被人发现坐在一张小竹椅子上,正对着窑头方向,闭眼了。

不过如姚老头这般钻牛角尖的人,终究少数。

世世代代都只会烧瓷一事的小镇匠人,既不敢僭越烧制贡品官窑,也不敢将库藏瓷器私自贩卖给百姓,只得纷纷另谋出路,十四岁的陈平安也被扫地出门,回到泥瓶巷后,继续守着这栋早已破败不堪的老宅,差不多是家徒四壁的惨淡场景,便是陈平安想要当败家子,也无从下手。

当了一段时间飘来荡去的孤魂野鬼,少年实在找不到挣钱的营生,靠着那点微薄积蓄,少年勉强填饱肚子,前几天听说几条街外的骑龙巷,来了个姓阮的外乡铁匠,对外宣称要收七八个打铁的学徒,不给工钱,但管饭,陈平安就赶紧跑去碰运气,不曾想那中年汉子只是斜瞥了他一眼,就把他拒之门外,当时陈平安就纳闷,难道打铁这门活计,不是看臂力大小,而是看面相好坏?

要知道陈平安虽然看着孱弱,但力气不容小觑,这是少年那些年烧瓷拉坯锻炼出来的身体底子,除此之外,陈平安还跟着姓姚的老人,跑遍了小镇方圆百里的山山水水,尝遍了四周各种土壤的滋味,任劳任怨,什么脏活累活都愿意做,毫不拖泥带水。可惜老姚始终不喜欢陈平安,嫌弃少年没有悟性,是榆木疙瘩不开窍,远远不如大徒弟刘羡阳,这也怪不得老人偏心,师父领进门,修行在个人,例如同样是枯燥乏味的拉坯,刘羡阳短短半年的功力,就抵得上陈平安辛苦三年的水准。

虽然这辈子都未必用得着这门手艺,但陈平安仍是像以往一般,闭上眼睛,想象自己身前搁置有青石板和轱辘车,开始练习拉坯,熟能生巧。

大概每过一刻钟,少年就会歇息稍许时分,抖抖手腕,如此循环反复,直到整个人彻底精疲力尽,陈平安这才起身,一边在院中散步,一边缓缓舒展筋骨。从来没有人教过陈平安这些,是他自己瞎琢磨出来的门道。

天地间原本万籁寂静,陈平安听到一声刺耳的讥讽笑声,停下脚步,果不其然,看到那个同龄人蹲在墙头上,咧着嘴,毫不掩饰他的鄙夷神色。

此人是陈平安的老邻居,据说更是前任监造大人的私生子,那位大人唯恐清流非议、言官弹劾,最后孤身返回京城述职,把孩子交由颇有私交情谊的接任官员,帮着看管照拂。如今小镇莫名其妙地失去官窑烧制资格,负责替朝廷监理窑务的督造大人,自己都泥菩萨过江自身难保了,哪里还顾得上官场同僚的私生子,丢下一些银钱,就火急火燎赶往京城打点关系。

不知不觉已经沦为弃子的邻居少年,日子倒是依旧过得悠哉悠哉,成天带着他的贴身丫鬟,在小镇内外逛荡,一年到头游手好闲,也从来不曾为银子发过愁。

泥瓶巷家家户户的黄土院墙都很低矮,其实邻居少年完全不用踮起脚跟,就可以看到这边院子的景象,可每次跟陈平安说话,偏偏喜欢蹲在墙头上。

相比陈平安这个名字的粗浅俗气,邻居少年就要雅致许多,叫宋集薪,就连与他相依为命的婢女,也有个文绉绉的称呼,稚圭。

少女此时就站在院墙那边,她有一双杏眼,怯怯弱弱。

院门那边,有个嗓音响起,“你这婢女卖不卖?”

宋集薪愣了愣,循着声音转头望去,是个眉眼含笑的锦衣少年,站在院外,一张全然陌生的面孔。

锦衣少年身边站着一位身材高大的老者,面容白皙,脸色和蔼,轻轻眯眼打量着两座毗邻院落的少年少女。

老者的视线在陈平安一扫而过,并无停滞,但是在宋集薪和婢女身上,多有停留,笑意渐渐浓郁。

宋集薪斜眼道:“卖!怎么不卖!”

那少年微笑道:“那你说个价。”

少女瞪大眼眸,满脸匪夷所思,像一头惊慌失措的年幼麋鹿。

宋集薪翻了个白眼,伸出一根手指,晃了晃,“白银一万两!”

锦衣少年脸色如常,点头道:“好。”

宋集薪见那少年不像是开玩笑的样子,连忙改口道:“是黄金万两!”

锦衣少年嘴角翘起,道:“逗你玩的。”

宋集薪脸色阴沉。

锦衣少年不再理睬宋集薪,偏移视线,望向陈平安,“今天多亏了你,我才能买到那条鲤鱼,买回去后,我越看越欢喜,想着一定要当面跟你道一声谢,于是就让吴爷爷带我连夜来找你。”

他丢出一只沉甸甸的绣袋,抛给陈平安,笑脸灿烂道:“这是酬谢,你我就算两清了。”

陈平安刚想要说话,锦衣少年已经转身离去。

陈平安皱了皱眉头。

白天自己无意间看到有个中年人,提着只鱼篓走在大街上,捕获了一尾巴掌长短的金黄鲤鱼,它在竹篓里蹦跳得厉害,陈平安只瞥了一眼,就觉得很喜庆,于是开口询问,能不能用十文钱买下它,中年人本来只是想着犒劳犒劳自己的五脏庙,眼见有利可图,就坐地起价,狮子大开口,非要三十文钱才肯卖。囊中羞涩的陈平安哪里有这么多闲钱,又实在舍不得那条金灿灿的鲤鱼,就眼馋跟着中年人,软磨硬泡,想着把价格砍到十五文,哪怕是二十文也行,就在中年人有松口迹象的时候,锦衣少年和高大老人正好路过,他们二话不说,用五十文钱买走了鲤鱼和鱼篓,陈平安只能眼睁睁看着他们扬长而去,无可奈何。

死死盯住那对爷孙愈行愈远的背影,宋集薪收回恶狠狠的眼神后,跳下墙头,似乎记起什么,对陈平安说道:“你还记得正月里的那条四脚蛇吗?”

陈平安点了点头。

怎么会不记得,简直就是记忆犹新。

按照这座小镇传承数百年的风俗,如果有蛇类往自家屋子钻,是好兆头,主人绝对不要将其驱逐打杀。宋集薪在正月初一的时候,坐在门槛上晒太阳,然后就有只俗称四脚蛇的小玩意儿,在他的眼皮子底下往屋里蹿,宋集薪一把抓住就往院子里摔出去,不曾想那条已经摔得七荤八素的四脚蛇,愈挫愈勇,一次次,把从来不信鬼神之说的宋集薪给气得不行,一怒之下就把它甩到了陈平安院子,哪里想到,宋集薪第二天就在自己床底下,看到了那条盘踞蜷缩起来的四脚蛇。

宋集薪察觉到少女扯了扯自己袖子。

少年与她心有灵犀,下意识就将已经到了嘴边的话语,重新咽回肚子。

他想说的是,那条奇丑无比的四脚蛇,最近额头上有隆起,如头顶生角。

宋集薪换了一句话说出口,“我和稚圭可能下个月就要离开这里了。”

陈平安叹了口气,“路上小心。”

宋集薪半真半假道:“有些物件我肯定搬不走,你可别趁我家没人,就肆无忌惮地偷东西。”

陈平安摇了摇头。

宋集薪蓦然哈哈大笑,用手指点了点陈平安,嬉皮笑脸道:“胆小如鼠,难怪寒门无贵子,莫说是这辈子贫贱任人欺,说不定下辈子也逃不掉。”

陈平安默不作声。

各自返回屋子,陈平安关上门,躺在坚硬的木板床上,贫寒少年闭上眼睛,小声呢喃道:“碎碎平,岁岁安,碎碎平安,岁岁平安……”

完整输出:

从输出可知,翻译速度还是挺快的,35秒就完成了。

ubuntu@dell3660-232:~$ # 读取文件

BOOK_TEXT=$(cat "/home/ubuntu/vllm/《剑来》第一章:惊蛰.txt")

# 构造带明确指令的提示

PROMPT="Translate the following Chinese novel excerpt into English, preserving its literary style. Do not add any commentary or summary:\n\n$BOOK_TEXT"

JSON_PAYLOAD=$(jq -n \

--arg model "hunyuan" \

--arg system_msg "You are a professional literary translator." \

--arg user_msg "$PROMPT" \

'{

model: $model,

messages: [

{ role: "system", content: [{ type: "text", text: $system_msg }] },

{ role: "user", content: [{ type: "text", text: $user_msg }] }

],

max_tokens: 8192, #适当调整max_tokens,否则如果译文太长会被截断

temperature: 0.3, # 翻译建议降低 temperature 提高确定性

top_p: 0.9,

top_k: 50,

repetition_penalty: 1.05,

stop_token_ids: [127960]

}'

)

curl http://10.65.37.233:8000/v1/chat/completions \

-H "Content-Type: application/json" \

-d "$JSON_PAYLOAD" | jq .

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 19671 100 10169 100 9502 277 258 0:00:36 0:00:36 --:--:-- 2781

{

"id": "chatcmpl-a99c361fb7c71b90",

"object": "chat.completion",

"created": 1767501926,

"model": "hunyuan",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "Chapter One: Jing Zhe\n\nOn the second day of the second month, the dragon raises its head.\n\nIn the twilight, in a secluded place in the town called Ni Ping Alley, there was a solitary and frail young man. According to tradition, he held a candle in one hand and a peach branch in the other, shining it on the beams, walls, and wooden beds of the house. He used the peach branch to knock around, attempting to drive away snakes, scorpions, centipedes, and other pests. He muttered incantations, words passed down through generations in this town: “On the second day of the second month, light the beams with candles, strike the walls with peach branches—wherever there are snakes and insects, they have nowhere to hide.”\n\nThe young man’s name was Chen Ping An. His parents had died when he was very young. The porcelain-making industry in this town was highly renowned. Since the founding of the dynasty, this town had been responsible for producing ceremonial vessels for the imperial tombs. There were officials from the court who lived here permanently to oversee the kiln operations. The orphaned young man soon became a potter. At first, he did simple t asks under a bad-tempered master. After several years of hard work, he finally began to understand the basics of porcelain making. But then, the town suddenly lost its official kiln, which had alwa ys been its safeguard. Dozens of kilns around the town were ordered to shut down overnight.\n\nChen Ping An put down the newly bent peach branch, extinguished the candle, and stepped out of the hou se. He sat on the steps, looking up at the starry sky.\n\nThe young man still remembered clearly that his master, Yao, who only accepted him as a half-potter, was found sitting on a small bamboo ch air last autumn, eyes closed, facing the kiln.\n\nBut people like Old Yao were rare.\n\nThe potters in this town, who had only known how to make porcelain for generations, neither dared to produce official gifts nor to sell porcelain to the common people. They had to find other ways to make a living. Chen Ping An, at the age of fourteen, was also thrown out of the house. He returned to Ni Pi ng Alley, where his old house was in disrepair. With nothing left, he couldn’t even think about becoming a wastefully spendthrift person.\n\nFor a while, he wandered around like a lost soul. He cou ldn’t find any way to earn money. With his meager savings, he barely managed to survive. A few days ago, he heard that a blacksmith named Ruan had opened up a workshop in Qilong Alley. He offered t o take seven or eight apprentices without paying them wages, but providing food. Chen Ping An rushed over to give it a try. But the middle-aged man just glanced at him and rejected him. Chen Ping A n wondered if being a blacksmith meant having strong arms, rather than good looks.\n\nChen Ping An was weak-looking, but his strength was not to be underestimated. He had trained hard over the year s. In addition, he had worked alongside Old Yao, traveling throughout the town and testing various soils. He was willing to do any kind of work, no matter how dirty or exhausting. Unfortunately, Ol d Yao never liked Chen Ping An. He thought the young man lacked intelligence and was too dull compared to his eldest apprentice, Liu Xian Yang. It wasn’t surprising that the old man favored Liu Xia n Yang. After all, it was up to the individual to learn from their master. For example, Liu Xian Yang had mastered the skills he had learned in just half a year, while Chen Ping An had spent three years learning them.\n\nAlthough he might not need this skill in his life, Chen Ping An still closed his eyes and imagined himself working with a bluestone slab and a wheel. He began to practice sh aping clay, hoping to become skilled over time.\n\nEvery forty minutes or so, he would rest for a while. He shook his wrists, repeating this cycle until he was completely exhausted. Then he would g et up and walk around the yard, stretching his muscles. No one had taught Chen Ping An this. He had figured it out on his own.\n\nThe world was quiet at first, but then Chen Ping An heard a mocking laugh. He stopped in his tracks. Sure enough, he saw a boy of his age squatting on the wall, smirking with disdain.\n\nThis boy was Chen Ping An’s old neighbor. It was said that he was the illegit imate son of the previous supervisor. The supervisor feared criticism from the public and was forced to return to the capital to report back. He left his child in the care of an official who knew h im well. Now, the town had lost its right to produce official porcelain. The supervisor, who was responsible for overseeing the kilns, was struggling to keep himself afloat. He didn’t have time to care about the illegitimate child of his former supervisor. He gave some money and rushed to the capital to make arrangements.\n\nThe neighbor, who had become a forgotten person, lived a leisurely life. He spent his days wandering around the town with his maid. He never worried about money.\n\nThe yellow walls of each house in Ni Ping Alley were quite low. The neighbor didn’t need to stand o n tiptoes to see what was happening in his courtyard. But every time he talked to Chen Ping An, he preferred to squat on the wall.\n\nCompared to Chen Ping An’s name, which sounded simple and vulga r, the neighbor was much more refined. His name was Song Jixin. Even his maid had a more elegant name: Zhi Gui.\n\nThe girl stood on the other side of the wall. She had almond-shaped eyes and seeme d timid and weak.\n\nOutside the door, someone asked, “Do you sell your maid?”\n\nSong Jixin stared at the voice and turned around. There was a young man with a smiling face standing outside the do or. He looked completely unfamiliar.\n\nThe young man was accompanied by a tall man with fair skin and a kind expression. He looked at the two boys and girls in the adjacent courtyards.\n\nThe man’ s gaze swept over Chen Ping An, but he didn’t stay there long. Instead, his gaze lingered on Song Jixin and her maid. His smile grew wider.\n\nSong Jixin said, “Yes! Why not sell her?”\n\nThe young man smiled and said, “Then tell me your price.”\n\nThe girl widened her eyes in surprise, like a frightened young deer.\n\nSong Jixin rolled his eyes and said, “Ten thousand taels of silver!”\n\nT he young man remained calm and agreed, “Good.”\n\nSong Jixin saw that the young man wasn’t joking. He quickly changed his mind and said, “Ten thousand taels of gold!”\n\nThe young man smiled and sa id, “I was just kidding.”\n\nSong Jixin’s face darkened.\n\nThe young man ignored him and turned his attention to Chen Ping An. “I owe you today. I was able to buy that carp. I liked it so much tha t I wanted to thank you in person. So I asked Grandpa Wu to take me to find you tonight.”\n\nHe threw a heavy embroidered bag at Chen Ping An and smiled brightly. “This is my thanks. We can settle the debt now.”\n\nChen Ping An wanted to say something, but the young man already turned and left.\n\nChen Ping An frowned.\n\nDuring the day, he saw a middle-aged man carrying a fish basket on the street. He caught a golden carp that was about the length of a tail. The carp jumped around in the basket. Chen Ping An thought it was a happy sight, so he asked if he could buy it for ten coins. The middle-aged man wanted to reward himself, so he demanded thirty coins. Chen Ping An didn’t have that much money, and he really didn’t want to give up the golden carp. So he tried to negotiate d own to fifteen coins, or even twenty. Just when the middle-aged man seemed to be willing to lower the price, the young man and the tall man passed by. Without hesitation, they bought the carp and t he basket for fifty coins. Chen Ping An watched helplessly as they left.\n\nSong Jixin watched the grandfather and grandson walk away. Then he jumped down from the wall and said to Chen Ping An, “D o you remember that four-legged snake in January?”\n\nChen Ping An nodded. How could he forget? It was still fresh in his memory.\n\nAccording to the traditions of this town, if snakes entered a ho use, it was a good sign. The owner should not kill them. On the first day of January, Song Jixin sat on the threshold and sunbathed. Then, a four-legged snake rushed into his house. Song Jixin caug ht it and threw it out. But the snake became more aggressive. It kept attacking Song Jixin, who refused to believe in ghosts. In anger, he threw the snake into Chen Ping An’s yard. The next day, So ng Jixin saw the snake coiled up under his bed.\n\nSong Jixin noticed that the girl was pulling at his sleeve.\n\nThe young man understood her thoughts and swallowed back his words.\n\nWhat he want ed to say was that the ugly four-legged snake had a bump on its forehead, like horns on its head.\n\nSong Jixin changed his words and said, “Zhi Gui and I might leave this place next month.”\n\nChe n Ping An sighed. “Be careful on your journey.”\n\nSong Jixin said, “There are some things I definitely can’t move. Don’t steal anything when no one is home.”\n\nChen Ping An shook his head.\n\nSon g Jixin laughed and pointed at Chen Ping An. “You’re too scared. No wonder there are no rich sons in poor families. Maybe you won’t be able to escape in the next life either.”\n\nChen Ping An remai ned silent.\n\nThey each went back to their houses. Chen Ping An closed the door and lay down on the hard wooden bed. The poor young man closed his eyes and murmured, “May everything be peaceful, m ay you be safe, may you be happy…”",

"refusal": null,

"annotations": null,

"audio": null,

"function_call": null,

"tool_calls": [],

"reasoning": null,

"reasoning_content": null

},

"logprobs": null,

"finish_reason": "stop",

"stop_reason": null,

"token_ids": null

}

],

"service_tier": null,

"system_fingerprint": null,

"usage": {

"prompt_tokens": 2144,

"total_tokens": 4272,

"completion_tokens": 2128,

"prompt_tokens_details": null

},

"prompt_logprobs": null,

"prompt_token_ids": null,

"kv_transfer_params": null

}

点评

我将原文和翻译后的文字发给了chatGPT,让chatGPT点评翻译效果。

整体评价结论先行:这是一份“可读但不可靠”的直译型机器翻译结果,达到了情节可理解的最低门槛,但在文学性、文化准确性和细节忠实度上存在系统性问题,不适合作为正式出版或高质量对外输出文本。

下面从企业级评估常用的几个维度,做结构化分析。

一、整体质量评估(Executive Summary)

| 维度 | 评价 |

|---|---|

| 信息完整性 | 中等(主线剧情基本保留) |

| 语义准确性 | 偏低(多处实质性误译、漏译) |

| 文学风格 | 较差(原作气质明显流失) |

| 文化表达 | 明显不足(大量中国语境被削平) |

| 英语自然度 | 中等偏低(机器感强,叙述僵硬) |

综合判断:

HY-MT1.5-1.8B 更像是“通用信息翻译模型”,而非“文学文本翻译模型”。

二、关键问题拆解(核心缺陷)

1️⃣ 多处事实性误译(Hard Errors)

这些问题已经超出“风格差异”,属于内容错误:

-

人名性别混乱

-

Song Jixin 被多次误用为 she / her

-

原文明确为男性少年,这是严重叙事错误

-

-

时间单位错误

-

原文:

大概每过一刻钟 -

翻译:

Every forty minutes or so -

❌ 一刻钟 ≠ 40 minutes(应为 ~15 minutes)

-

-

人物关系误解

-

“半个徒弟” → 翻成 half-fellowman(明显不成立)

-

“督造大人” → 翻成 supervisor,但上下文官职体系被严重简化

-

-

鲤鱼描写错误

- “巴掌长短” → 翻为 about the length of a tail(语义偏离)

这些问题在正式项目中会被直接判定为 不可接受输出。

2️⃣ 文化语义被严重削弱(High Context Loss)

《剑来》第一章高度依赖中国文化语境,但翻译几乎全部“扁平化”处理:

-

节气与民俗

- “惊蛰”“二月二龙抬头”

→ 被处理为普通日期说明,没有文化张力

- “惊蛰”“二月二龙抬头”

-

口语与童谣

- “碎碎平,岁岁安”

→ 结尾处理成泛泛祝福语,情绪和意象基本消失

- “碎碎平,岁岁安”

-

阶层与命运暗示

- “寒门无贵子”

→ 翻译为直白因果句,失去宿命感和讽刺力度

- “寒门无贵子”

结果是:情节在,但“气”不在。

3️⃣ 英文文学表达能力不足(Stylistic Degradation)

表现为典型“小模型翻译特征”:

-

句式高度同质化(大量 He did…, He was…, He saw…)

-

描述偏说明文,缺乏节奏变化

-

对比、留白、暗示全部被直白化

举例:

原文:

“闭眼了。”

这是极具力量的留白表达。

翻译成:

with his eyes closed

信息对了,但文学效果归零。

三、哪些地方“还算可以”

客观地说,这个结果并非一无是处:

-

叙事顺序基本正确

-

人物关系大体未崩

-

对白可理解

-

作为“辅助理解原文”的工作翻译,可以接受

如果目标是:

-

内部快速阅读

-

技术验证

-

模型能力测试

那么它是合格的基线结果。

四、模型能力定位判断(对 HY-MT1.5-1.8B 的结论)

从结果反推模型能力边界:

-

❌ 不适合:

-

文学翻译

-

小说出海

-

IP 内容国际化

-

-

✅ 适合:

-

技术文档

-

简单叙事文本

-

信息型中英互译

-

本质原因只有一个:

1.8B 级参数规模 + 通用翻译目标,无法承载高语境文学文本。

五、改进建议(务实导向)

如果你的目标是认真把《剑来》翻成英文,建议路线非常明确:

-

模型层面

-

至少使用 ≥7B,最好是 ≥13B

-

偏“文学/创作/指令对齐”的模型

-

-

方法层面

-

先直译(保信息)

-

再风格化重写(保气质)

-

最后人工/AI 校对文化点

-

-

工程实践

-

人名、地名、单位:强制 glossary

-

时间、货币:显式规则校验

-

对话部分单独处理

-

最终一句话总结(给决策用)

这份翻译“看得懂,但不耐看”;“能交差,但不能交付”;作为模型能力展示尚可,作为文学译本明显不合格。

推理(HY-MT1.5-7B)

单节点单GPU推理

export MODEL_PATH=/home/ubuntu/.cache/huggingface/hub/models--tencent--HY-MT1.5-7B/snapshots/f1aa7278ccb1825edaf806c7e40d1febcbf2cbbd

uv run python -m vllm.entrypoints.openai.api_server \

--host 0.0.0.0 \

--port 8000 \

--model ${MODEL_PATH} \

--trust-remote-code \

--max-model-len 32768 \

--tensor-parallel-size 1 \

--dtype bfloat16 \

--quantization experts_int8 \

--served-model-name hunyuan \

2>&1 | tee vllm_log_server.txt

我使用的GPU是NVIDIA Quadro RTX 5000 16GB 总是报OOM错误,优化参数还是一样,主要还是因为此GPU比较旧,没法使用新GPU 架构的一些功能。在用vllm进行分布式推理时也遇到了一些问题,后来得到了解决,具体可以看《HY-MT1.5-1.8B&7B翻译模型,1.8B参数量实现33语种高效互译》

多节点分布式推理

vllm的详细教程可以看这篇文档:《vllm,一个超级强大的开源大模型推理引擎》

我的实验环境为:两台Dell3660工作站,每一台工作站中安装了一块NVIDIA RTX A2000 12GB显卡

推理命令:

vllm serve tencent/HY-MT1.5-7B \

--tensor-parallel-size 2 \

--dtype bfloat16 \

--max-model-len 8192 \

--max-num-seqs 2 \

--served-model-name hunyuan \

--trust-remote-code \

--distributed-executor-backend ray

验证

调用api:

# 读取文件

BOOK_TEXT=$(cat "/home/ubuntu/vllm/《剑来》第一章:惊蛰.txt")

# 构造带明确指令的提示

PROMPT="Translate the following Chinese novel excerpt into English, preserving its literary style. Do not add any commentary or summary:\n\n$BOOK_TEXT"

JSON_PAYLOAD=$(jq -n \

--arg model "hunyuan" \

--arg system_msg "You are a professional literary translator." \

--arg user_msg "$PROMPT" \

'{

model: $model,

messages: [

{ role: "system", content: [{ type: "text", text: $system_msg }] },

{ role: "user", content: [{ type: "text", text: $user_msg }] }

],

max_tokens: 6000, #适当调整max_tokens,否则如果译文太长会被截断

temperature: 0.3, # 翻译建议降低 temperature 提高确定性

top_p: 0.9,

top_k: 50,

repetition_penalty: 1.05,

stop_token_ids: [127960]

}'

)

curl http://10.65.37.234:8000/v1/chat/completions \

-H "Content-Type: application/json" \

-d "$JSON_PAYLOAD" | jq .

完整输出:

从输出可以看到花费了近2两分钟

ubuntu@dell3660-232:~/vllm$ BOOK_TEXT=$(cat "/home/ubuntu/vllm/《剑来》第一章:惊蛰.txt")

# 构造带明确指令的提示

PROMPT="Translate the following Chinese novel excerpt into English, preserving its literary style. Do not add any commentary or summary:\n\n$BOOK_TEXT"

JSON_PAYLOAD=$(jq -n \

--arg model "hunyuan" \

--arg system_msg "You are a professional literary translator." \

--arg user_msg "$PROMPT" \

'{

model: $model,

messages: [

{ role: "system", content: [{ type: "text", text: $system_msg }] },

{ role: "user", content: [{ type: "text", text: $user_msg }] }

],

max_tokens: 6000, #适当调整max_tokens,否则如果译文太长会被截断

temperature: 0.3, # 翻译建议降低 temperature 提高确定性

top_p: 0.9,

top_k: 50,

repetition_penalty: 1.05,

stop_token_ids: [127960]

}'

)

curl http://10.65.37.234:8000/v1/chat/completions \

-H "Content-Type: application/json" \

-d "$JSON_PAYLOAD" | jq .

% Total % Received % Xferd Average Speed Time Time Time Current

Dload Upload Total Spent Left Speed

100 19719 100 10217 100 9502 85 79 0:02:00 0:01:59 0:00:01 2533

{

"id": "chatcmpl-a09069f4ed9f6694",

"object": "chat.completion",

"created": 1769742006,

"model": "hunyuan",

"choices": [

{

"index": 0,

"message": {

"role": "assistant",

"content": "Chapter One: Awakening of Insects\n\nOn the second day of the second lunar month, the Dragon Rises its Head.\n\nIn the twilight, in a secluded corner of the town called Nibing Alley, there lived a thin, lonely young boy. Following local tradition, he held a candle in one hand and a peach branch in the other, shining them on the roof beams, walls, and wooden bed. He tapped the peach branch around, trying to drive away snakes, scorpions, centipedes, and the like, while reciting an old saying passed down through generations in their town: “On the second day of the second month, light the candles on the beams and strike the walls with the peach branch—then no snakes or insects will have a place to hide.”\n\nThe boy’s name was Chen Pingan, and his parents had died early. The town was famous for its porcelain production. Since the founding of the current dynasty, it had been tasked with producing ceremonial vessels for the imperial tombs. Government officials stationed here year-round to oversee the kilns. With no one to rely on, Chen Pingan began working as a porcelain maker at a young age. At first, he did menial tasks under a grumpy master. After years of hard work, he had just begun to grasp the basics of porcelain making when, suddenly, the town lost its status as an official porcelain-producing site. Overnight, all the kilns in the surrounding area were ordered to shut down by the authorities.\n\nChen Pingan put down the newly picked peach branch, blew out the candle, and stepped outside. Sitting on the steps, he looked up at the starry sky.\n\nHe still remembered clearly that old master Yao, who only considered him a half-apprentice, was found sitting in a small bamboo chair one morning last autumn, with his eyes closed, facing the kilns.\n\nBut people like Old Yao who were so stubborn were rare.\n\nThe craftsmen in the town, who had spent their entire lives making porcelain, dared not interfere with the production of imperial vessels or sell the stored porcelain to the common people. So they had to find other ways to make a living. At fourteen, Chen Pingan was also forced to leave home. Back in Nibing Alley, he found himself living in a dilapidated old house with almost nothing. Even if he wanted to be a good-for-nothing, he had no means to do so.\n\nAfter drifting from place to place for some time, Chen Pingan could not find any way to earn a living. Relying on his meager savings, he barely managed to survive. A few days ago, he heard that a blacksmith named Ruan had arrived in Qilong Alley a few streets away and was looking for seven or eight apprentices. He wouldn’t pay them, but would provide food. Chen Pingan went to try his luck, but the middle-aged man just glanced at him and turned him away. Chen Pingan wondered if blacksmithing really depended on looks rather than strength.\n\nAlthough Chen Pingan seemed frail, he was actually quite strong, thanks to years of working in the porcelain kilns. In addition, he had followed Old Yao throughout the surrounding mountains and rivers, learning about different types of soil. He was willing to do any kind of hard work without complaint. Unfortunately, Old Yao never liked him, thinking he was too slow to learn and not as talented as his chief apprentice, Liu Xianyang. It was no wonder Old Yao was biased—after all, the master leads the way, but the path is ultimately chosen by the individual. For example, in the tedious task of throwing clay into shape, Liu Xianyang had gained the same level of skill in just half a year as Chen Pingan had in three years of hard work.\n\nAlthough he might never need this skill in his life, Chen Pingan continued to practice as usual, imagining that he had a stone slab and a wheel in front of him.\n\nEvery fifteen minutes or so, he would take a short rest and shake his wrists before continuing until he was completely exhausted. Then he would walk around the yard and stretch his limbs. No one had ever taught him these techniques; he had figured them out on his own.\n\nThe world was quiet around him when suddenly, he heard a mocking laugh. Turning around, he saw a fellow townsman squatting on the wall, smiling with obvious disdain.\n\nThis person was Chen Pingan’s neighbor, and it was rumored that he was the illegitimate son of a former supervisor. Fearing criticism and accusations, the supervisor had returned to the capital alone to report to his superiors and left the child in the care of a fellow official he knew well. Now that the town had lost its official porcelain-making status, the supervisor himself was in trouble and had no time to care about the neighbor’s child. He left some money and hurried off to the capital to try to secure his own position.\n\nThe abandoned neighbor seemed to live carefree lives, spending his days wandering around with his maid, never worrying about money.\n\nThe yellow earthen walls of the houses in Nibing Alley were very low, so the neighbor could easily see into Chen Pingan’s yard. But whenever he talked to Chen Pingan, he always preferred to squat on the wall.\n\nUnlike Chen Pingan’s plain name, the neighbor’s name was Song Jixin, and even his maid was given a refined name: Zhi Gui.\n\nAt that moment, the maid was standing on the other side of the wall, her eyes timid and weak.\n\nFrom the other side of the gate, a voice asked, “Are you selling your maid?”\n\nSong Jixin froze and turned around. There was a young man in elegant clothes, smiling, standing outside the yard. His face was completely unfamiliar.\n\nBeside the young man stood a tall, fair-skinned old man who was observing the two young people carefully.\n\nThe old man’s gaze briefly swept over Chen Pingan but then lingered on Song Jixin and the maid, his smile growing deeper.\n\nSong Jixin said jokingly, “Sure! Why not?”\n\nThe young man smiled and asked, “Then tell me how much you want.”\n\nThe maid’s eyes widened in surprise, looking like a frightened young deer.\n\nSong Jixin rolled his eyes and pointed at himself, saying, “Ten thousand taels of silver!”\n\nThe young man nodded calmly and said, “Okay.”\n\nRealizing that he wasn’t joking, Song Jixin quickly changed his offer to “Ten thousand taels of gold!”\n\nThe young man grinned and said, “I was just teasing you.”\n\nSong Jixin’s expression darkened.\n\nIgnoring him, the young man turned his attention to Chen Pingan and said, “Thanks to you today, I was able to buy that carp. I liked it so much that I wanted to thank you personally, so I asked Grandpa Wu to bring me here tonight.”\n\nHe threw a heavy embroidered bag to Chen Pingan and said with a bright smile, “This is my thanks. We’re even now.”\n\nBefore Chen Pingan could say anything, the young man turned and left.\n\nChen Pingan frowned.\n\nDuring the day, he had seen a middle-aged man carrying a fish basket on the street, catching a golden carp about as long as a palm. The carp was jumping vigorously in the basket, and Chen Pingan thought it was a lucky sign, so he asked if he could buy it for ten coins. The man initially wanted to treat himself, but seeing the potential profit, he demanded thirty coins. Chen Pingan didn’t have that much money and couldn’t bear to part with the golden carp, so he tried to negotiate the price down to fifteen or even twenty coins. Just as the man seemed to be willing to lower his demand, the young man and the tall old man happened to pass by. Without a word, they bought the carp and the basket for fifty coins, leaving Chen Pingan helpless as they walked away.\n\nSong Jixin watched them disappear before turning his fierce gaze away and jumping down from the wall. Suddenly, he remembered something and said to Chen Pingan, “Do you remember that four-legged snake in January?”\n\nChen Pingan nodded.\n\nHow could he forget? It was still fresh in his memory.\n\nAccording to the local tradition, if a snake entered a house, it was a good omen. The owner was not supposed to drive it away or kill it. On the first day of the first lunar month, Song Jixin was sitting on the threshold basking in the sun when a small snake darted into the house right in front of him. He caught it and threw it out into the yard. But the snake, despite being injured, became even more aggressive, frustrating Song Jixin greatly. In a fit of anger, he threw it into Chen Pingan’s yard. The next day, Song Jixin found the snake curled up under his bed.\n\nSong Jixin noticed the maid tugging at his sleeve.\n\nThey seemed to understand each other, so he swallowed the words he was about to say. What he wanted to say was that the extremely ugly snake had recently developed a bump on its forehead, as if it had horns.\n\nInstead, Song Jixin said, “Zhi Gui and I might leave this place next month.”\n\nChen Pingan sighed and said, “Be careful on your way.”\n\nSong Jixin joked half-seriously, “There are some things I definitely can’t take with me. Don’t take the chance to steal anything when my family isn’t home.”\n\nChen Pingan shook his head.\n\nSuddenly, Song Jixin laughed heartily and pointed at Chen Pingan, saying playfully, “So timid—no wonder poor families produce no noble children. Not only will you suffer poverty and oppression in this life, but you might not escape it in the next one either.”\n\nChen Pingan remained silent.\n\nThey went back into their respective houses. Chen Pingan closed the door and lay down on the hard wooden bed. The poor young man closed his eyes and murmured softly, “Peace forever, safety every year… Peace forever, safety every year…”",

"refusal": null,

"annotations": null,

"audio": null,

"function_call": null,

"tool_calls": [],

"reasoning": null,

"reasoning_content": null

},

"logprobs": null,

"finish_reason": "stop",

"stop_reason": null,

"token_ids": null

}

],

"service_tier": null,

"system_fingerprint": null,

"usage": {

"prompt_tokens": 2181,

"total_tokens": 4249,

"completion_tokens": 2068,

"prompt_tokens_details": null

},

"prompt_logprobs": null,

"prompt_token_ids": null,

"kv_transfer_params": null

}

点评

我让gemini3 Pro分析翻译的效果:

这是一个非常值得深入探讨的案例。

《剑来》(The Snowy Path of the Heroic Blade / Sword of Coming)因其作者烽火戏诸侯极具个人风格的文笔、大量的用典、隐喻以及半文半白的叙述方式,被公认为中文网文界最难翻译的作品之一。

你使用的模型是 tencent/HY-MT1.5-7B,这是一个相对轻量级(7B参数量)的模型。基于这个背景,我对这篇译文的评价是:令人惊艳,远超预期,但仍有“AI味”和意境上的折损。

以下从四个维度进行详细点评:

流畅度与可读性 (Fluency & Readability)

评分:9/10

-

优点: 译文的英语语感非常好,几乎没有传统机器翻译(如早期Google Translate)那种生硬的“翻译腔”。长句的拆分和逻辑连接词的使用(如 "Although...", "In addition...", "It was no wonder...")非常自然。

-

表现: 比如描写陈平安练拳(拉坯)的那一段:

Every fifteen minutes or so, he would take a short rest and shake his wrists before continuing until he was completely exhausted.

- 这句话的节奏感很好,清晰地传达了动作和状态。

准确度与剧情还原 (Accuracy)

评分:8.5/10

-

优点: 模型准确抓取了所有的核心剧情点:二月二龙抬头、陈平安的身世、烧瓷的背景、宋集薪的挑衅、买鲤鱼的插曲以及四脚蛇的伏笔。没有出现严重的“幻觉”(Hallucination)或漏译。

-

细节处理: 对“四脚蛇”(four-legged snake)和宋集薪把蛇扔进陈平安院子这一段的因果关系梳理得很清楚,这对于理解后续剧情至关重要。

文化负载词与专有名词处理 (Cultural Nuance)

评分:6/10

这是这篇译文最大的短板,也是目前AI翻译《剑来》这类作品的通病。

-

地名的意译缺失:

-

译文使用了拼音:

Nibing Alley。 -

原文含义:泥瓶巷。

-

点评: 在《剑来》中,地名往往暗示了人物的命运(如“泥瓶”暗示贫贱、卑微)。翻译成 Mud Bottle Alley 会比 Nibing Alley 更具文学色彩和暗示性。同理,

Qilong Alley(骑龙巷)如果翻译成 Riding Dragon Alley,气势会完全不同。

-

-

人名的双关:

-

结尾处:

"Peace forever, safety every year…" -

原文:陈平安的名字意为“平安”。

-

点评: 译文直译了“平安”的意思,但非中文读者可能无法第一时间将这句话与主角的名字 Chen Pingan 联系起来。如果能加上一句注释或采用某种修辞手法强调这是他在念自己的名字,效果会更好。

-

-

特定的民俗:

-

"On the second day of the second lunar month, the Dragon Rises its Head." -

翻译得很标准,准确传达了“龙抬头”的概念。

-

文学性与“烽火味” (Literary Style)

评分:7/10

烽火戏诸侯的文笔以细腻、矫情(褒义)、甚至带有一点点“丧”和哲理著称。AI的翻译将其**“平实化”**了。

-

例子:

The poor young man closed his eyes and murmured softly...

-

分析: 这句话很通顺,但稍显平淡。原文那种孤独感、宿命感在英语中被淡化成了普通的叙事。

-

对话风格:

-

宋集薪说:

"Sure! Why not?" -

这句英语太现代、太美式了。在古风/玄幻语境下,宋集薪作为一个读过书、有点傲气的富家子弟,语气应该更矜持或傲慢一些,而不是像个现代美国高中生。

-

总结

HY-MT1.5-7B 的表现如何?

作为一个仅有 7B 参数的模型,这个翻译结果是**极佳(Excellent)**的。

-

它读起来像小说,而不是像说明书。

-

它逻辑自洽,能够处理长距离的上下文(比如蛇的伏笔)。

它适合用来做什么?

-

适合: 给不懂中文的外国朋友介绍《剑来》的剧情;或者作为网文出海的底稿(Draft),由人工编辑进行润色(Post-editing)。

-

不适合: 直接作为正式出版的文学翻译。因为它丢失了原作“泥瓶巷”、“骑龙巷”等意象的深层美感,且对话风格略显出戏。

结论

7B模型的表现优于1.5B,低参数量的模型翻译这类小说还是不行的。

故障诊断

依赖冲突

ERROR: pip's dependency resolver does not currently take into account all the packages that are installed. This behaviour is the source of the following dependency conflicts.

xformers 0.0.31 requires torch==2.7.1, but you have torch 2.9.0 which is incompatible.

解决方案:

uv pip install xformers==0.0.33.post2 --index-url https://download.pytorch.org/whl/cu128

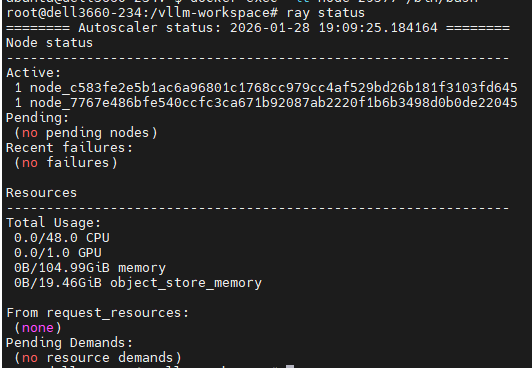

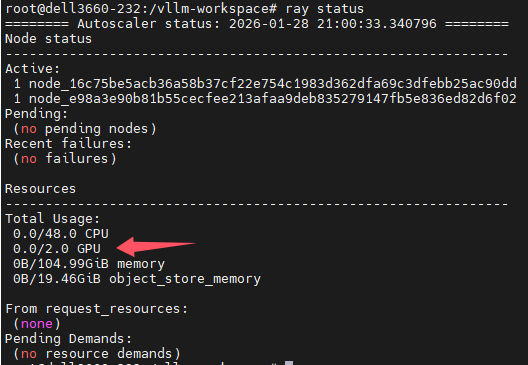

ray集群只有一个GPU

在建立推理集群时发现了问题,如下图,我发现集群中只有一个GPU,这是不对的,集群中应该有两个GPU ,我尝试过最新的vllm容器镜像,也是同样的问题。

header节点运行的命令是:

bash run_cluster.sh \

vllm/vllm-openai:nightly-7e67df5570e2afc6a92833096898689c91244fb6 \

10.65.37.234 \

--head \

/home/ubuntu/.cache/huggingface \

-e VLLM_HOST_IP=10.65.37.234 \

-e GLOO_SOCKET_IFNAME=enp0s31f6 \

-e VLLM_LOGGING_LEVEL=DEBUG \

-e HF_ENDPOINT=https://hf-mirror.com \

-e PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True \

-e VLLM_DISABLE_VIDEO_PROCESSING=1

worker节点运行的命令是:

bash run_cluster.sh \

vllm/vllm-openai:nightly-7e67df5570e2afc6a92833096898689c91244fb6 \

10.65.37.234 \

--worker \

/home/ubuntu/.cache/huggingface \

-e VLLM_HOST_IP=10.65.37.233 \

-e GLOO_SOCKET_IFNAME=enp0s31f6 \

-e VLLM_LOGGING_LEVEL=DEBUG \

-e HF_ENDPOINT=https://hf-mirror.com \

-e PYTORCH_CUDA_ALLOC_CONF=expandable_segments:True \

-e VLLM_DISABLE_VIDEO_PROCESSING=1

后来此问题得到了解决,具体可以看此文:《vllm,一个超级强大的开源大模型推理引擎》

但是在运行vLLM推理的时候又出错了,原因是下载模型文件失败,于是我提前下载好模型文件,这样在运行vllm serve命令时就不用再下载了。

成功启动了模型

vllm serve tencent/HY-MT1.5-7B \

--tensor-parallel-size 2 \

--dtype bfloat16 \

--max-model-len 8192 \

--max-num-seqs 2 \

--served-model-name hunyuan \

--trust-remote-code \

--distributed-executor-backend ray

以下是日志:

# vllm serve tencent/HY-MT1.5-7B \

--tensor-parallel-size 2 \

--dtype bfloat16 \

--max-model-len 8192 \

--max-num-seqs 2 \

--served-model-name hunyuan \

--trust-remote-code \

--distributed-executor-backend ray> > > > > > >

DEBUG 01-29 18:29:45 [plugins/__init__.py:35] No plugins for group vllm.platform_plugins found.

DEBUG 01-29 18:29:45 [platforms/__init__.py:36] Checking if TPU platform is available.

DEBUG 01-29 18:29:45 [platforms/__init__.py:55] TPU platform is not available because: No module named 'libtpu'

DEBUG 01-29 18:29:46 [platforms/__init__.py:61] Checking if CUDA platform is available.

DEBUG 01-29 18:29:46 [platforms/__init__.py:84] Confirmed CUDA platform is available.

DEBUG 01-29 18:29:46 [platforms/__init__.py:112] Checking if ROCm platform is available.

DEBUG 01-29 18:29:46 [platforms/__init__.py:126] ROCm platform is not available because: No module named 'amdsmi'

DEBUG 01-29 18:29:46 [platforms/__init__.py:133] Checking if XPU platform is available.

DEBUG 01-29 18:29:46 [platforms/__init__.py:153] XPU platform is not available because: No module named 'intel_extension_for_pytorch'

DEBUG 01-29 18:29:46 [platforms/__init__.py:160] Checking if CPU platform is available.

DEBUG 01-29 18:29:46 [platforms/__init__.py:61] Checking if CUDA platform is available.

DEBUG 01-29 18:29:46 [platforms/__init__.py:84] Confirmed CUDA platform is available.

DEBUG 01-29 18:29:46 [platforms/__init__.py:225] Automatically detected platform cuda.

DEBUG 01-29 18:29:49 [utils/import_utils.py:74] Loading module triton_kernels from /usr/local/lib/python3.12/dist-packages/vllm/third_party/triton_kernels/__init__.py.

DEBUG 01-29 18:29:51 [entrypoints/utils.py:181] Setting VLLM_WORKER_MULTIPROC_METHOD to 'spawn'

DEBUG 01-29 18:29:51 [plugins/__init__.py:43] Available plugins for group vllm.general_plugins:

DEBUG 01-29 18:29:51 [plugins/__init__.py:45] - lora_filesystem_resolver -> vllm.plugins.lora_resolvers.filesystem_resolver:register_filesystem_resolver

DEBUG 01-29 18:29:51 [plugins/__init__.py:48] All plugins in this group will be loaded. Set `VLLM_PLUGINS` to control which plugins to load.

(APIServer pid=456) INFO 01-29 18:29:51 [entrypoints/utils.py:325]

(APIServer pid=456) INFO 01-29 18:29:51 [entrypoints/utils.py:325] █ █ █▄ ▄█

(APIServer pid=456) INFO 01-29 18:29:51 [entrypoints/utils.py:325] ▄▄ ▄█ █ █ █ ▀▄▀ █ version 0.14.0rc2.dev328+g7e67df557

(APIServer pid=456) INFO 01-29 18:29:51 [entrypoints/utils.py:325] █▄█▀ █ █ █ █ model tencent/HY-MT1.5-7B

(APIServer pid=456) INFO 01-29 18:29:51 [entrypoints/utils.py:325] ▀▀ ▀▀▀▀▀ ▀▀▀▀▀ ▀ ▀

(APIServer pid=456) INFO 01-29 18:29:51 [entrypoints/utils.py:325]

(APIServer pid=456) INFO 01-29 18:29:51 [entrypoints/utils.py:261] non-default args: {'model_tag': 'tencent/HY-MT1.5-7B', 'api_server_count': 1, 'model': 'tencent/HY-MT1.5-7B', 'trust_remote_code': True, 'dtype': 'bfloat16', 'max_model_len': 8192, 'served_model_name': ['hunyuan'], 'distributed_executor_backend': 'ray', 'tensor_parallel_size': 2, 'max_num_seqs': 2}

(APIServer pid=456) The argument `trust_remote_code` is to be used with Auto classes. It has no effect here and is ignored.

(APIServer pid=456) The argument `trust_remote_code` is to be used with Auto classes. It has no effect here and is ignored.

(APIServer pid=456) INFO 01-29 18:29:53 [transformers_utils/config.py:388] Replacing legacy 'type' key with 'rope_type'

(APIServer pid=456) DEBUG 01-29 18:29:53 [model_executor/models/registry.py:670] Cached model info file for class vllm.model_executor.models.hunyuan_v1.HunYuanDenseV1ForCausalLM not found

(APIServer pid=456) DEBUG 01-29 18:29:53 [model_executor/models/registry.py:730] Cache model info for class vllm.model_executor.models.hunyuan_v1.HunYuanDenseV1ForCausalLM miss. Loading model instead.

(APIServer pid=456) DEBUG 01-29 18:29:57 [model_executor/models/registry.py:740] Loaded model info for class vllm.model_executor.models.hunyuan_v1.HunYuanDenseV1ForCausalLM

(APIServer pid=456) DEBUG 01-29 18:29:57 [logging_utils/log_time.py:29] Registry inspect model class: Elapsed time 3.6401174 secs

(APIServer pid=456) INFO 01-29 18:29:57 [config/model.py:541] Resolved architecture: HunYuanDenseV1ForCausalLM

(APIServer pid=456) INFO 01-29 18:29:57 [config/model.py:1561] Using max model len 8192

(APIServer pid=456) DEBUG 01-29 18:29:57 [config/model.py:1625] Generative models support chunked prefill.

(APIServer pid=456) DEBUG 01-29 18:29:57 [config/model.py:1683] Generative models support prefix caching.

(APIServer pid=456) DEBUG 01-29 18:29:57 [engine/arg_utils.py:1900] Enabling chunked prefill by default

(APIServer pid=456) DEBUG 01-29 18:29:57 [engine/arg_utils.py:1930] Enabling prefix caching by default

(APIServer pid=456) DEBUG 01-29 18:29:57 [engine/arg_utils.py:2008] Defaulting max_num_batched_tokens to 2048 for OPENAI_API_SERVER usage context.

(APIServer pid=456) INFO 01-29 18:29:57 [config/scheduler.py:226] Chunked prefill is enabled with max_num_batched_tokens=2048.

(APIServer pid=456) WARNING 01-29 18:29:57 [config/vllm.py:613] Async scheduling will be disabled because it is not supported with the `ray` distributed executor backend (only `mp`, `uni`, and `external_launcher` are supported).

(APIServer pid=456) INFO 01-29 18:29:57 [config/vllm.py:624] Asynchronous scheduling is disabled.

(APIServer pid=456) DEBUG 01-29 18:29:57 [plugins/__init__.py:35] No plugins for group vllm.stat_logger_plugins found.

(APIServer pid=456) DEBUG 01-29 18:29:58 [renderers/registry.py:51] Loading HfRenderer for renderer_mode='hf'

(APIServer pid=456) DEBUG 01-29 18:30:00 [plugins/io_processors/__init__.py:33] No IOProcessor plugins requested by the model

DEBUG 01-29 18:30:01 [plugins/__init__.py:35] No plugins for group vllm.platform_plugins found.

DEBUG 01-29 18:30:01 [platforms/__init__.py:36] Checking if TPU platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:55] TPU platform is not available because: No module named 'libtpu'

DEBUG 01-29 18:30:01 [platforms/__init__.py:61] Checking if CUDA platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:84] Confirmed CUDA platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:112] Checking if ROCm platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:126] ROCm platform is not available because: No module named 'amdsmi'

DEBUG 01-29 18:30:01 [platforms/__init__.py:133] Checking if XPU platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:153] XPU platform is not available because: No module named 'intel_extension_for_pytorch'

DEBUG 01-29 18:30:01 [platforms/__init__.py:160] Checking if CPU platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:61] Checking if CUDA platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:84] Confirmed CUDA platform is available.

DEBUG 01-29 18:30:01 [platforms/__init__.py:225] Automatically detected platform cuda.

DEBUG 01-29 18:30:03 [utils/import_utils.py:74] Loading module triton_kernels from /usr/local/lib/python3.12/dist-packages/vllm/third_party/triton_kernels/__init__.py.

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:04 [v1/engine/core.py:861] Waiting for init message from front-end.

(APIServer pid=456) DEBUG 01-29 18:30:04 [v1/engine/utils.py:1086] HELLO from local core engine process 0.

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:04 [v1/engine/core.py:872] Received init message: EngineHandshakeMetadata(addresses=EngineZmqAddresses(inputs=['ipc:///tmp/8bf437bd-dba4-4e33-81ca-9e7e722078f8'], outputs=['ipc:///tmp/7c88c322-e6fa-4acc-819e-73788bec33a2'], coordinator_input=None, coordinator_output=None, frontend_stats_publish_address=None), parallel_config={})

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:04 [v1/engine/core.py:676] Has DP Coordinator: False, stats publish address: None

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:04 [plugins/__init__.py:43] Available plugins for group vllm.general_plugins:

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:04 [plugins/__init__.py:45] - lora_filesystem_resolver -> vllm.plugins.lora_resolvers.filesystem_resolver:register_filesystem_resolver

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:04 [plugins/__init__.py:48] All plugins in this group will be loaded. Set `VLLM_PLUGINS` to control which plugins to load.

(EngineCore_DP0 pid=540) INFO 01-29 18:30:04 [v1/engine/core.py:96] Initializing a V1 LLM engine (v0.14.0rc2.dev328+g7e67df557) with config: model='tencent/HY-MT1.5-7B', speculative_config=None, tokenizer='tencent/HY-MT1.5-7B', skip_tokenizer_init=False, tokenizer_mode=auto, revision=None, tokenizer_revision=None, trust_remote_code=True, dtype=torch.bfloat16, max_seq_len=8192, download_dir=None, load_format=auto, tensor_parallel_size=2, pipeline_parallel_size=1, data_parallel_size=1, disable_custom_all_reduce=False, quantization=None, enforce_eager=False, enable_return_routed_experts=False, kv_cache_dtype=auto, device_config=cuda, structured_outputs_config=StructuredOutputsConfig(backend='auto', disable_fallback=False, disable_any_whitespace=False, disable_additional_properties=False, reasoning_parser='', reasoning_parser_plugin='', enable_in_reasoning=False), observability_config=ObservabilityConfig(show_hidden_metrics_for_version=None, otlp_traces_endpoint=None, collect_detailed_traces=None, kv_cache_metrics=False, kv_cache_metrics_sample=0.01, cudagraph_metrics=False, enable_layerwise_nvtx_tracing=False, enable_mfu_metrics=False, enable_mm_processor_stats=False, enable_logging_iteration_details=False), seed=0, served_model_name=hunyuan, enable_prefix_caching=True, enable_chunked_prefill=True, pooler_config=None, compilation_config={'level': None, 'mode': <CompilationMode.VLLM_COMPILE: 3>, 'debug_dump_path': None, 'cache_dir': '', 'compile_cache_save_format': 'binary', 'backend': 'inductor', 'custom_ops': ['none'], 'splitting_ops': ['vllm::unified_attention', 'vllm::unified_attention_with_output', 'vllm::unified_mla_attention', 'vllm::unified_mla_attention_with_output', 'vllm::mamba_mixer2', 'vllm::mamba_mixer', 'vllm::short_conv', 'vllm::linear_attention', 'vllm::plamo2_mamba_mixer', 'vllm::gdn_attention_core', 'vllm::kda_attention', 'vllm::sparse_attn_indexer', 'vllm::rocm_aiter_sparse_attn_indexer'], 'compile_mm_encoder': False, 'compile_sizes': [], 'compile_ranges_split_points': [2048], 'inductor_compile_config': {'enable_auto_functionalized_v2': False, 'combo_kernels': True, 'benchmark_combo_kernel': True}, 'inductor_passes': {}, 'cudagraph_mode': <CUDAGraphMode.FULL_AND_PIECEWISE: (2, 1)>, 'cudagraph_num_of_warmups': 1, 'cudagraph_capture_sizes': [1, 2, 4], 'cudagraph_copy_inputs': False, 'cudagraph_specialize_lora': True, 'use_inductor_graph_partition': False, 'pass_config': {'fuse_norm_quant': False, 'fuse_act_quant': False, 'fuse_attn_quant': False, 'eliminate_noops': True, 'enable_sp': False, 'fuse_gemm_comms': False, 'fuse_allreduce_rms': False}, 'max_cudagraph_capture_size': 4, 'dynamic_shapes_config': {'type': <DynamicShapesType.BACKED: 'backed'>, 'evaluate_guards': False, 'assume_32_bit_indexing': True}, 'local_cache_dir': None}

(EngineCore_DP0 pid=540) WARNING 01-29 18:30:04 [v1/executor/ray_utils.py:337] Tensor parallel size (2) exceeds available GPUs (1). This may result in Ray placement group allocation failures. Consider reducing tensor_parallel_size to 1 or less, or ensure your Ray cluster has 2 GPUs available.

(EngineCore_DP0 pid=540) 2026-01-29 18:30:04,314 INFO worker.py:1821 -- Connecting to existing Ray cluster at address: 10.65.37.234:6379...

(EngineCore_DP0 pid=540) 2026-01-29 18:30:04,322 INFO worker.py:2007 -- Connected to Ray cluster.

(EngineCore_DP0 pid=540) /usr/local/lib/python3.12/dist-packages/ray/_private/worker.py:2046: FutureWarning: Tip: In future versions of Ray, Ray will no longer override accelerator visible devices env var if num_gpus=0 or num_gpus=None (default). To enable this behavior and turn off this error message, set RAY_ACCEL_ENV_VAR_OVERRIDE_ON_ZERO=0

(EngineCore_DP0 pid=540) warnings.warn(

(EngineCore_DP0 pid=540) INFO 01-29 18:30:04 [v1/executor/ray_utils.py:402] No current placement group found. Creating a new placement group.

(EngineCore_DP0 pid=540) WARNING 01-29 18:30:04 [v1/executor/ray_utils.py:213] tensor_parallel_size=2 is bigger than a reserved number of GPUs (1 GPUs) in a node 3bc17d292be6c1ce64063ccf88cc9e275f7811ea5518eaec0e0c9af8. Tensor parallel workers can be spread out to 2+ nodes which can degrade the performance unless you have fast interconnect across nodes, like Infiniband. To resolve this issue, make sure you have more than 2 GPUs available at each node.

(EngineCore_DP0 pid=540) WARNING 01-29 18:30:04 [v1/executor/ray_utils.py:213] tensor_parallel_size=2 is bigger than a reserved number of GPUs (1 GPUs) in a node 6ba484af886647fca20706ba4d1cdf024e22b6d0ba8d70416a658d66. Tensor parallel workers can be spread out to 2+ nodes which can degrade the performance unless you have fast interconnect across nodes, like Infiniband. To resolve this issue, make sure you have more than 2 GPUs available at each node.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [plugins/__init__.py:35] No plugins for group vllm.platform_plugins found.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:36] Checking if TPU platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:55] TPU platform is not available because: No module named 'libtpu'

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:61] Checking if CUDA platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:84] Confirmed CUDA platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:112] Checking if ROCm platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:126] ROCm platform is not available because: No module named 'amdsmi'

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:133] Checking if XPU platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:153] XPU platform is not available because: No module named 'intel_extension_for_pytorch'

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:160] Checking if CPU platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:61] Checking if CUDA platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:84] Confirmed CUDA platform is available.

(EngineCore_DP0 pid=540) (pid=634) DEBUG 01-29 18:30:06 [platforms/__init__.py:225] Automatically detected platform cuda.

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:09 [v1/executor/ray_executor.py:232] workers: [RayWorkerMetaData(worker=Actor(RayWorkerWrapper, 9b859f6c7f8acfaca07927d301000000), created_rank=0, adjusted_rank=-1, ip='10.65.37.234'), RayWorkerMetaData(worker=Actor(RayWorkerWrapper, b8d8f03a196056f6dc43f67101000000), created_rank=1, adjusted_rank=-1, ip='10.65.37.233')]

(EngineCore_DP0 pid=540) DEBUG 01-29 18:30:09 [v1/executor/ray_executor.py:233] driver_dummy_worker: None

(EngineCore_DP0 pid=540) INFO 01-29 18:30:09 [ray/ray_env.py:66] RAY_NON_CARRY_OVER_ENV_VARS from config: set()

(EngineCore_DP0 pid=540) INFO 01-29 18:30:09 [ray/ray_env.py:69] Copying the following environment variables to workers: ['VLLM_WORKER_MULTIPROC_METHOD', 'VLLM_LOGGING_LEVEL', 'VLLM_USAGE_SOURCE', 'LD_LIBRARY_PATH']

(EngineCore_DP0 pid=540) INFO 01-29 18:30:09 [ray/ray_env.py:74] If certain env vars should NOT be copied, add them to /root/.config/vllm/ray_non_carry_over_env_vars.json file

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) WARNING 01-29 18:30:09 [utils/system_utils.py:37] Overwriting environment variable LD_LIBRARY_PATH from '/usr/local/nvidia/lib64:/usr/local/cuda/lib64:/usr/local/cuda/lib64' to '/usr/local/lib/python3.12/dist-packages/cv2/../../lib64:/usr/local/lib/python3.12/dist-packages/cv2/../../lib64:/usr/local/nvidia/lib64:/usr/local/cuda/lib64:/usr/local/cuda/lib64'

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:09 [plugins/__init__.py:43] Available plugins for group vllm.general_plugins:

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:09 [plugins/__init__.py:45] - lora_filesystem_resolver -> vllm.plugins.lora_resolvers.filesystem_resolver:register_filesystem_resolver

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:09 [plugins/__init__.py:48] All plugins in this group will be loaded. Set `VLLM_PLUGINS` to control which plugins to load.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:09 [utils/import_utils.py:74] Loading module triton_kernels from /usr/local/lib/python3.12/dist-packages/vllm/third_party/triton_kernels/__init__.py.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) WARNING 01-29 18:30:10 [v1/worker/worker_base.py:301] Missing `shared_worker_lock` argument from executor. This argument is needed for mm_processor_cache_type='shm'.

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [plugins/__init__.py:35] No plugins for group vllm.platform_plugins found.

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:36] Checking if TPU platform is available.

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:55] TPU platform is not available because: No module named 'libtpu'

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:61] Checking if CUDA platform is available. [repeated 2x across cluster] (Ray deduplicates logs by default. Set RAY_DEDUP_LOGS=0 to disable log deduplication, or see https://docs.ray.io/en/master/ray-observability/user-guides/configure-logging.html#log-deduplication for more options.)

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:84] Confirmed CUDA platform is available. [repeated 2x across cluster]

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:112] Checking if ROCm platform is available.

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:126] ROCm platform is not available because: No module named 'amdsmi'

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:133] Checking if XPU platform is available.

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:153] XPU platform is not available because: No module named 'intel_extension_for_pytorch'

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:160] Checking if CPU platform is available.

(EngineCore_DP0 pid=540) (pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:07 [platforms/__init__.py:225] Automatically detected platform cuda.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [distributed/parallel_state.py:1170] world_size=2 rank=0 local_rank=0 distributed_init_method=tcp://10.65.37.234:50055 backend=nccl

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) INFO 01-29 18:30:12 [distributed/parallel_state.py:1212] world_size=2 rank=0 local_rank=0 distributed_init_method=tcp://10.65.37.234:50055 backend=nccl

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) [Gloo] Rank 0 is connected to 1 peer ranks. Expected number of connected peer ranks is : 1

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:12 [distributed/parallel_state.py:1256] Detected 2 nodes in the distributed environment

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:12 [utils/nccl.py:34] Found nccl from library libnccl.so.2

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) INFO 01-29 18:30:12 [distributed/device_communicators/pynccl.py:111] vLLM is using nccl==2.27.5

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) WARNING 01-29 18:30:12 [distributed/device_communicators/symm_mem.py:67] SymmMemCommunicator: Device capability 8.6 not supported, communicator is not available.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) WARNING 01-29 18:30:12 [distributed/device_communicators/custom_all_reduce.py:92] Custom allreduce is disabled because this process group spans across nodes.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:12 [distributed/device_communicators/shm_broadcast.py:400] Connecting to tcp://10.65.37.234:60745

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [distributed/device_communicators/shm_broadcast.py:354] vLLM message queue communication handle: Handle(local_reader_ranks=[], buffer_handle=None, local_subscribe_addr=None, remote_subscribe_addr='tcp://10.65.37.234:60745', remote_addr_ipv6=False)

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) INFO 01-29 18:30:12 [distributed/parallel_state.py:1423] rank 0 in world size 2 is assigned as DP rank 0, PP rank 0, PCP rank 0, TP rank 0, EP rank N/A

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [v1/worker/gpu_worker.py:236] worker init memory snapshot: torch_peak=0.0GiB, free_memory=11.4GiB, total_memory=11.62GiB, cuda_memory=0.21GiB, torch_memory=0.0GiB, non_torch_memory=0.21GiB, timestamp=1769740212.8729987, auto_measure=True

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [v1/worker/gpu_worker.py:237] worker requested memory: 10.46GiB

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [compilation/decorators.py:203] Inferred dynamic dimensions for forward method of <class 'vllm.model_executor.models.deepseek_v2.DeepseekV2Model'>: ['input_ids', 'positions', 'intermediate_tensors', 'inputs_embeds']

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [compilation/decorators.py:203] Inferred dynamic dimensions for forward method of <class 'vllm.model_executor.models.llama.LlamaModel'>: ['input_ids', 'positions', 'intermediate_tensors', 'inputs_embeds']

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:13 [v1/sample/ops/topk_topp_sampler.py:53] FlashInfer top-p/top-k sampling is available but disabled by default. Set VLLM_USE_FLASHINFER_SAMPLER=1 to opt in after verifying accuracy for your workloads.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:13 [v1/sample/logits_processor/__init__.py:63] No logitsprocs plugins installed (group vllm.logits_processors).

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) INFO 01-29 18:30:13 [v1/worker/gpu_model_runner.py:4015] Starting to load model tencent/HY-MT1.5-7B...

(APIServer pid=456) DEBUG 01-29 18:30:14 [v1/engine/utils.py:975] Waiting for 1 local, 0 remote core engine proc(s) to start.

(APIServer pid=456) DEBUG 01-29 18:30:24 [v1/engine/utils.py:975] Waiting for 1 local, 0 remote core engine proc(s) to start.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:24 [platforms/cuda.py:346] Some attention backends are not valid for cuda with AttentionSelectorConfig(head_size=128, dtype=torch.bfloat16, kv_cache_dtype=auto, block_size=None, use_mla=False, has_sink=False, use_sparse=False, use_mm_prefix=False, attn_type=AttentionType.DECODER). Reasons: {}.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) INFO 01-29 18:30:24 [platforms/cuda.py:364] Using FLASH_ATTN attention backend out of potential backends: ('FLASH_ATTN', 'FLASHINFER', 'TRITON_ATTN', 'FLEX_ATTENTION')

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) WARNING 01-29 18:30:09 [utils/system_utils.py:37] Overwriting environment variable LD_LIBRARY_PATH from '/usr/local/nvidia/lib64:/usr/local/cuda/lib64:/usr/local/cuda/lib64' to '/usr/local/lib/python3.12/dist-packages/cv2/../../lib64:/usr/local/lib/python3.12/dist-packages/cv2/../../lib64:/usr/local/nvidia/lib64:/usr/local/cuda/lib64:/usr/local/cuda/lib64'

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:09 [plugins/__init__.py:43] Available plugins for group vllm.general_plugins:

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:09 [plugins/__init__.py:45] - lora_filesystem_resolver -> vllm.plugins.lora_resolvers.filesystem_resolver:register_filesystem_resolver

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:09 [plugins/__init__.py:48] All plugins in this group will be loaded. Set `VLLM_PLUGINS` to control which plugins to load.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:09 [utils/import_utils.py:74] Loading module triton_kernels from /usr/local/lib/python3.12/dist-packages/vllm/third_party/triton_kernels/__init__.py.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) WARNING 01-29 18:30:11 [v1/worker/worker_base.py:301] Missing `shared_worker_lock` argument from executor. This argument is needed for mm_processor_cache_type='shm'.

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) DEBUG 01-29 18:30:12 [distributed/parallel_state.py:1170] world_size=2 rank=1 local_rank=0 distributed_init_method=tcp://10.65.37.234:50055 backend=nccl

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) INFO 01-29 18:30:12 [distributed/parallel_state.py:1212] world_size=2 rank=1 local_rank=0 distributed_init_method=tcp://10.65.37.234:50055 backend=nccl

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=224, ip=10.65.37.233) [Gloo] Rank 0 is connected to 0 peer ranks. Expected number of connected peer ranks is : 0 [repeated 11x across cluster]

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [distributed/parallel_state.py:1256] Detected 2 nodes in the distributed environment

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) DEBUG 01-29 18:30:12 [utils/nccl.py:34] Found nccl from library libnccl.so.2

(EngineCore_DP0 pid=540) (RayWorkerWrapper pid=634) WARNING 01-29 18:30:12 [distributed/device_communicators/symm_mem.py:67] SymmMemCommunicator: Device capability 8.6 not supported, communicator is not available.