YarnCoarseGrainedExecutorBackend Source Code Analysis


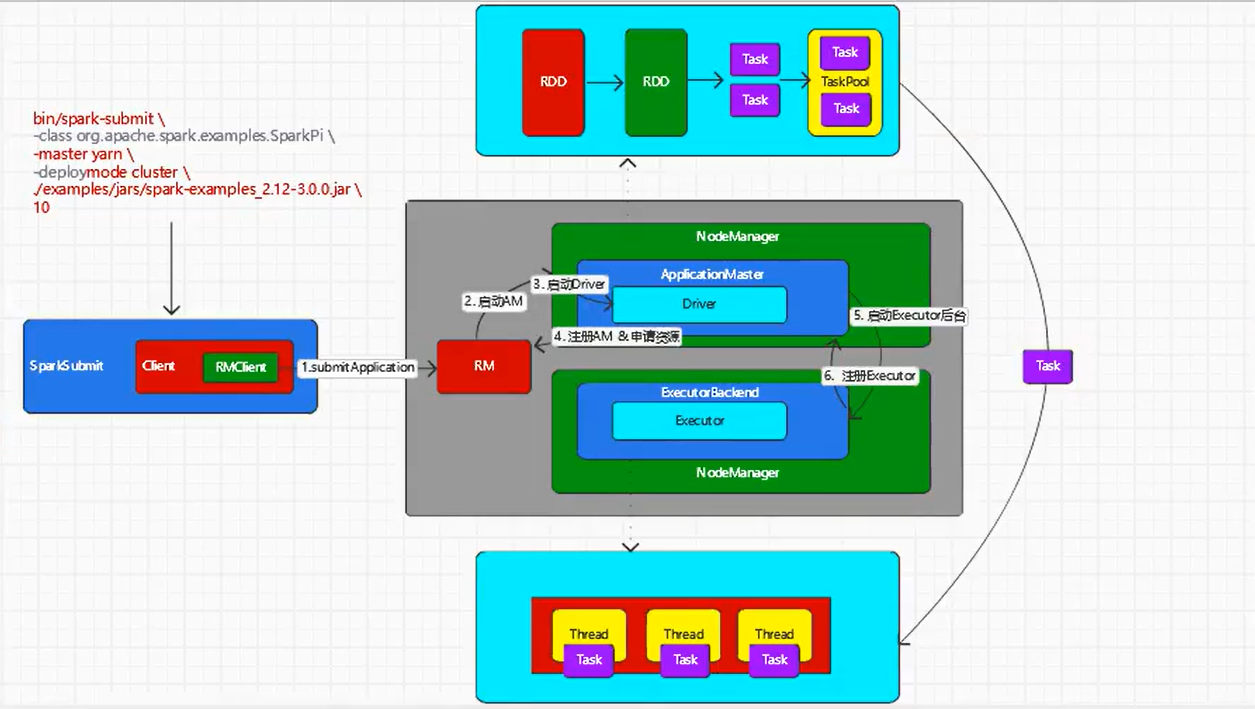

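In ApplicationMaster we saw that it requests a list of containers from the ResourceManager, filters them, and then, for each container it needs, contacts the NodeManager to start it. The command run inside each container starts a Java process (not a thread) whose main class is YarnCoarseGrainedExecutorBackend, so next we look at what YarnCoarseGrainedExecutorBackend does.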

--main

-- CoarseGrainedExecutorBackend.run(backendArgs, createFn)

// Create the execution environment

-- val env = SparkEnv.createExecutorEnv(

driverConf, executorId, hostname, port, cores, cfg.ioEncryptionKey, isLocal = false)

// Create an RPC endpoint, the ExecutorBackend

-- env.rpcEnv.setupEndpoint("Executor", new CoarseGrainedExecutorBackend(

env.rpcEnv, driverUrl, executorId, hostname, cores, userClassPath, env))

// Send the registration request to the driver

-- ref.ask[Boolean](RegisterExecutor(executorId, self, hostname, cores, extractLogUrls))

// Handle the driver's reply

-- case Success(msg) =>

// Always receive `true`. Just ignore it

case Failure(e) =>

exitExecutor(1, s"Cannot register with driver: $driverUrl", e, notifyDriver = false)

// Create the Executor object (once the RegisteredExecutor message arrives)

-- executor = new Executor(executorId, hostname, env, userClassPath, isLocal = false)

// Run the task sent by the driver

-- LaunchTask(data)

// Decode the task description

-- val taskDesc = TaskDescription.decode(data.value)

// Hand the task to the executor for computation

-- executor.launchTask(this, taskDesc)

// Create a TaskRunner (a Runnable) and submit it to the thread pool

-- val tr = new TaskRunner(context, taskDescription)

runningTasks.put(taskDescription.taskId, tr)

// Execute it; this eventually reaches the task's run method

threadPool.execute(tr)
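The last three steps of the trace (build a TaskRunner, record it in runningTasks, hand it to the thread pool) can be sketched with the Scala and Java standard libraries alone. This is a minimal model, not Spark's code: `MiniExecutor`, `SimpleTaskDescription` and the `finished` map are hypothetical names made up for illustration, and a fixed-size pool is assumed only to keep the example small.

```scala
import java.util.concurrent.{ConcurrentHashMap, Executors, TimeUnit}

// Hypothetical, simplified stand-in for Spark's TaskDescription.
final case class SimpleTaskDescription(taskId: Long, name: String)

// Simplified model of an executor: it tracks running tasks and
// runs each one on a thread taken from a pool.
class MiniExecutor(poolSize: Int) {
  // Assumption: a fixed-size pool, chosen only for this sketch.
  private val threadPool = Executors.newFixedThreadPool(poolSize)
  val runningTasks = new ConcurrentHashMap[Long, TaskRunner]()
  // Hypothetical result store so the outcome can be inspected afterwards.
  val finished = new ConcurrentHashMap[Long, String]()

  class TaskRunner(desc: SimpleTaskDescription) extends Runnable {
    override def run(): Unit = {
      try {
        // In Spark, this is the point where the task's own run method is invoked.
        finished.put(desc.taskId, s"${desc.name} ran on ${Thread.currentThread().getName}")
      } finally {
        // Always drop the bookkeeping entry, even if the task failed.
        runningTasks.remove(desc.taskId)
      }
    }
  }

  def launchTask(desc: SimpleTaskDescription): Unit = {
    val tr = new TaskRunner(desc)
    runningTasks.put(desc.taskId, tr) // register before the task starts running
    threadPool.execute(tr)            // a pool thread calls tr.run()
  }

  // Stop accepting tasks and wait for the submitted ones to finish.
  def shutdown(): Boolean = {
    threadPool.shutdown()
    threadPool.awaitTermination(5, TimeUnit.SECONDS)
  }
}
```

Registering the runner in the map before submitting it means the `finally` block in `run` can never execute before the entry exists.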

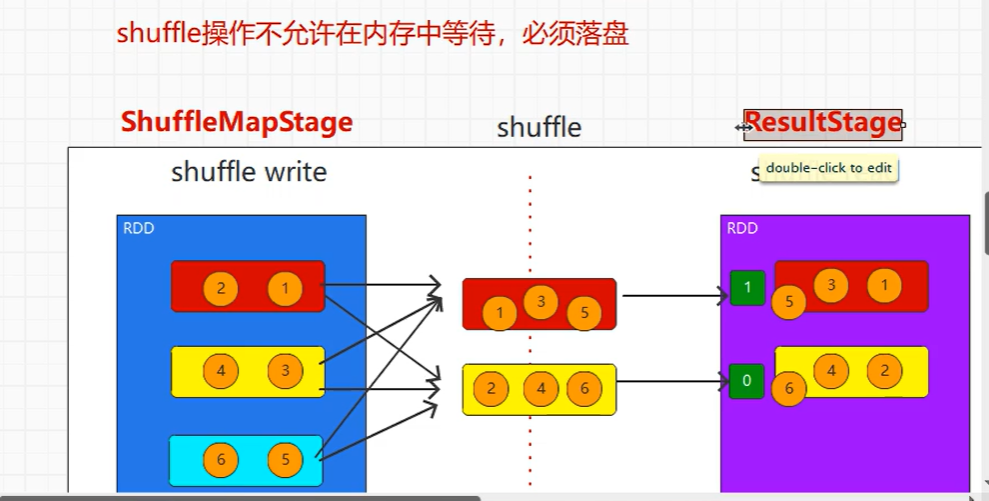

// Spark has two kinds of task: ShuffleMapTask writes shuffle output to disk, and ResultTask reads it back

-- ShuffleMapTask.runTask()

-- dep.shuffleWriterProcessor.write(rdd, dep, mapId, context, partition)

-- ResultTask.runTask()

-- SparkEnv.get.shuffleManager.getReader(dep.shuffleHandle, split.index, split.index + 1, context).read()
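To make the write/read split concrete, here is a toy model of the two task kinds. It is a sketch under loud assumptions: `MiniShuffle` and its methods are hypothetical, and an in-memory map stands in for the shuffle files, so none of this is Spark's actual shuffle API.

```scala
import scala.collection.mutable

object MiniShuffle {
  // Hypothetical in-memory stand-in for shuffle files: (mapId, reduceId) -> records.
  private val blocks = mutable.Map.empty[(Int, Int), Vector[(String, Int)]]

  // Map side: partition each record by key hash and "write" it
  // into the block that belongs to the target reducer.
  def shuffleMapTask(mapId: Int, records: Seq[(String, Int)], numReducers: Int): Unit = {
    records
      .groupBy { case (k, _) => Math.floorMod(k.hashCode, numReducers) }
      .foreach { case (reduceId, recs) => blocks((mapId, reduceId)) = recs.toVector }
  }

  // Reduce side: "read" this reducer's block from every map task and sum by key.
  def resultTask(reduceId: Int, numMaps: Int): Map[String, Int] = {
    val fetched = (0 until numMaps).flatMap(m => blocks.getOrElse((m, reduceId), Vector.empty))
    fetched.groupBy(_._1).map { case (k, vs) => k -> vs.map(_._2).sum }
  }
}
```

Because every key hashes to exactly one reducer, the per-reducer results are disjoint and can simply be merged.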

CoarseGrainedSchedulerBackend (Driver): receive and reply

receiveAndReply

-- addressToExecutorId(executorAddress) = executorId

-- totalCoreCount.addAndGet(cores)

-- totalRegisteredExecutors.addAndGet(1)

-- executorRef.send(RegisteredExecutor)

// Note: some tests expect the reply to come after we put the executor in the map

-- context.reply(true)

-- makeOffers()

-- launchTasks(scheduler.resourceOffers(workOffers))

// Serialize the task

-- val serializedTask = ser.serialize(task)

// Send the serialized task to the ExecutorBackend

-- executorData.executorEndpoint.send(LaunchTask(new SerializableBuffer(serializedTask)))
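The driver-side flow above (record the executor, reply, then serialize each task and send it) can be modelled roughly as follows. Everything here is illustrative: `MiniDriver`, `MiniTask` and the per-executor `mailboxes` are hypothetical stand-ins for the RPC endpoint, and the hand-written `encode`/`decode` pair is an assumed wire format for the sketch, not the one Spark uses.

```scala
import java.io.{ByteArrayInputStream, ByteArrayOutputStream, DataInputStream, DataOutputStream}
import java.nio.ByteBuffer
import java.util.concurrent.atomic.AtomicInteger
import scala.collection.mutable

// Hypothetical, simplified task payload.
final case class MiniTask(taskId: Long, executorId: String, payload: String)

object MiniDriver {
  val totalCoreCount = new AtomicInteger(0)
  val totalRegisteredExecutors = new AtomicInteger(0)
  val addressToExecutorId = mutable.HashMap.empty[String, String]
  // Stand-in for executorEndpoint.send: messages are queued per executor.
  val mailboxes = mutable.HashMap.empty[String, mutable.Queue[ByteBuffer]]

  // Mirrors the bookkeeping done when a RegisterExecutor message arrives.
  def registerExecutor(executorId: String, address: String, cores: Int): Boolean = {
    addressToExecutorId(address) = executorId
    totalCoreCount.addAndGet(cores)
    totalRegisteredExecutors.addAndGet(1)
    mailboxes(executorId) = mutable.Queue.empty[ByteBuffer]
    true // plays the role of context.reply(true)
  }

  // Assumed wire format for this sketch: taskId, executorId, payload.
  def encode(task: MiniTask): ByteBuffer = {
    val bytes = new ByteArrayOutputStream()
    val out = new DataOutputStream(bytes)
    out.writeLong(task.taskId)
    out.writeUTF(task.executorId)
    out.writeUTF(task.payload)
    out.close()
    ByteBuffer.wrap(bytes.toByteArray)
  }

  // Executor side: read the fields back in the order they were written.
  def decode(buf: ByteBuffer): MiniTask = {
    val arr = new Array[Byte](buf.remaining())
    buf.duplicate().get(arr)
    val in = new DataInputStream(new ByteArrayInputStream(arr))
    MiniTask(in.readLong(), in.readUTF(), in.readUTF())
  }

  // Serialize every task and "send" it to the executor it was assigned to.
  def launchTasks(tasks: Seq[MiniTask]): Unit =
    tasks.foreach(t => mailboxes(t.executorId).enqueue(encode(t)))
}
```

The decode step corresponds to what the executor backend does when it receives LaunchTask: it turns the bytes back into a task description before handing it to the executor.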

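Q: How is a task executed on the executor? It depends on the task type, which DAGScheduler picks by matching on the stage type when it creates the tasks: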

stage match {

case stage: ShuffleMapStage =>

stage.pendingPartitions.clear()

partitionsToCompute.map { id =>

val locs = taskIdToLocations(id)

val part = partitions(id)

stage.pendingPartitions += id

new ShuffleMapTask(stage.id, stage.latestInfo.attemptNumber,

taskBinary, part, locs, properties, serializedTaskMetrics, Option(jobId),

Option(sc.applicationId), sc.applicationAttemptId, stage.rdd.isBarrier())

}

case stage: ResultStage =>

partitionsToCompute.map { id =>

val p: Int = stage.partitions(id)

val part = partitions(p)

val locs = taskIdToLocations(id)

new ResultTask(stage.id, stage.latestInfo.attemptNumber,

taskBinary, part, locs, id, properties, serializedTaskMetrics,

Option(jobId), Option(sc.applicationId), sc.applicationAttemptId,

stage.rdd.isBarrier())

}

}
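Stripped of the Spark-specific arguments, the match above creates one task per partition to compute, and its type follows the stage type. A minimal sketch, with all `Mini*` types being hypothetical simplifications rather than Spark classes:

```scala
// Hypothetical, simplified stage types.
sealed trait MiniStage { def id: Int }
final case class MiniShuffleMapStage(id: Int, numPartitions: Int) extends MiniStage
// A result stage may compute only some of the RDD's partitions:
// partitions(i) is the RDD partition that produces output i.
final case class MiniResultStage(id: Int, partitions: Vector[Int]) extends MiniStage

// Hypothetical, simplified task types.
sealed trait MiniTaskKind
final case class MiniShuffleMapTask(stageId: Int, partitionId: Int) extends MiniTaskKind
final case class MiniResultTask(stageId: Int, partitionId: Int, outputId: Int) extends MiniTaskKind

object MiniDagScheduler {
  // One task per partition to compute; the stage type decides the task type.
  def createTasks(stage: MiniStage, partitionsToCompute: Seq[Int]): Seq[MiniTaskKind] =
    stage match {
      case s: MiniShuffleMapStage =>
        partitionsToCompute.map(id => MiniShuffleMapTask(s.id, id))
      case s: MiniResultStage =>
        partitionsToCompute.map { id =>
          val p = s.partitions(id) // map the output index to the RDD partition index
          MiniResultTask(s.id, p, id)
        }
    }
}
```

For example, `MiniDagScheduler.createTasks(MiniResultStage(1, Vector(5, 7)), Seq(0, 1))` yields two result tasks, for RDD partitions 5 and 7.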
