Scraping Text from Baidu Wenku

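Graduation is coming up and my school wants an internship report. Writing one from scratch was never going to happen, so of course I was going to copy one. Will Baidu Wenku let you copy for free? No: you have to register as a member and pay before you can copy its articles. For us broke students, technical skill is all we have left, so I wrote a crawler in Python. It prints the scraped text to the terminal, where you can copy and paste it directly. I'm donating it to my fellow students, enjoy!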

# Baidu Wenku text scraper

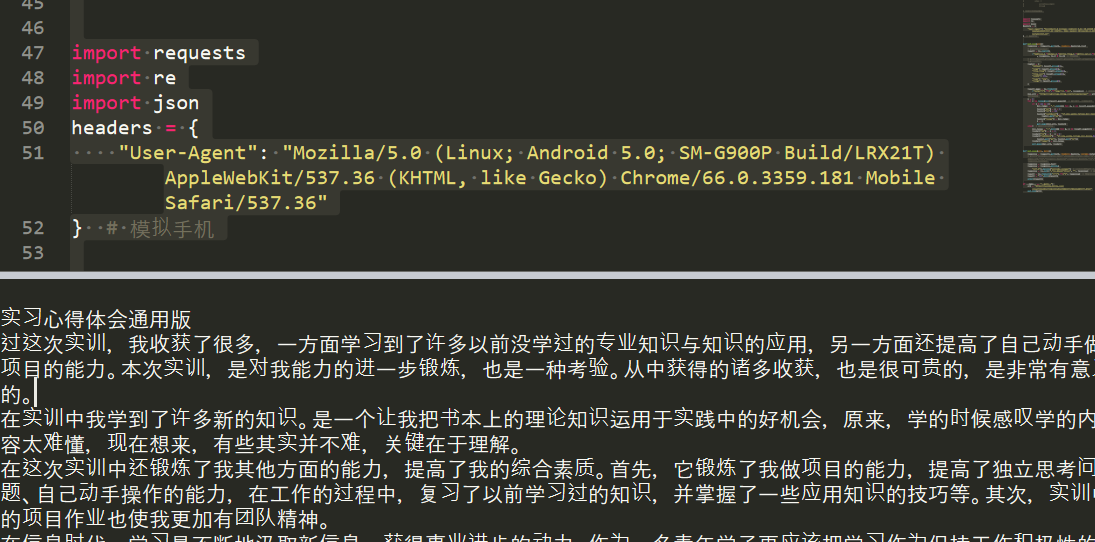

```python
import re

import requests

# Simulate a mobile browser
headers = {
    "User-Agent": "Mozilla/5.0 (Linux; Android 5.0; SM-G900P Build/LRX21T) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/66.0.3359.181 Mobile Safari/537.36"
}
```

```python
def get_num(url):
    response = requests.get(url, headers=headers).text
    # print(response)
    # Find the request parameters embedded in the page source
    result = re.search(
        r'&md5sum=(.*)&sign=(.*)&rtcs_flag=(.*)&rtcs_ver=(.*?)".*rsign":"(.*?)",',
        response, re.M | re.I)
    if result is None:
        raise ValueError("request parameters not found in the page source")
    # print(result.group(1), result.group(2), result.group(3), result.group(4), result.group(5))
    reader = {
        "md5sum": result.group(1),
        "sign": result.group(2),
        "rtcs_flag": result.group(3),
        "rtcs_ver": result.group(4),
        "width": 176,
        "type": "org",
        "rsign": result.group(5)
    }
    # Get the (merge tag, page number) pair of every page
    result_page = re.findall(r'merge":"(.*?)".*?"page":(.*?)}', response)
    # URL prefix; url[29:-5] is the document ID, which assumes a URL of the
    # form https://wenku.baidu.com/view/<id>.html
    doc_url = "https://wkretype.bdimg.com/retype/merge/" + url[29:-5]
    # Fetch at most 10 pages per request; the last batch may hold fewer
    for n in range(0, len(result_page), 10):
        batch = result_page[n:n + 10]
        reader['pn'] = n + 1
        reader['rn'] = len(batch)
        reader['callback'] = 'sf_edu_wenku_retype_doc_jsonp_%s_%s' % (
            reader['pn'], reader['rn'])
        reader['range'] = '_'.join(k for k, v in batch)
        get_page(doc_url, reader)
```
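The batching rule in `get_num` (at most 10 pages per request, with a shorter final batch) can be checked offline. Below is a minimal sketch that is not part of the original script: `batch_params` is a hypothetical helper, and the page tags in the usage are made up to stand in for the real `merge` values.

```python
def batch_params(pages, size=10):
    """Yield (pn, rn, range) for each batch of at most `size` pages.

    `pages` is a list of (tag, page_number) pairs, shaped like the
    result of the re.findall call in get_num. Hypothetical helper.
    """
    for start in range(0, len(pages), size):
        batch = pages[start:start + size]
        # pn is the 1-based index of the first page in the batch,
        # rn is how many pages the batch holds
        yield start + 1, len(batch), '_'.join(tag for tag, _ in batch)


# Made-up tags standing in for the real "merge" values
pages = [('t%d' % i, str(i)) for i in range(1, 13)]
for pn, rn, doc_range in batch_params(pages):
    print(pn, rn, doc_range)
```

With 12 pages this yields two batches: one starting at page 1 with 10 tags, and one starting at page 11 with the remaining 2.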

```python
def get_page(url, data):
    response = requests.get(url, headers=headers, params=data)
    # print("response.status_code:", response.status_code, "\n", "response.url:", response.url, "\n", "response.headers:", response.headers, "\n", "response.cookies:", response.cookies)
    # Turn the \uXXXX escapes in the body into readable Chinese text
    text = response.text.encode('utf-8').decode('unicode_escape')
    text = re.sub(r',"no_blank":true', '', text)  # clean the data
    result = re.findall(r'c":"(.*?)"}', text)  # match the text fragments
    print('\n'.join(result))
```
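To see what the decode-and-extract steps in `get_page` do without touching the network, here is a small sketch run on a made-up response body. The sample string is an assumption that only mimics the `"c":"..."` fields the regex above looks for, so the real response format may differ. `extract_text` is a hypothetical name.

```python
import re


def extract_text(body):
    """Decode backslash-u escapes, then collect every "c" field."""
    text = body.encode('utf-8').decode('unicode_escape')
    text = re.sub(r',"no_blank":true', '', text)
    return re.findall(r'c":"(.*?)"}', text)


# Made-up body: a raw string, so the \uXXXX sequences stay literal
sample = r'cb([{"c":"\u5b9e\u4e60"},{"c":"\u62a5\u544a","no_blank":true}])'
print(extract_text(sample))
```

One caveat with this trick: `unicode_escape` treats the bytes as Latin-1, so it only behaves when the body is plain ASCII plus escapes. Any literal non-ASCII character already in the body would come out garbled.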

```python
if __name__ == '__main__':
    url = ""  # paste the URL of the document you want to scrape here
    get_num(url)
```
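A note on the URL you paste in: `get_num` takes the document ID with the fixed slice `url[29:-5]`, which only works when the URL has exactly the shape `https://wenku.baidu.com/view/<id>.html` with nothing after `.html`. As a hedged alternative, the sketch below parses the URL instead. `doc_id_from_url` is a hypothetical helper and the ID in the example is made up.

```python
import posixpath
from urllib.parse import urlparse


def doc_id_from_url(url):
    """Return the file name of the URL path without its extension."""
    path = urlparse(url).path            # e.g. /view/abc123.html
    name = posixpath.basename(path)      # e.g. abc123.html
    doc_id, _ext = posixpath.splitext(name)
    if not doc_id:
        raise ValueError("no document ID found in URL: %r" % url)
    return doc_id


# Made-up ID; the query string would break the fixed slice
url = "https://wenku.baidu.com/view/abc123.html?from=search"
print(doc_id_from_url(url))
```

This also fails loudly on an empty URL instead of silently requesting a bad address.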

The scraped output looks like this:

