keepalived high availability

Introduction to keepalived

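Keepalived was originally designed for the LVS load-balancing software, to manage and monitor the state of every service node in an LVS cluster. VRRP support, which provides high availability, was added later. As a result, besides managing LVS, Keepalived can also serve as a high-availability solution for other services such as Nginx, HAProxy and MySQL.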

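Keepalived implements high availability mainly through the VRRP protocol. VRRP stands for Virtual Router Redundancy Protocol. It was created to eliminate the single point of failure of static routing: it keeps the network running without interruption when an individual node goes down.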

In short, Keepalived can configure and manage LVS and health-check the nodes behind it, and it can also provide high availability for network services on the system.

keepalived official website

Key features of keepalived

keepalived has three key features:

Managing the LVS load-balancing software

Health-checking the nodes of an LVS cluster

Providing high availability (failover) for network services on the system

How keepalived failover works

Failover between Keepalived nodes is implemented with VRRP (Virtual Router Redundancy Protocol).

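While Keepalived is working normally, the Master node continuously sends heartbeat messages (via multicast) to the Backup node to announce that it is still alive. When the Master fails, it can no longer send heartbeats; once the Backup stops receiving them, it runs its own takeover logic and takes over the Master's IP resources and services. When the Master recovers (with preemption enabled, which is the default), the Backup releases the IP resources and services it took over and returns to its standby role.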
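The moment the Backup "no longer receives heartbeats" is defined by a timer. In VRRP version 2 (RFC 3768, which keepalived speaks by default), a Backup declares the Master down once Master_Down_Interval = 3 * Advertisement_Interval + Skew_Time has passed without an advertisement, where Skew_Time = (256 - Priority) / 256. The helper below only evaluates that formula; its name is made up for illustration, and its two arguments correspond to the `advert_int` and `priority` options of the backup node.

```bash
#!/usr/bin/env bash
# Sketch: VRRPv2 timing (RFC 3768) as seen by a Backup node.
#   Skew_Time            = (256 - priority) / 256
#   Master_Down_Interval = 3 * advert_int + Skew_Time
# master_down_interval is an illustrative helper, not part of keepalived.
master_down_interval() {
  local advert_int=$1 priority=$2
  awk -v a="$advert_int" -v p="$priority" \
    'BEGIN { printf "%.4f\n", 3 * a + (256 - p) / 256 }'
}
```

With the values used later in this article (advert_int 1, backup priority 90) it prints 3.6484, so the backup waits roughly 3.6 seconds before taking over the VIP.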

What is VRRP

VRRP, short for Virtual Router Redundancy Protocol, was created to solve the single point of failure of static routing. VRRP uses an election mechanism to hand the routing task to one of the VRRP routers.
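The election rule itself is simple: the router with the highest priority becomes master, and when priorities are equal the one with the highest primary IP address wins. A minimal sketch of that rule, assuming each router is described by a `name priority ipv4` line (an input format invented for this example); it ignores details such as the address owner's fixed priority of 255.

```bash
#!/usr/bin/env bash
# Sketch of the VRRP master election rule:
# highest priority wins; on a tie, the highest primary IPv4 address wins.
# Input (hypothetical format): one "name priority ipv4" line per router on stdin.

ip_to_int() {   # dotted quad -> 32-bit integer
  local IFS=.
  local -a o
  read -r -a o <<< "$1"
  echo $(( (10#${o[0]} << 24) | (10#${o[1]} << 16) | (10#${o[2]} << 8) | 10#${o[3]} ))
}

elect_master() {
  local name prio ip key best_key=-1 best_name=""
  while read -r name prio ip; do
    [ -z "$name" ] && continue
    # Priority dominates; the address (low 32 bits) only breaks ties.
    key=$(( (prio << 32) | $(ip_to_int "$ip") ))
    if (( key > best_key )); then
      best_key=$key
      best_name=$name
    fi
  done
  echo "$best_name"
}
```

With the nodes from the nginx lab below, `master` (priority 100) wins over `backup` (90); the address only decides ties.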

keepalived configuration file

The main keepalived configuration file is /etc/keepalived/keepalived.conf. Its contents are as follows (the trailing comments were added for explanation):

[root@master ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

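global_defs { # global settings

notification_email { # recipient addresses for alert emails

acassen@firewall.loc

failover@firewall.loc

sysadmin@firewall.loc

}

notification_email_from Alexandre.Cassen@firewall.loc # sender address for alert emails

smtp_server 192.168.118.1 # SMTP server address

smtp_connect_timeout 30 # SMTP connection timeout, in seconds

router_id LVS_DEVEL # router identifier, unique within the LAN

vrrp_skip_check_adv_addr

vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 { # define a VRRP instance

state MASTER # initial state of this node: MASTER or BACKUP

interface eth0 # NIC the VRRP instance binds to, used to send VRRP packets

virtual_router_id 51 # virtual router ID, must be the same on all nodes of the cluster

priority 100 # priority that decides the master/backup role; the higher value wins

nopreempt # disable preemption (only valid with state BACKUP, see below)

advert_int 1 # interval between master/backup advertisements, in seconds

authentication { # authentication settings

auth_type PASS # authentication type, here a plain password

auth_pass 1111 # must be identical in every keepalived config of the cluster; an 8-character random value is recommended

}

virtual_ipaddress { # VIP address(es) to use

192.168.118.16

}

}

virtual_server 192.168.118.16 1358 { # define a virtual server

delay_loop 6 # health-check interval, in seconds

lb_algo rr # LVS scheduling algorithm

lb_kind NAT # LVS forwarding mode

persistence_timeout 50 # persistence timeout, in seconds

protocol TCP # layer-4 protocol

sorry_server 192.168.118.200 1358 # fallback server that answers clients when all real servers are down

real_server 192.168.118.2 1358 { # real server that actually handles requests

weight 1 # server weight, default 1

HTTP_GET {

url {

path /testurl/test.jsp # URL path to check

digest 640205b7b0fc66c1ea91c463fac6334d # MD5 digest of the expected response body

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

url {

path /testurl3/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334d

}

connect_timeout 3 # connection timeout, in seconds

nb_get_retry 3 # number of GET retries

delay_before_retry 3 # delay before each retry, in seconds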

}

}

real_server 192.168.118.3 1358 {

weight 1

HTTP_GET {

url {

path /testurl/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334c

}

url {

path /testurl2/test.jsp

digest 640205b7b0fc66c1ea91c463fac6334c

}

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}
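Several comments above say a value must be identical on every node (virtual_router_id, auth_pass). A mismatch typically leaves both nodes believing they are MASTER, so it is worth comparing the two files before restarting keepalived. A minimal sketch, assuming one option per line as in the sample above; the function names are hypothetical, and the parser does not understand `include` or multiple instances.

```bash
#!/usr/bin/env bash
# Sketch: compare the settings that must match across a VRRP pair.
# Assumes "option value" on one line, as in the sample config.

get_opt() {   # get_opt FILE OPTION -> first value of OPTION in FILE
  awk -v opt="$2" '$1 == opt { print $2; exit }' "$1"
}

compare_vrrp_peers() {   # compare_vrrp_peers FILE_A FILE_B
  local opt a b rc=0
  for opt in virtual_router_id auth_type auth_pass; do
    a=$(get_opt "$1" "$opt")
    b=$(get_opt "$2" "$opt")
    if [ "$a" != "$b" ]; then
      echo "mismatch: $opt ($a vs $b)"
      rc=1
    fi
  done
  return "$rc"
}
```

For example, after copying the backup's file over with scp: `compare_vrrp_peers /etc/keepalived/keepalived.conf /tmp/backup.conf`.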

Customizing the main configuration file

vrrp_instance section

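nopreempt # Disable preemption. Preemption is on by default: when a higher-priority node comes back online, it preempts the lower-priority node and becomes MASTER. With nopreempt, the lower-priority node stays MASTER even after the higher-priority node is back. To use this option, the initial state must be BACKUP.

preempt_delay # Preemption delay in seconds, range 0-1000, default 0: how long to wait after detecting a lower-priority MASTER before preempting it.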
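The two options combine as follows when a node sees a MASTER with a lower priority than its own. This is an illustrative model of the rule described above, not keepalived's code; the function name and argument order are invented for the example, and ties (which VRRP breaks by IP address) are treated as "stay BACKUP" for simplicity.

```bash
#!/usr/bin/env bash
# Illustrative model of nopreempt / preempt_delay (not keepalived source).
# preempt_decision MY_PRIORITY MASTER_PRIORITY NOPREEMPT(0|1) [PREEMPT_DELAY]
preempt_decision() {
  local mine=$1 master=$2 nopreempt=$3 delay=${4:-0}
  if (( mine <= master )); then
    echo "stay BACKUP"              # not higher than the current master
  elif (( nopreempt )); then
    echo "stay BACKUP (nopreempt)"  # higher, but preemption is disabled
  else
    echo "become MASTER after ${delay}s"
  fi
}
```

For example, a recovered node with priority 100 facing a MASTER with priority 90 takes over by default, but stays BACKUP once nopreempt is set.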

vrrp_script section

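# Purpose: define a script that runs periodically. Its exit status is recorded by every VRRP instance that tracks it.

# Note: at least one VRRP instance must track it, and that instance's priority must not be 0. The priority range is 1-254.

vrrp_script <SCRIPT_NAME> {

...

}

# Options:

script "/path/to/somewhere" # path of the script to run

interval <INTEGER> # run interval in seconds, default 1

timeout <INTEGER> # seconds after which the script is considered to have failed

weight <-254..254> # priority adjustment, default 2

rise <INTEGER> # number of successful runs required before the script is considered OK

fall <INTEGER> # number of failed runs required before the script is considered failed

user <USERNAME> [GROUPNAME] # user and group that run the script

init_fail # assume the script starts in the failed state

# How weight works:

1. If the script succeeds (exit status 0) and weight is greater than 0, priority increases by weight.

2. If the script fails (non-zero exit status) and weight is less than 0, priority decreases by the absolute value of weight.

3. In all other cases, priority is unchanged.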
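Rules 1 to 3 can be written as a small function. It is a sketch of the arithmetic only: the name `effective_priority` is hypothetical, and it does not model rise/fall or any limits keepalived may apply to the result.

```bash
#!/usr/bin/env bash
# Sketch of how a vrrp_script's weight adjusts priority (rules 1-3 above).
# effective_priority is a hypothetical helper, not part of keepalived.
# Usage: effective_priority BASE_PRIORITY WEIGHT SCRIPT_EXIT_STATUS
effective_priority() {
  local base=$1 weight=$2 status=$3
  if (( status == 0 && weight > 0 )); then
    echo $(( base + weight ))   # rule 1: success with a positive weight raises it
  elif (( status != 0 && weight < 0 )); then
    echo $(( base + weight ))   # rule 2: failure with a negative weight lowers it
  else
    echo "$base"                # rule 3: priority unchanged
  fi
}
```

Applied to the nginx lab later in this article (master priority 100, `weight -20`, backup priority 90), a failing check drops the master to 80, below the backup's 90, so the backup would win the election even if check_m.sh did not stop keepalived.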

real_server section

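weight <INT> # server weight, default 1

inhibit_on_failure # when the health check fails, set the server's weight to 0 instead of removing it from the virtual server

notify_up <STRING> # script to run when the server's health check succeeds

notify_down <STRING> # script to run when the server's health check fails

uthreshold <INT> # maximum number of connections to this server

lthreshold <INT> # minimum number of connections to this server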

TCP_CHECK section

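connect_ip <IP ADDRESS> # IP address to connect to, defaults to the real server's IP

connect_port <PORT> # port to connect to, defaults to the real server's port

bindto <IP ADDRESS> # local address the check connection is made from

bind_port <PORT> # source port of the check connection

connect_timeout <INT> # connection timeout, default 5 seconds

fwmark <INTEGER> # mark all outgoing check packets with this fwmark

warmup <INT> # random delay of up to N seconds before checking, to avoid network congestion; 0 disables it

retry <INT> # number of retries, default 1

delay_before_retry <INT> # seconds to wait before a retry, default 1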
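At its core a TCP_CHECK just tries to open a TCP connection to the real server and retries on failure. A rough bash equivalent, useful for testing by hand what keepalived will see; it relies on bash's `/dev/tcp` pseudo-device and coreutils `timeout`, and the function name and argument order are made up to mirror `connect_timeout`, `retry` and `delay_before_retry`.

```bash
#!/usr/bin/env bash
# Sketch of a TCP connect check with retries (not keepalived's implementation).
# Usage: tcp_check HOST PORT CONNECT_TIMEOUT RETRY DELAY_BEFORE_RETRY
# Makes 1 + RETRY attempts; returns 0 as soon as one connection succeeds.
tcp_check() {
  local host=$1 port=$2 conn_timeout=$3 retry=$4 delay=$5 attempt
  for (( attempt = 0; attempt <= retry; attempt++ )); do
    # The child bash opens fd 3 to host:port and exits, closing it again.
    if timeout "$conn_timeout" bash -c "exec 3<>/dev/tcp/$host/$port" 2>/dev/null; then
      return 0
    fi
    if (( attempt < retry )); then
      sleep "$delay"
    fi
  done
  return 1
}
```

For example, `tcp_check 192.168.118.129 80 3 1 1 && echo up` run from the other node.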

Nginx high availability with keepalived

Environment

| Hostname | IP |

|---|---|

| master | 192.168.118.129 |

| backup | 192.168.118.128 |

Preparation

master

# set the hostname on master

[root@localhost ~]# hostnamectl set-hostname master

[root@localhost ~]# bash

# disable firewalld and SELinux

[root@master ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master ~]# setenforce 0

[root@master ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config

[root@master ~]# reboot

backup

# set the hostname on backup

[root@localhost ~]# hostnamectl set-hostname backup

[root@localhost ~]# bash

# disable firewalld and SELinux

[root@backup ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@backup ~]# setenforce 0

[root@backup ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config

[root@backup ~]# reboot

Install and configure nginx

Configure nginx on master

[root@master ~]# yum -y install nginx

[root@master ~]# cd /usr/share/nginx/html/

[root@master html]# mv index.html{,.bak} # back up the original index page

[root@master html]# echo 'master' > index.html

[root@master html]# cat index.html

master

[root@master ~]# systemctl start nginx.service # start nginx

[root@master ~]# curl 192.168.118.129

master

Configure nginx on backup

[root@backup ~]# yum -y install nginx

[root@backup ~]# cd /usr/share/nginx/html/

[root@backup html]# mv index.html{,.bak}

[root@backup html]# echo 'backup' > index.html

[root@backup html]# cat index.html

backup

[root@backup html]# systemctl start nginx.service

[root@backup html]# curl 192.168.118.128

backup

Install and configure keepalived

Install and configure keepalived on master

[root@master ~]# yum -y install keepalived

[root@master ~]# cd /etc/keepalived/

[root@master keepalived]# vim keepalived.conf

[root@master keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb01

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# start keepalived

[root@master ~]# systemctl start keepalived.service

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:b5:9a:13 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.129/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1121sec preferred_lft 1121sec

inet 192.168.118.250/32 scope global ens33 # the VIP appears once keepalived has started

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feb5:9a13/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Install and configure keepalived on backup

[root@backup ~]# yum -y install keepalived

[root@backup ~]# cd /etc/keepalived/

[root@backup keepalived]# vim keepalived.conf

[root@backup keepalived]# cat keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP # changed to BACKUP

interface ens33

virtual_router_id 51

priority 90 # lower priority, because this is the backup node

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@backup ~]# systemctl start keepalived.service

[root@backup ~]# ip a # no VIP here, because this node's priority is lower

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:16:02:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.128/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1140sec preferred_lft 1140sec

inet6 fe80::20c:29ff:fe16:299/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Access test

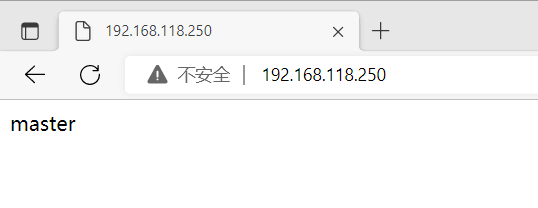

Accessing the VIP in the browser: served by master

Normally, both keepalived and nginx run on the master, while nginx on the backup is stopped.

[root@backup ~]# systemctl stop nginx

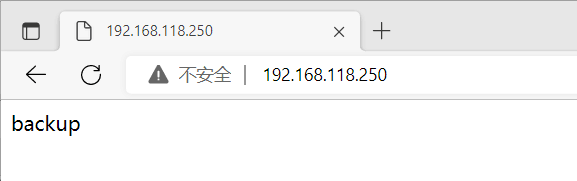

Accessing the VIP in the browser: served by backup

Stop keepalived on the master host

[root@master ~]# systemctl stop keepalived.service

The VIP now appears on the backup host

[root@backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:16:02:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.128/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1735sec preferred_lft 1735sec

inet 192.168.118.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe16:299/64 scope link noprefixroute

valid_lft forever preferred_lft forever

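[root@backup ~]# systemctl start nginx # start nginx

Access it in the browser

Configuring the scripts

Restore the environment

Normally, both keepalived and nginx run on the master, while nginx on the backup is stopped.

[root@master ~]# systemctl start keepalived.service # start keepalived on master

[root@backup ~]# systemctl stop nginx # stop nginx on backup

Write the scripts on master

This script decides whether keepalived should be stopped: when nginx on master fails and stops, the script stops the keepalived service automatically so that backup can take over the VIP.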

[root@master ~]# mkdir /scripts

[root@master ~]# cd /scripts/

[root@master scripts]# touch check_m.sh

[root@master scripts]# vim check_m.sh

#!/bin/bash

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -lt 1 ];then

systemctl stop keepalived

fi
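`ps -ef | grep nginx` also counts any process whose command line merely contains the word nginx, such as `vim /etc/nginx/nginx.conf`, so the check can report nginx as running when it is not. Matching the exact process name avoids this. `pgrep -x nginx` is the usual one-liner; the sketch below does the same using only /proc, and `proc_running` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Exact-name process check that reads /proc/<pid>/comm (Linux only).
# proc_running NAME -> exit 0 if at least one process is named exactly NAME.
proc_running() {
  local comm_file comm
  for comm_file in /proc/[0-9]*/comm; do
    # Processes can exit between the glob and the read; skip those.
    read -r comm 2>/dev/null < "$comm_file" || continue
    if [ "$comm" = "$1" ]; then
      return 0
    fi
  done
  return 1
}

# In check_m.sh the test would become (not executed here):
#   proc_running nginx || systemctl stop keepalived
```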

[root@master scripts]# chmod +x check_m.sh

[root@master scripts]# ll

total 4

-rwxr-xr-x 1 root root 145 Oct 9 00:29 check_m.sh

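This script checks whether the host has become master or backup and starts or stops nginx accordingly.

[root@master scripts]# touch notify.sh

[root@master scripts]# vim notify.sh

[root@master scripts]# cat notify.sh

#!/bin/bash

VIP=$2

#sendmail (){ # the commented-out function emails an alert when the state changes; not needed for this lab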

# subject="${VIP}'s server keepalived state is translate"

# content="`date +'%F %T'`: `hostname`'s state change to master"

# echo $content | mail -s "$subject" **********@qq.com

#}

case "$1" in

master)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -lt 1 ];then

systemctl start nginx

fi

#sendmail # keep this call commented out while the function above is disabled

;;

backup)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -gt 0 ];then

systemctl stop nginx

fi

;;

*)

echo "Usage:$0 master|backup VIP"

;;

esac
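notify.sh needs one `notify_master`/`notify_backup` line per state. keepalived also has a generic `notify` option that runs one script on every transition and appends arguments describing it. To our understanding these are the type (`GROUP` or `INSTANCE`), the instance name and the new state (`MASTER`, `BACKUP`, `FAULT`, ...), with the priority appended as a fourth argument in newer releases; treat that order as an assumption and check `man keepalived.conf` for your version. A sketch of such a handler (the script and function names are hypothetical):

```bash
#!/usr/bin/env bash
# Sketch of one handler for keepalived's generic `notify` option.
# Assumed invocation: notify_all.sh INSTANCE VI_1 MASTER [priority]
# notify_all.sh, nginx_action and handle_transition are hypothetical names.

# Map the new VRRP state to what should happen to nginx.
nginx_action() {
  case "$1" in
    MASTER)       echo start ;;
    BACKUP|FAULT) echo stop ;;
    *)            echo none ;;
  esac
}

# handle_transition TYPE NAME STATE [PRIORITY]
handle_transition() {
  local action
  action=$(nginx_action "$3")
  if [ "$action" = none ]; then
    return 0
  fi
  systemctl "$action" nginx
}

# In the real script the entry point would be (not executed here):
#   handle_transition "$@"
```

It would be wired in with a line such as `notify "/scripts/notify_all.sh"` inside `vrrp_instance VI_1`.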

[root@master scripts]# chmod +x notify.sh

[root@master scripts]# ll

total 8

-rwxr-xr-x 1 root root 145 Oct 9 00:29 check_m.sh

-rwxr-xr-x 1 root root 572 Oct 9 00:36 notify.sh

Write the script on backup

[root@backup ~]# mkdir /scripts

[root@backup ~]# cd /scripts/

[root@backup scripts]# touch notify.sh

[root@backup scripts]# vim notify.sh

[root@backup scripts]# cat notify.sh

#!/bin/bash

VIP=$2

#sendmail (){

# subject="${VIP}'s server keepalived state is translate"

# content="`date +'%F %T'`: `hostname`'s state change to master"

# echo $content | mail -s "$subject" **********@qq.com

#}

case "$1" in

master)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -lt 1 ];then

systemctl start nginx

fi

#sendmail # keep this call commented out while the function above is disabled

;;

backup)

nginx_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bnginx\b'|wc -l)

if [ $nginx_status -gt 0 ];then

systemctl stop nginx

fi

;;

*)

echo "Usage:$0 master|backup VIP"

;;

esac

[root@backup scripts]# chmod +x notify.sh

[root@backup scripts]# ll

total 4

-rwxr-xr-x 1 root root 558 Oct 9 00:41 notify.sh

Add the monitoring scripts to the keepalived configuration

Configure keepalived on master

[root@master ~]# vim /etc/keepalived/keepalived.conf

[root@master ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb01

}

vrrp_script nginx_check { # add this block

script "/scripts/check_m.sh"

interval 1

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

track_script { # add this block

nginx_check

}

notify_master "/scripts/notify.sh master 192.168.118.250"

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@master ~]# systemctl restart keepalived.service # restart the service

Configure keepalived on backup

[root@backup ~]# vim /etc/keepalived/keepalived.conf

[root@backup ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

notify_master "/scripts/notify.sh master 192.168.118.250"

notify_backup "/scripts/notify.sh backup 192.168.118.250"

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind NAT

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

[root@backup ~]# systemctl restart keepalived.service # restart the service

Experiment

Make sure nginx and keepalived are both running on the master host, while on the backup host nginx is stopped and keepalived is running.

Stop nginx on master

[root@master ~]# systemctl stop nginx

[root@master ~]# ip a # the VIP is gone

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:b5:9a:13 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.129/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1294sec preferred_lft 1294sec

inet6 fe80::20c:29ff:feb5:9a13/64 scope link noprefixroute

valid_lft forever preferred_lft forever

When nginx on master fails, backup takes over the VIP and starts nginx

[root@backup ~]# systemctl status nginx

● nginx.service - The nginx HTTP and reverse proxy server

Loaded: loaded (/usr/lib/systemd/system/nginx.service; disabled; vendor pres>

Active: active (running) since Sun 2022-10-09 00:56:16 CST; 3min 42s ago

Process: 143804 ExecStart=/usr/sbin/nginx (code=exited, status=0/SUCCESS)

Process: 143802 ExecStartPre=/usr/sbin/nginx -t (code=exited, status=0/SUCCES>

Process: 143799 ExecStartPre=/usr/bin/rm -f /run/nginx.pid (code=exited, stat>

...(output omitted)

[root@backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:16:02:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.128/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1070sec preferred_lft 1070sec

inet 192.168.118.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe16:299/64 scope link noprefixroute

valid_lft forever preferred_lft forever

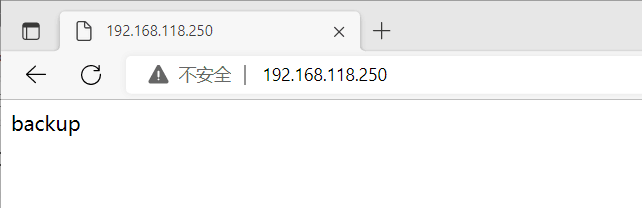

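The browser should now reach backup

Normally, nginx and keepalived both run on the master, while on the backup nginx is stopped and keepalived is running. When nginx on the master fails, monitoring or the script can send an alert email to the administrator. To move service back to the master, fix the nginx problem there by hand, then start nginx and restart keepalived; the master then reclaims the VIP and serves requests again.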

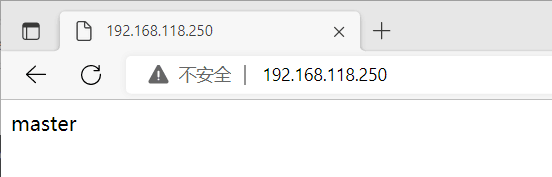

Start nginx and keepalived on master

[root@master ~]# systemctl start nginx

[root@master ~]# systemctl restart keepalived.service

[root@master ~]# ip a # the VIP is back

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:b5:9a:13 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.129/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1777sec preferred_lft 1777sec

inet 192.168.118.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feb5:9a13/64 scope link noprefixroute

valid_lft forever preferred_lft forever

The browser should now reach master

HAProxy load-balancing high availability with keepalived

Environment

| Hostname | IP | Services |

|---|---|---|

| master | 192.168.118.129 | haproxy keepalived |

| backup | 192.168.118.128 | haproxy keepalived |

| RS1 | 192.168.118.130 | nginx |

| RS2 | 192.168.118.131 | httpd |

Preparation

master

# set the hostname on master

[root@localhost ~]# hostnamectl set-hostname master

[root@localhost ~]# bash

# disable firewalld and SELinux

[root@master ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@master ~]# setenforce 0

[root@master ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config

[root@master ~]# reboot

backup

# set the hostname on backup

[root@localhost ~]# hostnamectl set-hostname backup

[root@localhost ~]# bash

# disable firewalld and SELinux

[root@backup ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@backup ~]# setenforce 0

[root@backup ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config

[root@backup ~]# reboot

RS1

# set the hostname on RS1

[root@localhost ~]# hostnamectl set-hostname RS1

[root@localhost ~]# bash

# disable firewalld and SELinux

[root@RS1 ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@RS1 ~]# setenforce 0

[root@RS1 ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config

[root@RS1 ~]# reboot

# install nginx, with web1 as the home page content

[root@RS1 ~]# dnf -y install nginx

[root@RS1 ~]# echo "web1" > /usr/share/nginx/html/index.html

[root@RS1 ~]# systemctl enable --now nginx.service

Created symlink /etc/systemd/system/multi-user.target.wants/nginx.service → /usr/lib/systemd/system/nginx.service.

[root@RS1 ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:80 [::]:*

LISTEN 0 128 [::]:22 [::]:*

[root@RS1 ~]# curl 192.168.118.130

web1

RS2

# set the hostname on RS2

[root@localhost ~]# hostnamectl set-hostname RS2

[root@localhost ~]# bash

# disable firewalld and SELinux

[root@RS2 ~]# systemctl disable --now firewalld.service

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

[root@RS2 ~]# setenforce 0

[root@RS2 ~]# sed -ri 's/^(SELINUX=).*/\1disabled/g' /etc/selinux/config

[root@RS2 ~]# reboot

# install httpd, with web2 as the home page content

[root@RS2 ~]# dnf -y install httpd

[root@RS2 ~]# echo "web2" > /var/www/html/index.html

[root@RS2 ~]# systemctl enable --now httpd.service

Created symlink /etc/systemd/system/multi-user.target.wants/httpd.service → /usr/lib/systemd/system/httpd.service.

[root@RS2 ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 *:80 *:*

LISTEN 0 128 [::]:22 [::]:*

[root@RS2 ~]# curl 192.168.118.131

web2

Deploy the HAProxy load balancer

master

# create the haproxy user

[root@master ~]# useradd -rMs /sbin/nologin haproxy

# install the build dependencies

[root@master ~]# dnf -y install make gcc pcre-devel bzip2-devel openssl-devel systemd-devel wget vim

# download the haproxy source tarball

[root@master ~]# wget https://src.fedoraproject.org/repo/pkgs/haproxy/haproxy-2.6.0.tar.gz/sha512/7bb70bfb5606bbdac61d712bc510c5e8d5a5126ed8827d699b14a2f4562b3bd57f8f21344d955041cee0812c661350cca8082078afe2f277ff1399e461ddb7bb/haproxy-2.6.0.tar.gz

# extract, build and install

[root@master ~]# tar -xf haproxy-2.6.0.tar.gz

[root@master ~]# cd haproxy-2.6.0

[root@master haproxy-2.6.0]# make -j $(grep 'processor' /proc/cpuinfo |wc -l) \

TARGET=linux-glibc \

USE_OPENSSL=1 \

USE_ZLIB=1 \

USE_PCRE=1 \

USE_SYSTEMD=1

[root@master haproxy-2.6.0]# make install PREFIX=/usr/local/haproxy

# copy the binary to /usr/sbin

[root@master haproxy-2.6.0]# cp haproxy /usr/sbin/

[root@master haproxy-2.6.0]# cd

# adjust kernel parameters

[root@master ~]# vim /etc/sysctl.conf

...(omitted)

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

[root@master ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

# write the configuration file

[root@master ~]# mkdir /etc/haproxy

[root@master ~]# vim /etc/haproxy/haproxy.cfg

global

daemon

maxconn 256

defaults

mode http

timeout connect 5000ms

timeout client 50000ms

timeout server 50000ms

frontend http-in

bind *:80

default_backend servers

backend servers

server web01 192.168.118.130:80

server web02 192.168.118.131:80

# write the systemd unit file and start the service

[root@master ~]# vim /usr/lib/systemd/system/haproxy.service

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q

ExecStart=/usr/local/haproxy/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/run/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID

[Install]

WantedBy=multi-user.target

[root@master ~]# systemctl daemon-reload

[root@master ~]# systemctl start haproxy.service

[root@master ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

# check the load-balancing result

[root@master ~]# curl 192.168.118.129

web1

[root@master ~]# curl 192.168.118.129

web2

[root@master ~]# curl 192.168.118.129

web1

[root@master ~]# curl 192.168.118.129

web2
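The alternating web1/web2 output comes from HAProxy's default balancing algorithm, roundrobin, which applies because the `backend servers` section has no `balance` line. A toy model of the idea, ignoring the server weights and health checks that HAProxy also takes into account:

```bash
#!/usr/bin/env bash
# Toy round-robin picker: request N goes to server N mod count.
# Server names mirror the backend above; pick_server is illustrative only.
servers=(web01 web02)
next=0

pick_server() {   # result is returned in REPLY
  REPLY=${servers[next]}
  next=$(( (next + 1) % ${#servers[@]} ))
}

for i in 1 2 3 4; do
  pick_server
  echo "request $i -> $REPLY"
done
```

`pick_server` returns its result in REPLY instead of printing it, because calling it as `$(pick_server)` would run it in a subshell and the counter would never advance.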

backup

[root@backup ~]# dnf -y install nginx

# create the haproxy user

[root@backup ~]# useradd -rMs /sbin/nologin haproxy

# install the build dependencies

[root@backup ~]# dnf -y install make gcc pcre-devel bzip2-devel openssl-devel systemd-devel wget vim

# download the haproxy source tarball

[root@backup ~]# wget https://src.fedoraproject.org/repo/pkgs/haproxy/haproxy-2.6.0.tar.gz/sha512/7bb70bfb5606bbdac61d712bc510c5e8d5a5126ed8827d699b14a2f4562b3bd57f8f21344d955041cee0812c661350cca8082078afe2f277ff1399e461ddb7bb/haproxy-2.6.0.tar.gz

# extract, build and install

[root@backup ~]# tar -xf haproxy-2.6.0.tar.gz

[root@backup ~]# cd haproxy-2.6.0

[root@backup haproxy-2.6.0]# make -j $(grep 'processor' /proc/cpuinfo |wc -l) \

TARGET=linux-glibc \

USE_OPENSSL=1 \

USE_ZLIB=1 \

USE_PCRE=1 \

USE_SYSTEMD=1

[root@backup haproxy-2.6.0]# make install PREFIX=/usr/local/haproxy

# copy the binary to /usr/sbin

[root@backup haproxy-2.6.0]# cp haproxy /usr/sbin/

[root@backup haproxy-2.6.0]# cd

# adjust kernel parameters

[root@backup ~]# vim /etc/sysctl.conf

...(omitted)

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

[root@backup ~]# sysctl -p

net.ipv4.ip_nonlocal_bind = 1

net.ipv4.ip_forward = 1

# write the configuration file

[root@backup ~]# mkdir /etc/haproxy

[root@backup ~]# vim /etc/haproxy/haproxy.cfg

global

daemon

maxconn 256

defaults

mode http

timeout connect 5000ms

timeout client 50000ms

timeout server 50000ms

frontend http-in

bind *:80

default_backend servers

backend servers

server web01 192.168.118.130:80

server web02 192.168.118.131:80

# write the systemd unit file and start the service

[root@backup ~]# vim /usr/lib/systemd/system/haproxy.service

[Unit]

Description=HAProxy Load Balancer

After=syslog.target network.target

[Service]

ExecStartPre=/usr/local/haproxy/sbin/haproxy -f /etc/haproxy/haproxy.cfg -c -q

ExecStart=/usr/local/haproxy/sbin/haproxy -Ws -f /etc/haproxy/haproxy.cfg -p /var/run/haproxy.pid

ExecReload=/bin/kill -USR2 $MAINPID

[Install]

WantedBy=multi-user.target

[root@backup ~]# systemctl daemon-reload

[root@backup ~]# systemctl start haproxy.service

[root@backup ~]# ss -antl

State Recv-Q Send-Q Local Address:Port Peer Address:Port Process

LISTEN 0 128 0.0.0.0:80 0.0.0.0:*

LISTEN 0 128 0.0.0.0:22 0.0.0.0:*

LISTEN 0 128 [::]:22 [::]:*

# check the load-balancing result

[root@backup ~]# curl 192.168.118.128

web1

[root@backup ~]# curl 192.168.118.128

web2

[root@backup ~]# curl 192.168.118.128

web1

[root@backup ~]# curl 192.168.118.128

web2

# stop the load balancer on backup for now; the notify script starts it when backup becomes MASTER

[root@backup ~]# systemctl stop haproxy.service

Deploy keepalived high availability

master

# install keepalived first

[root@master ~]# dnf -y install keepalived

# edit the configuration file

[root@master ~]# vim /etc/keepalived/keepalived.conf

[root@master ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb01

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# start the service

[root@master ~]# systemctl start keepalived.service

# access the service through the virtual IP

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:b5:9a:13 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.129/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1066sec preferred_lft 1066sec

inet 192.168.118.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feb5:9a13/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@master ~]# curl 192.168.118.250

web1

[root@master ~]# curl 192.168.118.250

web2

[root@master ~]# curl 192.168.118.250

web1

[root@master ~]# curl 192.168.118.250

web2

backup

# install keepalived first

[root@backup ~]# dnf -y install keepalived

# edit the configuration file

[root@backup ~]# vim /etc/keepalived/keepalived.conf

[root@backup ~]# cat /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# start the service

[root@backup ~]# systemctl start keepalived.service

Write the scripts

master

[root@master ~]# mkdir /scripts

[root@master ~]# cd /scripts/

[root@master scripts]# vim check_haproxy.sh

#!/bin/bash

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -lt 1 ];then

systemctl stop keepalived

fi

[root@master scripts]# vim notify.sh

#!/bin/bash

VIP=$2

case "$1" in

master)

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -lt 1 ];then

systemctl start haproxy

fi

;;

backup)

haproxy_status=$(ps -ef|grep -Ev "grep|$0"|grep '\bhaproxy\b'|wc -l)

if [ $haproxy_status -gt 0 ];then

systemctl stop haproxy

fi

;;

esac

[root@master scripts]# chmod +x check_haproxy.sh notify.sh

[root@master scripts]# ll

total 8

-rwxr-xr-x 1 root root 145 Oct 10 00:32 check_haproxy.sh

-rwxr-xr-x 1 root root 328 Oct 10 00:38 notify.sh

backup

[root@backup ~]# mkdir /scripts

[root@backup ~]# cd /scripts/

[root@backup scripts]# scp root@192.168.118.129:/scripts/notify.sh .

The authenticity of host '192.168.118.129 (192.168.118.129)' can't be established.

ECDSA key fingerprint is SHA256:+RwKWt1ErJuzJKcNeLslD46lbAtIO3wmNQk9BhIrtxs.

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '192.168.118.129' (ECDSA) to the list of known hosts.

root@192.168.118.129's password:

notify.sh 100% 328 353.0KB/s 00:00

[root@backup scripts]# ll

total 4

-rwxr-xr-x 1 root root 328 Oct 10 00:41 notify.sh

Add the monitoring scripts to the keepalived configuration

master

[root@master ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id lb01

}

vrrp_script haproxy_check { # add this block

script "/scripts/check_haproxy.sh"

interval 1

weight -20

}

vrrp_instance VI_1 {

state MASTER

interface ens33

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

track_script { # add this block

haproxy_check

}

notify_master "/scripts/notify.sh master 192.168.118.250"

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# restart the service

[root@master ~]# systemctl restart keepalived.service

backup

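The backup does not need to check whether haproxy is healthy. It only has to start haproxy when it is promoted to MASTER and stop it when demoted to BACKUP; the latter is what the commented-out notify_backup line below would do.

[root@backup ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived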

global_defs {

router_id lb02

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

virtual_router_id 51

priority 90

advert_int 1

authentication {

auth_type PASS

auth_pass 123456

}

virtual_ipaddress {

192.168.118.250

}

notify_master "/scripts/notify.sh master 192.168.118.250" # only this line needs to be added

# notify_backup "/scripts/notify.sh backup 192.168.118.250"

}

virtual_server 192.168.118.250 80 {

delay_loop 6

lb_algo rr

lb_kind DR

persistence_timeout 50

protocol TCP

real_server 192.168.118.129 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

real_server 192.168.118.128 80 {

weight 1

TCP_CHECK {

connect_port 80

connect_timeout 3

nb_get_retry 3

delay_before_retry 3

}

}

}

# restart the service

[root@backup ~]# systemctl restart keepalived.service

Test

Simulate an haproxy failure

master

[root@master ~]# curl 192.168.118.250

web1

[root@master ~]# curl 192.168.118.250

web2

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:b5:9a:13 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.129/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1404sec preferred_lft 1404sec

inet 192.168.118.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:feb5:9a13/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@master ~]# systemctl stop haproxy.service

[root@master ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:b5:9a:13 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.129/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1322sec preferred_lft 1322sec

inet6 fe80::20c:29ff:feb5:9a13/64 scope link noprefixroute

valid_lft forever preferred_lft forever

backup takes over the VIP

[root@backup ~]# ip a

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc fq_codel state UP group default qlen 1000

link/ether 00:0c:29:16:02:99 brd ff:ff:ff:ff:ff:ff

inet 192.168.118.128/24 brd 192.168.118.255 scope global dynamic noprefixroute ens33

valid_lft 1328sec preferred_lft 1328sec

inet 192.168.118.250/32 scope global ens33

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe16:299/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@backup ~]# curl 192.168.118.250

web1

[root@backup ~]# curl 192.168.118.250

web2

[root@backup ~]# curl 192.168.118.250

web1

[root@backup ~]# curl 192.168.118.250

web2

Zhejiang Public Security filing No. 33010602011771