Web Crawler Assignment

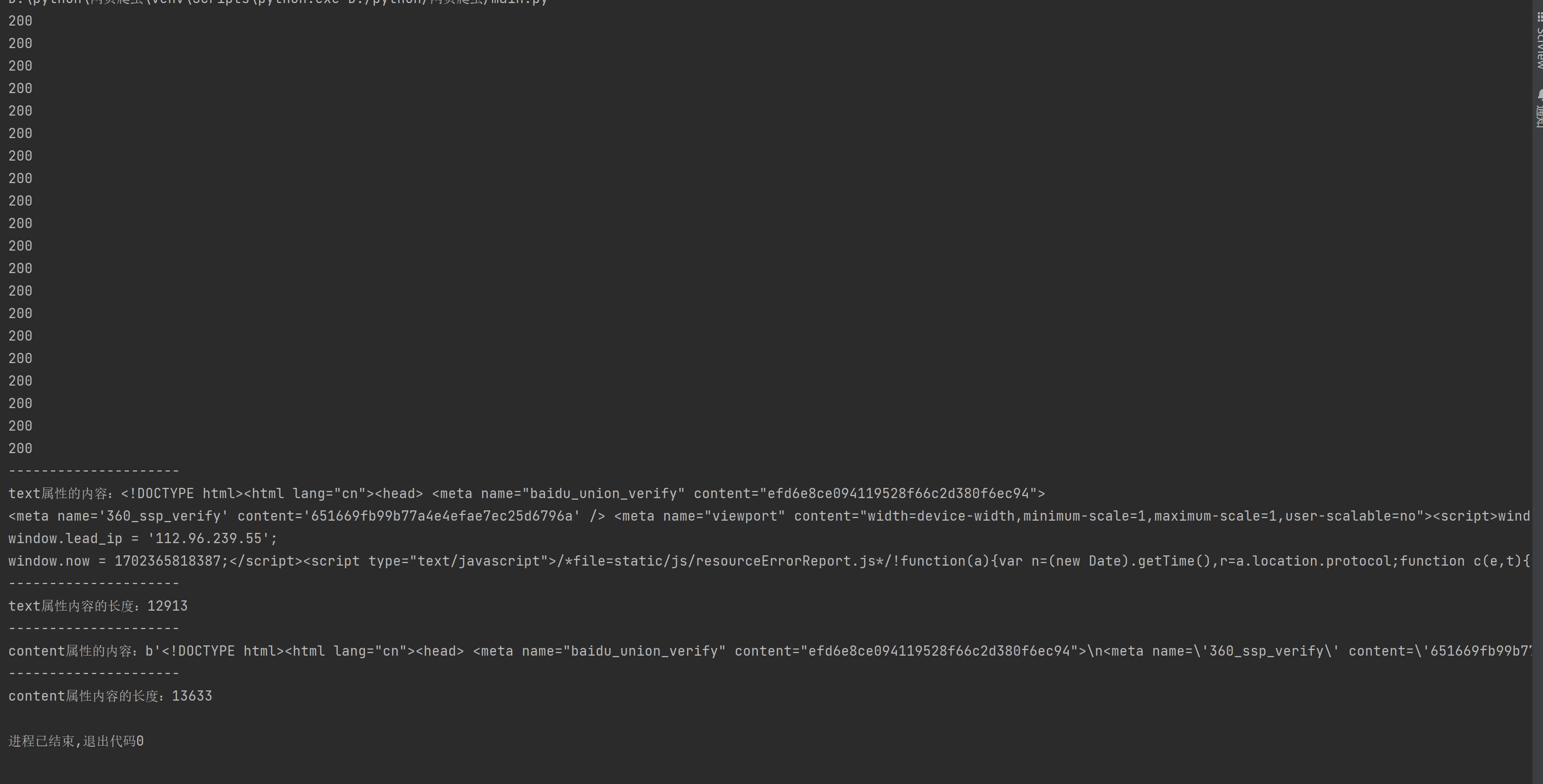

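(2) Use the get() function of the requests library to visit the Sogou home page 20 times. Print the returned status code and the content of the text attribute, then compute the length of the page content returned by the text attribute and by the content attribute.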

    import requests

    url = "https://www.sogou.com"
    for i in range(20):
        r = requests.get(url)
        print(r.status_code)
        print('---------------------')
        # r.text is the response body decoded to a str
        print("Content of the text attribute: {}".format(r.text))
        print('---------------------')
        a = len(r.text)
        print("Length of the text attribute: {}".format(a))
        print('---------------------')
        # r.content is the raw response body as bytes
        print("Content of the content attribute: {}".format(r.content))
        print('---------------------')
        b = len(r.content)
        print("Length of the content attribute: {}".format(b))

Output:

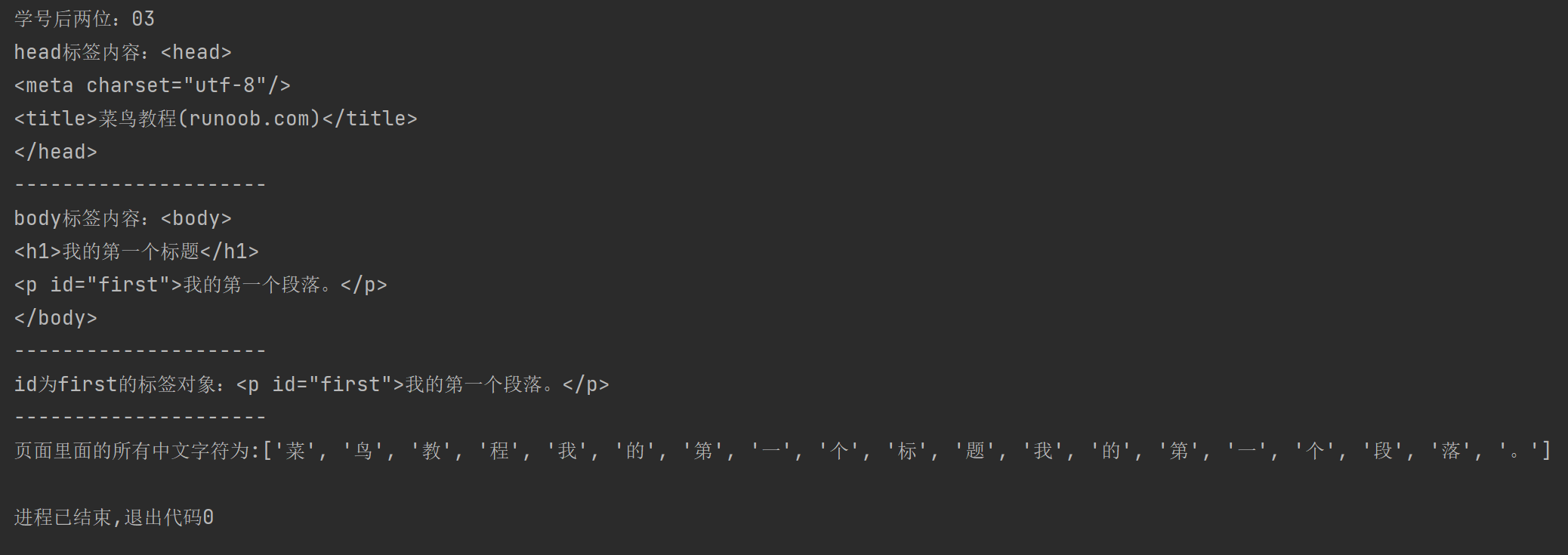
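The two lengths usually differ because `r.text` is a `str` counted in characters, while `r.content` is `bytes` counted in bytes. A minimal sketch of the effect without any network access, assuming UTF-8 encoding; `page_text` is a made-up fragment, not the real Sogou page:

```python
# Hypothetical page fragment; any string with non-ASCII characters shows the effect.
page_text = "<title>搜狗搜索</title>"

# Encoding to UTF-8 mimics the relationship between r.text and r.content.
page_bytes = page_text.encode("utf-8")

text_len = len(page_text)      # number of characters
content_len = len(page_bytes)  # number of bytes

print("text length:", text_len)
print("content length:", content_len)
```

Each Chinese character here takes three bytes in UTF-8, so the byte count is larger than the character count. For a pure ASCII page the two lengths would be equal.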

(3) Below is a simple HTML page. Save it as a string and complete the tasks that follow.

    <!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>菜鸟教程(runoob.com)</title>
    </head>
    <body>
    <h1>我的第一个标题</h1>
    <p id="first">我的第一个段落。</p>
    </body>
    <table border="1">
    <tr>
    <td>row 1, cell 1</td>
    <td>row 1, cell 2</td>
    </tr>
    <tr>
    <td>row 2, cell 1</td>
    <td>row 2, cell 2</td>
    </tr>
    </table>
    </html>

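Requirements: a. Print the content of the head tag and the last two digits of your student ID

b. Get the content of the body tag

c. Get the tag object whose id is first

d. Get and print the Chinese characters in the HTML page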

    import re

    from bs4 import BeautifulSoup

    text = '''<!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>菜鸟教程(runoob.com)</title>
    </head>
    <body>
    <h1>我的第一个标题</h1>
    <p id="first">我的第一个段落。</p>
    </body>
    <table border="1">
    <tr>
    <td>row 1, cell 1</td>
    <td>row 1, cell 2</td>
    </tr>
    <tr>
    <td>row 2, cell 1</td>
    <td>row 2, cell 2</td>
    </tr>
    </table>
    </html>'''
    # Name the parser explicitly so the result does not depend on which parsers are installed
    soup = BeautifulSoup(text, "html.parser")
    print("Last two digits of student ID: 03")
    print("Content of the head tag: {}".format(soup.head))
    print('---------------------')
    print("Content of the body tag: {}".format(soup.body))
    print('---------------------')
    # Look the tag up by its id instead of relying on it being the first <p>
    print("Tag object with id first: {}".format(soup.find(id="first")))
    print('---------------------')
    # Match runs of CJK ideographs only (U+4E00 to U+9FFF)
    print('All Chinese characters in the page: {}'.format(re.findall('[\u4e00-\u9fff]+', soup.text)))

Output:

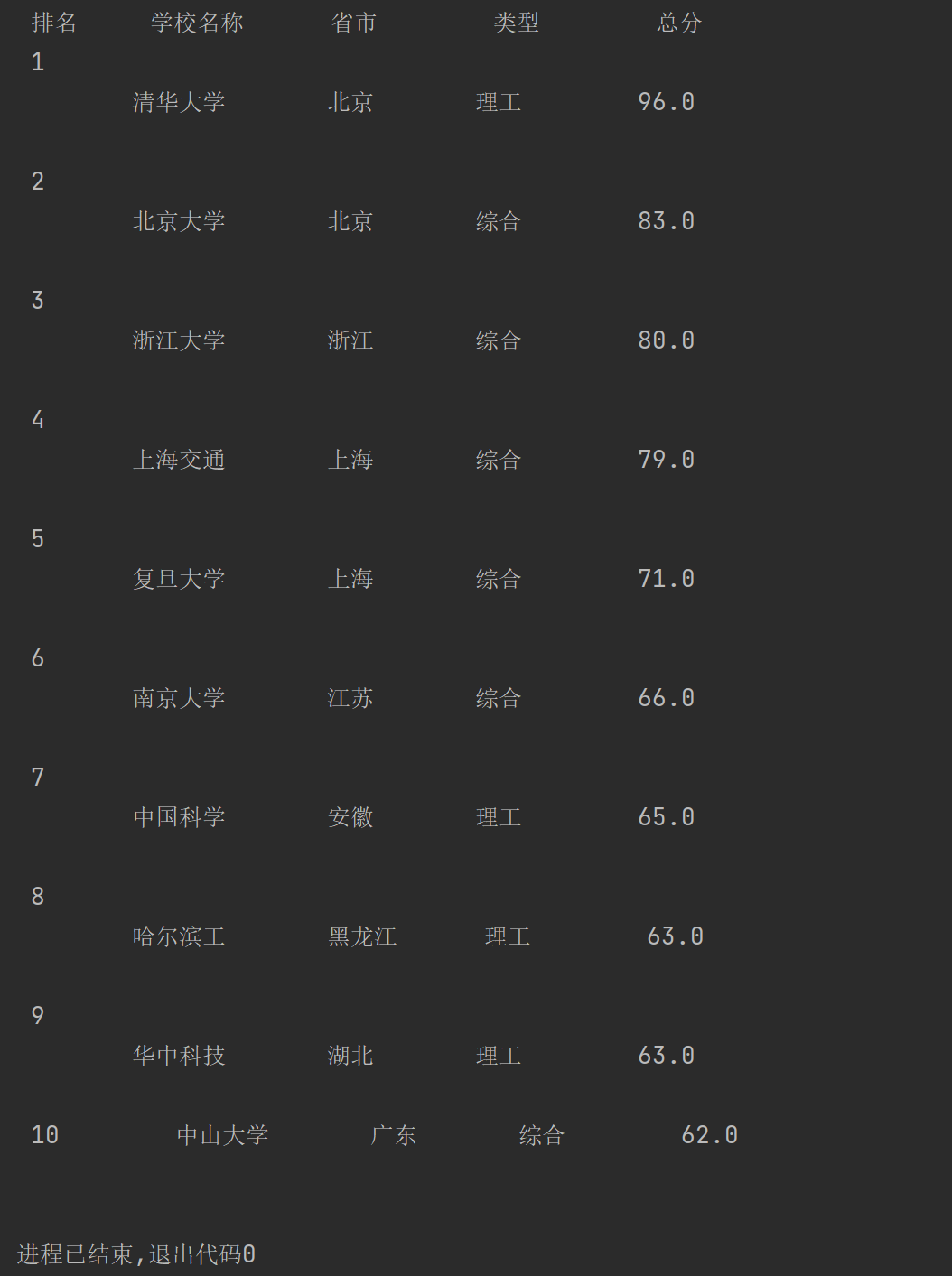
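Task d comes down to a character-range match. A small sketch using only the standard library, assuming "Chinese characters" means the CJK Unified Ideographs block U+4E00 to U+9FFF; the helper name `chinese_runs` is made up for illustration:

```python
import re

# Runs of one or more CJK ideographs. Punctuation such as "。" (U+3002)
# lies outside this range and is therefore not matched.
CJK_RUN = re.compile(r"[\u4e00-\u9fff]+")


def chinese_runs(s):
    """Return every run of consecutive Chinese characters in s."""
    return CJK_RUN.findall(s)


sample = '<h1>我的第一个标题</h1><p id="first">我的第一个段落。</p>'
print(chinese_runs(sample))
```

Using `+` rather than `+?` returns whole runs instead of single characters, which is usually easier to read in the printed list.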

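(4) Crawl the Chinese university ranking site: https://www.shanghairanking.cn/rankings/bcur/201811

Requirements: (i) Crawl the university ranking (student IDs ending in 3 or 4 crawl the year 2016)

(ii) Save the crawled data as a CSV file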
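Since the year to crawl depends on the student ID, the URL can be built from the year. This is only a sketch: the trailing `11` is inferred from the two URLs that appear in this post (201811 and 201611) and may not hold for other years; `ranking_url` is a hypothetical helper name.

```python
BASE = "https://www.shanghairanking.cn/rankings/bcur/"


def ranking_url(year):
    """Build the ranking URL for a year, assuming the '<year>11' pattern."""
    return "{}{}11".format(BASE, year)


print(ranking_url(2016))
```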

    import re

    import pandas as pd
    import requests
    from bs4 import BeautifulSoup


    def getHTMLText(url):
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf-8'
            return r.text
        except requests.RequestException:
            # Return an empty page on any network or HTTP error
            return ""


    def fillUnivList(soup):
        # Build a fresh list on every call so repeated calls do not duplicate rows
        allUniv = []
        for tr in soup.find_all('tr'):
            ltd = tr.find_all('td')
            if len(ltd) == 0:
                continue
            singleUniv = []
            for td in ltd:
                temp = re.findall('[\u4e00-\u9fff]+', str(td))
                if td.string is not None and td.string != "[]":
                    singleUniv.append(td.string)
                if temp:
                    # Join all runs of Chinese characters found in the cell
                    singleUniv.append(''.join(temp))
            allUniv.append(singleUniv)
        return allUniv


    def printUnivList(allUniv, num):
        print("{:^5}{:^10}{:^10}{:^10}{:^10}".format("Rank", "University", "Province/City", "Type", "Total score"))
        for u in allUniv[:num]:
            # The slice offsets depend on the whitespace padding of the page as crawled.
            # They are applied for display only; the stored rows are left unchanged.
            rank = u[0][29:31]
            name = u[1][:4]
            score = u[4][25:31]
            print("{:^5}{:^10}{:^10}{:^10}{:^10}".format(rank, name, u[2], u[3], score))


    def main():
        url = 'https://www.shanghairanking.cn/rankings/bcur/201611'
        html = getHTMLText(url)
        soup = BeautifulSoup(html, "html.parser")
        list1 = fillUnivList(soup)
        printUnivList(list1, 10)
        return list1


    # Take the element at index count from each nested list
    def combination(list1, count):
        list2 = []
        for i in list1:
            list2.append(i[count])
        return list2


    # Arrange the crawled data into a DataFrame
    def deal_data(list1):
        data = pd.DataFrame({
            "Rank": combination(list1, 0),
            "University": combination(list1, 1),
            "Province/City": combination(list1, 2),
            "Type": combination(list1, 3),
            "Total score": combination(list1, 4)
        })
        return data


    # Fetch the page once, print the top 10, then save every row
    list1 = main()
    data = deal_data(list1)
    data.to_csv('University_grade.csv', index=False)

Output:

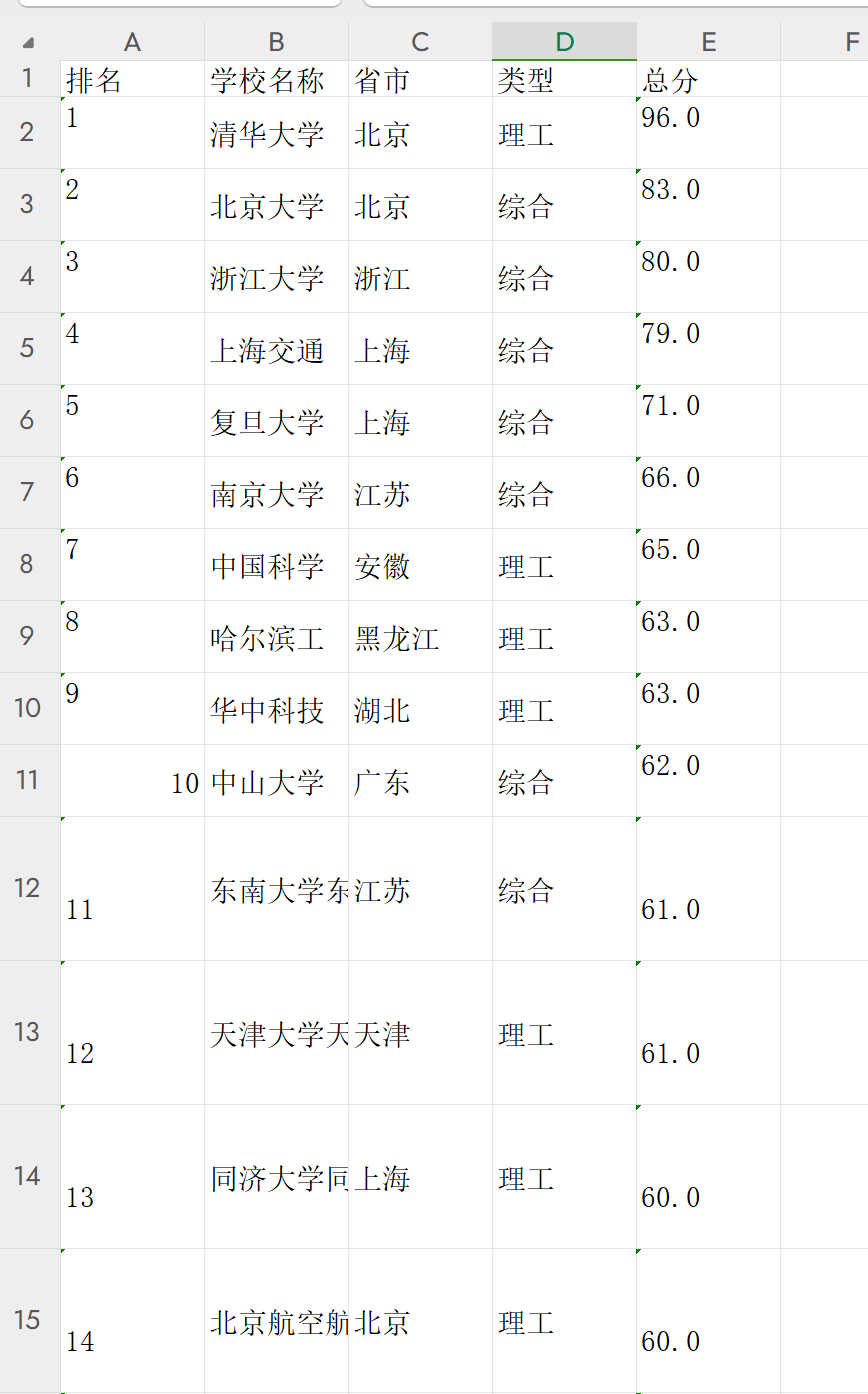
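The fixed slice offsets used for printing only work while the page keeps the same whitespace padding. A possible alternative, sketched with the standard library only, is to normalize whitespace in every cell before saving. The row below is placeholder data, not real ranking values, and `clean_cell` and `rows_to_csv` are hypothetical helper names:

```python
import csv
import io


def clean_cell(value):
    """Collapse runs of whitespace and trim both ends of a cell."""
    return " ".join(value.split())


def rows_to_csv(header, rows):
    """Return CSV text for the header and the cleaned rows."""
    buf = io.StringIO(newline="")
    writer = csv.writer(buf)
    writer.writerow(header)
    for row in rows:
        writer.writerow([clean_cell(c) for c in row])
    return buf.getvalue()


header = ["rank", "name", "province", "type", "score"]
# Placeholder row with the kind of padding a crawled table cell may carry.
rows = [["\n      1\n    ", "University A", " Province X ", "Type Y", "\n  99.9  \n"]]

csv_text = rows_to_csv(header, rows)
print(csv_text)
```

To write a file instead of a string, the same writer can be pointed at `open(path, "w", newline="", encoding="utf-8-sig")`; the `utf-8-sig` encoding adds a byte order mark that helps Excel detect UTF-8 when the cells contain Chinese text.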

Screenshot of the output University_grade.csv file:

浙公网安备 33010602011771号
