Web Crawler Homework

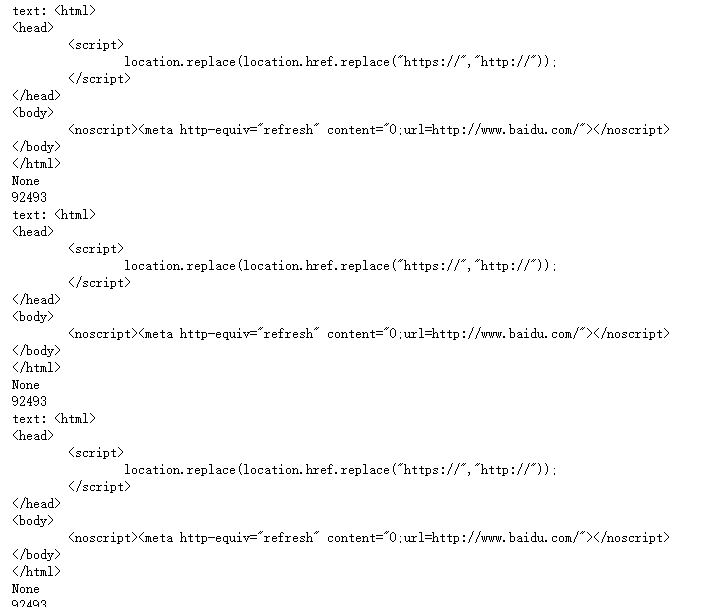

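1. Use the `get()` function of the requests library to visit the website below 20 times. Print the returned status code and the `text` content, then compute the length of the page content returned by the `text` attribute and by the `content` attribute.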

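    import requests

    url = "https://www.baidu.com/"

    def getHTMLText(url):
        """Fetch url and return the Response object, or None on failure."""
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = "utf-8"
            return r
        except requests.RequestException:
            return None

    for i in range(20):
        r = getHTMLText(url)
        if r is None:
            print("request failed")
            continue
        print("status:", r.status_code)
        print("text:", r.text)
        print("len(text):", len(r.text))        # length in characters
        print("len(content):", len(r.content))  # length in bytes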

Output (abridged):

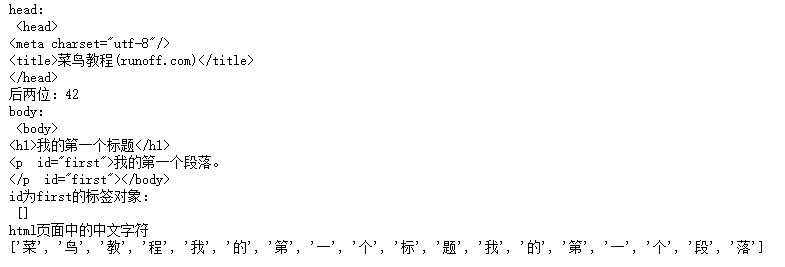
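The two lengths usually differ because `r.text` is a decoded `str`, so `len()` counts characters, while `r.content` is the raw `bytes`, so `len()` counts bytes. The sketch below illustrates this offline. The sample string and variable names are hypothetical stand-ins for a real response body, and it assumes the page is served as UTF-8:

```python
# Hypothetical sample standing in for a response body; no network access needed.
sample_text = "<title>百度一下</title>"

# What r.content would hold if the server sent this text encoded as UTF-8.
sample_content = sample_text.encode("utf-8")

text_len = len(sample_text)        # counts characters
content_len = len(sample_content)  # counts bytes

print("len(text):", text_len)
print("len(content):", content_len)
```

Each of the four Chinese characters takes three bytes in UTF-8, so the byte count exceeds the character count by eight here. For a page made up only of ASCII characters the two lengths would match.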

2. Scraping a page held in a string

    from bs4 import BeautifulSoup
    import re

    # Parse an HTML document held in a string; name the parser explicitly
    # so BeautifulSoup does not have to guess one.
    soup = BeautifulSoup('''<!DOCTYPE html>
    <html>
    <head>
    <meta charset="utf-8">
    <title>菜鸟教程(runoff.com)</title>
    </head>
    <body>
    <h1>我的第一个标题</h1>
    <p id="first">我的第一个段落。</p>
    </body>
    <table border="1">
    <tr>
    <td>row 1, cell 1</td>
    <td>row 1, cell 2</td>
    </tr>
    <tr>
    <td>row 2, cell 1</td>
    <td>row 2, cell 2</td>
    </tr>
    </table>
    </html>''', "html.parser")

    print("head:\n", soup.head, "\nLast two digits: 42")
    print("body:\n", soup.body)
    print("Tags with id 'first':\n", soup.find_all(id="first"))

    st = soup.text
    # U+4E00..U+9FFF is the Unicode range of CJK unified ideographs
    cc = re.findall('[\u4e00-\u9fff]', st)
    print("Chinese characters in the HTML page:")
    print(cc)

Output:

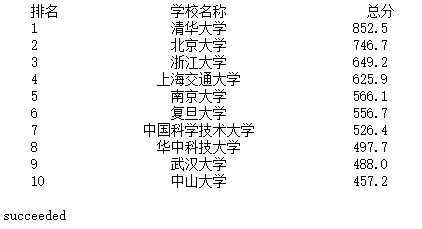

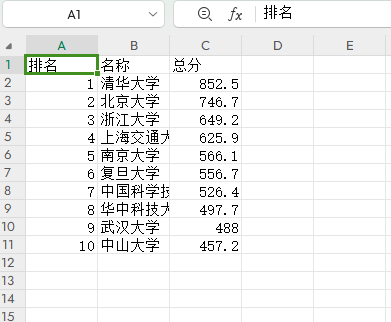
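The pattern in the code above returns one list element per character. If whole phrases are more useful, adding `+` to the same character class collects each contiguous run instead. A small sketch on a hypothetical sample string (it is not part of the assignment):

```python
import re

# Hypothetical sample text mixing Chinese and ASCII.
sample = "我的第一个标题 row 1, cell 1 我的第一个段落。"

# Same range as in the code above: CJK unified ideographs U+4E00..U+9FFF.
single_chars = re.findall('[\u4e00-\u9fff]', sample)  # one item per character
runs = re.findall('[\u4e00-\u9fff]+', sample)         # one item per contiguous run

print(single_chars)
print(runs)
```

The full-width full stop `。` (U+3002) lies outside this range, so it is dropped in both cases.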

3. Top ten Chinese universities in the 2020 ShanghaiRanking (软科) list

    import requests
    from bs4 import BeautifulSoup
    import bs4
    import csv

    def getHTMLText(url):
        """Return the page text, or an empty string if the request fails."""
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = r.apparent_encoding
            return r.text
        except requests.RequestException:
            return ""

    def fillUnivList(ulist, html):
        soup = BeautifulSoup(html, "html.parser")
        tbody = soup.find('tbody')
        if tbody is None:  # request failed or the page layout changed
            return
        for tr in tbody.children:
            # Skip children that are not bs4 Tag objects (e.g. whitespace strings)
            if isinstance(tr, bs4.element.Tag):
                a = tr('a')
                tds = tr('td')  # all <td> cells of this row, as a list
                ulist.append([tds[0].text.strip(), a[0].text.strip(), tds[4].text.strip()])

    def printUnivList(ulist, num):
        """Print the first num entries of ulist as an aligned table."""
        # Slots 0, 1, 2 hold the values; {3} makes slot 1 pad with argument 3,
        # chr(12288), the full-width (ideographic) space.
        tplt = "{0:^10}\t{1:{3}^12}\t{2:^10}"
        # Header: rank, university name, total score
        print(tplt.format("排名", "学校名称", "总分", chr(12288)))
        for u in ulist[:num]:
            print(tplt.format(u[0], u[1], u[2], chr(12288)))
        print()

    def writeList(ulist, num):
        """Write the first num entries of ulist to rank1-10.csv."""
        with open('rank1-10.csv', 'w', encoding='gbk', newline='') as f:
            csv_writer = csv.writer(f)
            csv_writer.writerow(['排名', '名称', '总分'])  # rank, name, total score
            for u in ulist[:num]:
                csv_writer.writerow([u[0], u[1], u[2]])
        print('succeeded')

    def main():
        uinfo = []
        url = "https://www.shanghairanking.cn/rankings/bcur/2020"
        html = getHTMLText(url)
        fillUnivList(uinfo, html)
        printUnivList(uinfo, 10)
        writeList(uinfo, 10)

    if __name__ == "__main__":
        main()

Output:

Operator: 2-42
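A note on the `{1:{3}^12}` slot used in `printUnivList`: it is a nested replacement field, where argument 3 supplies the fill character for slot 1. Padding with `chr(12288)` (the ideographic space, U+3000) helps keep columns of Chinese text aligned, because an ASCII space is usually narrower than a Chinese character. A minimal sketch, with a made-up university name and score used purely for illustration:

```python
tplt = "{0:^10}\t{1:{3}^12}\t{2:^10}"
fill = chr(12288)  # ideographic (full-width) space, U+3000

# Hypothetical row: "示例大学" ("Example University") and "99.9" are not real data.
row = tplt.format("1", "示例大学", "99.9", fill)

# The middle column is centred in 12 characters and padded with the full-width space.
name_field = row.split("\t")[1]

print(row)
print(repr(name_field))
```

The name has four characters, so the field gets four full-width spaces on each side. Slots 0 and 2 have no explicit fill character and are padded with ordinary spaces.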

