【爬虫】豆瓣新书速递页面爬取数据

《python数据分析入门》书籍上的例子

import requests

from bs4 import BeautifulSoup

import pandas as pd

# 请求数据

def get_data():

url = 'https://book.douban.com/latest'

headers = {

'User-Agent': "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/86.0.4240.111 Safari/537.36"}

data = requests.get(url, headers=headers)

# print(data.text)

return data

# 解析数据

def parse_data(data):

soup = BeautifulSoup(data.text, 'lxml')

# print(soup)

# 观察到网页上的书籍按左右两边分布,按照标签分别提取

book_left = soup.find('ul', {'class': 'cover-col-4 clearfix'})

book_left = book_left.find_all('li')

book_right = soup.find('ul', {'class': 'cover-col-4 pl20 clearfix'})

book_right = book_right.find_all('li')

books = list(book_left) + list(book_right)

# 对每一个图书区块进行相同的操作,获取图书信息

img_urls = []

titles = []

authors = []

ratings = []

details = []

for book in books:

# 图书封面图片url地址

img_url = book.find_all('a')[0].find('img').get('src')

img_urls.append(img_url)

# 图书标题

# get_text() 此方法可以去除find返回对象内的html标签,返回纯文本

title = book.find_all('a')[1].get_text()

titles.append(title)

# print(title)

# 评价星级

rating = book.find('p', {'class': 'rating'}).get_text()

# 删除\n符号和前后空格

rating = rating.replace('\n', '').replace(' ', '')

ratings.append(rating)

# 作者及出版信息

author = book.find('p', {'class': 'color-gray'}).get_text()

# replace('原文字','要切换的文字')

author = author.replace('\n', '').replace(' ', '')

authors.append(author)

# 图书简介

detail = book.find_all('p')[2].get_text()

detail = detail.replace('\n', '').replace(' ', '')

details.append(detail)

print(img_urls)

print(titles)

print(ratings)

print(authors)

print(details)

return img_urls, titles, ratings, authors, details

# 存储数据

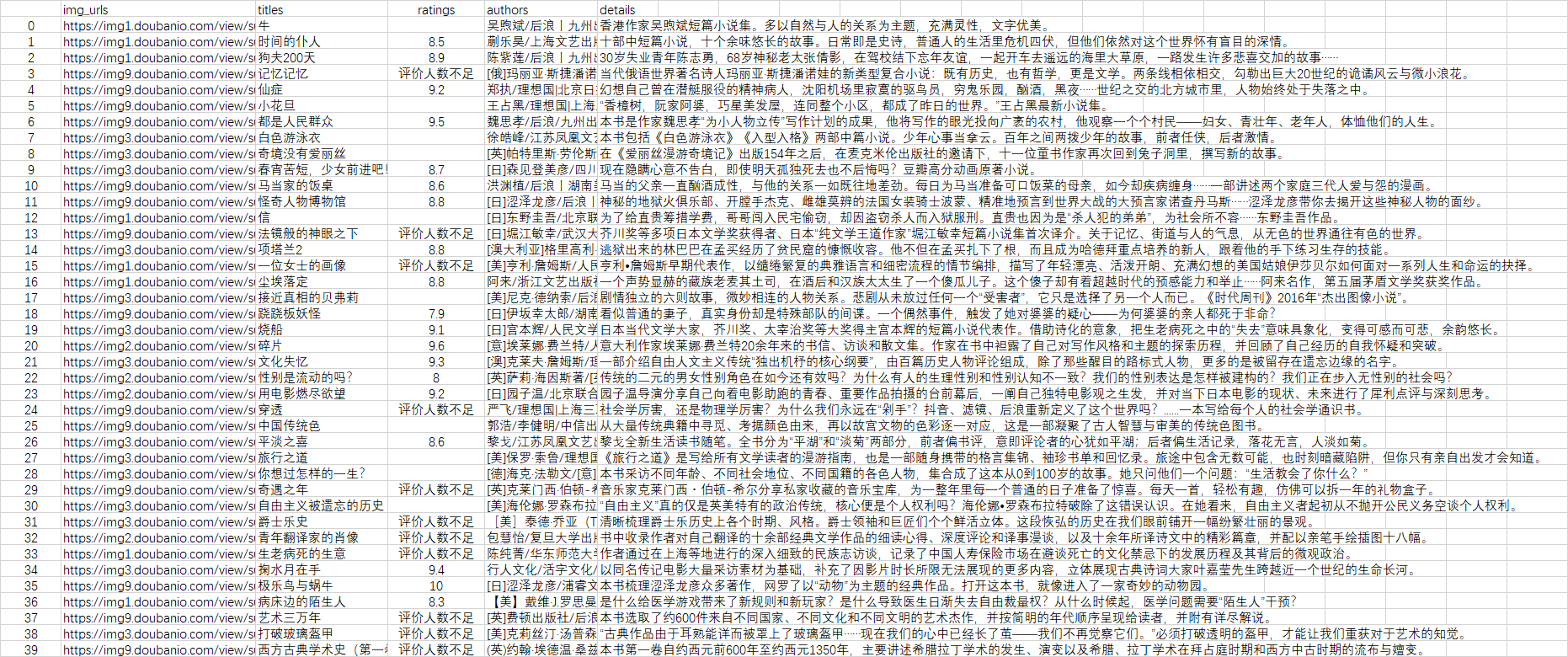

def save_data(img_urls, titles, ratings, authors, details):

result = pd.DataFrame()

result['img_urls'] = img_urls

result['titles'] = titles

result['ratings'] = ratings

result['authors'] = authors

result['details'] = details

result.to_csv('result.csv', encoding="utf_8_sig")

# 开始爬取

def run():

data = get_data()

img_urls, titles, ratings, authors, details = parse_data(data)

save_data(img_urls, titles, ratings, authors, details)

if __name__ == '__main__':

run()

作者:不懂就问薛定谔的猫

本博客所有文章仅用于学习、研究和交流目的,欢迎非商业性质转载。

博主的文章没有高度、深度和广度,只是凑字数。由于博主的水平不高,不足和错误之处在所难免,希望大家能够批评指出。

博主是利用读书、参考、引用、抄袭、复制和粘贴等多种方式打造成自己的文章,请原谅博主成为一个无耻的文档搬运工!

浙公网安备 33010602011771号

浙公网安备 33010602011771号