[Cloud DA] Serverless Framework with AWS

Links to each section

Table of Contents:

- Part 0: Serverless Project structure

- Part 1: DynamoDB & ApiGateway

- Part 2: Event Processing with WebSocket and DynamoDB Stream

- Part 3: Full Text Search with ElasticSearch

- Part 4: Fan-out Notification with SNS

- Part 5: Auth with Auth0

- Part 6: Using Lambda Middleware

Full content here

Serverless Project Structure

New configuration format

Please notice that the default structure of the TypeScript project has slightly changed, and it now contains serverless.ts file instead of serverless.yaml. You can still configure the project using YAML configuration as demonstrated in the course, but now the Serverless framework provides more configuration options, such as yml, json, js, and ts as described on serverless documentation. All functionalities work as well in the other available service file formats.
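As a rough illustration of the TypeScript format, the sketch below shows how a small service definition might look in `serverless.ts`. The `ServiceConfig` interface is a hypothetical, trimmed-down stand-in defined here so that the example is self-contained; a real project would normally use the types that ship with the project template instead.

```typescript
// Hypothetical, trimmed-down shape of a service definition (an assumption
// made for this example, not the framework's own type).
interface ServiceConfig {
  service: string;
  plugins: string[];
  provider: { name: "aws"; runtime: string; stage: string; region: string };
  functions: Record<string, { handler: string; events: object[] }>;
}

// Same settings as the YAML examples in this post, written as a typed object.
// Serverless variables such as ${opt:stage, 'dev'} stay plain strings.
const serverlessConfiguration: ServiceConfig = {
  service: "serverless-udagram-app",
  plugins: ["serverless-webpack"],
  provider: {
    name: "aws",
    runtime: "nodejs14.x",
    stage: "${opt:stage, 'dev'}",
    region: "${opt:region, 'us-east-1'}",
  },
  functions: {
    GetGroups: {
      handler: "src/lambda/http/getGroups.handler",
      events: [{ http: { method: "get", path: "groups", cors: true } }],
    },
  },
};

// In a real serverless.ts file this object would be exported, for example:
// module.exports = serverlessConfiguration;
```

The benefit of this format is that the compiler can catch misspelled keys before deployment, which plain YAML cannot do.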

Serverless Plugins

Serverless framework's functionality can be extended using dozens of plugins developed for it. During the course we will use some of the most popular plugins, and you will see when and how to use them.

- When you are looking for a plugin for your project you can use the plugins catalog on the Serverless Framework website.

- If you can't find a plugin that would fit your needs, you can always implement your own. You can start with this guide if you want to explore this option.

Serverless Framework Events

If you want to learn more, the official documentation lists every event that Serverless Framework supports, with an example for each event and a description of all its parameters.

CloudFormation Resources

The AWS documentation provides a reference for all resource types that CloudFormation supports: AWS Resource and Property Types Reference.

Most AWS resources can be created with CloudFormation, but in rare cases you may encounter a resource that CloudFormation does not support. In that case you have to use the AWS API, the AWS CLI, or the AWS console.

INSTALL

npm install -g serverless

CREATE PROJECT

serverless create --template aws-nodejs-typescript --path folder-name

INSTALL PLUGIN

npm install plugin-name --save-dev

DEPLOY PROJECT

sls deploy -v

- Install serverless:

npm install -g serverless

- Set up a new user in IAM named "serverless" and save the access key and secret key.

- Configure serverless to use the AWS credentials you just set up:

sls config credentials --provider aws --key YOUR_ACCESS_KEY --secret YOUR_SECRET_KEY --profile IAM_USER_NAME

To create a serverless boilerplate project:

sls create --template aws-nodejs-typescript --path 10-udagram-app

Deploy the application

sls deploy -v

Part 1 DynamoDB & ApiGateway

Build a Lambda function to get data from DynamoDB:

src/lambda/http/getGroups.ts

import * as AWS from "aws-sdk";
import {
  APIGatewayProxyEvent,
  APIGatewayProxyHandler,
  APIGatewayProxyResult,
} from "aws-lambda";

const docClient = new AWS.DynamoDB.DocumentClient();
const groupTables = process.env.GROUPS_TABLE;

export const handler: APIGatewayProxyHandler = async (
  event: APIGatewayProxyEvent
): Promise<APIGatewayProxyResult> => {
  // Scan reads every item in the Groups table
  const result = await docClient
    .scan({
      TableName: groupTables,
    })
    .promise();
  const items = result.Items;

  return {
    statusCode: 200,
    headers: {
      // Allow requests from any origin (CORS)
      "Access-Control-Allow-Origin": "*",
    },
    body: JSON.stringify({
      items,
    }),
  };
};

serverless.yml:

service:
  name: serverless-udagram-app

plugins:
  - serverless-webpack

provider:
  name: aws
  runtime: nodejs14.x
  stage: ${opt:stage, 'dev'}
  region: ${opt:region, 'us-east-1'}
  environment:
    GROUPS_TABLE: Groups-${self:provider.stage}
  iamRoleStatements:
    - Effect: Allow
      Action:
        - dynamodb:Scan
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.GROUPS_TABLE}

functions:
  GetGroups:
    handler: src/lambda/http/getGroups.handler
    events:
      - http:
          method: get
          path: groups
          cors: true

resources:
  Resources:
    GroupsDynamoDBTable:
      Type: AWS::DynamoDB::Table
      Properties:
        AttributeDefinitions:
          - AttributeName: id
            AttributeType: S
        KeySchema:
          - AttributeName: id
            KeyType: HASH
        BillingMode: PAY_PER_REQUEST
        TableName: ${self:provider.environment.GROUPS_TABLE}

Validate request in ApiGateway

Refer to: https://www.cnblogs.com/Answer1215/p/14774552.html

Query in Node.js

const docClient = new AWS.DynamoDB.DocumentClient()

const result = await docClient.query({
  TableName: 'GameScore',
  KeyConditionExpression: 'GameId = :gameId',
  ExpressionAttributeValues: {
    ':gameId': '10'
  }
}).promise()

const items = result.Items
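One detail worth knowing when writing key conditions: some attribute names, such as `timestamp` (used as the sort key of the Images table later in this post), are DynamoDB reserved words and cannot appear directly in an expression. The sketch below builds query parameters with `ExpressionAttributeNames` placeholders to avoid that problem. The `buildPartitionKeyQuery` helper and the `QueryParams` interface are hypothetical names introduced only for this example.

```typescript
// Minimal local type for the parameters this sketch produces (an assumption;
// the real DocumentClient accepts many more options).
interface QueryParams {
  TableName: string;
  KeyConditionExpression: string;
  ExpressionAttributeNames: Record<string, string>;
  ExpressionAttributeValues: Record<string, string>;
}

// Hypothetical helper: builds a partition-key query that uses placeholders
// for both the attribute name (#pk) and the attribute value (:pk).
function buildPartitionKeyQuery(
  tableName: string,
  keyName: string,
  keyValue: string
): QueryParams {
  return {
    TableName: tableName,
    KeyConditionExpression: "#pk = :pk",
    ExpressionAttributeNames: { "#pk": keyName },
    ExpressionAttributeValues: { ":pk": keyValue },
  };
}

// Equivalent to the GameScore query above, with a name placeholder
const gameScoreQuery = buildPartitionKeyQuery("GameScore", "GameId", "10");
```

The resulting object can be passed to `docClient.query(...)` in the same way as the literal parameters shown above.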

Path parameter

functions:
  GetOrders:
    handler: src/images.handler
    events:
      - http:
          method: get
          path: /groups/{groupId}/images

exports.handler = async (event) => {
  // Path parameters declared in the route are available on the event
  const groupId = event.pathParameters.groupId;
  ...
};
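In the `aws-lambda` type definitions, `pathParameters` can be `null`, so reading a parameter directly may fail at runtime or under strict type checking. A small guard like the following is one way to handle that; `getPathParameter` and `EventWithPathParams` are hypothetical names used only for this sketch.

```typescript
// Minimal local shape of the part of the event that this sketch needs
interface EventWithPathParams {
  pathParameters: Record<string, string | undefined> | null;
}

// Hypothetical helper: returns the named path parameter or throws a clear error
function getPathParameter(event: EventWithPathParams, name: string): string {
  const value = event.pathParameters ? event.pathParameters[name] : undefined;
  if (!value) {
    throw new Error(`Missing path parameter: ${name}`);
  }
  return value;
}
```

A handler would call `getPathParameter(event, "groupId")` and translate the thrown error into a 400 response if that suits the API.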

Add indexes

serverless.yml

service:
  name: serverless-udagram-app

plugins:
  - serverless-webpack

provider:
  name: aws
  runtime: nodejs14.x
  stage: ${opt:stage, 'dev'}
  region: ${opt:region, 'us-east-1'}
  environment:
    GROUPS_TABLE: Groups-${self:provider.stage}
    IMAGES_TABLE: Images-${self:provider.stage}
    IMAGE_ID_INDEX: ImageIdIndex
  iamRoleStatements:
    - Effect: Allow
      Action:
        - dynamodb:Query
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.IMAGES_TABLE}/index/${self:provider.environment.IMAGE_ID_INDEX}
    - Effect: Allow
      Action:
        - dynamodb:Query
        - dynamodb:PutItem
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.IMAGES_TABLE}
    - Effect: Allow
      Action:
        - dynamodb:Scan
        - dynamodb:PutItem
        - dynamodb:GetItem
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.GROUPS_TABLE}

functions:
  CreateImage:
    handler: src/lambda/http/createImage.handler
    events:
      - http:
          method: post
          path: groups/{groupId}/images
          cors: true
          reqValidatorName: RequestBodyValidator
          request:
            schema:
              application/json: ${file(models/create-image-request.json)}
  GetImage:
    handler: src/lambda/http/getImage.handler
    events:
      - http:
          method: get
          path: images/{imageId}
          cors: true
  GetImages:
    handler: src/lambda/http/getImages.handler
    events:
      - http:
          method: get
          path: group/{groupId}/images
          cors: true
  GetGroups:
    handler: src/lambda/http/getGroups.handler
    events:
      - http:
          method: get
          path: groups
          cors: true
  CreateGroup:
    handler: src/lambda/http/createGroup.handler
    events:
      - http:
          method: post
          path: groups
          cors: true
          ## do the validation in api gateway
          reqValidatorName: RequestBodyValidator
          request:
            schema:
              application/json: ${file(models/create-group-request.json)}

resources:
  Resources:
    ImagesDynamoDBTable:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: ${self:provider.environment.IMAGES_TABLE}
        BillingMode: PAY_PER_REQUEST
        KeySchema:
          - AttributeName: groupId
            KeyType: HASH
          - AttributeName: timestamp
            KeyType: RANGE
        AttributeDefinitions:
          - AttributeName: groupId
            AttributeType: S
          - AttributeName: timestamp
            AttributeType: S
          - AttributeName: imageId
            AttributeType: S
        GlobalSecondaryIndexes:
          - IndexName: ${self:provider.environment.IMAGE_ID_INDEX}
            KeySchema:
              - AttributeName: imageId
                KeyType: HASH
            ## Copy all the attrs to the new GSI table
            Projection:
              ProjectionType: ALL
    GroupsDynamoDBTable:
      Type: AWS::DynamoDB::Table
      Properties:
        AttributeDefinitions:
          - AttributeName: id
            AttributeType: S
        KeySchema:
          - AttributeName: id
            KeyType: HASH
        BillingMode: PAY_PER_REQUEST
        TableName: ${self:provider.environment.GROUPS_TABLE}

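import * as AWS from "aws-sdk";
import {
  APIGatewayProxyEvent,
  APIGatewayProxyHandler,
  APIGatewayProxyResult,
} from "aws-lambda";

const docClient = new AWS.DynamoDB.DocumentClient();
const imagesTable = process.env.IMAGES_TABLE;
const imageIdIndex = process.env.IMAGE_ID_INDEX;

export const handler: APIGatewayProxyHandler = async (
  event: APIGatewayProxyEvent
): Promise<APIGatewayProxyResult> => {
  const imageId = event.pathParameters.imageId;
  // Query the global secondary index by imageId
  const result = await docClient
    .query({
      TableName: imagesTable,
      IndexName: imageIdIndex,
      KeyConditionExpression: "imageId = :imageId",
      ExpressionAttributeValues: {
        ":imageId": imageId,
      },
    })
    .promise();
  // The original snippet stops at the query; this response is an assumed completion
  const found = result.Count > 0;
  return {
    statusCode: found ? 200 : 404,
    headers: { "Access-Control-Allow-Origin": "*" },
    body: found ? JSON.stringify(result.Items[0]) : "",
  };
};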

Part 2 Event Processing

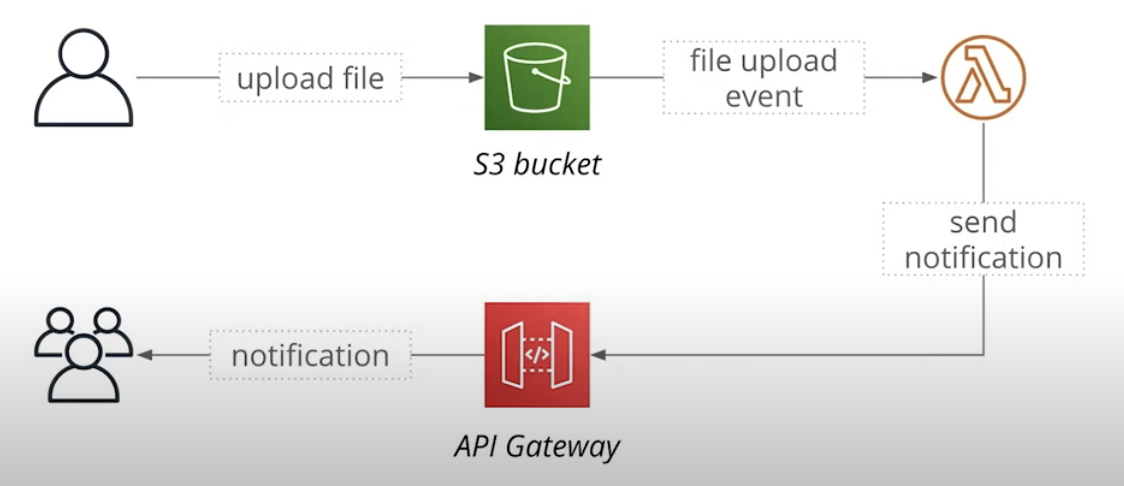

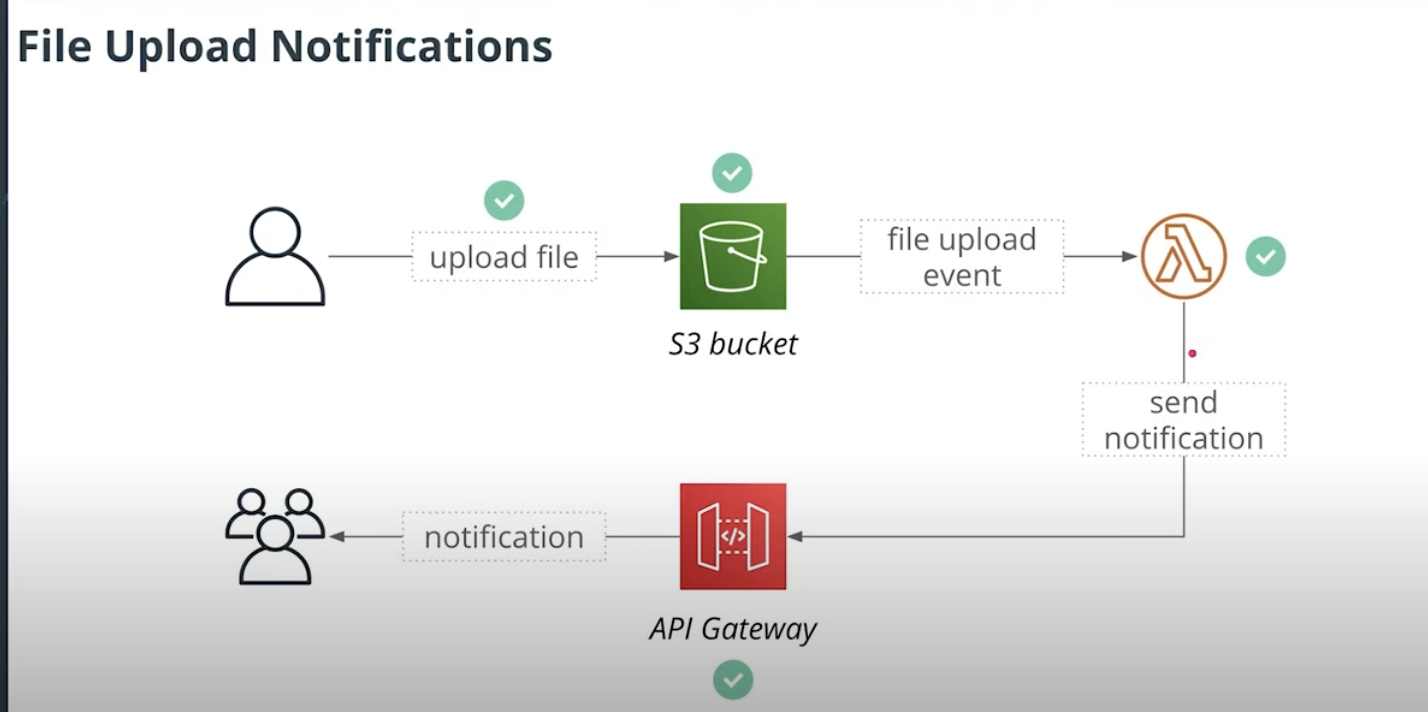

In section 2.1, we will do the following:

- A user uploads an image to an S3 bucket

- The S3 bucket fires a file-uploaded event to a Lambda function

- The Lambda function sends a notification via API Gateway to tell users that an image has been uploaded

Upload an image to S3 bucket

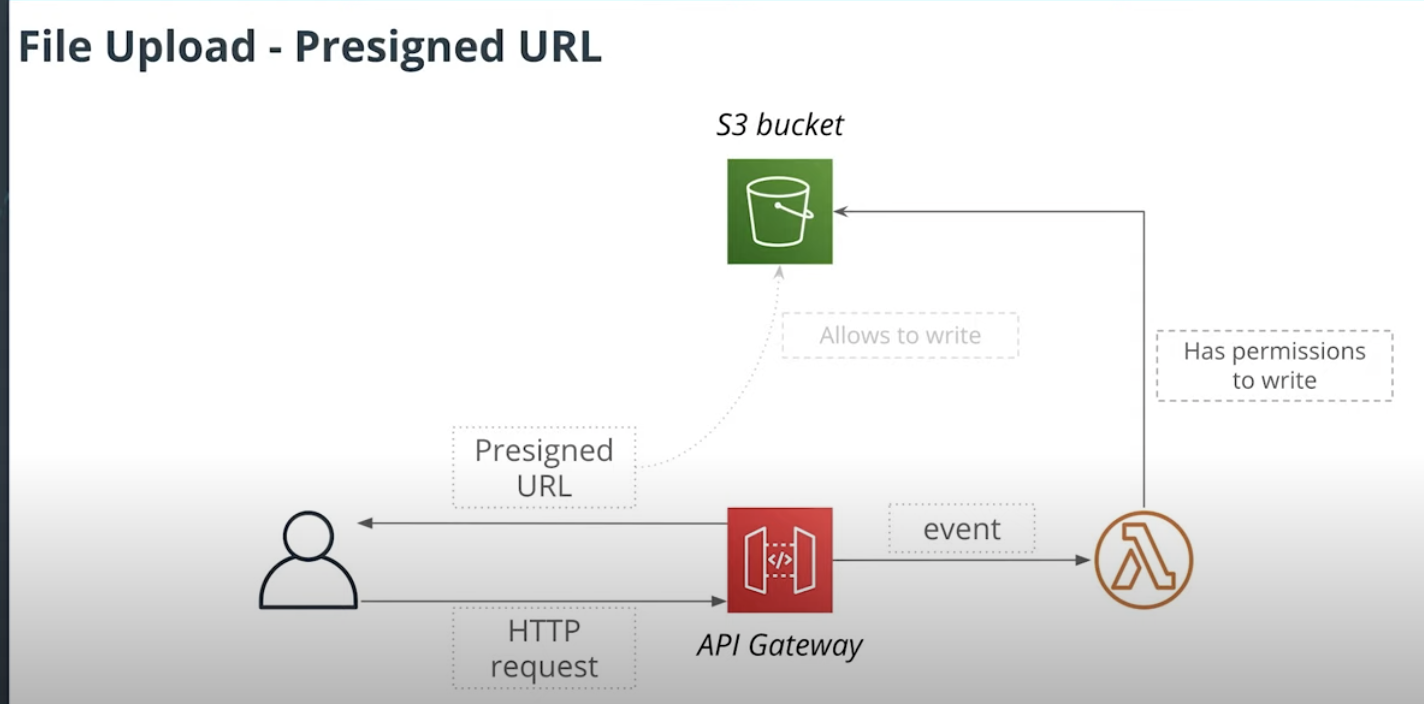

Ref: Using Pre-signed URL pattern for cmd use cases

In short, when a user uploads an image:

- The request comes to API Gateway

- API Gateway routes the event to a Lambda function, which generates a pre-signed URL and returns it to the client

- The Lambda function needs permission to write to the S3 bucket

- The client uses the pre-signed URL to upload the image to S3.

Define the resource in serverless.yml:

Define a name for the S3 bucket:

environment:
  ..
  IMAGES_S3_BUCKET: zhentiw-serverless-udagram-images-${self:provider.stage}

Define the S3 bucket resource and a bucket policy that allows anybody to read from the bucket:

resources:
  Resources:
    ## S3 bucket which stores images
    AttachmentsBucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: ${self:provider.environment.IMAGES_S3_BUCKET}
        CorsConfiguration:
          CorsRules:
            - AllowedMethods:
                - GET
                - PUT
                - POST
                - DELETE
                - HEAD
              AllowedHeaders:
                - "*"
              AllowedOrigins:
                - "*"
              MaxAge: 3000 # seconds (50 minutes)
    BucketPolicy:
      Type: AWS::S3::BucketPolicy
      Properties:
        Bucket: !Ref AttachmentsBucket
        PolicyDocument:
          Id: MyPolicy
          Version: "2012-10-17"
          Statement:
            - Sid: PublicReadForGetBucketObjects
              Effect: Allow
              Principal: "*"
              Action: "s3:GetObject"
              Resource: "arn:aws:s3:::${self:provider.environment.IMAGES_S3_BUCKET}/*"

Generate a pre-signed URL:

Define an expiration time (in seconds) for the pre-signed URL:

environment:
  ...
  IMAGES_S3_BUCKET: zhentiw-serverless-udagram-images-${self:provider.stage}
  SIGNED_URL_EXPIRATION: 300

Add permissions for Lambda to access S3:

iamRoleStatements:
  - Effect: Allow
    Action:
      - s3:PutObject
      - s3:GetObject
    Resource: arn:aws:s3:::${self:provider.environment.IMAGES_S3_BUCKET}/*

Define the Lambda function:

functions:
  CreateImage:
    handler: src/lambda/http/createImage.handler
    events:
      - http:
          method: post
          path: groups/{groupId}/images
          cors: true
          reqValidatorName: RequestBodyValidator
          request:
            schema:
              application/json: ${file(models/create-image-request.json)}

Lambda code: src/lambda/http/createImage.ts:

import { v4 as uuidv4 } from "uuid";

import "source-map-support/register";

import * as AWS from "aws-sdk";

import {

APIGatewayProxyEvent,

APIGatewayProxyHandler,

APIGatewayProxyResult,

} from "aws-lambda";

const docClient = new AWS.DynamoDB.DocumentClient();

const groupTables = process.env.GROUPS_TABLE;

const imagesTables = process.env.IMAGES_TABLE;

const bucketName = process.env.IMAGES_S3_BUCKET;

const urlExpiration = process.env.SIGNED_URL_EXPIRATION;

const s3 = new AWS.S3({

signatureVersion: "v4",

});

function getPreSignedUrl(imageId) {

return s3.getSignedUrl("putObject", {

Bucket: bucketName,

Key: imageId,

Expires: parseInt(urlExpiration, 10),

});

}

export const handler: APIGatewayProxyHandler = async (

event: APIGatewayProxyEvent

): Promise<APIGatewayProxyResult> => {

console.log("Processing event: ", event);

const imageId = uuidv4();

const groupId = event.pathParameters.groupId;

const parsedBody = JSON.parse(event.body);

const isGroupExist = await groupExists(groupId);

if (!isGroupExist) {

return {

statusCode: 404,

headers: {

"Access-Control-Allow-Origin": "*",

},

body: JSON.stringify({

error: "Group does not exist",

}),

};

}

const newItem = {

imageId,

groupId,

timestamp: new Date().toISOString(),

...parsedBody,

imageUrl: `https://${bucketName}.s3.amazonaws.com/${imageId}`,

};

await docClient

.put({

TableName: imagesTables,

Item: newItem,

})

.promise();

const url = getPreSignedUrl(imageId);

return {

statusCode: 201,

headers: {

"Access-Control-Allow-Origin": "*",

},

body: JSON.stringify({

newItem,

uploadUrl: url,

}),

};

};

async function groupExists(groupId) {

const result = await docClient

.get({

TableName: groupTables,

Key: {

id: groupId,

},

})

.promise();

console.log("Get group", result);

return !!result.Item;

}
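To show the other side of the pre-signed URL pattern, here is a minimal client sketch. It assumes the API responds with the `newItem` and `uploadUrl` fields returned by the handler above. The `HttpClient` type, the `uploadImage` function, and the `title` request field are hypothetical; the real request body depends on `models/create-image-request.json`, which is not shown in this post. The HTTP function is injected so that the flow can be exercised without a network; in a browser it could be a thin wrapper around `fetch`.

```typescript
// Minimal HTTP abstractions so the sketch does not depend on a specific client
interface HttpResponse {
  ok: boolean;
  status: number;
  json(): Promise<unknown>;
}
type HttpClient = (
  url: string,
  init: { method: string; headers?: Record<string, string>; body?: unknown }
) => Promise<HttpResponse>;

// Response shape taken from the createImage handler above
interface CreateImageResponse {
  newItem: { imageUrl: string };
  uploadUrl: string;
}

// Hypothetical client flow: create the image record, then PUT the file to S3
async function uploadImage(
  http: HttpClient,
  apiBaseUrl: string,
  groupId: string,
  title: string, // assumed request field
  file: unknown
): Promise<string> {
  // Ask the API for a new image record and a pre-signed upload URL
  const createResponse = await http(`${apiBaseUrl}/groups/${groupId}/images`, {
    method: "POST",
    headers: { "Content-Type": "application/json" },
    body: JSON.stringify({ title }),
  });
  if (!createResponse.ok) {
    throw new Error(`Create image failed with status ${createResponse.status}`);
  }
  const created = (await createResponse.json()) as CreateImageResponse;

  // Upload the file directly to S3; the request does not pass through Lambda
  const uploadResponse = await http(created.uploadUrl, { method: "PUT", body: file });
  if (!uploadResponse.ok) {
    throw new Error(`Upload failed with status ${uploadResponse.status}`);
  }
  return created.newItem.imageUrl;
}
```

Note that the upload must use `PUT`, because the URL was signed for the `putObject` operation.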

S3 Event

We need to create two resources:

- The S3 bucket needs a notification configuration that targets the Lambda function

- S3 needs permission to invoke the Lambda function

Configure S3 notifications to Lambda

Here is a configuration snippet that can be used to subscribe to S3 events:

functions:
  process:
    handler: file.handler
    events:
      - s3:
          bucket: bucket-name
          event: s3:ObjectCreated:*
          rules:
            - prefix: images/
            - suffix: .png

But there is a small problem with S3 events in Serverless: the framework tries to create the S3 bucket itself, so when a bucket with the same name is already defined, the deployment fails.

So here is the workaround:

Define the Lambda function

functions:
  ...
  SendUploadNotification:
    handler: src/lambda/s3/sendNotification.handler

Add the notification configuration

resources:
  Resources:
    AttachmentsBucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: ${self:provider.environment.IMAGES_S3_BUCKET}
        NotificationConfiguration:
          LambdaConfigurations:
            - Event: s3:ObjectCreated:*
              ## <function_name>LambdaFunction.Arn
              Function: !GetAtt SendUploadNotificationLambdaFunction.Arn
        CorsConfiguration:
          CorsRules:
            - AllowedMethods:
                - GET
                - PUT
                - POST
                - DELETE
                - HEAD
              AllowedHeaders:
                - "*"
              AllowedOrigins:
                - "*"
              MaxAge: 3000 # seconds (50 minutes)

Allow S3 to send notifications to Lambda:

resources:
  Resources:
    ...
    SendUploadNotificationPermission:
      Type: AWS::Lambda::Permission
      Properties:
        Action: lambda:InvokeFunction
        FunctionName: !Ref SendUploadNotificationLambdaFunction
        Principal: s3.amazonaws.com
        SourceAccount: !Ref "AWS::AccountId"
        SourceArn: arn:aws:s3:::${self:provider.environment.IMAGES_S3_BUCKET}

Lambda function:

src/lambda/s3/sendNotification.ts

import { S3Handler, S3Event } from "aws-lambda";

import "source-map-support/register";

export const handler: S3Handler = async (event: S3Event) => {

for (const record of event.Records) {

const key = record.s3.object.key;

console.log("Processing S3 item with key: " + key);

}

};

For now, we just need to verify that the Lambda function receives notifications from S3.
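One thing to keep in mind when reading `record.s3.object.key`: S3 URL-encodes object keys in event notifications, and spaces arrive as `+`. The keys in this app are UUIDs, so they are unaffected, but for arbitrary file names a decoding step like the following sketch is commonly needed. `decodeS3Key` is a hypothetical helper name.

```typescript
// Hypothetical helper: converts the URL-encoded key from an S3 event record
// back into the actual object key.
function decodeS3Key(rawKey: string): string {
  // Spaces are encoded as "+", other special characters as %XX sequences
  return decodeURIComponent(rawKey.replace(/\+/g, " "));
}
```

Inside the handler this would be used as `const key = decodeS3Key(record.s3.object.key);`.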

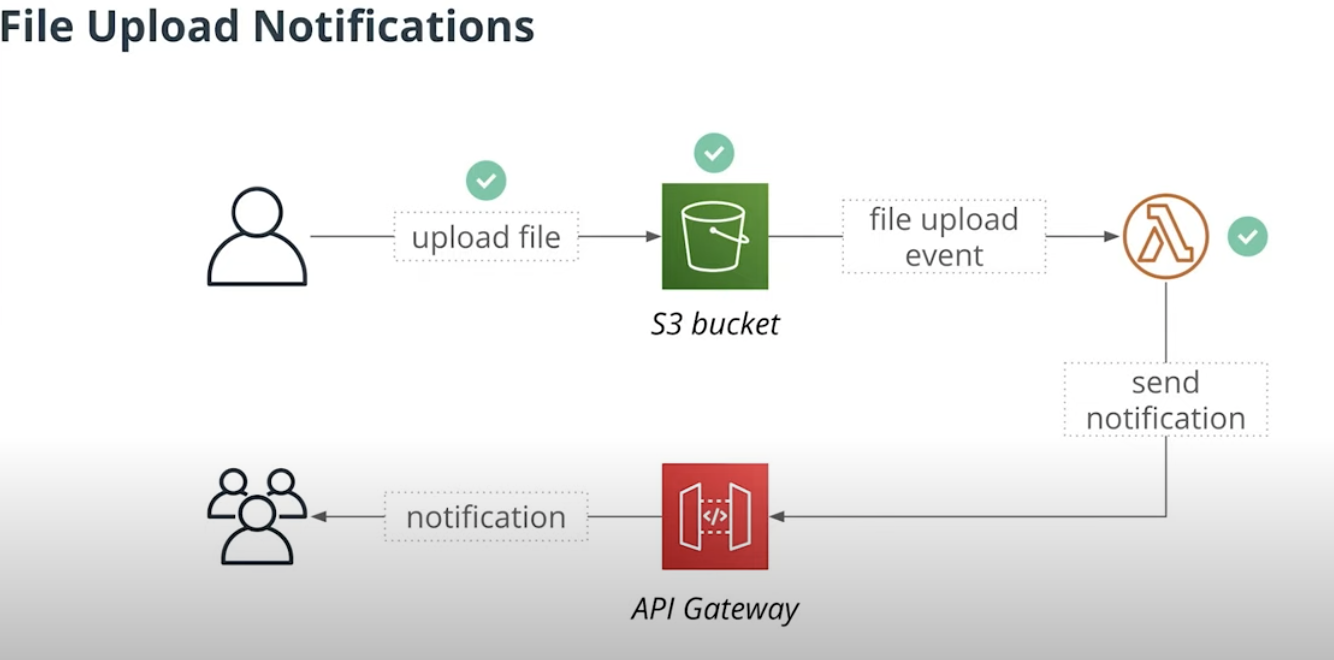

Now, here is what we have done so far on the roadmap:

WebSocket

In section 2.2, we will:

- Notify clients via WebSockets that an image has been uploaded

Websocket Refer: https://www.cnblogs.com/Answer1215/p/14782429.html

Add an environment variable:

environment:
  ...
  CONNECTIONS_TABLE: Socket-connections-${self:provider.stage}
  ...

Add IAM permissions for `Scan`, `PutItem` and `DeleteItem`:

iamRoleStatements:
  ...
  - Effect: Allow
    Action:
      - dynamodb:Scan
      - dynamodb:PutItem
      - dynamodb:DeleteItem
    Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.CONNECTIONS_TABLE}

Add two Lambda functions to handle the socket connect and disconnect events:

When a WebSocket connects to API Gateway, we save its `connectionId` to DynamoDB. When the socket disconnects from API Gateway, we delete the `connectionId` from DynamoDB.

src/lambda/websockets/connect.ts

import * as AWS from "aws-sdk";
import {
  APIGatewayProxyEvent,
  APIGatewayProxyHandler,
  APIGatewayProxyResult,
} from "aws-lambda";

const docClient = new AWS.DynamoDB.DocumentClient();
const connectionsTable = process.env.CONNECTIONS_TABLE;

export const handler: APIGatewayProxyHandler = async (
  event: APIGatewayProxyEvent
): Promise<APIGatewayProxyResult> => {
  console.log("Websocket connect: ", event);
  const timestamp = new Date().toISOString();
  const connectionId = event.requestContext.connectionId;
  const item = {
    id: connectionId,
    timestamp,
  };

  // Remember the connection so that notifications can be sent to it later
  await docClient
    .put({
      TableName: connectionsTable,
      Item: item,
    })
    .promise();

  return {
    statusCode: 200,
    headers: {
      "Access-Control-Allow-Origin": "*",
    },
    body: "",
  };
};

src/lambda/websockets/disconnect.ts

import * as AWS from "aws-sdk";
import {
  APIGatewayProxyEvent,
  APIGatewayProxyHandler,
  APIGatewayProxyResult,
} from "aws-lambda";

const docClient = new AWS.DynamoDB.DocumentClient();
const connectionsTable = process.env.CONNECTIONS_TABLE;

export const handler: APIGatewayProxyHandler = async (
  event: APIGatewayProxyEvent
): Promise<APIGatewayProxyResult> => {
  console.log("Websocket disconnect: ", event);
  const connectionId = event.requestContext.connectionId;
  const key = {
    id: connectionId,
  };

  // Forget the connection once the client has gone away
  await docClient
    .delete({
      TableName: connectionsTable,
      Key: key,
    })
    .promise();

  return {
    statusCode: 200,
    body: "",
  };
};

Send a notification once a file is uploaded

What we need to do:

- Send WebSocket messages on a file upload

- Use the list of connections stored in DynamoDB

Add the Lambda function:

SendUploadNotification:
  handler: src/lambda/s3/sendNotification.handler
  environment:
    STAGE: ${self:provider.stage}
    API_ID:
      Ref: WebsocketsApi

It has an inline `environment` section, which is only used by this function.

import { S3Handler, S3Event } from "aws-lambda";

import "source-map-support/register";

import * as AWS from "aws-sdk";

const docClient = new AWS.DynamoDB.DocumentClient();

const connectionsTable = process.env.CONNECTIONS_TABLE;

const stage = process.env.STAGE;

const apiID = process.env.API_ID;

const connectionParams = {

apiVersion: "2018-11-29",

endpoint: `${apiID}.execute-api.us-east-1.amazonaws.com/${stage}`,

};

const apiGateway = new AWS.ApiGatewayManagementApi(connectionParams);

async function processS3Event(s3Event: S3Event) {

for (const record of s3Event.Records) {

const key = record.s3.object.key;

console.log("Processing S3 item with key: ", key);

const connections = await docClient

.scan({

TableName: connectionsTable,

})

.promise();

const payload = {

imageId: key,

};

for (const connection of connections.Items) {

const connectionId = connection.id;

await sendMessagedToClient(connectionId, payload);

}

}

}

async function sendMessagedToClient(connectionId, payload) {

try {

console.log("Sending message to a connection", connectionId);

await apiGateway

.postToConnection({

ConnectionId: connectionId,

Data: JSON.stringify(payload),

})

.promise();

} catch (e) {

if (e.statusCode === 410) {

console.log("Stale connection");

await docClient

.delete({

TableName: connectionsTable,

Key: {

id: connectionId,

},

})

.promise();

}

}

}

export const handler: S3Handler = async (event: S3Event) => {

await processS3Event(event)

};

API Gateway holds the connection between each client and the WebSocket API, so we send messages to clients through API Gateway by calling the `postToConnection` method.
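The handler above sends to connections one at a time. When there are many connections, sending concurrently and collecting stale connection IDs for cleanup is a possible variation. The sketch below shows that idea with an injected `send` function; `broadcast`, `Sender`, and `GoneError` are hypothetical names, and in the real handler `send` would wrap `apiGateway.postToConnection(...).promise()`.

```typescript
// The function that actually delivers one message (injected for this sketch)
type Sender = (connectionId: string, data: string) => Promise<void>;

// Shape of the error this sketch expects for a closed connection (HTTP 410)
interface GoneError {
  statusCode?: number;
}

// Sends the payload to every connection concurrently and returns the IDs of
// connections that turned out to be stale, so the caller can delete them.
async function broadcast(
  connectionIds: string[],
  payload: object,
  send: Sender
): Promise<string[]> {
  const data = JSON.stringify(payload);
  const results = await Promise.all(
    connectionIds.map(async (id): Promise<string | null> => {
      try {
        await send(id, data);
        return null;
      } catch (e) {
        // 410 Gone means the client disconnected without a clean close
        if ((e as GoneError).statusCode === 410) {
          return id;
        }
        throw e;
      }
    })
  );
  return results.filter((id): id is string => id !== null);
}
```

Any error other than a 410 is rethrown, so one unexpected failure rejects the whole broadcast, which may or may not be the behavior you want.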

Part 3 Full-Text Search

ElasticSearch, Sync data between DynamoDB and ElasticSearch

Refer: https://www.cnblogs.com/Answer1215/p/14783470.html

Enable DynamoDB Stream

serverless.yml:

service:
  name: serverless-udagram-app

plugins:
  - serverless-webpack

provider:
  name: aws
  runtime: nodejs14.x
  stage: ${opt:stage, 'dev'}
  region: ${opt:region, 'us-east-1'}
  environment:
    GROUPS_TABLE: Groups-${self:provider.stage}
    IMAGES_TABLE: Images-${self:provider.stage}
    CONNECTIONS_TABLE: Socket-connections-${self:provider.stage}
    IMAGE_ID_INDEX: ImageIdIndex
    IMAGES_S3_BUCKET: zhentiw-serverless-udagram-images-${self:provider.stage}
    SIGNED_URL_EXPIRATION: 300
  iamRoleStatements:
    - Effect: Allow
      Action:
        - s3:PutObject
        - s3:GetObject
      Resource: arn:aws:s3:::${self:provider.environment.IMAGES_S3_BUCKET}/*
    - Effect: Allow
      Action:
        - dynamodb:Query
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.IMAGES_TABLE}/index/${self:provider.environment.IMAGE_ID_INDEX}
    - Effect: Allow
      Action:
        - dynamodb:Query
        - dynamodb:PutItem
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.IMAGES_TABLE}
    - Effect: Allow
      Action:
        - dynamodb:Scan
        - dynamodb:PutItem
        - dynamodb:GetItem
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.GROUPS_TABLE}
    - Effect: Allow
      Action:
        - dynamodb:Scan
        - dynamodb:PutItem
        - dynamodb:DeleteItem
      Resource: arn:aws:dynamodb:${self:provider.region}:*:table/${self:provider.environment.CONNECTIONS_TABLE}

functions:
  CreateImage:
    handler: src/lambda/http/createImage.handler
    events:
      - http:
          method: post
          path: groups/{groupId}/images
          cors: true
          reqValidatorName: RequestBodyValidator
          request:
            schema:
              application/json: ${file(models/create-image-request.json)}
  GetImage:
    handler: src/lambda/http/getImage.handler
    events:
      - http:
          method: get
          path: images/{imageId}
          cors: true
  GetImages:
    handler: src/lambda/http/getImages.handler
    events:
      - http:
          method: get
          path: group/{groupId}/images
          cors: true
  GetGroups:
    handler: src/lambda/http/getGroups.handler
    events:
      - http:
          method: get
          path: groups
          cors: true
  CreateGroup:
    handler: src/lambda/http/createGroup.handler
    events:
      - http:
          method: post
          path: groups
          cors: true
          ## do the validation in api gateway
          reqValidatorName: RequestBodyValidator
          request:
            schema:
              application/json: ${file(models/create-group-request.json)}
  SendUploadNotification:
    handler: src/lambda/s3/sendNotification.handler
  ConnectHandler:
    handler: src/lambda/websockets/connect.handler
    events:
      - websocket:
          route: $connect
  DisconnectHandler:
    handler: src/lambda/websockets/disconnect.handler
    events:
      - websocket:
          route: $disconnect
  SyncWithElasticsearch:
    handler: src/lambda/dynamodb/elasticsearchSync.handler
    events:
      - stream:
          type: dynamodb
          arn: !GetAtt ImagesDynamoDBTable.StreamArn

resources:
  Resources:
    AttachmentsBucket:
      Type: AWS::S3::Bucket
      Properties:
        BucketName: ${self:provider.environment.IMAGES_S3_BUCKET}
        NotificationConfiguration:
          LambdaConfigurations:
            - Event: s3:ObjectCreated:*
              ## <function_name>LambdaFunction.Arn
              Function: !GetAtt SendUploadNotificationLambdaFunction.Arn
        CorsConfiguration:
          CorsRules:
            - AllowedMethods:
                - GET
                - PUT
                - POST
                - DELETE
                - HEAD
              AllowedHeaders:
                - "*"
              AllowedOrigins:
                - "*"
              MaxAge: 3000 # seconds (50 minutes)
    BucketPolicy:
      Type: AWS::S3::BucketPolicy
      Properties:
        Bucket: !Ref AttachmentsBucket
        PolicyDocument:
          Id: MyPolicy
          Version: "2012-10-17"
          Statement:
            - Sid: PublicReadForGetBucketObjects
              Effect: Allow
              Principal: "*"
              Action: "s3:GetObject"
              Resource: "arn:aws:s3:::${self:provider.environment.IMAGES_S3_BUCKET}/*"
    ConnectionsDynamoDBTable:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: ${self:provider.environment.CONNECTIONS_TABLE}
        BillingMode: PAY_PER_REQUEST
        AttributeDefinitions:
          - AttributeName: id
            AttributeType: S
        KeySchema:
          - AttributeName: id
            KeyType: HASH
    ImagesDynamoDBTable:
      Type: AWS::DynamoDB::Table
      Properties:
        TableName: ${self:provider.environment.IMAGES_TABLE}
        BillingMode: PAY_PER_REQUEST
        StreamSpecification:
          StreamViewType: NEW_IMAGE
        KeySchema:
          - AttributeName: groupId
            KeyType: HASH
          - AttributeName: timestamp
            KeyType: RANGE
        AttributeDefinitions:
          - AttributeName: groupId
            AttributeType: S
          - AttributeName: timestamp
            AttributeType: S
          - AttributeName: imageId
            AttributeType: S
        GlobalSecondaryIndexes:
          - IndexName: ${self:provider.environment.IMAGE_ID_INDEX}
            KeySchema:
              - AttributeName: imageId
                KeyType: HASH
            ## Copy all the attrs to the new GSI table
            Projection:
              ProjectionType: ALL
    SendUploadNotificationPermission:
      Type: AWS::Lambda::Permission
      Properties:
        Action: lambda:InvokeFunction
        FunctionName: !Ref SendUploadNotificationLambdaFunction
        Principal: s3.amazonaws.com
        SourceAccount: !Ref "AWS::AccountId"
        SourceArn: arn:aws:s3:::${self:provider.environment.IMAGES_S3_BUCKET}
    GroupsDynamoDBTable:
      Type: AWS::DynamoDB::Table
      Properties:
        AttributeDefinitions:
          - AttributeName: id
            AttributeType: S
        KeySchema:
          - AttributeName: id
            KeyType: HASH
        BillingMode: PAY_PER_REQUEST
        TableName: ${self:provider.environment.GROUPS_TABLE}

We enabled a DynamoDB stream on the Images table (`StreamSpecification` with `StreamViewType: NEW_IMAGE`). Whenever an item in the table changes, a record is written to the stream and delivered to the `SyncWithElasticsearch` Lambda function.
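The `elasticsearchSync` handler itself is not shown in this post. As a rough sketch of the first step such a handler might perform, the function below turns stream records into plain documents keyed by `imageId`, keeping only `INSERT` events. The `StreamRecord` and `ImageDocument` types and the `toSearchDocuments` name are assumptions made for this example; the record shape is a simplified version of the DynamoDB stream format, in which string attributes arrive as `{ S: "value" }`.

```typescript
// Simplified shape of a DynamoDB stream record (string attributes only)
interface StreamRecord {
  eventName?: "INSERT" | "MODIFY" | "REMOVE";
  dynamodb?: { NewImage?: Record<string, { S?: string }> };
}

// Hypothetical document shape to index in Elasticsearch
interface ImageDocument {
  id: string;
  body: Record<string, string>;
}

// Converts newly inserted items into documents; other events are skipped
function toSearchDocuments(records: StreamRecord[]): ImageDocument[] {
  const documents: ImageDocument[] = [];
  for (const record of records) {
    if (record.eventName !== "INSERT") {
      continue;
    }
    const newImage = record.dynamodb ? record.dynamodb.NewImage : undefined;
    const imageId = newImage && newImage.imageId ? newImage.imageId.S : undefined;
    if (!newImage || !imageId) {
      continue;
    }
    // Unwrap { S: "value" } attributes into plain strings
    const body: Record<string, string> = {};
    for (const name of Object.keys(newImage)) {
      const attribute = newImage[name];
      const value = attribute ? attribute.S : undefined;
      if (value !== undefined) {
        body[name] = value;
      }
    }
    documents.push({ id: imageId, body });
  }
  return documents;
}
```

A real handler would then send each document to the Elasticsearch domain with a signed HTTP request, which is outside the scope of this sketch.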

Add Elasticsearch resources

ImagesSearch: Type: AWS::Elasticsearch::Domain Properties: ElasticsearchVersion: "6.7" DomainName: images-search-${self:provider.stage} ElasticsearchClusterConfig: DedicatedMasterEnabled: false InstanceCount: 1 ZoneAwarenessEnabled: false InstanceType: t2.small.elasticsearch EBSOptions: EBSEnabled: true Iops: 0 VolumeSize: 10 VolumeType: "gp2" AccessPolicies: Version: "2012-10-17" Statement: - Effect: Allow Principal: AWS: !Sub "arn:aws:sts::${AWS::AccountId}:assumed-role/${self:service}-${self:provider.stage}-${self:provider.region}-lambdaRole/serverless-udagram-app-${self:provider.stage}-SyncWithElasticsearch" Action: es:ESHttp* Resource: !Sub "arn:aws:es:${self:provider.region}:${AWS::AccountId}:domain/images-search-${self:provider.stage}/*" - Effect: Allow Principal: AWS: "*" Action: es:ESHttp* Condition: IpAddress: aws:SourceIp: - "<YOUR_IP_ADDRESS>/32" Resource: !Sub "arn:aws:es:${self:provider.region}:${AWS::AccountId}:domain/images-search-${self:provider.stage}/*"

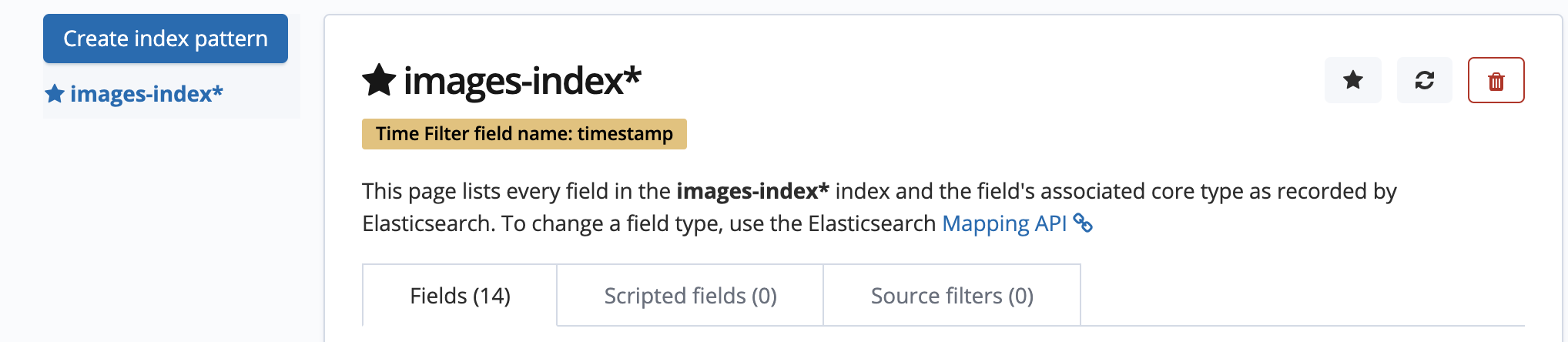

After Deployed, Open Kibana, config index:

Part 4 SNS

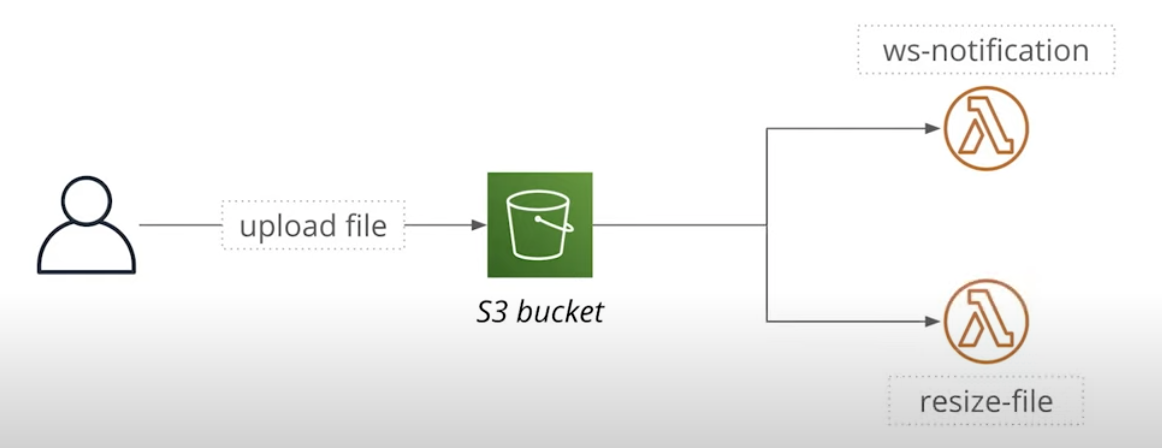

What if we want to have two event handlers for the same S3 bucket?

- Send a WebSocket notification

- Resize an image

Looks like the pic:

But actually we CANNOT do this.

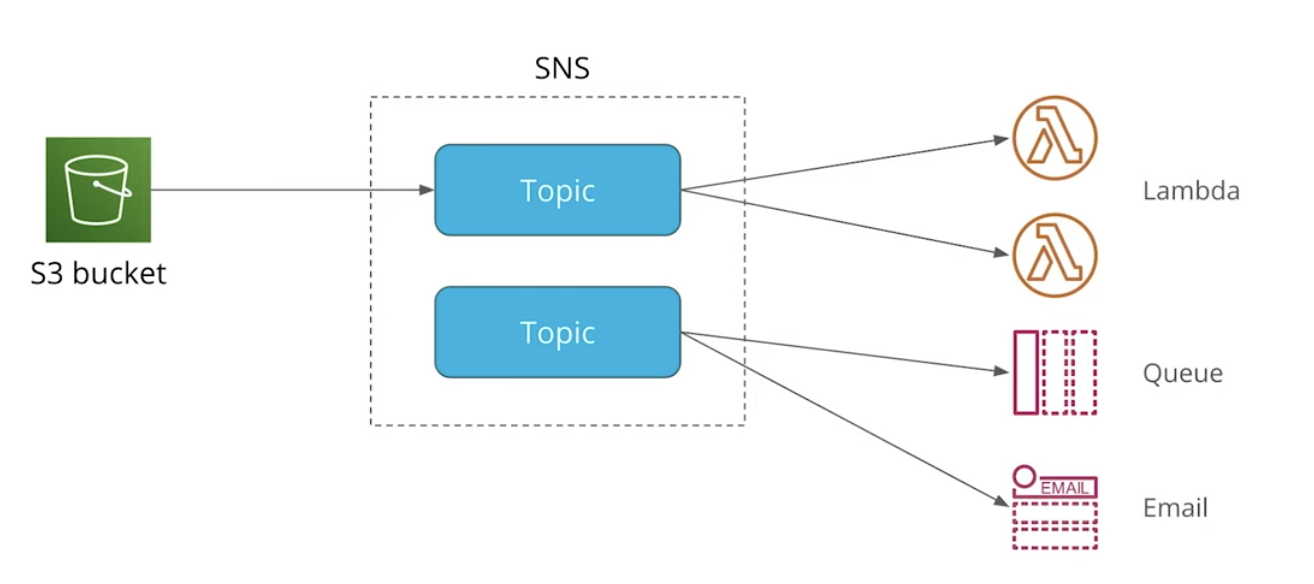

Because S3 allows only one notification target for a given event type on a bucket: notification configurations for the same event cannot overlap.

We need to use a different service to

- receive one event

- Broadcast it to multiple targets

We will use SNS for this.

SNS is a service to send messages to other services.

It has two main concepts:

- Publishers - publish messages

- Subscribers - consume incoming messages

Publishers and subscribers communicate via topics:

- A publisher publishes a message to a topic

- A subscriber receives a message if it is subscribed to a topic

- One topic can have many subscribers

- Subscribers can use various protocols: Lambda, HTTP, email, SMS, etc.

Add a `custom` section. Its values can be referenced as variables in the template, but they are not passed to the Lambda functions as environment variables:

custom:
  topicName: ImagesTopic-${self:provider.stage}

Previously, the Lambda function that sends notifications to clients was triggered directly by the S3 upload event.

Now we need to change it to listen to the SNS topic:

functions:
  SendUploadNotification:
    handler: src/lambda/s3/sendNotification.handler
    environment:
      STAGE: ${self:provider.stage}
      API_ID:
        Ref: WebsocketsApi
    events:
      - sns:
          arn:
            Fn::Join:
              - ":"
              - - arn:aws:sns
                - Ref: AWS::Region
                - Ref: AWS::AccountId
                - ${self:custom.topicName}
          topicName: ${self:custom.topicName}

Add the topic resource and the topic policy:

ImagesTopic:
  Type: AWS::SNS::Topic
  Properties:
    DisplayName: Image bucket topic
    TopicName: ${self:custom.topicName}
SNSTopicPolicy:
  Type: AWS::SNS::TopicPolicy
  Properties:
    PolicyDocument:
      Version: "2012-10-17"
      Statement:
        - Effect: Allow
          Principal:
            AWS: "*"
          Action: sns:Publish
          Resource: !Ref ImagesTopic
          Condition:
            ArnLike:
              AWS:SourceArn: arn:aws:s3:::${self:provider.environment.IMAGES_S3_BUCKET}
    Topics:
      - !Ref ImagesTopic

Update Lambda function:

import { S3Event, SNSHandler, SNSEvent } from "aws-lambda";

import "source-map-support/register";

import * as AWS from "aws-sdk";

const docClient = new AWS.DynamoDB.DocumentClient();

const connectionsTable = process.env.CONNECTIONS_TABLE;

const stage = process.env.STAGE;

const apiID = process.env.API_ID;

const connectionParams = {

apiVersion: "2018-11-29",

endpoint: `${apiID}.execute-api.us-east-1.amazonaws.com/${stage}`,

};

const apiGateway = new AWS.ApiGatewayManagementApi(connectionParams);

export const handler: SNSHandler = async (event: SNSEvent) => {

console.log("Processing SNS event", JSON.stringify(event));

for (const snsRecord of event.Records) {

const s3EventStr = snsRecord.Sns.Message;

console.log("Processing S3 event", s3EventStr);

const s3Event = JSON.parse(s3EventStr);

await processS3Event(s3Event);

}

};

async function processS3Event(s3Event: S3Event) {

for (const record of s3Event.Records) {

const key = record.s3.object.key;

console.log("Processing S3 item with key: ", key);

const connections = await docClient

.scan({

TableName: connectionsTable,

})

.promise();

const payload = {

imageId: key,

};

for (const connection of connections.Items) {

const connectionId = connection.id;

await sendMessagedToClient(connectionId, payload);

}

}

}

async function sendMessagedToClient(connectionId, payload) {

try {

console.log("Sending message to a connection", connectionId);

await apiGateway

.postToConnection({

ConnectionId: connectionId,

Data: JSON.stringify(payload),

})

.promise();

} catch (e) {

if (e.statusCode === 410) {

console.log("Stale connection");

await docClient

.delete({

TableName: connectionsTable,

Key: {

id: connectionId,

},

})

.promise();

}

}

}

Part 5 Auth

Refer: https://www.cnblogs.com/Answer1215/p/14798866.html

We want to store the Auth0 secret in AWS Secrets Manager.

Add Permission for IAM user:

When authorizing a request, we want to:

- Get the secret object stored in AWS Secrets Manager

- Read the field that contains the actual secret value

- Validate the JWT with that secret

Update code in Lambda: src/lambda/auth/auth0Authorization.ts

import {
  CustomAuthorizerEvent,
  CustomAuthorizerHandler,
  CustomAuthorizerResult,
} from "aws-lambda";
import * as AWS from "aws-sdk";
import { verify } from "jsonwebtoken";
import { JwtToken } from "../../auth/JwtToken";

// Names match the environment variables defined in serverless.yml
const secretId = process.env.AUTH_SECRET_ID;
const secretField = process.env.AUTH_SECRET_FIELD;

const client = new AWS.SecretsManager();

// cache the secret if a Lambda instance is reused
let cachedSecret: string;

export const handler: CustomAuthorizerHandler = async (
  event: CustomAuthorizerEvent
): Promise<CustomAuthorizerResult> => {
  try {
    const decodedToken = await verifyToken(event.authorizationToken);
    console.log("User was authorized", decodedToken);

    return {
      // unique token id for the user
      principalId: decodedToken.sub,
      policyDocument: {
        Version: "2012-10-17",
        Statement: [
          {
            Action: "execute-api:Invoke",
            Effect: "Allow",
            Resource: "*",
          },
        ],
      },
    };
  } catch (err) {
    console.log("User was not authorized", err.message);

    return {
      principalId: "user",
      policyDocument: {
        Version: "2012-10-17",
        Statement: [
          {
            Action: "execute-api:Invoke",
            Effect: "Deny",
            Resource: "*",
          },
        ],
      },
    };
  }
};

async function verifyToken(authHeader: string): Promise<JwtToken> {
  console.log("authHeader", authHeader);
  if (!authHeader) {
    throw new Error("No authorization header");
  }

  // Reject the header unless it uses the "Bearer <token>" scheme
  if (!authHeader.toLowerCase().startsWith("bearer ")) {
    throw new Error("Invalid authorization header");
  }

  const splits = authHeader.split(" ");
  const token = splits[1];
  console.log("token", token);

  const secretObject = await getSecret();
  const secret = secretObject[secretField];

  // use JWT to verify the token with the secret;
  // if verify does not throw, the request is authorized
  return verify(token, secret) as JwtToken;
}

async function getSecret() {
  // use the secret id to fetch the secret object from Secrets Manager;
  // it contains the secret value we want to use to verify the JWT
  if (!cachedSecret) {
    const data = await client
      .getSecretValue({
        SecretId: secretId,
      })
      .promise();
    cachedSecret = data.SecretString;
  }

  // always return the parsed object, whether it came from the cache or not
  return JSON.parse(cachedSecret);
}
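The header check in `verifyToken` is easy to get wrong, so it can help to isolate it in a small pure function that is simple to test. The following is a minimal sketch under the assumption that clients send `Authorization: Bearer <token>`; `extractBearerToken` is a hypothetical helper name, not part of any library.

```typescript
// Hypothetical helper: validates the "Bearer <token>" format and returns the token
function extractBearerToken(authHeader: string | undefined): string {
  if (!authHeader) {
    throw new Error("No authorization header");
  }
  // Split on whitespace: the first part is the scheme, the second the token
  const [scheme, token] = authHeader.trim().split(/\s+/);
  if (!scheme || scheme.toLowerCase() !== "bearer" || !token) {
    throw new Error("Invalid authorization header");
  }
  return token;
}
```

With such a helper, `verifyToken` would reduce to extracting the token, loading the secret, and calling `verify`.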

Add resouces:

AUTH_SECRET_ID: AuthZeroSercet-${self:provider.stage}

AUTH_SECRET_FIELD: authSecret

resources: Resources: KMSKey: Type: AWS::KMS::Key Properties: KeyPolicy: Version: "2012-10-17" Id: key-default-1 Statement: - Sid: Allow administration of the key Effect: Allow Principal: AWS: Fn::Join: - ":" - - "arn:aws:iam:" - Ref: AWS::AccountId - "root" Action: - "kms:*" Resource: "*" KMSAlias: Type: AWS::KMS::Alias Properties: AliasName: alias/authKey-${self:provider.stage} TargetKeyId: !Ref KMSKey AuthZeroSecret: Type: AWS::SecretsManager::Secret Properties: Name: ${self:provider.environment.AUTH_SECRET_ID} Description: AuthZero Secret KmsKeyId: !Ref KMSKey GatewayResponseDefault4XX:

Add permission for Lambda:

- Effect: Allow Action: - secretsmanager:GetSecretValue Resource: !Ref AuthZeroSecret - Effect: Allow Action: - kms:Decrypt Resource: !GetAtt KMSKey.Arn

Add Auth to endpoint:

CreateImage: handler: src/lambda/http/createImage.handler events: - http: method: post path: groups/{groupId}/images cors: true authorizer: Auth reqValidatorName: RequestBodyValidator request: schema: application/json: ${file(models/create-image-request.json)}

You also need to add the Auth0 secret to AWS Secrets Manager:

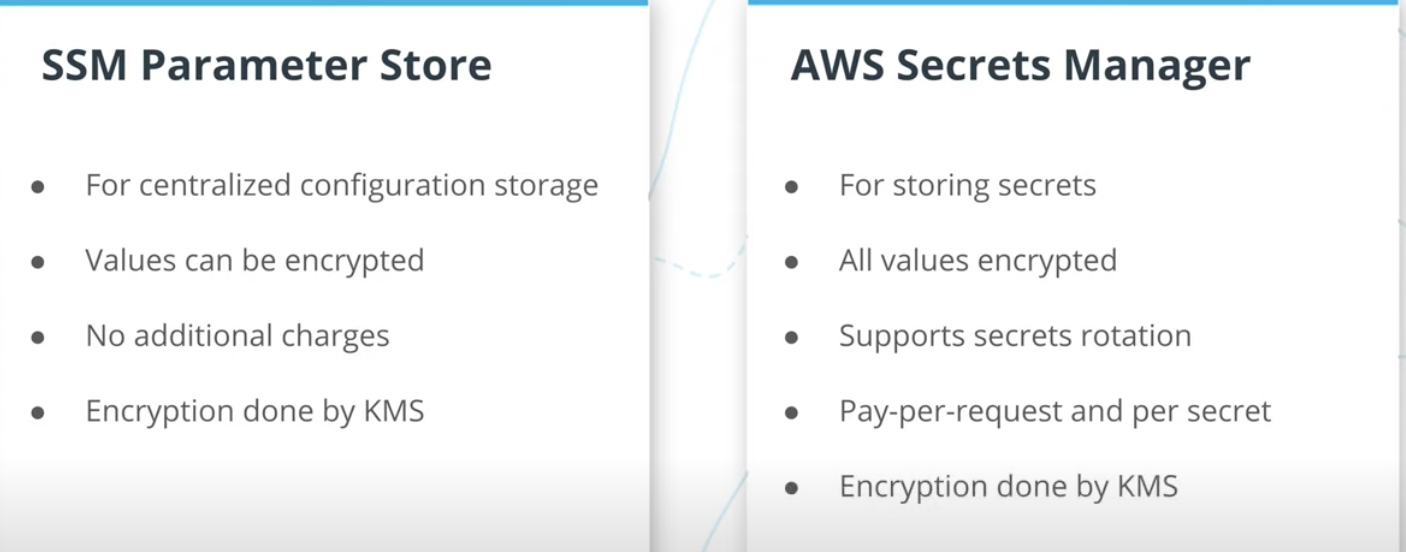

There are two options for storing secrets in AWS: Secrets Manager, which we use here, and SSM Parameter Store.
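For comparison, reading the same secret from SSM Parameter Store could look roughly like the sketch below. It is only an illustration under assumptions: the ParameterStoreClient interface, the function name, and the parameter name "/auth0/secret" are made up here, and the interface merely mirrors the getParameter(...).promise() call shape of the aws-sdk v2 SSM client so the sketch stays self-contained.

```typescript
// Assumed minimal shape of the aws-sdk v2 SSM call used below; declared
// locally so the sketch does not depend on the SDK being installed.
interface ParameterStoreClient {
  getParameter(params: { Name: string; WithDecryption: boolean }): {
    promise(): Promise<{ Parameter?: { Value?: string } }>;
  };
}

// Hypothetical helper: loads one (optionally encrypted) parameter value.
async function getSecretFromParameterStore(
  client: ParameterStoreClient,
  parameterName: string
): Promise<string> {
  const result = await client
    .getParameter({ Name: parameterName, WithDecryption: true })
    .promise();

  const value = result.Parameter && result.Parameter.Value;
  if (value === undefined) {
    throw new Error(`Parameter ${parameterName} has no value`);
  }
  return value;
}
```

In a real Lambda the client would be an SSM client from the AWS SDK, the parameter would be stored as a SecureString, and the function's role would need the ssm:GetParameter permission instead of secretsmanager:GetSecretValue.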

Part 7: Using Lambda Middleware

Refer: https://www.cnblogs.com/Answer1215/p/14804275.html

- Use Middy middleware to load secrets

- It reads secrets from Secrets Manager

- It caches them so that a Lambda instance can reuse them across invocations

- The caching duration is configurable
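The caching behaviour in the list above can be pictured with a small time-based cache. This is a sketch of the general idea only, not Middy's implementation; cachedLoader and its parameters are hypothetical names.

```typescript
// Hypothetical helper: wraps any async loader (for example a Secrets Manager
// call) so its result is reused until ttlMillis have passed.
function cachedLoader<T>(
  load: () => Promise<T>,
  ttlMillis: number,
  now: () => number = Date.now
): () => Promise<T> {
  let cached: { value: T; loadedAt: number } | undefined;

  return async () => {
    if (cached && now() - cached.loadedAt < ttlMillis) {
      return cached.value; // still fresh: skip the remote call
    }
    const value = await load();
    cached = { value, loadedAt: now() };
    return value;
  };
}
```

Because the cache lives in module scope of a warm Lambda instance, repeated invocations within the expiry window avoid extra Secrets Manager calls, while a short expiry lets a rotated secret be picked up.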

Install the middy library.

Using CORS:

// createImage.ts
import { v4 as uuidv4 } from "uuid";
import "source-map-support/register";
import * as AWS from "aws-sdk";
import { APIGatewayProxyEvent, APIGatewayProxyResult } from "aws-lambda";
import * as middy from "middy";
import { cors } from "middy/middlewares";

const docClient = new AWS.DynamoDB.DocumentClient();
const groupTables = process.env.GROUPS_TABLE;
const imagesTables = process.env.IMAGES_TABLE;
const bucketName = process.env.IMAGES_S3_BUCKET;
const urlExpiration = process.env.SIGNED_URL_EXPIRATION;

const s3 = new AWS.S3({
  signatureVersion: "v4",
});

function getPreSignedUrl(imageId) {
  return s3.getSignedUrl("putObject", {
    Bucket: bucketName,
    Key: imageId,
    Expires: parseInt(urlExpiration, 10),
  });
}

export const handler = middy(
  async (event: APIGatewayProxyEvent): Promise<APIGatewayProxyResult> => {
    console.log("Processing event: ", event);

    const imageId = uuidv4();
    const groupId = event.pathParameters.groupId;
    const parsedBody = JSON.parse(event.body);

    const isGroupExist = await groupExists(groupId);
    if (!isGroupExist) {
      return {
        statusCode: 404,
        body: JSON.stringify({
          error: "Group does not exist",
        }),
      };
    }

    const newItem = {
      imageId,
      groupId,
      timestamp: new Date().toISOString(),
      ...parsedBody,
      imageUrl: `https://${bucketName}.s3.amazonaws.com/${imageId}`,
    };

    await docClient
      .put({
        TableName: imagesTables,
        Item: newItem,
      })
      .promise();

    const url = getPreSignedUrl(imageId);

    return {
      statusCode: 201,
      body: JSON.stringify({
        newItem,
        uploadUrl: url,
      }),
    };
  }
);

async function groupExists(groupId) {
  const result = await docClient
    .get({
      TableName: groupTables,
      Key: {
        id: groupId,
      },
    })
    .promise();

  console.log("Get group", result);
  return !!result.Item;
}

handler.use(cors({ credentials: true }));
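Conceptually, a CORS middleware runs after the handler and merges CORS headers into the response. The sketch below illustrates that idea with a plain wrapper function; it is not how Middy implements cors, and the names HttpResponse, withCors and the example origin are assumptions made for illustration.

```typescript
interface HttpResponse {
  statusCode: number;
  headers?: { [name: string]: string };
  body: string;
}

type HttpHandler<E> = (event: E) => Promise<HttpResponse>;

// Hypothetical stand-in for a CORS middleware: calls the wrapped handler,
// then adds the CORS headers to whatever response it produced.
function withCors<E>(
  handler: HttpHandler<E>,
  allowedOrigin: string,
  allowCredentials: boolean
): HttpHandler<E> {
  return async (event: E) => {
    const response = await handler(event);
    return {
      ...response,
      headers: {
        ...response.headers,
        "Access-Control-Allow-Origin": allowedOrigin,
        "Access-Control-Allow-Credentials": String(allowCredentials),
      },
    };
  };
}
```

The benefit of the middleware approach is the same as in this sketch: the handler body only deals with business logic, and the headers are added in one place for every response, including error responses such as the 404 above.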

Using Secrets Manager:

import { CustomAuthorizerEvent, CustomAuthorizerResult } from "aws-lambda";
import { verify } from "jsonwebtoken";
import { JwtToken } from "../../auth/JwtToken";
import * as middy from "middy";
import { secretsManager } from "middy/middlewares";

const secretId = process.env.AUTH_SECRET_ID;
const secretField = process.env.AUTH_SECRET_FIELD;

export const handler = middy(
  async (
    event: CustomAuthorizerEvent,
    // middy stores the loaded secret in the context
    context
  ): Promise<CustomAuthorizerResult> => {
    try {
      const decodedToken = verifyToken(
        event.authorizationToken,
        context["AUTH0_SECRET"][secretField]
      );
      console.log("User was authorized", decodedToken);

      return {
        // unique ID of the user, taken from the token's "sub" claim
        principalId: decodedToken.sub,
        policyDocument: {
          Version: "2012-10-17",
          Statement: [
            {
              Action: "execute-api:Invoke",
              Effect: "Allow",
              Resource: "*",
            },
          ],
        },
      };
    } catch (err) {
      console.log("User was not authorized", err.message);

      return {
        principalId: "user",
        policyDocument: {
          Version: "2012-10-17",
          Statement: [
            {
              Action: "execute-api:Invoke",
              Effect: "Deny",
              Resource: "*",
            },
          ],
        },
      };
    }
  }
);

function verifyToken(authHeader: string, secret: string): JwtToken {
  console.log("authHeader", authHeader);

  if (!authHeader) {
    throw new Error("No authorization header");
  }

  // Reject any header that does not follow the "Bearer <token>" scheme
  if (!authHeader.toLowerCase().startsWith("bearer ")) {
    throw new Error("Invalid authorization header");
  }

  const splits = authHeader.split(" ");
  const token = splits[1];
  console.log("token", token);

  // Use JWT to verify the token with the secret.
  // verify() throws if the token is invalid; otherwise the request is authorized.
  return verify(token, secret) as JwtToken;
}

handler.use(
  secretsManager({
    cache: true,
    // keep the secret cached for 60 seconds
    cacheExpiryInMillis: 60000,
    throwOnFailedCall: true,
    secrets: {
      // the secret is stored in the context under this key
      AUTH0_SECRET: secretId,
    },
  })
);
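The Allow and Deny branches of the authorizer above return almost identical policy documents. One possible way to remove the duplication is a small builder function; this is a sketch, and the names AuthorizerPolicy and buildPolicy are hypothetical rather than part of the course code.

```typescript
type PolicyEffect = "Allow" | "Deny";

interface AuthorizerPolicy {
  principalId: string;
  policyDocument: {
    Version: string;
    Statement: { Action: string; Effect: PolicyEffect; Resource: string }[];
  };
}

// Hypothetical helper: builds the authorizer response for one principal.
function buildPolicy(principalId: string, effect: PolicyEffect): AuthorizerPolicy {
  return {
    principalId,
    policyDocument: {
      Version: "2012-10-17",
      Statement: [
        {
          Action: "execute-api:Invoke",
          Effect: effect,
          Resource: "*",
        },
      ],
    },
  };
}
```

With such a helper, the try branch would return buildPolicy(decodedToken.sub, "Allow") and the catch branch buildPolicy("user", "Deny").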
