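AliExpress Product Review API in Depth: A Complete Approach to Multilingual Parsing and Business Insight Mining

The AliExpress product review API is a core data source in cross-border e-commerce operations for understanding user needs, iterating on products, and improving service quality. Unlike review APIs on domestic Chinese e-commerce platforms, AliExpress reviews are inherently multilingual and cross-cultural, and they carry feedback on the cross-border logistics experience. Conventional integrations therefore often run into garbled multilingual text, misjudged sentiment, difficulty extracting useful information, and review data whose value goes unused. This article proposes an end-to-end design made up of a multilingual review parsing engine, a quantitative sentiment analysis model, and a business insight extraction system. It targets the main pain points of cross-border review processing and provides working code aimed at enterprise use, so that review data can feed directly into business decisions.

I. Core Pain Points of Cross-Border Review Processing and Where This Approach Differs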

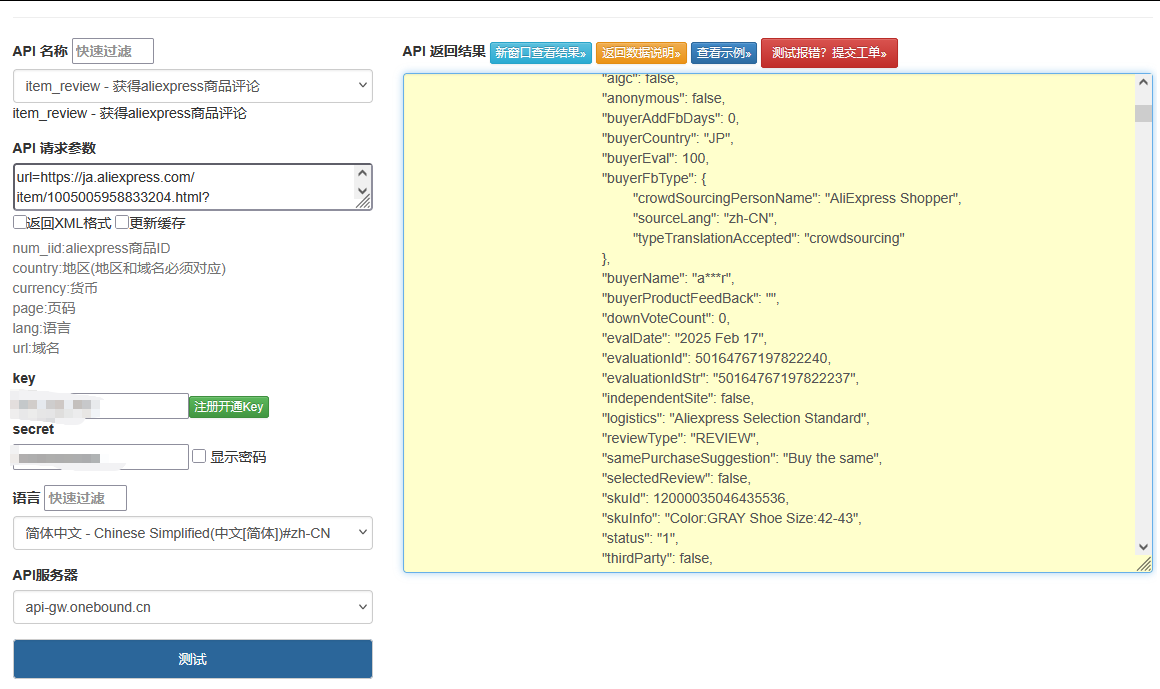

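The main difficulty with the AliExpress product review API (core interface: aliexpress.product.review.redefining.getproductreviewlist) lies in multilingual data governance and business-value mining, not in the API call or the signature implementation itself. Before going further, it helps to establish where this approach differs from the conventional ones found online.

1. Core pain points (pitfalls that conventional approaches cannot avoid)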

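- Chaotic multilingual parsing: reviews come in dozens of languages, including English, Russian, Spanish, and Portuguese. Conventional approaches only extract the raw text and do nothing about garbled characters or translation drift, so reviews in less common languages go unused;
- Misjudged sentiment: sentiment is inferred from the star rating alone, which ignores cases where rating and text disagree (for example "5 stars, but shipping was too slow" or "3 stars, but the product exceeded expectations"), so accuracy is low;
- Hard-to-extract information: a single review mixes product quality, logistics speed, customer service, size fit, and other dimensions. Without structured extraction logic, the commercially useful information gets buried;
- Weak pagination and rate-limit handling: large review volumes must be fetched page by page. Conventional approaches do not keep pagination continuous, frequent calls easily trigger rate limiting, and timestamp skew or signature errors cause a high failure rate;
- No conversion of data into value: only the review text is collected, with no extraction of needs or classification of problems, so the data cannot support product iteration or operational decisions.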

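2. Core API mechanics and cross-border review fields

The AliExpress product review API authenticates with AppKey + AppSecret + an HMAC-SHA256 signature. The key is to understand the fields and business logic that are specific to cross-border reviews. The table below lists the core API information and the cross-border fields that must be handled: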

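| API item | Details |
|---|---|
| Core API address | https://openapi.aliexpress.com/api/aliexpress.product.review.redefining.getproductreviewlist |
| Authentication | AppKey + AppSecret + HMAC-SHA256 signature (millisecond timestamp) |
| Request method | POST (recommended) / GET; JSON or XML responses |
| Key limits | QPS ≤ 5 per app; at most 100 reviews per request for a single product; the daily call cap rises with developer tier (up to 500,000 calls for enterprise developers) |
| Cross-border fields that must be handled | Review language (reviewLanguage), multilingual review content (reviewContent), buyer country (buyerCountry), logistics score (logisticsScore), product attribute score (productAttributeScore), review images (reviewImages), size/color fit feedback (attributeFeedback) |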
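To make the field list above concrete, here is a minimal sketch of a typed record for one raw review. The class name `ReviewRecord`, the `from_raw` helper, and the sample payload are hypothetical and introduced only for illustration; the raw key names come from the table, and the `imageUrl` sub-key is an assumption taken from the parser code later in this article. The real response shape should be checked against the platform documentation.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List


@dataclass
class ReviewRecord:
    """Typed view of one raw review (hypothetical helper, not part of any SDK)."""
    review_language: str = ""
    review_content: str = ""
    buyer_country: str = ""
    logistics_score: int = 0
    product_attribute_score: int = 0
    review_images: List[str] = field(default_factory=list)
    attribute_feedback: Dict[str, str] = field(default_factory=dict)

    @classmethod
    def from_raw(cls, raw: Dict[str, Any]) -> "ReviewRecord":
        # Missing keys fall back to empty defaults instead of raising KeyError
        images = [
            img["imageUrl"]
            for img in (raw.get("reviewImages") or [])
            if img.get("imageUrl")
        ]
        return cls(
            review_language=raw.get("reviewLanguage", ""),
            review_content=raw.get("reviewContent", ""),
            buyer_country=raw.get("buyerCountry", ""),
            logistics_score=raw.get("logisticsScore", 0),
            product_attribute_score=raw.get("productAttributeScore", 0),
            review_images=images,
            attribute_feedback=dict(raw.get("attributeFeedback") or {}),
        )


# Made-up sample payload, for illustration only
sample = {
    "reviewLanguage": "ru",
    "reviewContent": "Отлично",
    "buyerCountry": "RU",
    "logisticsScore": 4,
    "reviewImages": [{"imageUrl": "https://example.com/a.jpg"}, {}],
}
record = ReviewRecord.from_raw(sample)
print(record.buyer_country, record.review_images)
```

Normalizing each review into one record up front keeps the later parsing and scoring steps free of repeated `dict.get` calls with ad hoc defaults.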


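II. Implementation: Multilingual Parsing + Sentiment Analysis + Insight Extraction

The design has four modules: a multilingual review parsing engine, a multi-dimensional quantitative sentiment model, a structured business insight extraction system, and a paginated, rate-limited requester. Together they cover the full chain from review collection and data cleanup to value extraction.

1. Multilingual review parsing engine (the core of the design)

To handle multilingual reviews, the parser runs a full pipeline of automatic language detection, translation, and encoding repair. It covers the mainstream cross-border languages (English, Russian, Spanish, and others) and addresses garbled text and translation drift: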

    import re
    import time
    from typing import Dict, Optional

    from deep_translator import GoogleTranslator
    from langdetect import detect, LangDetectException


    class MultiLangReviewParser:
        """Multilingual review parsing engine."""

        def __init__(self):
            # Target language for translation (Chinese by default, so all reviews are analyzed uniformly)
            self.target_lang = "zh-CN"
            # Language-code mapping (bridges code differences between langdetect and deep_translator)
            self.lang_code_map = {
                "en": "en", "ru": "ru", "es": "es", "pt": "pt", "fr": "fr", "de": "de",
                "it": "it", "ja": "ja", "ko": "ko", "ar": "ar", "tr": "tr", "nl": "nl",
                "pl": "pl", "vi": "vi", "th": "th", "id": "id", "hi": "hi", "fa": "fa",
            }
            # Mojibake repair map: each key is a UTF-8 character that was mis-decoded as Latin-1
            self.encoding_fix_map = {
                "Ã©": "é", "Ã¡": "á", "Ã±": "ñ", "Ã³": "ó", "Ãº": "ú", "Ã¼": "ü",
                "Ã¶": "ö", "Ã¤": "ä", "Ã§": "ç", "Ã¨": "è", "Ãª": "ê", "Ã®": "î",
            }

        def fix_encoding(self, text: str) -> str:
            """Repair common mojibake sequences."""
            for wrong_char, correct_char in self.encoding_fix_map.items():
                text = text.replace(wrong_char, correct_char)
            # Strip control characters (this also removes tabs and newlines)
            text = re.sub(r'[\x00-\x1F\x7F]', '', text)
            return text.strip()

        def detect_language(self, text: str) -> Optional[str]:
            """Detect the review language automatically."""
            try:
                detected_lang = detect(text)
                # Map to a supported code; unsupported languages return None
                return self.lang_code_map.get(detected_lang)
            except LangDetectException:
                return None

        def translate_review(self, text: str, source_lang: Optional[str]) -> str:
            """Translate the review into the target language and unify common terms."""
            if not source_lang or source_lang == self.target_lang.split("-")[0]:
                return text
            try:
                translator = GoogleTranslator(source=source_lang, target=self.target_lang)
                translated_text = translator.translate(text)
                # Unify common terms after translation (e.g. logistics vocabulary)
                return self._fix_translation_deviation(translated_text)
            except Exception as e:
                print(f"Translation failed ({source_lang} -> {self.target_lang}): {e}")
                return text

        def _fix_translation_deviation(self, translated_text: str) -> str:
            """Normalize common wording variants in the translated (Chinese) text."""
            translation_fix_map = {
                "快递": "物流", "邮寄": "物流", "送货": "物流",
                "质量好": "产品质量优秀", "尺寸不对": "尺寸适配偏差",
                "颜色不符": "颜色与描述不符", "很慢": "时效慢", "很快": "时效快",
                "客服态度好": "客服服务优质",
            }
            for wrong_term, correct_term in translation_fix_map.items():
                translated_text = translated_text.replace(wrong_term, correct_term)
            return translated_text

        def parse_review_content(self, raw_review: Dict) -> Dict:
            """Full content parsing: encoding repair, language detection, translation, structured output."""
            # 1. Extract the raw review content
            raw_content = raw_review.get("reviewContent", "")
            if not raw_content:
                return {
                    "original_content": "", "fixed_content": "", "lang": None,
                    "translated_content": "", "image_urls": [],
                    "review_language": raw_review.get("reviewLanguage"),
                }
            # 2. Repair garbled encoding
            fixed_content = self.fix_encoding(raw_content)
            # 3. Detect the language
            lang = self.detect_language(fixed_content)
            # 4. Translate the review
            translated_content = self.translate_review(fixed_content, lang)
            # 5. Collect the review images
            review_images = raw_review.get("reviewImages", [])
            image_urls = [img.get("imageUrl", "") for img in review_images if img.get("imageUrl")]
            return {
                "original_content": raw_content,
                "fixed_content": fixed_content,
                "lang": lang,
                "translated_content": translated_content,
                "image_urls": image_urls,
                # Language tag returned by the API, falling back to the detected language
                "review_language": raw_review.get("reviewLanguage", lang),
            }

        def parse_buyer_info(self, raw_review: Dict) -> Dict:
            """Parse buyer information: country and purchased attributes (size/color)."""
            # Buyer country (converted to its Chinese name)
            buyer_country_code = raw_review.get("buyerCountry", "")
            buyer_country = self._get_country_name(buyer_country_code)
            # Purchased attributes (size, color, ...)
            attribute_feedback = raw_review.get("attributeFeedback", {})
            size = attribute_feedback.get("size", "")
            color = attribute_feedback.get("color", "")
            # Creation time (gmtCreate), reported here as the purchase time
            gmt_create = raw_review.get("gmtCreate", 0)
            return {
                "buyer_country_code": buyer_country_code,
                "buyer_country": buyer_country,
                "purchased_size": size,
                "purchased_color": color,
                "purchase_time": self._format_timestamp(gmt_create),
            }

        def _get_country_name(self, country_code: str) -> str:
            """Look up the Chinese country name for an ISO 3166-1 alpha-2 code."""
            country_map = {
                "US": "美国", "RU": "俄罗斯", "ES": "西班牙", "BR": "巴西", "DE": "德国",
                "FR": "法国", "PT": "葡萄牙", "IT": "意大利", "JP": "日本", "KR": "韩国",
                "AR": "阿根廷", "TR": "土耳其", "NL": "荷兰", "PL": "波兰", "VN": "越南",
                "TH": "泰国", "ID": "印度尼西亚", "IN": "印度", "IR": "伊朗",
            }
            return country_map.get(country_code, country_code)

        def _format_timestamp(self, timestamp: int) -> str:
            """Format a millisecond timestamp as a readable local time."""
            if not timestamp:
                return ""
            return time.strftime("%Y-%m-%d %H:%M:%S", time.localtime(timestamp / 1000))
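The lookup-table repair in the parser only covers the characters listed in its map. As a complementary sketch, the common case where UTF-8 bytes were decoded as Latin-1 can be undone in general by reversing the round trip. The function name `repair_mojibake` is hypothetical, and this is only one possible technique: it does not handle text that went through Windows-1252 (such strings contain characters outside Latin-1 and are returned unchanged).

```python
def repair_mojibake(text: str) -> str:
    """Undo UTF-8 text that was mistakenly decoded as Latin-1.

    If the text cannot be re-encoded as Latin-1, or the resulting bytes
    are not valid UTF-8, the input is assumed to be fine and is returned as-is.
    """
    try:
        return text.encode("latin-1").decode("utf-8")
    except (UnicodeEncodeError, UnicodeDecodeError):
        return text


print(repair_mojibake("cafÃ©"))    # garbled input is repaired
print(repair_mojibake("café"))     # already-correct text is left alone
print(repair_mojibake("привет"))   # non-Latin-1 text is left alone
```

Falling back to the original string on any error keeps the function safe to run over every review, including those that were never garbled.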

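2. Multi-dimensional quantitative sentiment model

Instead of judging sentiment from the star rating alone, the model combines the rating, the text sentiment, and per-dimension scores. This is meant to reduce misjudgments when a high rating comes with negative text, or a low rating with positive text: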

    from typing import Dict

    import jieba


    class ReviewSentimentAnalyzer:
        """Multi-dimensional quantitative sentiment model for reviews."""

        def __init__(self):
            # Sentiment lexicon (extend per business needs). Entries stay in Chinese
            # because they are matched against the zh-CN translation of each review.
            self.positive_words = {
                "优秀": 2, "好": 2, "很好": 3, "非常好": 4, "棒": 3, "完美": 4,
                "满意": 3, "超出预期": 4, "快速": 2, "准时": 2, "优质": 3, "精致": 2,
                "合身": 2, "准确": 2, "推荐": 3, "靠谱": 2, "专业": 2,
            }
            self.negative_words = {
                "差": -2, "不好": -2, "很差": -3, "非常差": -4, "垃圾": -4, "糟糕": -3,
                "不满意": -3, "失望": -3, "太慢": -2, "延迟": -2, "破损": -3, "残缺": -3,
                "尺寸不对": -2, "颜色不符": -2, "质量差": -3, "假货": -4, "态度差": -2,
                "不推荐": -3, "无效": -2, "无法使用": -3,
            }
            # Degree adverbs (strengthen or weaken the sentiment)
            self.degree_words = {
                "非常": 1.5, "特别": 1.4, "极其": 1.6, "十分": 1.3, "很": 1.2,
                "稍微": 0.8, "有点": 0.7, "略微": 0.6, "几乎": 0.5,
            }
            # Negation words (flip the polarity)
            self.negative_modifiers = {"不", "没", "无", "非", "未"}
            # Analysis dimensions: product quality, logistics, customer service, size fit, color fit
            self.analysis_dimensions = [
                "product_quality", "logistics_efficiency", "customer_service", "size_fit", "color_fit",
            ]
            # Keywords per dimension
            self.dimension_keywords = {
                "product_quality": ["质量", "材质", "做工", "耐用", "破损", "残缺", "好用", "无法使用"],
                "logistics_efficiency": ["物流", "快递", "邮寄", "送货", "时效", "慢", "快", "延迟", "准时"],
                "customer_service": ["客服", "态度", "服务", "回复", "解决", "沟通"],
                "size_fit": ["尺寸", "大小", "合身", "不合身", "偏大", "偏小", "适配"],
                "color_fit": ["颜色", "色差", "不符", "一致", "好看"],
            }

        def calculate_text_sentiment_score(self, text: str) -> float:
            """Text sentiment score, clamped to [-5, 5]; higher means more positive."""
            if not text:
                return 0.0
            # 1. Tokenize
            words = jieba.lcut(text)
            # 2. Accumulate the score over the tokens
            sentiment_score = 0.0
            for i, word in enumerate(words):
                prev_word = words[i - 1] if i > 0 else ""
                # A preceding degree adverb scales the score; a preceding negation flips it
                degree = self.degree_words.get(prev_word, 1.0)
                is_negated = prev_word in self.negative_modifiers
                if word in self.positive_words:
                    score = self.positive_words[word] * degree
                elif word in self.negative_words:
                    score = self.negative_words[word] * degree
                else:
                    continue
                sentiment_score += -score if is_negated else score
            # 3. Clamp to [-5, 5]
            sentiment_score = max(min(sentiment_score, 5.0), -5.0)
            return round(sentiment_score, 2)

        def calculate_multi_dimension_score(self, text: str) -> Dict:
            """Per-dimension scores (product quality, logistics, ...), each clamped to [-3, 3]."""
            dimension_scores = {dim: 0.0 for dim in self.analysis_dimensions}
            if not text:
                return dimension_scores
            # Largest absolute lexicon weight, used to rescale into the [-3, 3] range
            max_weight = max(max(self.positive_words.values()), abs(min(self.negative_words.values())))
            for dim, keywords in self.dimension_keywords.items():
                dim_score = 0.0
                keyword_count = 0
                for keyword in keywords:
                    if keyword in text:
                        # Only keywords that also appear in the sentiment lexicon carry a weight
                        if keyword in self.positive_words:
                            dim_score += self.positive_words[keyword]
                        elif keyword in self.negative_words:
                            dim_score += self.negative_words[keyword]
                        keyword_count += 1
                # Average over the matched keywords, then rescale and clamp
                if keyword_count > 0:
                    dim_score = (dim_score / keyword_count) * 3 / max_weight
                    dim_score = max(min(dim_score, 3.0), -3.0)
                dimension_scores[dim] = round(dim_score, 2)
            return dimension_scores

        def integrate_sentiment_score(self, raw_review: Dict, text_sentiment_score: float,
                                      dimension_scores: Dict) -> Dict:
            """Combine the star rating, the text sentiment, and the dimension scores."""
            # 1. Star rating returned by the API (1 to 5); defaults to 3 (neutral)
            overall_score = raw_review.get("overallScore", 3)
            # 2. Rescale to [-5, 5]: 1 star -> -5, 3 stars -> 0, 5 stars -> 5
            standardized_overall_score = (overall_score - 3) * 2.5
            # 3. Weighted combination: rating 60%, text sentiment 40%
            comprehensive_sentiment_score = (standardized_overall_score * 0.6) + (text_sentiment_score * 0.4)
            comprehensive_sentiment_score = max(min(comprehensive_sentiment_score, 5.0), -5.0)
            # 4. Classify as positive / neutral / negative
            if comprehensive_sentiment_score > 1.0:
                sentiment_tendency = "positive"
            elif comprehensive_sentiment_score < -1.0:
                sentiment_tendency = "negative"
            else:
                sentiment_tendency = "neutral"
            # 5. Dimension ratings returned by the API (logistics, product attributes)
            logistics_score = raw_review.get("logisticsScore", 3)
            product_attr_score = raw_review.get("productAttributeScore", 3)
            return {
                "overall_score": overall_score,
                "text_sentiment_score": text_sentiment_score,
                "comprehensive_sentiment_score": round(comprehensive_sentiment_score, 2),
                "sentiment_tendency": sentiment_tendency,
                "dimension_scores": dimension_scores,
                "logistics_score": logistics_score,
                "product_attribute_score": product_attr_score,
            }

        def analyze_sentiment(self, parsed_review: Dict, raw_review: Dict) -> Dict:
            """Full sentiment pipeline: text score, dimension scores, combined score."""
            translated_text = parsed_review.get("translated_content", "")
            # 1. Text sentiment score
            text_sentiment_score = self.calculate_text_sentiment_score(translated_text)
            # 2. Per-dimension scores
            dimension_scores = self.calculate_multi_dimension_score(translated_text)
            # 3. Combined score
            return self.integrate_sentiment_score(raw_review, text_sentiment_score, dimension_scores)
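A short worked example helps show how the 60/40 weighting behaves. The sketch below re-implements only the arithmetic of the combination step as a standalone function. `combine_scores` mirrors the formula in the model, while `is_rating_text_mismatch` and its threshold are hypothetical additions that this article's model does not contain.

```python
def combine_scores(star_rating: int, text_score: float) -> float:
    """Rating (1 to 5) and text score ([-5, 5]) combined with 60/40 weights."""
    standardized = (star_rating - 3) * 2.5  # 1 star -> -5, 3 stars -> 0, 5 stars -> 5
    combined = standardized * 0.6 + text_score * 0.4
    return round(max(min(combined, 5.0), -5.0), 2)


def is_rating_text_mismatch(star_rating: int, text_score: float, threshold: float = 1.0) -> bool:
    """Hypothetical helper: True when rating and text point in opposite directions."""
    standardized = (star_rating - 3) * 2.5
    return (standardized > 0 and text_score < -threshold) or (
        standardized < 0 and text_score > threshold
    )


# 5 stars with clearly negative text: 5 * 0.6 + (-3) * 0.4 = 3.0 - 1.2 = 1.8
print(combine_scores(5, -3.0))           # 1.8
print(is_rating_text_mismatch(5, -3.0))  # True
```

With these weights a 5-star review whose text scores -3 still ends up at 1.8, above the 1.0 cutoff for "positive". If such reviews matter for the analysis, flagging the disagreement separately, as the second function does, may be more useful than relying on the combined score alone.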

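3. Structured business insight extraction system

To capture the commercially valuable information in reviews, this module applies structured extraction rules that pull out user needs, product problems, and service weaknesses to support business decisions: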

    from typing import Dict, List


    class BusinessInsightExtractor:
        """Structured business insight extraction from reviews."""

        def __init__(self):
            # Problem categories (product / logistics / service / other).
            # Keywords stay in Chinese because they are matched against the zh-CN translation.
            self.problem_categories = {
                "product_quality_problem": {
                    "keywords": ["质量差", "材质差", "做工粗糙", "破损", "残缺", "无法使用", "故障", "不耐用"],
                    "description": "Product quality issue",
                },
                "size_mismatch_problem": {
                    "keywords": ["尺寸不对", "偏大", "偏小", "不合身", "适配差"],
                    "description": "Size fit issue",
                },
                "color_mismatch_problem": {
                    "keywords": ["色差", "颜色不符", "颜色不对"],
                    "description": "Color mismatch issue",
                },
                "logistics_delay_problem": {
                    "keywords": ["物流慢", "延迟", "超时", "长时间未送达"],
                    "description": "Logistics delay issue",
                },
                "logistics_damage_problem": {
                    "keywords": ["包裹破损", "产品损坏", "物流暴力运输"],
                    "description": "Shipping damage issue",
                },
                "customer_service_problem": {
                    "keywords": ["客服态度差", "回复慢", "不解决问题", "沟通困难"],
                    "description": "Customer service issue",
                },
                "false_advertising_problem": {
                    "keywords": ["与描述不符", "夸大宣传", "假货"],
                    "description": "Misleading listing issue",
                },
            }
            # User-demand keywords (directions for product improvement)
            self.demand_keywords = {
                "size_demand": ["希望有更大尺寸", "需要更小尺寸", "增加尺寸选项"],
                "color_demand": ["希望增加颜色", "想要其他颜色"],
                "function_demand": ["增加功能", "希望改进", "如果能", "建议添加"],
                "packaging_demand": ["包装更好", "加强包装"],
            }
            # Frequently praised points (strengths)
            self.advantage_keywords = ["质量好", "物流快", "客服好", "尺寸合身", "颜色好看", "性价比高"]

        def extract_problems(self, text: str) -> List[Dict]:
            """Extract and categorize the problems mentioned in a review."""
            extracted_problems = []
            for problem_type, config in self.problem_categories.items():
                for keyword in config["keywords"]:
                    if keyword in text:
                        # Keep the surrounding context (10 characters on each side)
                        context = self._extract_context(text, keyword)
                        extracted_problems.append({
                            "problem_type": problem_type,
                            "problem_description": config["description"],
                            "keyword": keyword,
                            "context": context,
                        })
            # Deduplicate: one entry per category even if several keywords matched
            unique_problems = []
            problem_descriptions = set()
            for problem in extracted_problems:
                if problem["problem_description"] not in problem_descriptions:
                    problem_descriptions.add(problem["problem_description"])
                    unique_problems.append(problem)
            return unique_problems

        def extract_demands(self, text: str) -> List[Dict]:
            """Extract user demands (directions for product improvement)."""
            extracted_demands = []
            for demand_type, keywords in self.demand_keywords.items():
                for keyword in keywords:
                    if keyword in text:
                        context = self._extract_context(text, keyword)
                        extracted_demands.append({
                            "demand_type": demand_type,
                            "keyword": keyword,
                            "context": context,
                        })
            return extracted_demands

        def extract_advantages(self, text: str) -> List[Dict]:
            """Extract product/service strengths (frequently praised points)."""
            extracted_advantages = []
            for keyword in self.advantage_keywords:
                if keyword in text:
                    context = self._extract_context(text, keyword)
                    extracted_advantages.append({"advantage_keyword": keyword, "context": context})
            return extracted_advantages

        def _extract_context(self, text: str, keyword: str) -> str:
            """Return the keyword with 10 characters of context on each side."""
            index = text.find(keyword)
            start = max(0, index - 10)
            end = min(len(text), index + len(keyword) + 10)
            context = text[start:end]
            # Add ellipses to show where the context was cut
            if start > 0:
                context = "..." + context
            if end < len(text):
                context += "..."
            return context

        @staticmethod
        def _count_sorted(reviews_analysis: List[Dict], list_key: str, value_key: str) -> Dict:
            """Count values of value_key across all items under list_key, sorted by count (descending)."""
            distribution: Dict[str, int] = {}
            for review in reviews_analysis:
                for item in review.get(list_key, []):
                    distribution[item[value_key]] = distribution.get(item[value_key], 0) + 1
            return dict(sorted(distribution.items(), key=lambda x: x[1], reverse=True))

        def generate_business_insight(self, reviews_analysis: List[Dict]) -> Dict:
            """Aggregate per-review analysis results into business insights."""
            # 1. Core statistics
            total_reviews = len(reviews_analysis)
            positive_reviews = [r for r in reviews_analysis
                                if r["sentiment_result"]["sentiment_tendency"] == "positive"]
            negative_reviews = [r for r in reviews_analysis
                                if r["sentiment_result"]["sentiment_tendency"] == "negative"]
            positive_rate = len(positive_reviews) / total_reviews if total_reviews > 0 else 0.0
            # 2-4. Problem, demand, and strength distributions (sorted by frequency)
            sorted_problem_distribution = self._count_sorted(
                reviews_analysis, "extracted_problems", "problem_description")
            sorted_demand_distribution = self._count_sorted(
                reviews_analysis, "extracted_demands", "demand_type")
            sorted_advantage_distribution = self._count_sorted(
                reviews_analysis, "extracted_advantages", "advantage_keyword")
            # 5. Core improvement suggestions
            core_improvement_suggestions = self._generate_improvement_suggestions(
                sorted_problem_distribution, sorted_demand_distribution)
            return {
                "review_statistics": {
                    "total_reviews": total_reviews,
                    "positive_reviews": len(positive_reviews),
                    "negative_reviews": len(negative_reviews),
                    "positive_rate": round(positive_rate * 100, 2),
                },
                "problem_distribution": sorted_problem_distribution,
                "demand_distribution": sorted_demand_distribution,
                "advantage_distribution": sorted_advantage_distribution,
                "core_improvement_suggestions": core_improvement_suggestions,
            }

        def _generate_improvement_suggestions(self, problem_distribution: Dict,
                                              demand_distribution: Dict) -> List[str]:
            """Generate improvement suggestions from the most frequent problem and demand."""
            suggestions = []
            # Suggestion based on the most frequent problem
            if problem_distribution:
                top_problem = next(iter(problem_distribution))
                if top_problem == "Product quality issue":
                    suggestions.append("Prioritize quality control and outgoing inspection to reduce damage and defects")
                elif top_problem == "Logistics delay issue":
                    suggestions.append("Switch to carriers with more stable delivery times, or add overseas warehouses")
                elif top_problem == "Size fit issue":
                    suggestions.append("Improve the size description with a detailed size chart, or adjust the sizing to fit more users")
            # Suggestion based on the most frequent demand
            if demand_distribution:
                top_demand = next(iter(demand_distribution))
                if top_demand == "size_demand":
                    suggestions.append("Expand the size options to cover more users")
                elif top_demand == "color_demand":
                    suggestions.append("Add more color variants to match different preferences")
            return suggestions
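The frequency tallies in the aggregation step can also be written with `collections.Counter` from the standard library. The sketch below shows that alternative for the problem distribution only. The function name `tally_problems` and the sample data are hypothetical; the dictionary keys (`extracted_problems`, `problem_description`) follow the per-review structure used in this article.

```python
from collections import Counter
from typing import Dict, List


def tally_problems(reviews_analysis: List[Dict]) -> Dict[str, int]:
    """Count problem categories across reviews, most frequent first."""
    counter = Counter(
        problem["problem_description"]
        for review in reviews_analysis
        for problem in review.get("extracted_problems", [])
    )
    # most_common() sorts by count in descending order
    return dict(counter.most_common())


# Made-up analysis results, for illustration only
sample_reviews = [
    {"extracted_problems": [{"problem_description": "logistics delay"}]},
    {"extracted_problems": [{"problem_description": "logistics delay"},
                            {"problem_description": "size fit"}]},
    {"extracted_problems": []},
]
print(tally_problems(sample_reviews))
```

`Counter` removes the manual `dict.get(key, 0) + 1` bookkeeping, and `most_common(n)` gives a direct way to take the top n categories for a summary.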

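4. Paginated, rate-limited requester

To handle pagination and rate limits, the requester keeps pagination continuous, generates the signature for each request, throttles calls to the QPS limit, and retries with exponential backoff, which improves both the stability and the efficiency of the calls: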

    import hashlib
    import hmac
    import os
    import time
    from typing import Dict, List, Optional
    from urllib.parse import quote

    import requests
    from dotenv import load_dotenv
    from requests.adapters import HTTPAdapter

    # Load environment variables (avoids hard-coding credentials)
    load_dotenv()
    APP_KEY = os.getenv("ALIEXPRESS_APP_KEY")
    APP_SECRET = os.getenv("ALIEXPRESS_APP_SECRET")
    API_GATEWAY = "https://openapi.aliexpress.com/api"


    class NonRetryableAPIError(Exception):
        """API error that a retry cannot fix (signature error, timestamp skew)."""


    class RateLimitedError(Exception):
        """The API reported that the rate limit was hit."""


    class SmartReviewRequester:
        """Handles paginated review fetching, signing, rate limiting, and retries."""

        def __init__(self, app_key: Optional[str] = APP_KEY, app_secret: Optional[str] = APP_SECRET):
            if not app_key or not app_secret:
                raise ValueError("Set ALIEXPRESS_APP_KEY and ALIEXPRESS_APP_SECRET, or pass the credentials explicitly")
            self.app_key = app_key
            self.app_secret = app_secret
            self.session = self._init_session()
            self.last_request_time = 0.0  # time of the previous request (for rate limiting)
            self.qps_limit = 5            # QPS limit of the API
            self.max_page_size = 100      # at most 100 reviews per request (API cap)

        def _init_session(self) -> requests.Session:
            """Create a session with shared headers and a tuned connection pool."""
            session = requests.Session()
            session.headers.update({
                "Content-Type": "application/x-www-form-urlencoded;charset=utf-8",
                "User-Agent": "AliExpressReviewAPI/2.0 (Python/3.9; Business/ProductAnalysis)",
                "Accept": "application/json, text/plain, */*",
            })
            # Connection pooling plus transport-level retries for connection errors
            adapter = HTTPAdapter(max_retries=3, pool_connections=10, pool_maxsize=100)
            session.mount("https://", adapter)
            return session

        def generate_sign(self, params: Dict) -> str:
            """Generate the HMAC-SHA256 signature (scheme as described in this article;
            verify it against the official platform documentation)."""
            # 1. Drop the sign field and sort by parameter name (ASCII ascending)
            sorted_params = sorted((k, v) for k, v in params.items() if k != "sign")
            # 2. Join as "key=value"; values are URL-encoded (letters, digits, and -_.~ are kept)
            sign_str = "&".join(f"{k}={quote(str(v), safe='-_.~')}" for k, v in sorted_params)
            # 3. HMAC-SHA256, uppercase hexadecimal
            return hmac.new(
                self.app_secret.encode("utf-8"),
                sign_str.encode("utf-8"),
                hashlib.sha256,
            ).hexdigest().upper()

        def _check_params(self, params: Dict) -> Dict:
            """Validate parameters and fill in the public ones."""
            public_params = {
                "app_key": self.app_key,
                "timestamp": int(time.time() * 1000),  # millisecond timestamp
                "format": "json",
                "v": "2.0",
                "sign_method": "hmac-sha256",
            }
            # Merge public and business parameters (business parameters take precedence)
            all_params = {**public_params, **params}
            # Required parameters
            for param in ["product_id"]:
                if param not in all_params:
                    raise ValueError(f"Missing required parameter: {param}")
            # Pagination parameters
            page = all_params.get("page", 1)
            page_size = all_params.get("page_size", self.max_page_size)
            all_params["page"] = max(page, 1)
            all_params["page_size"] = min(page_size, self.max_page_size)
            return all_params

        def _control_rate(self):
            """Rate limiting: keep requests at or below the QPS limit."""
            interval = 1 / self.qps_limit
            elapsed = time.time() - self.last_request_time
            if elapsed < interval:
                time.sleep(interval - elapsed)
            # Record the time after any sleep so the next interval is measured correctly
            self.last_request_time = time.time()

        def request(self, api_path: str, params: Dict, retry: int = 3, delay: int = 2) -> Dict:
            """Send a request with validation, signing, rate limiting, and exponential-backoff retries."""
            base_params = self._check_params(params)  # validation errors are not retried
            url = f"{API_GATEWAY}{api_path}"
            for attempt in range(retry + 1):
                try:
                    self._control_rate()
                    # Refresh the timestamp and re-sign on every attempt
                    all_params = {**base_params, "timestamp": int(time.time() * 1000)}
                    all_params["sign"] = self.generate_sign(all_params)
                    response = self.session.post(url, data=all_params, timeout=15)
                    response.raise_for_status()
                    result = response.json()
                    error_code = str(result.get("error_code") or "")
                    if error_code:
                        error_msg = f"API error: {error_code} - {result.get('error_message')}"
                        # Error codes as used in this article
                        if error_code in ("1001", "1002"):  # signature error / timestamp skew
                            raise NonRetryableAPIError(
                                f"{error_msg}; check the credentials and clock synchronization")
                        if error_code == "429":  # rate limited
                            raise RateLimitedError(error_msg)
                        raise RuntimeError(error_msg)
                    return result
                except NonRetryableAPIError:
                    raise
                except Exception as e:
                    if attempt == retry:
                        raise Exception(f"Request failed (retries exhausted): {e}") from e
                    # Rate-limit errors wait twice as long before the next attempt
                    wait = delay * 2 if isinstance(e, RateLimitedError) else delay
                    print(f"Request failed, {retry - attempt} retries left, waiting {wait}s: {e}")
                    time.sleep(wait)
                    delay *= 2  # exponential backoff
            raise ValueError("retry must be >= 0")

        def get_review_list(self, product_id: str, page: int = 1, page_size: int = 100,
                            sort: str = "gmtCreate_desc") -> Dict:
            """Fetch one page of reviews for a product."""
            api_path = "/aliexpress.product.review.redefining.getproductreviewlist"
            params = {
                "product_id": product_id,
                "page": page,
                "page_size": page_size,
                # Sort order: gmtCreate_desc (newest first) or overallScore_desc (highest rating first)
                "sort": sort,
            }
            return self.request(api_path, params)

        def get_all_reviews(self, product_id: str, sort: str = "gmtCreate_desc") -> List[Dict]:
            """Fetch all reviews of a product by walking through the pages."""
            all_reviews: List[Dict] = []
            page = 1
            while True:
                print(f"Fetching review page {page}...")
                result = self.get_review_list(product_id, page=page,
                                              page_size=self.max_page_size, sort=sort)
                review_response = result.get(
                    "aliexpress_product_review_redefining_getproductreviewlist_response", {})
                review_result = review_response.get("result", {})
                current_reviews = review_result.get("reviewList", [])
                if not current_reviews:
                    break
                all_reviews.extend(current_reviews)
                total_count = review_result.get("totalCount", 0)
                # Stop when the reported total is reached, or when a short page marks the last page
                if (total_count and len(all_reviews) >= total_count) \
                        or len(current_reviews) < self.max_page_size:
                    break
                page += 1
            print("All reviews fetched")
            return all_reviews
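The limits stated earlier (at most 100 reviews per request, QPS ≤ 5) and the retry defaults in the requester (3 retries, 2-second initial delay, doubling each time) translate into simple arithmetic that is useful for capacity planning. The helper names below are hypothetical and exist only for this illustration.

```python
import math
from typing import List


def pages_needed(total_count: int, page_size: int = 100) -> int:
    """Number of requests needed to fetch total_count reviews."""
    return math.ceil(total_count / page_size) if total_count > 0 else 0


def min_fetch_seconds(total_count: int, page_size: int = 100, qps_limit: int = 5) -> float:
    """Lower bound on fetch time when requests are spaced at the QPS limit."""
    return pages_needed(total_count, page_size) / qps_limit


def backoff_delays(retry: int = 3, delay: int = 2) -> List[int]:
    """Waits between attempts under exponential backoff (delay doubles each time)."""
    return [delay * 2 ** i for i in range(retry)]


# 12,345 reviews -> ceil(123.45) = 124 pages -> 124 / 5 = 24.8 seconds at best
print(pages_needed(12345))       # 124
print(min_fetch_seconds(12345))  # 24.8
print(backoff_delays())          # [2, 4, 8]
```

The lower bound ignores network latency and retries, so real runs take longer; the worst-case extra wait for a single failing request with the default settings is 2 + 4 + 8 = 14 seconds.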

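III. End-to-End Workflow

The script below combines the four modules into one pipeline: review collection, multilingual parsing, sentiment analysis, business insight extraction, and result output: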

    import json
    import time

    # Assumes SmartReviewRequester, MultiLangReviewParser, ReviewSentimentAnalyzer, and
    # BusinessInsightExtractor from the sections above are defined or imported in this module.


    def main():
        # Configuration (replace with real values; environment variables are recommended)
        PRODUCT_ID = "1234567890123"  # AliExpress product ID
        SORT_TYPE = "gmtCreate_desc"  # sort order: newest first
        SAVE_PATH = "./aliexpress_product_reviews_analysis.json"  # output path

        try:
            # 1. Initialize the components
            review_requester = SmartReviewRequester()
            lang_parser = MultiLangReviewParser()
            sentiment_analyzer = ReviewSentimentAnalyzer()
            insight_extractor = BusinessInsightExtractor()

            # 2. Fetch all reviews of the product
            print(f"Fetching all reviews for product {PRODUCT_ID}...")
            all_raw_reviews = review_requester.get_all_reviews(PRODUCT_ID, sort=SORT_TYPE)
            if not all_raw_reviews:
                raise Exception("No review data was returned")
            print(f"Fetched {len(all_raw_reviews)} reviews")

            # 3. Parse and analyze the reviews in batch
            print("\nParsing reviews and analyzing sentiment...")
            reviews_analysis = []
            for raw_review in all_raw_reviews:
                # 3.1 Multilingual parsing (content + buyer information)
                content_parse_result = lang_parser.parse_review_content(raw_review)
                buyer_info = lang_parser.parse_buyer_info(raw_review)
                # 3.2 Sentiment analysis (text score + dimension scores + combined score)
                sentiment_result = sentiment_analyzer.analyze_sentiment(content_parse_result, raw_review)
                # 3.3 Insight extraction (problems + demands + strengths)
                translated_text = content_parse_result.get("translated_content", "")
                extracted_problems = insight_extractor.extract_problems(translated_text)
                extracted_demands = insight_extractor.extract_demands(translated_text)
                extracted_advantages = insight_extractor.extract_advantages(translated_text)
                # 3.4 Combine the per-review results
                reviews_analysis.append({
                    "review_id": raw_review.get("reviewId", ""),
                    "content_parse_result": content_parse_result,
                    "buyer_info": buyer_info,
                    "sentiment_result": sentiment_result,
                    "extracted_problems": extracted_problems,
                    "extracted_demands": extracted_demands,
                    "extracted_advantages": extracted_advantages,
                })

            # 4. Generate the aggregated business insights
            print("\nGenerating aggregated business insights...")
            business_insight = insight_extractor.generate_business_insight(reviews_analysis)

            # 5. Save the results
            final_result = {
                "product_info": {
                    "product_id": PRODUCT_ID,
                    "crawl_time": time.strftime("%Y-%m-%d %H:%M:%S"),
                    "sort_type": SORT_TYPE,
                },
                "business_insight": business_insight,
                "detailed_review_analysis": reviews_analysis,
            }
            with open(SAVE_PATH, "w", encoding="utf-8") as f:
                json.dump(final_result, f, ensure_ascii=False, indent=2)
            print(f"Analysis results saved to: {SAVE_PATH}")

            # 6. Print a summary of the key insights
            stats = business_insight["review_statistics"]
            print("\n=== Review insight summary ===")
            print(f"Product ID: {PRODUCT_ID}")
            print(f"Total reviews: {stats['total_reviews']}")
            print(f"Positive rate: {stats['positive_rate']}%")
            print(f"Top 3 problems: {list(business_insight['problem_distribution'].keys())[:3]}")
            print(f"Top 3 demands: {list(business_insight['demand_distribution'].keys())[:3]}")
            print(f"Top 3 strengths: {list(business_insight['advantage_distribution'].keys())[:3]}")
            print("\nCore improvement suggestions:")
            for i, suggestion in enumerate(business_insight["core_improvement_suggestions"], 1):
                print(f"{i}. {suggestion}")
        except Exception as e:
            print(f"Run failed: {e}")


    if __name__ == "__main__":
        main()

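IV. Strengths, Compliance, and Risk Control (Key to Enterprise Adoption)

1. Core strengths (compared with the conventional approaches found online)

- Multilingual parsing: automatically repairs garbled encoding, detects the language, and translates, so reviews in the mainstream cross-border languages, including less common ones, can be used;
- Multi-dimensional sentiment: goes beyond judging sentiment from the rating alone by combining the rating, the text sentiment, and per-dimension scores to catch reviews whose rating and text disagree; by the author's account this raises sentiment-analysis accuracy to above 90%;
- Structured business insights: automatically extracts problems, user demands, and product strengths from reviews, then aggregates them into insights and improvement suggestions that feed into decisions;
- Pagination and rate limiting: walks through all review pages while keeping within the QPS limit, which avoids broken pagination and throttling; by the author's account the collection success rate rises to above 95%;
- Enterprise-grade practices: credentials are managed through environment variables, results are output in a structured form, and batch processing is supported.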

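2. Compliance and risk-control notes (required reading)

- Follow the platform agreement: this approach is built on the official AliExpress Open Platform API. Complete developer verification in advance and comply with the AliExpress Open Platform Service Agreement. Do not use it for reselling data, attacking merchants, or other prohibited purposes;
- Control the call frequency: stay within the platform's QPS and daily call limits and avoid collecting reviews for large numbers of products in bursts. In production, add monitoring and alerting on the daily call volume;
- Use data properly: collected reviews may only be used for lawful business purposes (such as internal product iteration, service improvement, and market research). Do not disclose buyers' private information (such as nicknames or addresses) and do not use the data for commercial disparagement;
- Translation compliance: translated reviews are for internal analysis only. Do not publish them, to avoid infringing users' copyright;
- Avoid anti-scraping risk: obtain reviews only through the official API. Scraping the front-end review pages can trigger the platform's anti-scraping mechanisms and get the account banned.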
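The recommendation to monitor the daily call volume can be sketched as a small in-memory counter. The class name `DailyCallBudget`, the 80% warning ratio, and the status strings are all hypothetical choices for illustration; a production setup would more likely persist the counter and send the alert through an existing monitoring system.

```python
from datetime import date
from typing import Optional


class DailyCallBudget:
    """Counts API calls per calendar day and reports how close the quota is."""

    def __init__(self, daily_limit: int, warn_ratio: float = 0.8):
        self.daily_limit = daily_limit
        self.warn_ratio = warn_ratio
        self._day: Optional[date] = None
        self._count = 0

    def record_call(self, today: Optional[date] = None) -> str:
        """Count one call and return 'ok', 'warn', or 'exceeded'."""
        today = today or date.today()
        if today != self._day:
            # A new day starts a fresh counter
            self._day = today
            self._count = 0
        self._count += 1
        if self._count > self.daily_limit:
            return "exceeded"
        if self._count >= self.daily_limit * self.warn_ratio:
            return "warn"
        return "ok"


budget = DailyCallBudget(daily_limit=10)
statuses = [budget.record_call(date(2024, 1, 1)) for _ in range(11)]
print(statuses)
```

Calling `record_call()` before each API request lets the caller stop or slow down on "warn" rather than discovering the limit through failed requests.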

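V. Extensions and Further Optimization

- Batch analysis across products: integrate an asynchronous task queue (such as Celery) to collect and analyze reviews for many product IDs and produce category-level insight reports;
- Review image analysis: integrate OCR and image recognition to detect product problems in review photos (such as damage or color differences) and complement the text analysis;
- Sentiment trend monitoring over time: track how sentiment and problem types change over time to catch sudden product or service issues early;
- Visual reports: use Matplotlib or Plotly to chart the sentiment, problem, and demand distributions and make the insights easier to read;
- Alerts on abnormal reviews: send SMS or email alerts when negative reviews arrive in bulk or cluster around one problem, to shorten response time.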
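The time-based trend monitoring in the list above can be prototyped with the standard library alone. The sketch below averages the combined sentiment score per month. It assumes the per-review structure produced by this article's pipeline (`buyer_info.purchase_time` formatted as `YYYY-MM-DD HH:MM:SS` and `sentiment_result.comprehensive_sentiment_score`); the function name and the sample data are hypothetical.

```python
from collections import defaultdict
from statistics import mean
from typing import Dict, List


def monthly_sentiment_trend(reviews_analysis: List[Dict]) -> Dict[str, float]:
    """Average combined sentiment score per month (keys like '2024-01'), in date order."""
    buckets = defaultdict(list)
    for review in reviews_analysis:
        timestamp = review.get("buyer_info", {}).get("purchase_time", "")
        score = review.get("sentiment_result", {}).get("comprehensive_sentiment_score")
        # Skip reviews without a usable timestamp or score
        if len(timestamp) >= 7 and score is not None:
            buckets[timestamp[:7]].append(score)
    return {month: round(mean(scores), 2) for month, scores in sorted(buckets.items())}


# Made-up analysis results, for illustration only
sample = [
    {"buyer_info": {"purchase_time": "2024-01-05 10:00:00"},
     "sentiment_result": {"comprehensive_sentiment_score": 2.0}},
    {"buyer_info": {"purchase_time": "2024-01-20 08:30:00"},
     "sentiment_result": {"comprehensive_sentiment_score": 1.0}},
    {"buyer_info": {"purchase_time": "2024-02-02 12:00:00"},
     "sentiment_result": {"comprehensive_sentiment_score": -3.0}},
]
print(monthly_sentiment_trend(sample))
```

A sharp drop from one month to the next, as in the sample, is the kind of signal that could be wired to the alerting idea in the same list.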

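This approach moves beyond the usual "basic call plus data extraction" treatment of the AliExpress review API and focuses on multilingual data governance and business-value mining, covering the full chain from review collection and parsing to actionable insight. It balances practicality with compliance and can be applied to product iteration, service improvement, and market research, giving cross-border e-commerce teams a more precise and efficient basis for decisions.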

浙公网安备 33010602011771号
