Neural Networks

1. Building a Neural Network by Hand

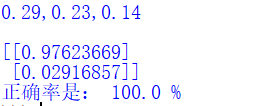
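In matrix form, the `query` and `train` methods compute the following. Here σ is the logistic function (`scipy.special.expit`), x is `inputs`, t is `targets`, W_ih and W_ho are `wih` and `who`, η is `lr`, and ⊙ is element-wise multiplication. The symbol names are shorthand introduced for this summary, not names from the code.

$$
\begin{aligned}
\mathbf{h} &= \sigma(W_{ih}\,\mathbf{x}), \qquad \mathbf{o} = \sigma(W_{ho}\,\mathbf{h}),\\
\mathbf{e}_o &= \mathbf{t} - \mathbf{o}, \qquad \mathbf{e}_h = W_{ho}^{\top}\,\mathbf{e}_o,\\
\Delta W_{ho} &= \eta\,\big(\mathbf{e}_o \odot \mathbf{o} \odot (1-\mathbf{o})\big)\,\mathbf{h}^{\top},\\
\Delta W_{ih} &= \eta\,\big(\mathbf{e}_h \odot \mathbf{h} \odot (1-\mathbf{h})\big)\,\mathbf{x}^{\top}.
\end{aligned}
$$

ΔW_ho is exactly a gradient-descent step on the squared error E = ½‖t − o‖². For the hidden layer, the code propagates the raw output error e_o back through W_hoᵀ; the exact gradient would propagate e_o ⊙ o ⊙ (1 − o) instead, so ΔW_ih is a common simplification rather than the exact gradient step.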

```python
import numpy as np
import scipy.special


class NeuralNetwork:
    """Three-layer (input, hidden, output) network with sigmoid activations."""

    def __init__(self, inputnodes, hiddennodes, outputnodes, learningrate):
        self.inodes = inputnodes
        self.hnodes = hiddennodes
        self.onodes = outputnodes
        self.lr = learningrate
        # Weights drawn from a normal distribution with mean 0 and std 1/sqrt(n)
        self.wih = np.random.normal(0.0, pow(self.hnodes, -0.5), (self.hnodes, self.inodes))
        self.who = np.random.normal(0.0, pow(self.onodes, -0.5), (self.onodes, self.hnodes))
        # Sigmoid activation function
        self.activation_function = lambda x: scipy.special.expit(x)

    def train(self, input_list, target_list):
        # Turn the input and target lists into column vectors
        inputs = np.array(input_list, ndmin=2).T
        targets = np.array(target_list, ndmin=2).T
        # Forward pass
        hidden_inputs = np.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = np.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        # Output error, and that error propagated back to the hidden layer
        output_errors = targets - final_outputs
        hidden_errors = np.dot(self.who.T, output_errors)
        # Update hidden->output weights, then input->hidden weights
        self.who += self.lr * np.dot(output_errors * final_outputs * (1.0 - final_outputs),
                                     np.transpose(hidden_outputs))
        self.wih += self.lr * np.dot(hidden_errors * hidden_outputs * (1.0 - hidden_outputs),
                                     np.transpose(inputs))

    def query(self, input_list):
        # Forward pass only
        inputs = np.array(input_list, ndmin=2).T
        hidden_inputs = np.dot(self.wih, inputs)
        hidden_outputs = self.activation_function(hidden_inputs)
        final_inputs = np.dot(self.who, hidden_outputs)
        final_outputs = self.activation_function(final_inputs)
        return final_outputs


input_nodes = 2
hidden_nodes = 100
output_nodes = 2
learning_rate = 0.3
n = NeuralNetwork(input_nodes, hidden_nodes, output_nodes, learning_rate)

# Each line of the CSV is "label,feature1,feature2,..."
with open(r"E:\人工智能\train.csv", "r") as training_data_file:
    training_data_list = training_data_file.readlines()
print(training_data_list[0])

epochs = 10
for e in range(epochs):
    for record in training_data_list:
        all_values = record.strip().split(",")
        # Rescale features from 0..255 to 0.01..1.00
        inputs = (np.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
        # Target vector: 0.99 for the true class, 0.01 for every other class
        targets = np.zeros(output_nodes) + 0.01
        targets[int(float(all_values[0]))] = 0.99
        n.train(inputs, targets)

# Evaluation (this reads the same train.csv file that was used for training)
with open(r"E:\人工智能\train.csv", "r") as test_data_file:
    test_data_list = test_data_file.readlines()

scorecard = []
for record in test_data_list:
    all_values = record.strip().split(",")
    correct_label = int(float(all_values[0]))
    inputs = (np.asarray(all_values[1:], dtype=float) / 255.0 * 0.99) + 0.01
    outputs = n.query(inputs)
    label = np.argmax(outputs)  # predicted class = index of the largest output
    scorecard.append(1 if label == correct_label else 0)
print(outputs)

scorecard_array = np.asarray(scorecard)
print("Accuracy:", (scorecard_array.sum() / scorecard_array.size) * 100, "%")
```
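One way to check that the `who` update in `train` really is gradient descent on E = ½Σ(t − o)² is a finite-difference test. The sketch below uses small hypothetical layer sizes and random data chosen only for the check; it computes the update direction the way `train` does (with the learning rate set to 1) and compares it with a numerical estimate of −∂E/∂W_ho. Only the `who` update is checked here.

```python
import numpy as np
from scipy.special import expit

# Hypothetical sizes and random data, chosen only for this check
rng = np.random.default_rng(0)
n_in, n_hid, n_out = 3, 4, 2
wih = rng.normal(0.0, n_hid ** -0.5, (n_hid, n_in))
who = rng.normal(0.0, n_out ** -0.5, (n_out, n_hid))
x = rng.random((n_in, 1))        # one input column vector
t = np.array([[0.99], [0.01]])   # target column vector


def loss(who_):
    """Squared error E = 0.5 * sum((t - o)**2) as a function of the output weights."""
    h = expit(wih @ x)
    o = expit(who_ @ h)
    return 0.5 * np.sum((t - o) ** 2)


# Update direction computed the way train() does it, with lr = 1
h = expit(wih @ x)
o = expit(who @ h)
e = t - o
update = (e * o * (1.0 - o)) @ h.T

# Central finite-difference estimate of -dE/dW_ho, one weight at a time
eps = 1e-6
numeric = np.zeros_like(who)
for i in range(n_out):
    for j in range(n_hid):
        step = np.zeros_like(who)
        step[i, j] = eps
        numeric[i, j] = -(loss(who + step) - loss(who - step)) / (2 * eps)

print("max abs difference:", np.max(np.abs(update - numeric)))
```

The printed difference should be tiny (on the order of floating-point noise), since the two quantities agree analytically.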

2. BP (Backpropagation) Neural Network

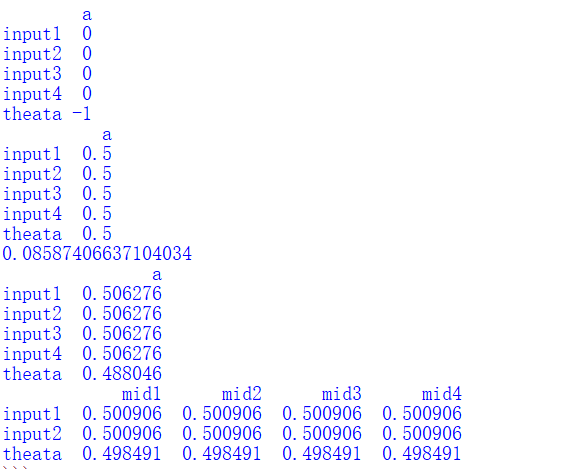
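The single training step in the listing that follows applies the standard BP rules to a 2-4-1 network with thresholds. Write x_1, x_2 for the inputs (`input1`, `input2`), x_3 = −1 for the threshold input (`theata`), v_ij for the entries of `W_mid`, h_j for the hidden outputs stored in `Out_in` (with h_5 = −1), w_j for `W_out`, y for `res`, d for the target `real` and η for `yita`; these symbols are shorthand introduced here. With σ(z) = 1/(1 + e^(−z)):

$$
\begin{aligned}
h_j &= \sigma\Big(\sum_{i=1}^{3} v_{ij}\,x_i\Big),\quad j = 1,\dots,4, \qquad y = \sigma\Big(\sum_{j=1}^{5} w_j\,h_j\Big),\\
\delta &= y\,(1-y)\,(d-y), \qquad \Delta w_j = \eta\,\delta\,h_j,\\
\delta_j &= h_j\,(1-h_j)\,w_j\,\delta, \qquad \Delta v_{ij} = \eta\,\delta_j\,x_i.
\end{aligned}
$$

Because both threshold inputs are −1, the threshold updates reduce to Δw_5 = −ηδ and Δv_3j = −ηδ_j, which is what the `theata` rows receive. In δ_j, w_j is the output weight before this step's update.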

```python
import math

from pandas import DataFrame


def sigmoid(x):  # logistic activation function
    return 1 / (1 + math.exp(-x))


# Inputs to the hidden layer and inputs to the output layer
# (the 'theata' row is the threshold input, fixed at -1)
Net_in = DataFrame(0.6, index=['input1', 'input2', 'theata'], columns=['a'])
Out_in = DataFrame(0.0, index=['input1', 'input2', 'input3', 'input4', 'theata'], columns=['a'])
Net_in.iloc[2, 0] = -1
Out_in.iloc[4, 0] = -1
real = Net_in.iloc[0, 0] ** 2 + Net_in.iloc[1, 0] ** 2  # target value x1^2 + x2^2
print(Out_in)

# Hidden-layer and output-layer weights, and their update amounts
W_mid = DataFrame(0.5, index=['input1', 'input2', 'theata'], columns=['mid1', 'mid2', 'mid3', 'mid4'])
W_out = DataFrame(0.5, index=['input1', 'input2', 'input3', 'input4', 'theata'], columns=['a'])
W_mid_delta = DataFrame(0.0, index=['input1', 'input2', 'theata'], columns=['mid1', 'mid2', 'mid3', 'mid4'])
W_out_delta = DataFrame(0.0, index=['input1', 'input2', 'input3', 'input4', 'theata'], columns=['a'])
print(W_out)

# Hidden-layer outputs
for i in range(0, 4):
    Out_in.iloc[i, 0] = sigmoid(sum(W_mid.iloc[:, i] * Net_in.iloc[:, 0]))
# Output-layer output, i.e. the network output
res = sigmoid(sum(Out_in.iloc[:, 0] * W_out.iloc[:, 0]))
error = abs(res - real)
print(error)

yita = 0.6  # learning rate
delta_out = res * (1 - res) * (real - res)  # output-layer error term

# Output-layer weight changes (threshold row: input is -1)
W_out_delta.iloc[:, 0] = yita * delta_out * Out_in.iloc[:, 0]
W_out_delta.iloc[4, 0] = -(yita * delta_out)

# Hidden-layer weight changes, using the output weights *before* they are updated
for i in range(0, 4):
    delta_mid = Out_in.iloc[i, 0] * (1 - Out_in.iloc[i, 0]) * W_out.iloc[i, 0] * delta_out
    W_mid_delta.iloc[:, i] = yita * delta_mid * Net_in.iloc[:, 0]
    W_mid_delta.iloc[2, i] = -(yita * delta_mid)

W_out = W_out + W_out_delta  # update output-layer weights
print(W_out)
W_mid = W_mid + W_mid_delta  # update hidden-layer weights
print(W_mid)
```
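The pandas listing performs a single update. To watch the error shrink, the same rules can be repeated in a loop. The sketch below is a NumPy rewrite of that update, not the author's code: it uses the same initial weights (0.5), inputs (0.6, 0.6), threshold input −1, target 0.72 and η = 0.6, while the iteration count of 200 is an arbitrary choice for illustration. Thresholds are handled by appending the constant −1 to the input and hidden vectors, mirroring the `theata` rows.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, applied element-wise."""
    return 1.0 / (1.0 + np.exp(-z))


# Same setup as the pandas listing: two inputs plus a threshold input of -1
x = np.array([0.6, 0.6, -1.0])
target = x[0] ** 2 + x[1] ** 2   # 0.72, the value the network should output
eta = 0.6
V = np.full((3, 4), 0.5)         # input -> hidden weights (last row: thresholds)
w = np.full(5, 0.5)              # hidden -> output weights (last entry: threshold)


def forward(V, w):
    h = np.append(sigmoid(x @ V), -1.0)  # hidden outputs plus threshold input -1
    y = sigmoid(h @ w)
    return h, y


errors = []
for step in range(200):  # iteration count chosen arbitrarily
    h, y = forward(V, w)
    errors.append(abs(y - target))
    delta_out = y * (1 - y) * (target - y)
    delta_hid = h[:4] * (1 - h[:4]) * w[:4] * delta_out  # uses pre-update w
    w = w + eta * delta_out * h
    V = V + eta * np.outer(x, delta_hid)

print("first error:", errors[0], "last error:", errors[-1])
```

The first recorded error is the same quantity the pandas listing prints as `error`; subsequent iterations drive it towards zero because the target 0.72 lies inside the sigmoid's (0, 1) range.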

浙公网安备 33010602011771号
