一个完整的大作业

大作业:

1.选一个自己感兴趣的主题。

2.网络上爬取相关的数据。

3.进行文本分析,生成词云。

4.对文本分析结果解释说明。

5.写一篇完整的博客,附上源代码、数据爬取及分析结果,形成一个可展示的成果。

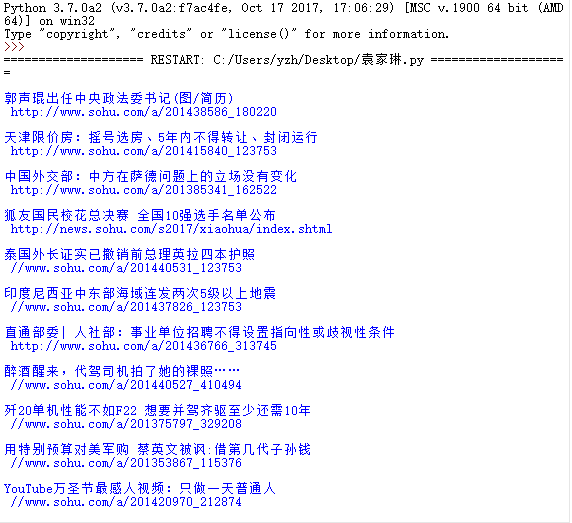

1.抓取搜狐新闻,官网:http://news.sohu.com

2.爬取的数据

import requests

from bs4 import BeautifulSoup

url = 'http://news.sohu.com/'

res = requests.get(url)

res.encoding = 'UTF-8'

soup = BeautifulSoup(res.text, 'html.parser')

for news in soup.select('.list16'):

li = news.select('li')

if len(li) > 0:

title = li[0].text

href = li[0].select('a')[0]['href']

print(title, href)

获取的相关信息

3.生成词云

from os import path

from scipy.misc import imread

import jieba

import sys

import matplotlib.pyplot as plt

from wordcloud import WordCloud, STOPWORDS, ImageColorGenerator

text = open('D:\\sohu.txt').read()

wordlist = jieba.cut(text)

wl_space_split = " ".join(wordlist)

d = path.dirname(__file__)

nana_coloring = imread(path.join(d, "D:\\0.jpg"))

my_wordcloud = WordCloud( background_color = 'white',

mask = nana_coloring,

max_words = 3000,

stopwords = STOPWORDS,

max_font_size = 70,

random_state = 50, )

text_dict = { 'you': 2993, 'and': 6625, 'in': 2767, 'was': 2525, 'the': 7845,}

my_wordcloud = WordCloud().generate_from_frequencies(text_dict)

image_colors = ImageColorGenerator(nana_coloring)

my_wordcloud.recolor(color_func=image_colors)

plt.imshow(my_wordcloud)

plt.axis("off")

plt.show()

my_wordcloud.to_file(path.join(d, "cloudimg.png"))

云图

总结:搜狐上抓取到的的主要信息是万圣节、选房的之类的。

浙公网安备 33010602011771号

浙公网安备 33010602011771号