Web Crawler Assignment

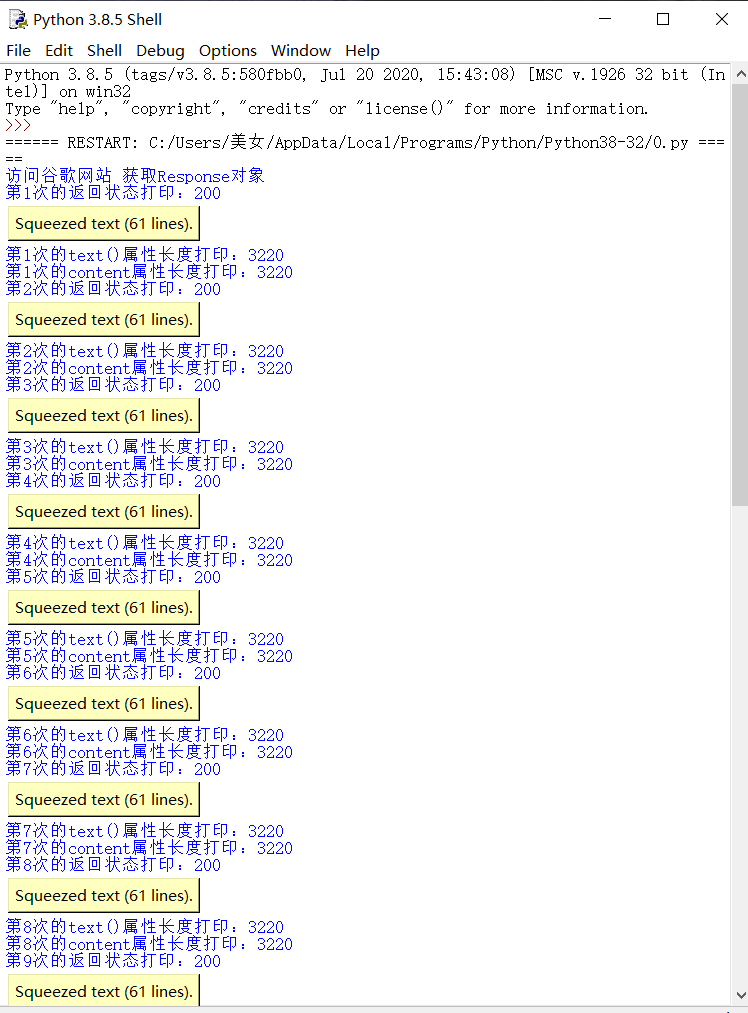

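(2) Use the get() function of the requests library to access one of the following websites 20 times. Print the returned status code and the text content, and compute the length of the page content returned by the text attribute and by the content attribute. (Students pick a page according to their student ID; this task is required for a passing grade.)

First, crawling the Google homepage.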

    import requests

    print('Visiting the Google site and fetching a Response object')

    # Send the request inside the loop so the page is really fetched 20 times.
    for x in range(1, 21):
        r = requests.get("http://www.google.cn", timeout=30)
        print('Attempt ' + str(x) + ' status code: ' + str(r.status_code))
        print('Attempt ' + str(x) + ' text: ' + r.text)
        print('Attempt ' + str(x) + ' length of text: ' + str(len(r.text)))
        print('Attempt ' + str(x) + ' length of content: ' + str(len(r.content)))

Crawl results
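The two lengths printed by the loop need not match: `r.text` is the decoded string, so `len(r.text)` counts characters, while `r.content` is the raw bytes, so `len(r.content)` counts bytes. Below is a minimal offline sketch of that difference, assuming a UTF-8 encoded body. The helper name `text_and_content_lengths` is hypothetical and no request is sent.

```python
def text_and_content_lengths(body: bytes, encoding: str = "utf-8"):
    """Return (character count, byte count) for a raw response body."""
    text = body.decode(encoding)   # plays the role of r.text after decoding
    return len(text), len(body)    # len(body) plays the role of len(r.content)


sample = "中文abc".encode("utf-8")   # stand-in for r.content
chars, size = text_and_content_lengths(sample)
print(chars, size)  # 5 9: each Chinese character takes 3 bytes in UTF-8
```

For a page that contains only ASCII characters the two numbers would be equal, so a gap between them is a quick hint that the page holds multi-byte text.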

HTML page

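    import re

    from bs4 import BeautifulSoup

    # Single quotes outside, so the double-quoted attribute values do not end the string.
    html = '<head><title>菜鸟教程(runoob.com)</title></head><body><h1>我的第一个标题</h1><p id="first">我的第一个段落。</p></body><table border="1"><tr><td>row 1,cell 1</td><td>row 1,cell 2</td></tr><tr><td>row 2,cell 1</td><td>row 2,cell 2</td></tr></table>'
    soup = BeautifulSoup(html, "html.parser")

    print(soup.head, "26")  # the head tag, followed by the literal "26"
    print(soup.body)
    print(soup.find_all(id="first"))  # tags whose id is "first"

    text = soup.text
    # A greedy match over U+4E00..U+9FFF keeps each run of Chinese characters together.
    chinese = re.findall('[\u4e00-\u9fff]+', text)
    print(chinese)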

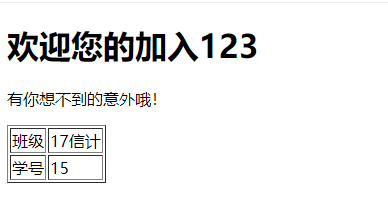
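The Chinese-character extraction step can be tried on a plain string without BeautifulSoup. This is a minimal sketch, assuming the CJK Unified Ideographs range U+4E00 to U+9FFF is the set of characters wanted. The names `CJK_RUN`, `CJK_LAZY`, and `extract_chinese` are hypothetical. It also shows that a lazy `+?` quantifier returns one character per match instead of whole runs.

```python
import re

CJK_RUN = re.compile('[\u4e00-\u9fff]+')     # greedy: whole runs of characters
CJK_LAZY = re.compile('[\u4e00-\u9fff]+?')   # lazy: stops after one character


def extract_chinese(text, pattern=CJK_RUN):
    """Return every match of the pattern in text, in order of appearance."""
    return pattern.findall(text)


sample = "菜鸟教程(runoob.com)我的第一个标题"
print(extract_chinese(sample))            # ['菜鸟教程', '我的第一个标题']
print(extract_chinese("中文", CJK_LAZY))   # ['中', '文']
```

The greedy form is usually the more readable choice when the goal is to list phrases. The lazy form is only useful when each character is needed separately.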

HTML page

    <!DOCTYPE html>
    <html lang="en">
    <head>
        <meta charset="UTF-8">
        <title>菜鸟教程(runoob.com)</title>
    </head>
    <body>
        <h1>这是个python作业</h1>
        <p id="first">这也是python作业</p>
    </body>
    <table border="1">
        <tr>
            <td>这应该算完成了吧</td>
            <td>不想写太多</td>
        </tr>
    </table>
    </html>

(4)

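    import requests
    from bs4 import BeautifulSoup

    allUniv = []


    def getHTMLText(url):
        """Return the page text, or an empty string if the request fails."""
        try:
            r = requests.get(url, timeout=30)
            r.raise_for_status()
            r.encoding = 'utf-8'
            return r.text
        except requests.RequestException:
            return ""


    def fillUnivList(soup):
        """Append the cell strings of every table row that has <td> cells."""
        for tr in soup.find_all('tr'):
            ltd = tr.find_all('td')
            if len(ltd) == 0:
                continue
            singleUniv = []
            for td in ltd:
                singleUniv.append(td.string)
            allUniv.append(singleUniv)


    def printUnivList(num):
        # chr(12288) is the full-width space; using it as fill keeps Chinese text aligned.
        # Headers: rank, university name, province/city, total score, annual fee.
        print("{1:^2}{2:{0}^10}{3:{0}^6}{4:{0}^4}{5:{0}^10}".format(chr(12288), "排名", "学校名称", "省市", "总分", "年费"))
        # Slice rather than index, so fewer than num rows does not raise IndexError.
        for u in allUniv[:num]:
            # float() parses the score without evaluating page content as code.
            print("{1:^4}{2:{0}^10}{3:{0}^5}{4:{0}^8.1f}{5:{0}^11}".format(chr(12288), u[0], u[1], u[2], float(u[3]), u[11]))


    def main():
        url = 'http://www.zuihaodaxue.com/zuihaodaxuepaiming2017.html'
        html = getHTMLText(url)
        soup = BeautifulSoup(html, "html.parser")
        fillUnivList(soup)
        printUnivList(10)


    main()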
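The format strings in the print function pass `chr(12288)` as argument 0 and reuse it as the fill character through the nested `{0}` in each field spec. Below is a minimal sketch of that padding technique on its own, with a hypothetical helper `format_row` and made-up sample values rather than data from the ranking page.

```python
FULL_WIDTH_SPACE = chr(12288)  # U+3000, as wide as one Chinese character


def format_row(rank, name, province):
    """Center three fields, padding the Chinese ones with full-width spaces."""
    # {0} inside a format spec is replaced by argument 0 before padding is applied.
    return "{1:^4}{2:{0}^10}{3:{0}^6}".format(FULL_WIDTH_SPACE, rank, name, province)


row = format_row("1", "清华大学", "北京")  # sample values for illustration only
print(row)
print(len(row))  # 20 characters: 4 + 10 + 6
```

Padding with the ordinary ASCII space would make columns drift, because in most monospaced fonts a Chinese character is about twice as wide as an ASCII space.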

Zhejiang Public Network Security Filing No. 33010602011771