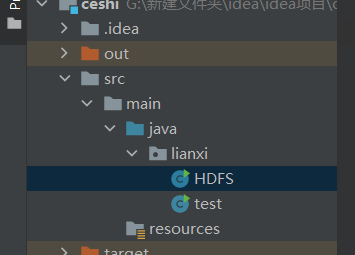

Building a Notepad Feature with HDFS

pom.xml

    <?xml version="1.0" encoding="UTF-8"?>
    <project xmlns="http://maven.apache.org/POM/4.0.0"
             xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
             xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">
      <modelVersion>4.0.0</modelVersion>

      <groupId>org.example</groupId>
      <artifactId>ceshi</artifactId>
      <version>1.0-SNAPSHOT</version>

      <properties>
        <maven.compiler.source>16</maven.compiler.source>
        <maven.compiler.target>16</maven.compiler.target>
      </properties>

      <dependencies>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-hdfs</artifactId>
          <version>3.1.3</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-client</artifactId>
          <version>3.1.3</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-common</artifactId>
          <version>3.1.3</version>
        </dependency>
        <!-- hbase-client was declared twice in the original; one declaration is enough -->
        <dependency>
          <groupId>org.apache.hbase</groupId>
          <artifactId>hbase-client</artifactId>
          <version>2.4.11</version>
        </dependency>
        <dependency>
          <groupId>org.apache.zookeeper</groupId>
          <artifactId>zookeeper</artifactId>
          <version>3.5.7</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-mapreduce-client-core</artifactId>
          <version>3.1.3</version>
        </dependency>
        <dependency>
          <groupId>org.apache.hadoop</groupId>
          <artifactId>hadoop-yarn-api</artifactId>
          <version>3.1.3</version>
        </dependency>
        <dependency>
          <groupId>commons-io</groupId>
          <artifactId>commons-io</artifactId>
          <version>2.11.0</version>
        </dependency>
        <dependency>
          <groupId>junit</groupId>
          <artifactId>junit</artifactId>
          <version>4.13.2</version>
          <scope>test</scope>
        </dependency>
      </dependencies>
    </project>

HDFS.java

    package lianxi;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataInputStream;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    import java.io.IOException;
    import java.nio.charset.StandardCharsets;
    import java.util.Scanner;

    public class HDFS {
        public static FileSystem fs = null;
        // One shared Scanner: several Scanners on System.in can swallow each other's buffered input.
        private static final Scanner sc = new Scanner(System.in);

        public static void main(String[] args) throws IOException {
            // Set the HDFS user before the FileSystem is created.
            System.setProperty("HADOOP_USER_NAME", "hadoop");
            Configuration configuration = new Configuration();
            configuration.set("fs.defaultFS", "hdfs://hadoop1:8020");
            fs = FileSystem.get(configuration);
            while (true) {
                System.out.println("Choose: 1. Create file  2. Append to file  3. Delete file  4. Move file  5. Exit");
                int n = sc.nextInt();
                if (n == 1) {
                    System.out.print("Path of the file to create: ");
                    create(sc.next());
                } else if (n == 2) {
                    System.out.print("Path of the file to append to: ");
                    update(sc.next());
                } else if (n == 3) {
                    System.out.println("Path of the file to delete:");
                    delete(sc.next());
                } else if (n == 4) {
                    System.out.println("Current path:");
                    String oldPath = sc.next();
                    String content = readFromHdfs(oldPath);
                    System.out.print("New path: ");
                    // Delete the source only if the copy was written, so nothing is lost.
                    if (create(sc.next(), content)) {
                        delete(oldPath);
                    }
                } else {
                    break;
                }
            }
            fs.close();
        }

        // Prompts for content and creates the file; does nothing if it already exists.
        public static boolean create(String path) throws IOException {
            if (fs.exists(new Path(path))) {
                System.out.println("File already exists");
                return false;
            }
            System.out.println("Enter the file content:");
            return create(path, sc.next()); // next() reads one whitespace-delimited token
        }

        // Writes content as plain UTF-8 bytes; returns false if the file already exists.
        public static boolean create(String path, String content) throws IOException {
            Path p = new Path(path);
            if (fs.exists(p)) {
                System.out.println("File already exists");
                return false;
            }
            try (FSDataOutputStream out = fs.create(p)) {
                out.write(content.getBytes(StandardCharsets.UTF_8));
            }
            System.out.println("File created");
            System.out.println(readFromHdfs(path));
            return true;
        }

        // Appends text to an existing file, then prints the whole file.
        public static void update(String path) throws IOException {
            System.out.print("Content to append: ");
            String s = sc.next();
            try (FSDataOutputStream append = fs.append(new Path(path))) {
                append.write(s.getBytes(StandardCharsets.UTF_8));
            }
            System.out.println(readFromHdfs(path));
        }

        public static void delete(String w) throws IOException {
            // Recursive delete: a directory is removed together with its contents.
            if (fs.delete(new Path(w), true)) {
                System.out.println("Deleted");
            } else {
                System.out.println("Delete failed or path does not exist");
            }
        }

        // Reads the whole file as UTF-8 text.
        public static String readFromHdfs(String filePath) throws IOException {
            try (FSDataInputStream in = fs.open(new Path(filePath))) {
                return new String(in.readAllBytes(), StandardCharsets.UTF_8);
            }
        }
    }
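The notepad appends text to an existing file, so how each string is encoded matters when the file is read back. The sketch below compares `writeUTF`/`readUTF` with plain UTF-8 bytes. It uses only in-memory `java.io` streams. The class and method names are hypothetical and not part of the post. Since `FSDataOutputStream` and `FSDataInputStream` extend `DataOutputStream` and `DataInputStream`, the same behaviour should carry over to HDFS streams, but that is an assumption this sketch does not test against a cluster.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.DataInputStream;
import java.io.DataOutputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;

public class AppendEncodingDemo {
    // Simulates "create, then append" with writeUTF, then reads back with a single readUTF call.
    static String roundTripWithWriteUTF(String first, String appended) throws IOException {
        ByteArrayOutputStream file = new ByteArrayOutputStream();
        try (DataOutputStream out = new DataOutputStream(file)) {
            out.writeUTF(first);    // 2-byte length prefix followed by the encoded string
            out.writeUTF(appended); // a second, separate length-prefixed record
        }
        try (DataInputStream in = new DataInputStream(new ByteArrayInputStream(file.toByteArray()))) {
            return in.readUTF();    // returns only the first record
        }
    }

    // Simulates "create, then append" with plain UTF-8 bytes and reads the whole content back.
    static String roundTripWithPlainBytes(String first, String appended) throws IOException {
        ByteArrayOutputStream file = new ByteArrayOutputStream();
        file.write(first.getBytes(StandardCharsets.UTF_8));
        file.write(appended.getBytes(StandardCharsets.UTF_8));
        return new String(file.toByteArray(), StandardCharsets.UTF_8);
    }

    public static void main(String[] args) throws IOException {
        System.out.println(roundTripWithWriteUTF("hello", "world"));   // prints "hello"
        System.out.println(roundTripWithPlainBytes("hello", "world")); // prints "helloworld"
    }
}
```

With `writeUTF`, a single `readUTF` call after an append still returns only the first string written, and a file produced this way also carries the length prefixes if it is viewed with `hdfs dfs -cat`. Plain UTF-8 bytes concatenate naturally, which suits a notepad that grows by appending.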

test.java

    package lianxi;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.FSDataOutputStream;
    import org.apache.hadoop.fs.FileSystem;
    import org.apache.hadoop.fs.Path;

    import java.io.BufferedReader;
    import java.io.BufferedWriter;
    import java.io.InputStreamReader;
    import java.io.OutputStreamWriter;
    import java.nio.charset.StandardCharsets;

    public class test {
        public static FileSystem fs = null;

        public static void main(String[] args) throws Exception {
            System.setProperty("HADOOP_USER_NAME", "hadoop");
            Configuration conf = new Configuration();
            conf.set("fs.defaultFS", "hdfs://hadoop1:8020");
            fs = FileSystem.get(conf);
            if (!fs.exists(new Path("/lyh"))) {
                // create() makes an empty file, not a directory; close the returned stream.
                fs.create(new Path("/lyh")).close();
            }
            create();
            copy();
            fs.close();
        }

        public static void create() throws Exception {
            Path path = new Path("/ceshi/hdfstest1.txt");
            if (!fs.exists(path)) {
                try (FSDataOutputStream output = fs.create(path)) {
                    // Encode explicitly so the result does not depend on the platform charset.
                    output.write("20203959 李迎辉 HDFS课堂测试".getBytes(StandardCharsets.UTF_8));
                }
                System.out.println("Created hdfstest1 successfully");
            } else {
                System.out.println("Failed to create hdfstest1 (file already exists)");
            }
        }

        public static void copy() throws Exception {
            // Note: create() writes under /ceshi but this method reads from /test, so
            // /test/hdfstest1.txt must already exist or open() throws FileNotFoundException.
            Path src = new Path("/test/hdfstest1.txt");
            Path dst = new Path("/test/hdfstest2.txt");
            if (fs.exists(dst)) {
                System.out.println("Failed to create hdfstest2 (file already exists)");
                return;
            }
            try (BufferedReader in = new BufferedReader(
                         new InputStreamReader(fs.open(src), StandardCharsets.UTF_8));
                 BufferedWriter out = new BufferedWriter(
                         new OutputStreamWriter(fs.create(dst), StandardCharsets.UTF_8))) {
                String line = in.readLine(); // copies only the first line, as in the original
                if (line != null) {
                    out.write(line);
                }
            }
            System.out.println("Created hdfstest2 successfully");
        }
    }
