爬虫综合大作业

本次作业爬取的是最近上映的很火热的电影《反贪风暴》。希望可以爬取一些有意义的东西。

最新电影票房排行明细:

Scrapy使用的基本流程:

- 引擎从调度器中取出一个链接(URL)用于接下来的抓取

- 引擎把URL封装成一个请求(Request)传给下载器

- 下载器把资源下载下来,并封装成应答包(Response)

- 爬虫解析Response

- 解析出实体(Item),则交给实体管道进行进一步的处理

- 解析出的是链接(URL),则把URL交给调度器等待抓取

一.把爬取的内容保存取MySQL数据库

主要代码如下:

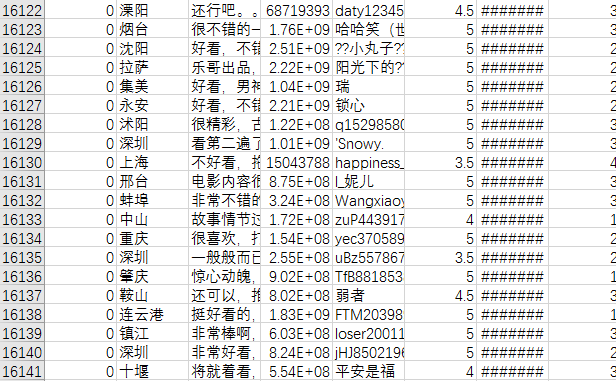

城市,评论,号码,昵称,评论时间,用户等级

import scrapyclass MaoyanItem(scrapy.Item): # define the fields for your item here like: # name = scrapy.Field() city = scrapy.Field() # 城市 content = scrapy.Field() # 评论 user_id = scrapy.Field() # 用户id nick_name = scrapy.Field() # 昵称 score = scrapy.Field() # 评分 time = scrapy.Field() # 评论时间 user_level = scrapy.Field() # 用户等级comment.py

import scrapyimport randomfrom scrapy.http import Requestimport datetimeimport jsonfrom maoyan.items import MaoyanItemclass CommentSpider(scrapy.Spider): name = 'comment' allowed_domains = ['maoyan.com'] uapools = [ 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/14.0.835.163 Safari/535.1', 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:6.0) Gecko/20100101 Firefox/6.0', 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/534.50 (KHTML, like Gecko) Version/5.1 Safari/534.50', 'Opera/9.80 (Windows NT 6.1; U; zh-cn) Presto/2.9.168 Version/11.50', 'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 6.1; WOW64; Trident/4.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; .NET4.0C; InfoPath.3)', 'Mozilla/4.0 (compatible; MSIE 8.0; Windows NT 5.1; Trident/4.0; GTB7.0)', 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 5.1)', 'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1; SV1)', 'Mozilla/5.0 (Windows; U; Windows NT 6.1; ) AppleWebKit/534.12 (KHTML, like Gecko) Maxthon/3.0 Safari/534.12', 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E)', 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E; SE 2.X MetaSr 1.0)', 'Mozilla/5.0 (Windows; U; Windows NT 6.1; en-US) AppleWebKit/534.3 (KHTML, like Gecko) Chrome/6.0.472.33 Safari/534.3 SE 2.X MetaSr 1.0', 'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E)', 'Mozilla/5.0 (Windows NT 6.1) AppleWebKit/535.1 (KHTML, like Gecko) Chrome/13.0.782.41 Safari/535.1 QQBrowser/6.9.11079.201', 'Mozilla/4.0 (compatible; MSIE 7.0; Windows NT 6.1; WOW64; Trident/5.0; SLCC2; .NET CLR 2.0.50727; .NET CLR 3.5.30729; .NET CLR 3.0.30729; Media Center PC 6.0; InfoPath.3; .NET4.0C; .NET4.0E) QQBrowser/6.9.11079.201', 'Mozilla/5.0 (compatible; MSIE 9.0; Windows NT 6.1; WOW64; Trident/5.0)', 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/71.0.3578.80 Safari/537.36', 'Mozilla/5.0 (Windows NT 6.1; WOW64; rv:34.0) Gecko/20100101 Firefox/34.0' ] thisua = random.choice(uapools) header = {'User-Agent': thisua} current_time = datetime.datetime.now().strftime('%Y-%m-%d %H:%M:%S') current_time = '2019-04-4 18:50:22' end_time = '2019-04-4 00:08:00' # 电影上映时间 url = 'http://m.maoyan.com/mmdb/comments/movie/248172.json?_v_=yes&offset=0&startTime=' +current_time.replace(' ','%20') def start_requests(self): current_t = str(self.current_time) if current_t > self.end_time: try: yield Request(self.url, headers=self.header, callback=self.parse) except Exception as error: print('请求1出错-----' + str(error)) else: print('全部有关信息已经搜索完毕') def parse(self, response): item = MaoyanItem() data = response.body.decode('utf-8', 'ignore') json_data = json.loads(data)['cmts'] count = 0 for item1 in json_data: if 'cityName' in item1 and 'nickName' in item1 and 'userId' in item1 and 'content' in item1 and 'score' in item1 and 'startTime' in item1 and 'userLevel' in item1: try: city = item1['cityName'] comment = item1['content'] user_id = item1['userId'] nick_name = item1['nickName'] score = item1['score'] time = item1['startTime'] user_level = item1['userLevel'] item['city'] = city item['content'] = comment item['user_id'] = user_id item['nick_name'] = nick_name item['score'] = score item['time'] = time item['user_level'] = user_level yield item count += 1 if count >= 15: temp_time = item['time'] current_t = datetime.datetime.strptime(temp_time, '%Y-%m-%d %H:%M:%S') + datetime.timedelta( seconds=-1) current_t = str(current_t) if current_t > self.end_time: url1 = 'http://m.maoyan.com/mmdb/comments/movie/248172.json?_v_=yes&offset=0&startTime=' + current_t.replace( ' ', '%20') yield Request(url1, headers=self.header, callback=self.parse) else: print('全部有关信息已经搜索完毕') except Exception as error: print('提取信息出错1-----' + str(error)) else: print('信息不全,已滤除')pipelines文件

import pandas as pdclass MaoyanPipeline(object): def process_item(self, item, spider): dict_info = {'city': item['city'], 'content': item['content'], 'user_id': item['user_id'], 'nick_name': item['nick_name'], 'score': item['score'], 'time': item['time'], 'user_level': item['user_level']} try: data = pd.DataFrame(dict_info, index=[0]) # 为data创建一个表格形式 ,注意加index = [0] data.to_csv('G:\info.csv', header=False, index=True, mode='a', encoding='utf_8_sig') # 模式:追加,encoding = 'utf-8-sig' except Exception as error: print('写入文件出错-------->>>' + str(error)) else: print(dict_info['content'] + '---------->>>已经写入文件') 最后爬完的数据1.34M,16732条数据。显示如下所示:

浙公网安备 33010602011771号

浙公网安备 33010602011771号