Daily Summary 11.14

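(2) Write a class "MyFSDataInputStream" that extends "org.apache.hadoop.fs.FSDataInputStream".

Requirement: implement a method "readLine()" that reads a specified HDFS file line by line. When the end of the file is reached it returns null; otherwise it returns the text of one line.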

First, start the HDFS cluster:

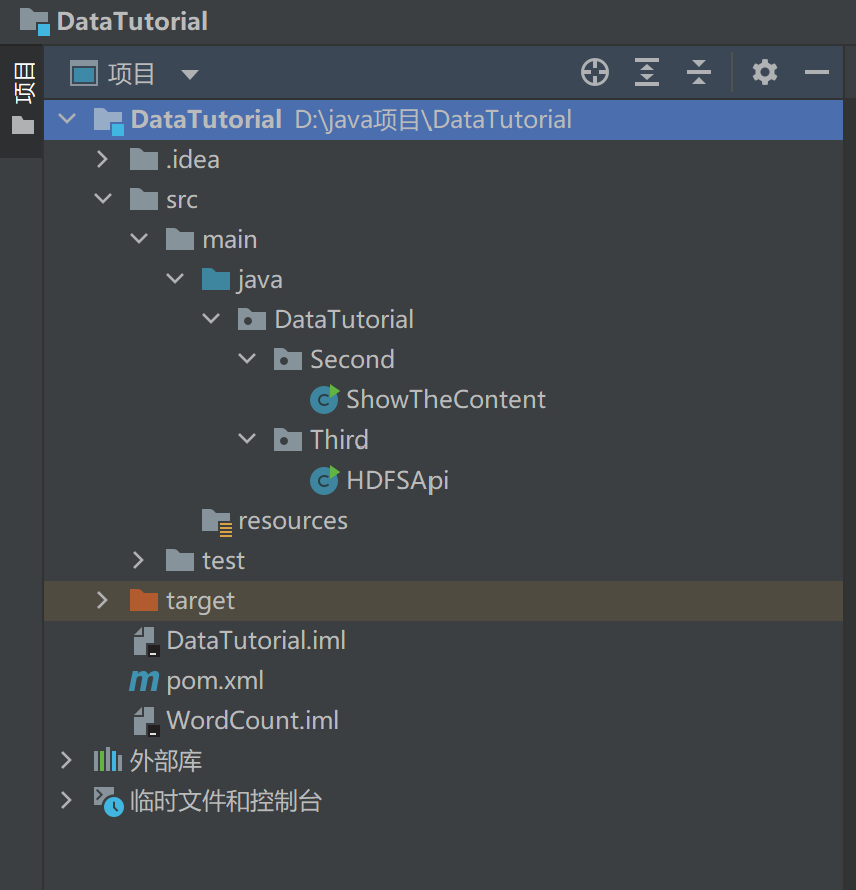

Next, create a Maven project.

The MyFSDataInputStream class:

```java
package DataTutorial.Second;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.FSDataInputStream;
import org.apache.hadoop.fs.FileSystem;
import org.apache.hadoop.fs.Path;

import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStream;
import java.io.InputStreamReader;
import java.nio.charset.StandardCharsets;

public class MyFSDataInputStream extends FSDataInputStream {

    // FSDataInputStream requires the wrapped stream to be Seekable and PositionedReadable
    public MyFSDataInputStream(InputStream in) {
        super(in);
    }

    /**
     * Line-by-line reading: read one character at a time and stop at "\n".
     * Returns the line without its terminator, or null at end of file.
     */
    public static String readLine(BufferedReader br) throws IOException {
        StringBuilder sb = new StringBuilder(); // grows as needed, so long lines cannot overflow a fixed buffer
        boolean readAny = false;
        int ch;
        while ((ch = br.read()) != -1) {
            readAny = true;
            if (ch == '\n') {
                break;
            }
            sb.append((char) ch);
        }
        if (!readAny) {
            return null; // end of file reached before any character was read
        }
        // Drop a trailing '\r' so Windows-style line endings are handled too
        int len = sb.length();
        if (len > 0 && sb.charAt(len - 1) == '\r') {
            sb.setLength(len - 1);
        }
        return sb.toString();
    }

    /**
     * Print the file content line by line
     */
    public static void cat(Configuration conf, String remoteFilePath) throws IOException {
        FileSystem fs = FileSystem.get(conf);
        Path remotePath = new Path(remoteFilePath);
        try (FSDataInputStream in = fs.open(remotePath);
             BufferedReader br = new BufferedReader(new InputStreamReader(in, StandardCharsets.UTF_8))) {
            String line;
            while ((line = MyFSDataInputStream.readLine(br)) != null) {
                System.out.println(line);
            }
        }
    }

    /**
     * Main function
     */
    public static void main(String[] args) {
        Configuration conf = new Configuration();
        conf.setLong("ipc.maximum.data.length", 67108864); // set to a suitable value (64 MB)
        conf.set("fs.defaultFS", "hdfs://192.168.88.101:8020"); // replaces the deprecated "fs.default.name"
        String remoteFilePath = "/test/test2.txt"; // HDFS path
        try {
            MyFSDataInputStream.cat(conf, remoteFilePath);
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```
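A side note on the design: in the JDK, `DataInputStream.readLine()` is declared `final` (and deprecated), so a subclass of FSDataInputStream cannot override it, which is presumably why the line-reading helper above is a static method taking a BufferedReader. To check that kind of character-by-character loop without a running cluster, the minimal sketch below runs it against an in-memory `StringReader`. The class name `ReadLineCheck`, the helper `readAll` and the sample strings are hypothetical; the loop is a variant that uses a growable `StringBuilder` instead of a fixed 1024-char array and strips the line terminator, which is an assumption about the intended "return one line of text" behavior.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;

public class ReadLineCheck {

    // Character-by-character readLine loop, copied here so it runs without Hadoop (hypothetical test harness)
    static String readLine(BufferedReader br) throws IOException {
        StringBuilder sb = new StringBuilder();
        boolean readAny = false;
        int ch;
        while ((ch = br.read()) != -1) {
            readAny = true;
            if (ch == '\n') {
                break; // end of the current line
            }
            sb.append((char) ch);
        }
        if (!readAny) {
            return null; // nothing left: end of input
        }
        int len = sb.length();
        if (len > 0 && sb.charAt(len - 1) == '\r') {
            sb.setLength(len - 1); // strip the '\r' of a "\r\n" terminator
        }
        return sb.toString();
    }

    // Collects every line until readLine returns null
    static List<String> readAll(String text) throws IOException {
        List<String> lines = new ArrayList<>();
        try (BufferedReader br = new BufferedReader(new StringReader(text))) {
            String line;
            while ((line = readLine(br)) != null) {
                lines.add(line);
            }
        }
        return lines;
    }

    public static void main(String[] args) throws IOException {
        System.out.println(readAll("first\r\n\nlast line without newline"));
    }
}
```

With this loop an empty line comes back as `""`, a final line without a trailing newline is still returned, and a trailing newline does not produce an extra empty line.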

(3) Using the Java documentation or other resources, write a program with "java.net.URL" and "org.apache.hadoop.fs.FsUrlStreamHandlerFactory" that prints the text of a specified HDFS file to the terminal.

```java
package DataTutorial.Third;

import org.apache.hadoop.fs.*;
import org.apache.hadoop.io.IOUtils;

import java.io.*;
import java.net.URL;

public class HDFSApi {

    static {
        // Register Hadoop's handler factory so java.net.URL understands hdfs:// URLs
        // (a factory can be set only once per JVM)
        URL.setURLStreamHandlerFactory(new FsUrlStreamHandlerFactory());
    }

    /**
     * Main function
     */
    public static void main(String[] args) throws Exception {
        String remoteFilePath = "hdfs://192.168.88.101:8020/test/test2.txt"; // HDFS file
        InputStream in = null;
        try {
            /* Open a data stream through the URL object and read data from it */
            in = new URL(remoteFilePath).openStream();
            // Copy to the terminal with a 4096-byte buffer; false = do not close the streams here
            IOUtils.copyBytes(in, System.out, 4096, false);
        } finally {
            IOUtils.closeStream(in);
        }
    }
}
```
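The static block works because `java.net.URL` asks the registered `URLStreamHandlerFactory` which handler should serve each protocol, and FsUrlStreamHandlerFactory supplies Hadoop's handler for file-system schemes such as `hdfs`. The minimal sketch below illustrates the same mechanism without a cluster by registering a toy in-memory protocol. The `mem` scheme, the `FILES` map, the class name `UrlHandlerDemo` and its `read` method are all hypothetical, chosen only for illustration; they are not part of Hadoop.

```java
import java.io.ByteArrayInputStream;
import java.io.FileNotFoundException;
import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLStreamHandler;
import java.nio.charset.StandardCharsets;
import java.util.Map;

public class UrlHandlerDemo {

    // Hypothetical in-memory "files" served by the toy "mem" protocol
    static final Map<String, String> FILES = Map.of("/test/test2.txt", "hello\nhdfs\n");

    static {
        // Same registration pattern as HDFSApi; like there, this may be done only once per JVM
        URL.setURLStreamHandlerFactory(protocol -> {
            if (!"mem".equals(protocol)) {
                return null; // null: fall back to the JDK's built-in handlers
            }
            return new URLStreamHandler() {
                @Override
                protected URLConnection openConnection(URL u) {
                    return new URLConnection(u) {
                        @Override
                        public void connect() {
                            // nothing to connect to: the data is already in memory
                        }

                        @Override
                        public InputStream getInputStream() throws IOException {
                            String content = FILES.get(u.getPath());
                            if (content == null) {
                                throw new FileNotFoundException(u.getPath());
                            }
                            return new ByteArrayInputStream(content.getBytes(StandardCharsets.UTF_8));
                        }
                    };
                }
            };
        });
    }

    // Opens the URL exactly as HDFSApi does and returns its whole content as text
    static String read(String spec) throws IOException {
        try (InputStream in = new URL(spec).openStream()) {
            return new String(in.readAllBytes(), StandardCharsets.UTF_8);
        }
    }

    public static void main(String[] args) throws IOException {
        System.out.print(read("mem://localhost/test/test2.txt"));
    }
}
```

Because `URL.setURLStreamHandlerFactory` throws an `Error` if a factory has already been set, putting the call in a static initializer (as HDFSApi does) keeps it to a single registration per class load.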

浙公网安备 33010602011771号