day04

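Contents

- Logging in to cnblogs with selenium

- Semi-automatic upvoting on Chouti

- Using XPath

- selenium action chains

- Automatic login to 12306

- Using a captcha-solving platform

- Automatic login with a captcha-solving platform

- Scraping JD product information with selenium

- Introduction to scrapy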

Logging in to cnblogs with selenium

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.chrome.options import Options

"""

r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe"

"""

options = Options()

options.add_argument("--disable-blink-features=AutomationControlled")

s = Service(r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe")

bro = webdriver.Chrome(service=s, options=options)

bro.implicitly_wait(10)  # implicit wait: element lookups keep retrying for up to 10 seconds

bro.get("https://www.cnblogs.com/")

bro.find_element(By.LINK_TEXT, "登录").click()

# Click the second third-party sign-in button (the QQ login, judging by the iframe shown below)
bro.find_element(By.CSS_SELECTOR,
                 "body > app-root > app-sign-in-layout > div > div > app-sign-in > app-content-container > div > div > div > div > app-external-sign-in-providers > div > button:nth-child(2) > span.mat-button-wrapper > img").click()

"""

<iframe frameborder="0" width="407" height="331" id="ptlogin_iframe" name="ptlogin_iframe" src="https://xui.ptlogin2.qq.com/cgi-bin/xlogin?appid=716027609&daid=383&style=33&login_text=%E7%99%BB%E5%BD%95&hide_title_bar=1&hide_border=1&target=self&s_url=https%3A%2F%2Fgraph.qq.com%2Foauth2.0%2Flogin_jump&pt_3rd_aid=101880508&pt_feedback_link=https%3A%2F%2Fsupport.qq.com%2Fproducts%2F77942%3FcustomInfo%3D.appid101880508&theme=2&verify_theme="></iframe>

<span class="qrlogin_img_out" onmouseover="pt.plogin.showQrTips();" onmouseout="pt.plogin.hideQrTips();"></span>

<a class="link" hidefocus="true" id="switcher_plogin" href="javascript:void(0);" tabindex="8">密码登录</a>

"""

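res1 = bro.current_window_handle  # handle of the current window
hels_list = bro.window_handles  # handles of all open windows
# The third-party login opens in a new window: switch to the handle that is not the current one
for i in hels_list:
    if i != res1:
        bro.switch_to.window(i)
bro.maximize_window()
# The login form lives inside an iframe, so switch into it before locating elements
bro.switch_to.frame("ptlogin_iframe")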

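# Click the "密码登录" (password login) link through JavaScript instead of a native click
res = bro.find_element(By.ID, "switcher_plogin")
bro.execute_script("arguments[0].click()", res)
bro.find_element(By.ID, "u").send_keys("your_qq_number")  # placeholder: use your own account
bro.find_element(By.ID, "p").send_keys("your_password")  # placeholder: never publish real credentials
bro.find_element(By.ID, "p").send_keys(Keys.ENTER)
time.sleep(3)
bro.close()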
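The loop above reads window_handles once and assumes the login window already exists at that moment. As a hedged sketch of a more tolerant variant, the helper below polls until a handle that was not known before shows up. `wait_for_new_handle`, its parameters, and the stand-in `fake_handles` are illustrative names, not part of selenium; with a real driver one would presumably pass `lambda: bro.window_handles`.

```python
import time


def wait_for_new_handle(get_handles, known_handles, timeout=10.0, interval=0.2):
    """Poll get_handles() until it returns a handle not in known_handles.

    get_handles is any zero-argument callable returning a list of handles,
    for example `lambda: bro.window_handles` (hypothetical usage).
    Returns the new handle, or None if none appears before the timeout.
    """
    known = set(known_handles)
    deadline = time.monotonic() + timeout
    while True:
        for handle in get_handles():
            if handle not in known:
                return handle
        if time.monotonic() >= deadline:
            return None
        time.sleep(interval)


# Stand-in for a browser whose popup only appears on the third poll.
_polls = {"count": 0}


def fake_handles():
    _polls["count"] += 1
    if _polls["count"] < 3:
        return ["main"]
    return ["main", "popup"]


new_handle = wait_for_new_handle(fake_handles, ["main"], timeout=2.0, interval=0.01)
print(new_handle)
```

The returned handle can then be passed to `bro.switch_to.window(...)`; a return value of None signals that the popup never opened.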

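Semi-automatic upvoting on Chouti

- Log in semi-automatically with selenium, then save the cookies

- With the requests module: work out the URL of the upvote request, then send that request with the saved cookies attached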

import json

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.service import Service

"""

r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe"

"""

# Step 1: semi-automatic login with selenium; saves the cookies to cookies.json
# s = Service(r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe")
# bro = webdriver.Chrome(service=s)
# bro.implicitly_wait(10)
# bro.get("https://dig.chouti.com/")
#
# res = bro.find_element(By.LINK_TEXT, "登录")
# bro.execute_script("arguments[0].click()", res)
#
# bro.find_element(By.NAME, "phone").send_keys("")
# bro.find_element(By.NAME, "password").send_keys("")
#
# bro.find_element(By.XPATH, "/html/body/div[4]/div/div[4]/div[4]/button").click()
# input(":")  # pause here, finish any manual verification in the browser, then press Enter
# print("continuing")
# bro.refresh()
# with open("cookies.json", "w", encoding="utf8") as f:
#     json.dump(bro.get_cookies(), f)
#
# bro.close()

import requests

# Step 2: send requests with the saved cookies
# Send a User-Agent header; without it the site returns no data (an anti-scraping measure)
headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/111.0.0.0 Safari/537.36'
}
# URL that returns the article list
url = 'https://dig.chouti.com/top/24hr?_=1679311149916'
# Parse the response as JSON, shaped like {code: 200, data: [{id: xx, name: xx}]}
res = requests.get(url=url, headers=headers).json()

# Upvote endpoint: https://dig.chouti.com/link/vote
# Form data expected by the upvote endpoint
payload = {'linkId': '38116195'}

# selenium saved the cookies as a list of dicts, which requests cannot use directly.
# Keep only the name and value of each cookie and build a {name: value} dict.
with open("cookies.json", "r", encoding="utf8") as f:
    data = json.load(f)
cookies = {i["name"]: i["value"] for i in data}

# Read the article ids, then send the upvote request with the cookies attached
# print(type(cookies))
# for i in res.get('data'):
#     print(i.get("id"))
# res1 = requests.post(url='https://dig.chouti.com/link/vote', headers=headers, data={"linkId": "38116629"},
#                      cookies=cookies)
# res1 = requests.post(url='https://dig.chouti.com/link/vote', headers=headers,cookies=cookies)
# print(res1.text)
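The cookie conversion described in the comments above can be isolated into two small helpers. This is a minimal sketch: `cookies_to_dict` and `cookie_header` are hypothetical names, and the sample data is made up to mirror the list-of-dicts shape that selenium's get_cookies() returns. The dict form is what the `cookies=` argument of requests accepts; the header form is an alternative for cases where a raw Cookie header is needed.

```python
def cookies_to_dict(cookie_list):
    """Convert selenium-style cookies (a list of dicts) into {name: value}."""
    return {cookie["name"]: cookie["value"] for cookie in cookie_list}


def cookie_header(cookie_dict):
    """Build the value of a Cookie request header from a {name: value} dict."""
    return "; ".join(f"{name}={value}" for name, value in cookie_dict.items())


# Made-up sample in the shape returned by driver.get_cookies()
sample = [
    {"name": "token", "value": "abc123", "domain": ".example.com", "path": "/"},
    {"name": "uid", "value": "42", "domain": ".example.com", "path": "/"},
]

jar = cookies_to_dict(sample)
print(jar)
print(cookie_header(jar))
```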

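Using XPath

Every parser has its own lookup methods:

- bs4: find and find_all

- selenium: find_element and find_elements

- lxml: another parser, which supports XPath and CSS selectors

Almost all of these parsers also support two common selector languages: CSS and XPath.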

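What is XPath

XPath, the XML Path Language, is a language for addressing parts of an XML document.

/ at the start of an expression, selects from the document root; between steps, selects direct children

/div the div that is a direct child of the current context (the root when the expression starts with it)

// recursive search through all descendants

//div recursive search for every div

@ selects an attribute

. the current node

.. the parent node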
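To make these symbols concrete, here is a small sketch. Note the swap: it uses the standard library's xml.etree.ElementTree rather than lxml so that it runs without extra installs. ElementTree supports only a subset of XPath (for instance no text() and no contains()), and its paths must be relative, hence the leading dot. The sample markup is made up for illustration.

```python
import xml.etree.ElementTree as ET

SAMPLE = """
<html>
  <body>
    <div id="images">
      <a href="image1.html">Name: My image 1</a>
      <a href="image2.html">Name: My image 2</a>
      <a href="image3.html" name="items">Name: My image 3</a>
    </div>
  </body>
</html>
"""

root = ET.fromstring(SAMPLE)  # root is the <html> element

# // : recursive search; ".//a" finds every <a> below the root
hrefs = [a.get("href") for a in root.findall(".//a")]

# [@attr='value'] : filter by attribute value
second = root.find(".//a[@href='image2.html']")

# .. : step up to the parent of the matched node
parent = root.find(".//a[@href='image1.html']/..")

# [n] : positions are 1-based; last() is the final sibling with that tag
first = root.find(".//div/a[1]")
last = root.find(".//div/a[last()]")
second_to_last = root.find(".//div/a[last()-1]")

print(hrefs)
print(second.text, parent.get("id"))
print(first.get("href"), last.get("href"), second_to_last.get("href"))
```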

# Sample document used by the XPath examples below

doc = '''

<html>

<head>

<base href='http://example.com/' />

<title>Example website</title>

</head>

<body>

<div id='images'>

<a href='image1.html'>Name: My image 1 <br /><img src='image1_thumb.jpg'/></a>

<a href='image2.html'>Name: My image 2 <br /><img src='image2_thumb.jpg' /></a>

<a href='image3.html'>Name: My image 3 <br /><img src='image3_thumb.jpg' /></a>

<a href='image4.html'>Name: My image 4 <br /><img src='image4_thumb.jpg' /></a>

<a href='image5.html' class='li li-item' name='items'>Name: My image 5 <br /><img src='image5_thumb.jpg' /></a>

<a href='image6.html' name='items'><span><h5>test</h5></span>Name: My image 6 <br /><img src='image6_thumb.jpg' /></a>

</div>

</body>

</html>

'''

from lxml import etree

# html = etree.HTML(doc)
# html=etree.parse('search.html',etree.HTMLParser())

# 1 All nodes
# a=html.xpath('//*')
# 2 A specific node (the result is a list)
# a=html.xpath('//head')
# 3 Children and descendants
# a=html.xpath('//div/a')
# a=html.xpath('//body/a')  # no results: a is not a direct child of body
# a = html.xpath('//body//a')
# 4 Parent node
# a=html.xpath('/html/body')
# a=html.xpath('//body//a[@href="image1.html"]/..')  # a[@href="image1.html"] matches by attribute; .. moves up one level
# a=html.xpath('//body//a[1]/..')  # positions start at 1
# The parent axis does the same thing
# a=html.xpath('//body//a[1]/parent::*')
# a=html.xpath('//body//a[1]/parent::p')  # empty: the parent is a div, not a p
# a=html.xpath('//body//a[1]/parent::div')
# 5 Attribute matching
# a=html.xpath('//body//a[@href="image1.html"]')
# 6 Getting text with /text()
# a=html.xpath('//body//a[@href="image1.html"]/text()')
# 7 Getting attribute values
# a=html.xpath('//body//a/@href')
# # Note: positions start at 1, not 0
# a=html.xpath('//body//a[1]/@href')

# 8 Matching an attribute that holds several values
# When an a tag has several classes, an exact match fails; use contains instead
# a=html.xpath('//body//a[@class="li"]')  # matches nothing
# a=html.xpath('//body//a[contains(@class,"li")]')
# a=html.xpath('//body//a[contains(@class,"li")]/text()')
# 9 Matching on several attributes
# a=html.xpath('//body//a[contains(@class,"li") or @name="items"]')
# a=html.xpath('//body//a[contains(@class,"li") and @name="items"]/text()')
# 10 Selecting by position
# a=html.xpath('//a[2]/text()')
# a=html.xpath('//a[2]/@href')
# The last one
# a=html.xpath('//a[last()]/@href')
# Positions less than 3
# a=html.xpath('//a[position()<3]/@href')
# Third from last (last()-1 would be the second from last)
# a=html.xpath('//a[last()-2]/@href')

# 11 Selecting along node axes
# ancestor: ancestor nodes
# * selects every ancestor node
# a=html.xpath('//a/ancestor::*')
# # Only the div among the ancestors
# a=html.xpath('//a/ancestor::div')
# attribute: attribute values
# a=html.xpath('//a[1]/attribute::*')
# child: direct children
# a=html.xpath('//a[1]/child::*')
# descendant: all descendants
# a=html.xpath('//a[6]/descendant::*')
# following: every node that comes after the current node
# a=html.xpath('//a[1]/following::*')
# a=html.xpath('//a[1]/following::*[1]/@href')
# following-sibling: siblings that come after the current node
# a=html.xpath('//a[1]/following-sibling::*')
# a=html.xpath('//a[1]/following-sibling::a')
# a=html.xpath('//a[1]/following-sibling::*[2]')
# a=html.xpath('//a[1]/following-sibling::*[2]/@href')
# print(a)

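import requests

# Fetch a real page and run an XPath query against it
res = requests.get('https://www.runoob.com/xpath/xpath-syntax.html')
print(res.text)
html = etree.HTML(res.text)
# Text of the second h2 inside the element whose id is "content"
a = html.xpath('//*[@id="content"]/h2[2]/text()')
print(a)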

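selenium action chains

Some elements on a page are meant to be dragged, and some sites require holding the mouse button down and sliding, for example:

- slider captchas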

Two approaches

Approach 1

actions = ActionChains(bro)  # create the action chain object
actions.drag_and_drop(source, target)  # queue the action; queued actions run in order
actions.perform()  # execute the queued actions

Approach 2

ActionChains(bro).click_and_hold(source).perform()
distance = target.location["x"] - source.location["x"]
track = 0
while track < distance:
    ActionChains(bro).move_by_offset(xoffset=2, yoffset=0).perform()
    track += 2

Action chain examples

Approach 1

from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service

s = Service(r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe")
bro = webdriver.Chrome(service=s)
bro.implicitly_wait(10)
bro.get("http://www.runoob.com/try/try.php?filename=jqueryui-api-droppable")
bro.switch_to.frame("iframeResult")  # the demo lives inside an iframe
source = bro.find_element(By.ID, "draggable")
target = bro.find_element(By.ID, "droppable")
actions = ActionChains(bro)
actions.drag_and_drop(source, target)
actions.perform()
input()  # keep the browser open until Enter is pressed
bro.close()

Approach 2

from selenium import webdriver
from selenium.webdriver import ActionChains
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service

s = Service(r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe")
bro = webdriver.Chrome(service=s)
bro.implicitly_wait(10)
bro.get("http://www.runoob.com/try/try.php?filename=jqueryui-api-droppable")
bro.switch_to.frame("iframeResult")
source = bro.find_element(By.ID, "draggable")
target = bro.find_element(By.ID, "droppable")
ActionChains(bro).click_and_hold(source).perform()  # at this point the mouse is only holding source
distance = target.location["x"] - source.location["x"]
track = 0
while track < distance:
    ActionChains(bro).move_by_offset(xoffset=10, yoffset=0).perform()  # keep holding and move along x
    track += 10
ActionChains(bro).release().perform()  # release the mouse button to complete the drop
input(":")
bro.close()
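The step-by-step drag loop above moves in fixed steps, so it can overshoot when the distance is not a multiple of the step. One possible refinement, sketched here as an assumption rather than as the author's method, is to compute the offsets up front so that they add up to exactly the distance. `build_track` is a hypothetical helper; each offset it returns would be passed to move_by_offset.

```python
def build_track(distance, step=10):
    """Split distance (pixels) into offsets of at most `step` that sum to distance."""
    if step <= 0:
        raise ValueError("step must be positive")
    offsets = []
    remaining = max(0, int(distance))
    while remaining > 0:
        move = min(step, remaining)
        offsets.append(move)
        remaining -= move
    return offsets


track = build_track(47, step=10)
print(track)  # [10, 10, 10, 10, 7]

# Hypothetical use with a driver `bro` and an element `source` already located:
# ActionChains(bro).click_and_hold(source).perform()
# for offset in track:
#     ActionChains(bro).move_by_offset(xoffset=offset, yoffset=0).perform()
# ActionChains(bro).release().perform()
```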

Automatic login to 12306

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver import ActionChains

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.chrome.options import Options

options = Options()
# Anti-detection: makes it harder for the site to tell that the browser is driven by selenium
options.add_argument("--disable-blink-features=AutomationControlled")
s = Service(r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe")
bro = webdriver.Chrome(service=s, options=options)
bro.maximize_window()
bro.implicitly_wait(10)
bro.get("https://kyfw.12306.cn/otn/resources/login.html")
bro.find_element(By.ID, "J-userName").send_keys("")  # fill in your username
bro.find_element(By.ID, "J-password").send_keys("")  # fill in your password
bro.find_element(By.LINK_TEXT, "立即登录").click()
# Locate the handle of the slider verification widget
sourse = bro.find_element(By.XPATH, '/html/body/div[1]/div[4]/div[2]/div[2]/div/div/div[2]/div/div[1]/span')
ActionChains(bro).click_and_hold(sourse).perform()
track = 0
while track < 300:  # drag 300 px to the right in 10 px steps
    ActionChains(bro).move_by_offset(xoffset=10, yoffset=0).perform()
    track += 10
ActionChains(bro).release().perform()  # release the mouse button at the end of the drag
time.sleep(10)
bro.close()

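Using a captcha-solving platform

Some sites show a captcha at login. A third-party captcha-solving platform can solve it for you; it is a paid service.

Free option: for digit-only or letter-only captchas there are free Python modules, but their failure rate is high.

Two platforms: Yundama (云打码) and Chaojiying (超级鹰)

# Yundama: https://zhuce.jfbym.com/price/

# Pricing: how much each type of captcha costs to solve

http://www.chaojiying.com/price.html

Cropping the captcha image

Take a screenshot with selenium, then crop out the captcha with pillow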

import time

from selenium import webdriver

from PIL import Image

from selenium.webdriver.common.by import By

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.chrome.options import Options

s = Service(r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe")

bor = webdriver.Chrome(service=s)

bor.get("http://www.chaojiying.com/apiuser/login/")

bor.implicitly_wait(10)

bor.maximize_window()

bor.save_screenshot("main.png")  # screenshot of the whole visible page
res = bor.find_element(By.XPATH, "/html/body/div[3]/div/div[3]/div[1]/form/div/img")  # the captcha image
res_locat = res.location  # top-left corner of the element
size = res.size  # width and height of the element
print(res_locat)
print(size)
# Crop box for pillow: (left, upper, right, lower)
img_tu = (int(res_locat["x"]),
          int(res_locat["y"]),
          int(res_locat["x"]) + int(size["width"]),
          int(res_locat["y"]) + int(size["height"]))
print(img_tu)
img = Image.open("./main.png")
fram = img.crop(img_tu)
fram.save("code.png")
time.sleep(2)
bor.close()

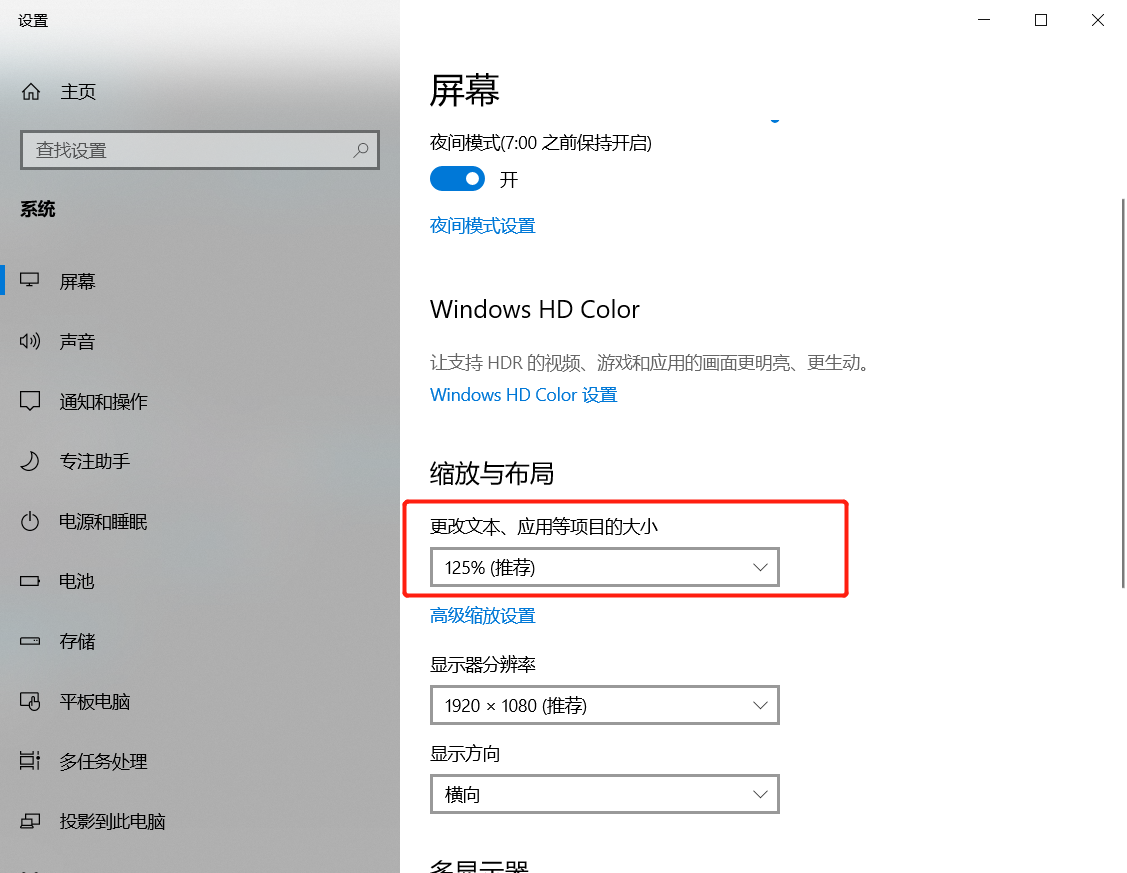

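Note: check the display scaling of your own computer.

If it is not 100%, you have to scale the crop coordinates yourself, otherwise the crop lands in the wrong place: the screenshot is captured at, say, 125%, while the coordinates passed to pillow assume 100%.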
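One way to handle this, sketched under the assumption that the screenshot is enlarged uniformly by the display scaling factor, is to multiply the element's location and size by that factor before cropping. `scaled_crop_box` and its `scale` parameter are hypothetical names. The factor can likely be read in the browser with `bro.execute_script("return window.devicePixelRatio")`, though that should be checked against an actual screenshot.

```python
import math


def scaled_crop_box(location, size, scale=1.0):
    """Return a (left, upper, right, lower) crop box in screenshot pixels.

    location and size are dicts shaped like selenium's element.location and
    element.size; scale is the display scaling factor, e.g. 1.25 for 125%.
    Left/top are rounded down and right/bottom up so the element is fully covered.
    """
    left = math.floor(location["x"] * scale)
    upper = math.floor(location["y"] * scale)
    right = math.ceil((location["x"] + size["width"]) * scale)
    lower = math.ceil((location["y"] + size["height"]) * scale)
    return (left, upper, right, lower)


box = scaled_crop_box({"x": 100, "y": 40}, {"width": 80, "height": 30}, scale=1.25)
print(box)  # (125, 50, 225, 88)
```

The resulting tuple can be passed directly to pillow's `Image.crop`.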

Scraping products from JD

"""

Product image URL: %s

Product URL: %s

Product name: %s

Product price: %s

Number of reviews: %s

"""

import time

from selenium import webdriver

from selenium.webdriver.common.by import By

from selenium.webdriver.common.keys import Keys

from selenium.webdriver.chrome.service import Service

from selenium.webdriver.chrome.options import Options

def get_goods(bro):
    bro.execute_script('scrollTo(0,5000)')  # scroll down so lazily loaded items are rendered
    li_list = bro.find_elements(By.CLASS_NAME, 'gl-item')
    for li in li_list:
        try:
            # The XPaths start with "./" so each lookup is relative to the current li.
            # A path starting with "//" searches the whole page and would always return the first item.
            img_url = li.find_element(By.XPATH, './div/div[1]/a/img').get_attribute("src")
            res = li.find_element(By.XPATH, './div/div[1]/a')
            goods_url = res.get_attribute("href")
            goods_name = li.find_element(By.XPATH, './div/div[3]/a/em').text
            goods_price = li.find_element(By.XPATH, './div/div[2]/strong/i').text
            goods_commit = li.find_element(By.CSS_SELECTOR, ".p-commit a").text
        except Exception as e:
            continue  # skip items that are missing one of the fields
        print("""
        Product image URL: %s
        Product URL: %s
        Product name: %s
        Product price: %s
        Number of reviews: %s
        """ % (img_url, goods_url, goods_name, goods_price, goods_commit))
    # Click the next-page link, then scrape the new page.
    # The recursion ends when an exception is raised, e.g. when no next-page link is found.
    bro.find_element(By.XPATH, '//*[@id="J_bottomPage"]/span[1]/a[9]').click()
    get_goods(bro)


try:
    s = Service(r"D:\py3.8\Tools\chromedriver_win32\chromedriver.exe")
    bro = webdriver.Chrome(service=s)
    bro.implicitly_wait(10)
    bro.get("https://www.jd.com/")
    int_put = bro.find_element(By.ID, "key")  # the search box
    int_put.send_keys("茅台")
    int_put.send_keys(Keys.ENTER)
    get_goods(bro)
except Exception as e:
    print(e)
finally:
    bro.close()
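The function above pages through results by calling itself and only stops when an exception is raised. As an alternative sketch, not the author's approach, the same crawl can be written as a loop with an explicit page limit. `crawl_pages`, `scrape_page`, and `go_to_next` are hypothetical names; the fake three-page "site" below exists only so the loop can run without a browser.

```python
def crawl_pages(scrape_page, go_to_next, max_pages=5):
    """Scrape page after page in a loop instead of by recursion.

    scrape_page() returns the items found on the current page.
    go_to_next() moves to the next page and returns False when there is none.
    Stops after max_pages pages so the crawl always terminates.
    """
    results = []
    for _ in range(max_pages):
        results.extend(scrape_page())
        if not go_to_next():
            break
    return results


# Stand-in "site" with three pages, used only to exercise the loop.
pages = [["a", "b"], ["c"], ["d", "e"]]
state = {"index": 0}


def fake_scrape():
    return pages[state["index"]]


def fake_next():
    if state["index"] + 1 >= len(pages):
        return False
    state["index"] += 1
    return True


items = crawl_pages(fake_scrape, fake_next, max_pages=10)
print(items)  # ['a', 'b', 'c', 'd', 'e']
```

With selenium, `scrape_page` would wrap the per-item extraction and `go_to_next` would click the next-page link and return False if the link cannot be found.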

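Introduction to scrapy

So far: the requests, bs4, and selenium modules

Frameworks: django, scrapy. scrapy is a framework built specifically for crawling, the django of the crawling world: large and complete, with everything a crawler needs built in

Installation (hit or miss on Windows; no problems on Linux or macOS)

-pip3.8 install scrapy

-If that fails, it is usually because twisted cannot be installed; install the pieces separately

1、pip3 install wheel # once installed, packages can be installed from wheel files; wheel file site: https://www.lfd.uci.edu/~gohlke/pythonlibs

3、pip3 install lxml

4、pip3 install pyopenssl

5、Download and install pywin32: https://sourceforge.net/projects/pywin32/files/pywin32/

6、Download the twisted wheel file: http://www.lfd.uci.edu/~gohlke/pythonlibs/#twisted

7、Run pip3 install <download directory>\Twisted-17.9.0-cp36-cp36m-win_amd64.whl

8、pip3 install scrapy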

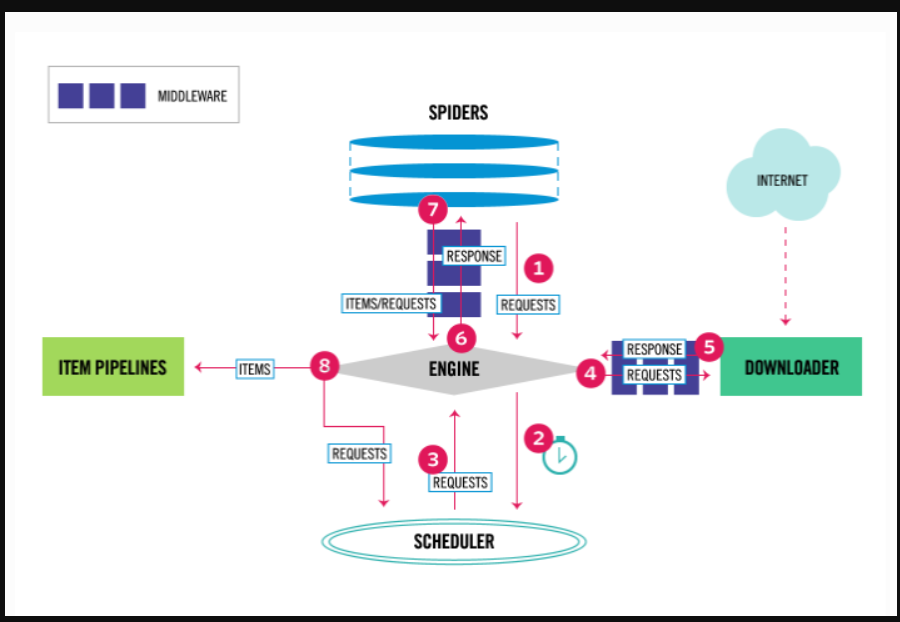

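Architecture

Spiders: spiders (you define them, and there can be many); they define the URLs to crawl and the parsing rules

Engine: engine; controls the flow of data through the whole framework, the general manager

Scheduler: scheduler; request objects waiting to be crawled queue up here

Downloader middleware: DownloaderMiddleware; processes request objects and response objects

Downloader: Downloader; performs the actual downloads, very efficiently, built on twisted's high-concurrency model

Spider middleware: SpiderMiddleware; sits between the engine and the spiders (rarely used)

Pipelines: pipelines; responsible for storing the data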
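The flow between these components can be pictured with a toy model. To be clear, this is not Scrapy's API and leaves out both kinds of middleware and all concurrency; every name in it (`toy_crawl`, `fake_download`, `fake_parse`, the `SITE` dict) is made up for illustration. It only shows requests queueing in a scheduler, being downloaded, parsed by a spider, and the resulting items being handed to a pipeline.

```python
from collections import deque


def toy_crawl(start_urls, download, parse, store):
    """Toy model of the data flow: scheduler -> downloader -> spider -> pipeline.

    download(url) plays the downloader; parse(url, body) plays the spider and
    yields either new URLs (str) or items (dict); store(item) plays the pipeline.
    """
    scheduler = deque(start_urls)  # requests wait here in a queue
    seen = set(start_urls)
    while scheduler:  # this loop plays the engine
        url = scheduler.popleft()
        body = download(url)
        for result in parse(url, body):
            if isinstance(result, str):  # a follow-up request
                if result not in seen:
                    seen.add(result)
                    scheduler.append(result)
            else:  # a scraped item
                store(result)


# Made-up two-page "site" so the sketch runs without network access.
SITE = {"page1": "hello|page2", "page2": "world|"}
stored = []


def fake_download(url):
    return SITE[url]


def fake_parse(url, body):
    text, next_url = body.split("|")
    yield {"url": url, "text": text}
    if next_url:
        yield next_url


toy_crawl(["page1"], fake_download, fake_parse, stored.append)
print(stored)
```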

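Creating a scrapy project

scrapy startproject firstscrapy # create the project

scrapy genspider <name> <domain> # create a spider, the counterpart of creating an app in django

# then open the project in PyCharm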

