Installing and deploying ZooKeeper and Kafka with password-protected access

Enabling authenticated access (username and password) in ZooKeeper

vi zoo.cfg # Edit the configuration file and add the SASL settings at the end (highlighted in red in the original post)

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

clientPort=2181

maxClientCnxns=360

autopurge.snapRetainCount=3

autopurge.purgeInterval=3

server.1=AInode1:2888:3888

server.2=AInode2:2888:3888

server.3=AInode3:2888:3888

# Enable SASL authentication

authProvider.1=org.apache.zookeeper.server.auth.SASLAuthenticationProvider

# Renew the JAAS login every 3600000 ms (1 hour)

jaasLoginRenew=3600000

# Reject client sessions that do not authenticate via SASL (available since ZooKeeper 3.6.0)

sessionRequireClientSASLAuth=true

# Create two authentication config files in the same directory as zoo.cfg

vi zookeeper-sasl-jaas.config

Server {

org.apache.zookeeper.server.auth.DigestLoginModule required

user_super="<ZooKeeper admin password>";

};

Client {

org.apache.zookeeper.server.auth.DigestLoginModule required

username="super"

password="<ZooKeeper admin password>";

};

vi jaas-client.conf

Client {

org.apache.zookeeper.server.auth.DigestLoginModule required

username="super"

password="<ZooKeeper admin password>";

};
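The JAAS file above can also be generated from a variable, so the password is typed once and the file is readable only by its owner. This is a minimal sketch: `write_zk_jaas` is a hypothetical helper, not part of ZooKeeper, and it assumes the password contains no double quotes.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a ZooKeeper JAAS file with Server and Client sections.
# Usage: write_zk_jaas <output-file> <password>
write_zk_jaas() {
  local out_file="$1"
  local password="$2"
  # Create the file with owner-only permissions because it holds a plaintext password.
  (
    umask 077
    cat > "$out_file" <<EOF
Server {
  org.apache.zookeeper.server.auth.DigestLoginModule required
  user_super="${password}";
};
Client {
  org.apache.zookeeper.server.auth.DigestLoginModule required
  username="super"
  password="${password}";
};
EOF
  )
  # Tighten permissions in case the file already existed with a wider mode.
  chmod 600 "$out_file"
}
```

For example, `write_zk_jaas conf/zookeeper-sasl-jaas.config "$ZK_SUPER_PASSWORD"`, where `ZK_SUPER_PASSWORD` is an assumed environment variable.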

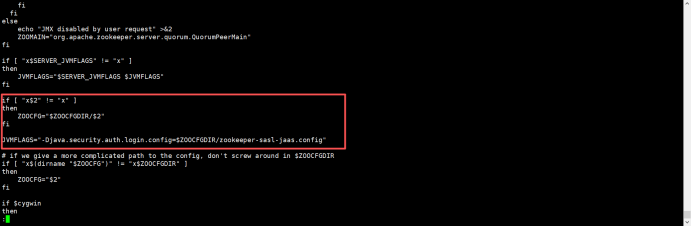

# Edit the ZooKeeper startup script and add one setting, inside the block that is boxed in the screenshot

vi bin/zkServer.sh

JVMFLAGS="-Djava.security.auth.login.config=$ZOOCFGDIR/zookeeper-sasl-jaas.config"

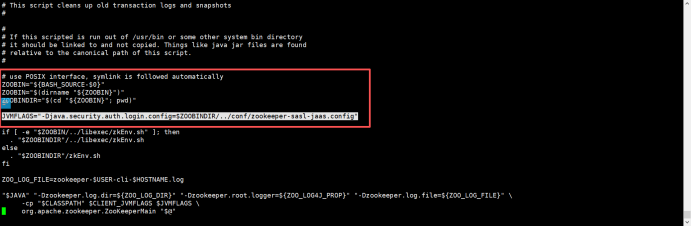

vi bin/zkCli.sh

JVMFLAGS="-Djava.security.auth.login.config=$ZOOBINDIR/../conf/zookeeper-sasl-jaas.config"

Apply the steps above on every ZooKeeper node, then restart the ZooKeeper service for the changes to take effect.

Enabling authenticated access (username and password) in Kafka

# Edit the Kafka configuration file, adding or changing the security-related settings (highlighted in red in the original post)

vi server.properties

# Must be unique on every node

broker.id=1

auto.create.topics.enable=false

auto.leader.rebalance.enable=true

compression.type=producer

controlled.shutdown.enable=true

controlled.shutdown.max.retries=3

controlled.shutdown.retry.backoff.ms=5000

controller.message.queue.size=10

controller.socket.timeout.ms=30000

default.replication.factor=1

delete.topic.enable=true

fetch.purgatory.purge.interval.requests=10000

leader.imbalance.check.interval.seconds=300

leader.imbalance.per.broker.percentage=10

# listeners: listener type and address; set the host to each node's own IP or hostname. The default port is 9092

listeners=SASL_PLAINTEXT://AInode1:9092

security.inter.broker.protocol=SASL_PLAINTEXT

sasl.enabled.mechanisms=PLAIN

sasl.mechanism.inter.broker.protocol=PLAIN

allow.everyone.if.no.acl.found=false

super.users=User:admin

log.cleanup.interval.mins=10

log.dirs=/data/kafka/data

log.index.interval.bytes=4096

log.index.size.max.bytes=10485760

log.retention.bytes=-1

log.retention.hours=168

log.roll.hours=168

log.segment.bytes=1073741824

message.max.bytes=1000000

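# Minimum number of in-sync replicas; with acks=all, writes fail for topics whose replication factor is lower than this value

min.insync.replicas=2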

num.io.threads=8

num.network.threads=3

# Default number of partitions for newly created topics

num.partitions=2

num.recovery.threads.per.data.dir=1

num.replica.fetchers=1

offset.metadata.max.bytes=4096

offsets.commit.required.acks=-1

offsets.commit.timeout.ms=5000

offsets.load.buffer.size=5242880

offsets.retention.check.interval.ms=600000

offsets.retention.minutes=86400000

offsets.topic.compression.codec=0

offsets.topic.num.partitions=50

offsets.topic.replication.factor=3

offsets.topic.segment.bytes=104857600

# Listening port (legacy setting; the listeners setting above takes precedence)

port=9092

producer.purgatory.purge.interval.requests=10000

queued.max.requests=500

replica.fetch.max.bytes=1048576

replica.fetch.min.bytes=1

replica.fetch.wait.max.ms=500

replica.high.watermark.checkpoint.interval.ms=5000

replica.lag.max.messages=4000

replica.lag.time.max.ms=10000

replica.socket.receive.buffer.bytes=65536

replica.socket.timeout.ms=30000

socket.receive.buffer.bytes=102400

socket.request.max.bytes=104857600

socket.send.buffer.bytes=102400

# ZooKeeper connection string

zookeeper.connect=AInode1:2181,AInode2:2181,AInode3:2181

zookeeper.connection.timeout.ms=25000

zookeeper.session.timeout.ms=30000

zookeeper.sync.time.ms=2000

zookeeper.client.sasl=true

zookeeper.set.acl=true

zookeeper.client.cnxn.socket=org.apache.zookeeper.ClientCnxnSocketNetty
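Kafka loads server.properties with Java's properties parser, which treats `#` as a comment marker only at the start of a line; anything after a value, such as a trailing `# note`, becomes part of the value and can stop the broker from starting. The check below is a rough sketch for catching such lines before a restart. `find_inline_comments` is a hypothetical helper, and it will also flag a value that legitimately contains a space followed by `#`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: list key=value lines that carry a trailing "# ..." comment.
# Prints "line-number:line" for each hit; returns non-zero when nothing is found.
find_inline_comments() {
  # Skip lines whose first non-blank character is # or ! (real comment lines),
  # then look for whitespace followed by # somewhere after the = sign.
  grep -nE '^[[:space:]]*[^#![:space:]][^=]*=.*[[:space:]]#' "$1"
}
```

Running `find_inline_comments config/server.properties` and moving every reported comment onto its own line keeps the values clean.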

# Add two JAAS config files; change the passwords as required

vi config/kafka_server_jaas.conf

KafkaServer {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="admin"

password="admin123"

user_admin="admin123"

user_producer="producer123"

user_consumer="consumer123";

};

Client {

org.apache.zookeeper.server.auth.DigestLoginModule required

username="super"

password="<ZooKeeper admin password>";

};

vi config/kafka_client_jaas.conf

KafkaClient {

org.apache.kafka.common.security.plain.PlainLoginModule required

username="producer"

password="producer123";

};

# Configure the producer and consumer properties

vi config/producer.properties

bootstrap.servers=localhost:9092

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="producer" password="producer123";

vi config/consumer.properties

bootstrap.servers=localhost:9092

security.protocol=SASL_PLAINTEXT

sasl.mechanism=PLAIN

sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="consumer" password="consumer123";

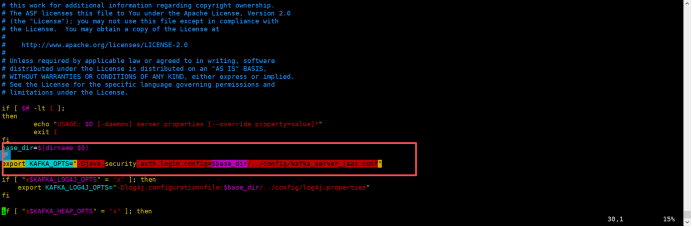

# Edit the Kafka startup script and add the following line to it

vi bin/kafka-server-start.sh

export KAFKA_OPTS="-Djava.security.auth.login.config=$base_dir/../config/kafka_server_jaas.conf"
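If the path in that flag is wrong, the broker only fails later with a login error, so it can help to build the flag from a file that is checked first. A small sketch under that assumption; `jaas_jvm_flag` is a hypothetical helper, not something shipped with Kafka.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the JVM flag for a JAAS file, using an absolute path.
# Fails with a message on stderr when the file is missing or unreadable.
jaas_jvm_flag() {
  local jaas_file="$1"
  if [ ! -r "$jaas_file" ]; then
    echo "JAAS file not readable: $jaas_file" >&2
    return 1
  fi
  local dir
  # Resolve the containing directory so the flag works from any working directory.
  dir="$(cd "$(dirname "$jaas_file")" && pwd)"
  printf -- '-Djava.security.auth.login.config=%s/%s\n' "$dir" "$(basename "$jaas_file")"
}
```

One way to use it from the Kafka home directory is `KAFKA_OPTS="$(jaas_jvm_flag config/kafka_server_jaas.conf)" && export KAFKA_OPTS`, which leaves the variable unexported when the file is missing.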

Apply the steps above on every Kafka node.
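Copying the shared files can be scripted. The sketch below assumes passwordless SSH and the same Kafka install path on every node; `sync_kafka_auth_files` is a hypothetical helper. It deliberately leaves out server.properties, because broker.id and listeners differ per node and still have to be edited on each host.

```shell
#!/usr/bin/env bash
# Hypothetical helper: copy the JAAS files, client properties and start script to other nodes.
# Usage: sync_kafka_auth_files <kafka-home> <host>...
sync_kafka_auth_files() {
  local kafka_home="$1"
  shift
  local host
  for host in "$@"; do
    # These files are identical on every node.
    scp "$kafka_home/config/kafka_server_jaas.conf" \
        "$kafka_home/config/kafka_client_jaas.conf" \
        "$kafka_home/config/producer.properties" \
        "$kafka_home/config/consumer.properties" \
        "$host:$kafka_home/config/" || return 1
    # The edited start script carries the KAFKA_OPTS line.
    scp "$kafka_home/bin/kafka-server-start.sh" "$host:$kafka_home/bin/" || return 1
  done
}
```

For example, run `sync_kafka_auth_files /opt/kafka AInode2 AInode3` on AInode1, where `/opt/kafka` is an assumed install path.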

Producer and consumer commands

./bin/kafka-console-producer.sh --broker-list localhost:9092 --topic test-topic --producer.config config/producer.properties

./bin/kafka-console-consumer.sh --bootstrap-server localhost:9092 --topic test-topic --from-beginning --consumer.config config/consumer.properties

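# For other operations, export this variable first and then run the commands. $base_dir is only defined inside the Kafka scripts, so from the bin directory use $PWD instead

export KAFKA_OPTS="-Djava.security.auth.login.config=$PWD/../config/kafka_server_jaas.conf"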

./kafka-topics.sh --zookeeper localhost:2181 --list

./kafka-topics.sh --zookeeper localhost:2181 --create --topic test001 --partitions 1 --replication-factor 1
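On newer Kafka releases the `--zookeeper` option is no longer available in kafka-topics.sh (it was removed in 3.0), and the tool talks to the brokers directly using `--bootstrap-server` with a client properties file passed via `--command-config`. The following is a sketch of that variant, reusing the admin account from the JAAS file above. `write_admin_properties` and `kafka_topics_sasl` are hypothetical helpers, and the commands are assumed to run from the Kafka bin directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write SASL/PLAIN client settings for the admin tools.
# Usage: write_admin_properties <output-file> <user> <password>
write_admin_properties() {
  (
    # Owner-only permissions, since the file contains a plaintext password.
    umask 077
    cat > "$1" <<EOF
security.protocol=SASL_PLAINTEXT
sasl.mechanism=PLAIN
sasl.jaas.config=org.apache.kafka.common.security.plain.PlainLoginModule required username="$2" password="$3";
EOF
  )
}

# Hypothetical wrapper: run kafka-topics.sh against a broker with those settings.
# Usage: kafka_topics_sasl <bootstrap-server> <properties-file> <kafka-topics args>...
kafka_topics_sasl() {
  local bootstrap="$1"
  local props="$2"
  shift 2
  ./kafka-topics.sh --bootstrap-server "$bootstrap" --command-config "$props" "$@"
}
```

After `write_admin_properties admin.properties admin admin123`, listing topics would look like `kafka_topics_sasl AInode1:9092 admin.properties --list`.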

