FunASR speech recognition, with CPU support

FunASR, the large-scale open-source end-to-end speech recognition toolkit from Alibaba DAMO Academy:

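FunASR provides models trained on large-scale industrial corpora, together with the tooling to deploy them in applications. The toolkit's core model is Paraformer, a non-autoregressive end-to-end speech recognition model trained on a manually annotated Mandarin speech recognition dataset of 60,000 hours of speech. To improve Paraformer's performance, the FunASR authors added timestamp prediction and hotword customization on top of the standard Paraformer. To make deployment easier, they also open-sourced a voice activity detection (VAD) model based on the Feedforward Sequential Memory Network (FSMN-VAD) and a punctuation model for text post-processing based on the Controllable Time-delay Transformer (CT-Transformer), both likewise trained on industrial corpora. Together these modules provide a solid foundation for building high-accuracy speech recognition services for long audio, and Paraformer outperforms other models trained on public datasets. For Chinese transcription, FunASR gives better results than Whisper.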

Installation steps

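conda create -n funasr python=3.9
conda init
conda activate funasr
pip install torch==1.13.0 torchaudio==0.13.0
pip install pyaudio
pip install -U funasr

conda init is only needed once (reopen the terminal afterwards so conda activate works). Activate the environment before running pip, so the packages are installed into it. torchaudio is pinned to the release that matches torch 1.13.0, because an unpinned torchaudio would pull in a newer torch.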
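After running the steps above, a quick way to confirm that torch, torchaudio, pyaudio and funasr actually landed in the active environment is a small stdlib-only check script. This is a sketch of my own, not part of FunASR; `check_environment` and `REQUIRED` are hypothetical names, and the version may show as "unknown" if a package's distribution metadata is registered under a different name (PyAudio, for example, is published as `PyAudio`).

```python
import importlib.util
import sys
from importlib import metadata

# pip distribution name -> module name it is imported under (hypothetical table)
REQUIRED = {
    "torch": "torch",
    "torchaudio": "torchaudio",
    "pyaudio": "pyaudio",
    "funasr": "funasr",
}


def check_environment(required=REQUIRED):
    """Return {distribution: version, "unknown", or None if not importable}."""
    report = {}
    for dist, module in required.items():
        # find_spec locates a top-level module without importing it
        if importlib.util.find_spec(module) is None:
            report[dist] = None
            continue
        try:
            report[dist] = metadata.version(dist)
        except metadata.PackageNotFoundError:
            report[dist] = "unknown"  # importable, but no matching metadata found
    return report


if __name__ == "__main__":
    print("Python", sys.version.split()[0])
    for name, version in check_environment().items():
        print(f"{name:<12} {version or 'MISSING'}")
```

Run it with the funasr environment activated; any package reported as MISSING was installed into a different interpreter.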

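Install ffmpeg (needed to decode compressed audio such as the .m4a recording in Demo 1; make sure it is on PATH)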

https://blog.51cto.com/mshxuyi/10980887
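Once ffmpeg is installed, the sketch below checks that it is reachable on PATH and, optionally, converts a recording to 16 kHz mono 16-bit WAV, the sample rate the demos below work with. Pre-converting is an optional extra step I am assuming for troubleshooting, not something the original requires; `ffmpeg_to_wav16k_cmd` and `convert_to_wav16k` are hypothetical helper names. The ffmpeg flags used (`-i`, `-ar`, `-ac`, `-c:a`, `-y`) are standard ffmpeg options.

```python
import shutil
import subprocess


def ffmpeg_to_wav16k_cmd(src, dst, ffmpeg="ffmpeg"):
    """Build an ffmpeg command converting src to 16 kHz, mono, 16-bit PCM WAV."""
    return [
        ffmpeg,
        "-y",                 # overwrite dst if it already exists
        "-i", src,            # input file, e.g. an .m4a recording
        "-ar", "16000",       # resample to 16 kHz
        "-ac", "1",           # downmix to mono
        "-c:a", "pcm_s16le",  # 16-bit little-endian PCM
        dst,
    ]


def convert_to_wav16k(src, dst):
    """Run the conversion; raises if ffmpeg is missing or the conversion fails."""
    ffmpeg = shutil.which("ffmpeg")
    if ffmpeg is None:
        raise FileNotFoundError("ffmpeg not found on PATH; install it and reopen the terminal")
    subprocess.run(ffmpeg_to_wav16k_cmd(src, dst, ffmpeg), check=True)
```

If `shutil.which("ffmpeg")` returns None in the same terminal you run Python from, the PATH change from the installation guide has not taken effect yet.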

References

https://github.com/modelscope/FunASR/blob/main/README_zh.md

https://huggingface.co/FunAudioLLM/SenseVoiceSmall/tree/main

https://www.modelscope.cn/models/iic/SenseVoiceSmall

Demo 1: transcribe an audio file directly

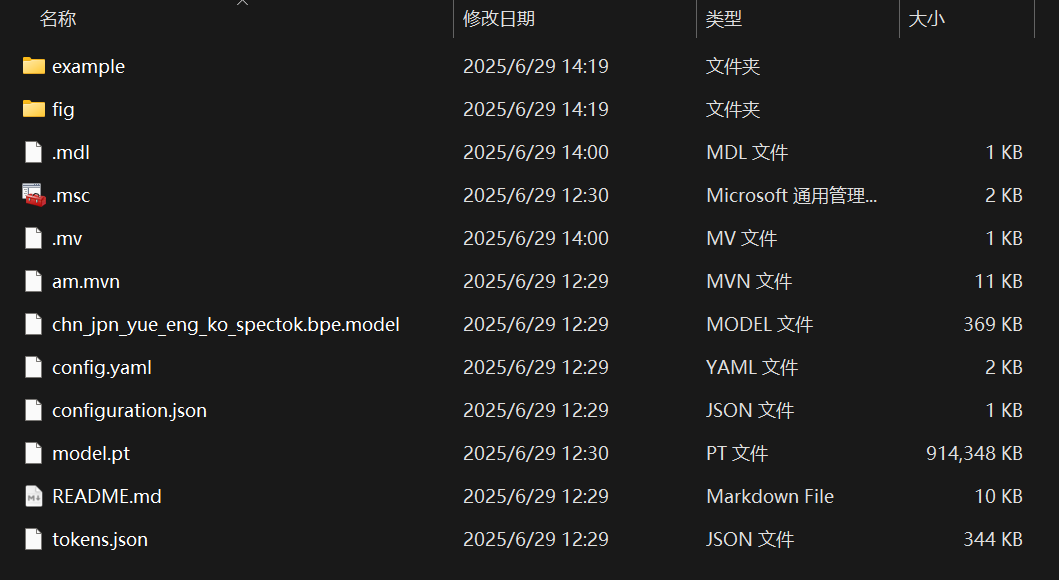

E:\model\FunAudioLLM\SenseVoiceSmall holds the model files downloaded from ModelScope.

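If the manually downloaded copy gives errors, change the model variable to model_dir = "iic/SenseVoiceSmall" so that FunASR downloads the model itself.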

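Once it has been downloaded to the cache directory (the path is printed), just move it to the location you want. The path you specify must be the directory that contains config.yaml.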
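The rule above (use the local copy if the folder containing config.yaml is there, otherwise fall back to the model ID "iic/SenseVoiceSmall") can be sketched as a small helper. `find_model_dir` and `resolve_model` are hypothetical names of my own; they only inspect the filesystem and do not call FunASR. The result would be passed as `model=` to `AutoModel`.

```python
from pathlib import Path


def find_model_dir(root):
    """Return the directory under root (root itself included) holding config.yaml, or None."""
    root = Path(root)
    if (root / "config.yaml").is_file():
        return root
    # Otherwise search subdirectories, preferring the shallowest match
    matches = sorted(root.rglob("config.yaml"), key=lambda p: len(p.parts))
    return matches[0].parent if matches else None


def resolve_model(local_dir, model_id="iic/SenseVoiceSmall"):
    """Use the local copy if it contains config.yaml; otherwise return the model ID."""
    found = find_model_dir(local_dir) if Path(local_dir).is_dir() else None
    return str(found) if found is not None else model_id
```

Searching subdirectories covers the common mistake of pointing at a parent folder such as E:\model\FunAudioLLM instead of the level that holds config.yaml.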

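from funasr import AutoModel
from funasr.utils.postprocess_utils import rich_transcription_postprocess

# Local SenseVoiceSmall directory: the folder that contains config.yaml
model_dir = r"E:\model\FunAudioLLM\SenseVoiceSmall"
# model_dir = "iic/SenseVoiceSmall"  # alternative: let FunASR download it from ModelScope

model = AutoModel(
    model=model_dir,
    vad_model="fsmn-vad",                           # FSMN-VAD splits long audio into segments
    vad_kwargs={"max_single_segment_time": 30000},  # max length of one VAD segment, in ms
    device="cpu",
)

res = model.generate(
    input=r"C:\Users\zxppc\Documents\录音\录音 (3).m4a",  # decoding .m4a requires ffmpeg
    cache={},
    language="auto",  # or "zh", "en", "yue", "ja", "ko", "nospeech"
    use_itn=True,     # output punctuation and inverse text normalization
    batch_size_s=60,  # dynamic batching: total audio seconds per batch
    merge_vad=True,   # merge short VAD segments ...
    merge_length_s=15,  # ... into segments of about 15 s
)
text = rich_transcription_postprocess(res[0]["text"])
print(text)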
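`rich_transcription_postprocess` exists because SenseVoice's raw `text` is prefixed with special tokens such as `<|zh|><|NEUTRAL|><|Speech|><|withitn|>` (language, emotion, audio event, ITN flag). The sketch below only illustrates that raw format: it is a simplified stand-in I wrote, not FunASR's function, which as far as I know also maps the emotion and event tags to emoji. The sample string is illustrative, not real model output; `strip_sensevoice_tags` and `split_tags` are hypothetical names.

```python
import re

# Matches any SenseVoice-style special token of the form <|...|>
_TAG = re.compile(r"<\|[^|]*\|>")


def strip_sensevoice_tags(raw):
    """Drop every <|...|> token and surrounding whitespace; keep only the text."""
    return _TAG.sub("", raw).strip()


def split_tags(raw):
    """Return (tags, text), with the tag names stripped of their <| |> delimiters."""
    tags = [m.group(0)[2:-2] for m in _TAG.finditer(raw)]
    return tags, strip_sensevoice_tags(raw)
```

Printing `res[0]["text"]` directly (as Demo 2 below does) shows these tags, which is a quick way to see what the post-processing step removes.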

Get the demo working as-is first, and only then start modifying it.

Demo 2: try transcribing audio captured from the microphone

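from funasr import AutoModel
import numpy as np
import pyaudio

# Streaming parameters, as in FunASR's streaming Paraformer example
chunk_size = [0, 10, 5]      # 600 ms per chunk (chunk_size[1] x 60 ms)
encoder_chunk_look_back = 4  # chunks the encoder self-attention looks back on
decoder_chunk_look_back = 1  # encoder chunks the decoder cross-attention looks back on

# Note: cache / chunk_size / *_look_back are streaming options meant for the
# streaming Paraformer model (model="paraformer-zh-streaming"). SenseVoiceSmall
# is a non-streaming model, so with it each 600 ms chunk is recognized on its own.
model_dir = r"E:\model\FunAudioLLM\SenseVoiceSmall"
model = AutoModel(model=model_dir, device="cpu")

# Microphone settings
FORMAT = pyaudio.paInt16  # 16-bit integer samples
CHANNELS = 1              # mono
RATE = 16000              # sample rate (must match the model)
CHUNK_SIZE_MS = 600       # 600 ms per chunk
CHUNK_SAMPLES = int(RATE * CHUNK_SIZE_MS / 1000)  # samples per chunk (9600)

# Initialize PyAudio
p = pyaudio.PyAudio()
stream = p.open(format=FORMAT, channels=CHANNELS, rate=RATE,
                input=True, frames_per_buffer=CHUNK_SAMPLES)

print("🎤 Listening on the microphone, start speaking...")

cache = {}  # streaming cache, carried across generate() calls

try:
    while True:
        audio_data = stream.read(CHUNK_SAMPLES, exception_on_overflow=False)
        # int16 -> float32, normalized to [-1, 1)
        speech_chunk = np.frombuffer(audio_data, dtype=np.int16).astype(np.float32) / 32768.0
        res = model.generate(
            input=speech_chunk,
            cache=cache,
            is_final=False,  # live recognition: no chunk is treated as the last one
            chunk_size=chunk_size,
            encoder_chunk_look_back=encoder_chunk_look_back,
            decoder_chunk_look_back=decoder_chunk_look_back,
        )
        if res and res[0].get("text"):
            print("🎙️ Result:", res[0]["text"])
except KeyboardInterrupt:
    print("\n🛑 Stopping recording...")
finally:
    stream.stop_stream()
    stream.close()
    p.terminate()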
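The numbers in Demo 2 fit together as follows. FunASR's streaming example describes `chunk_size=[0, 10, 5]` as 600 ms and `[0, 8, 4]` as 480 ms, i.e. each unit of the middle value is 60 ms, which at 16 kHz is 960 samples; that is why `CHUNK_SAMPLES` is 9600. The same example streams a file chunk by chunk and passes `is_final=True` only on the last chunk. The stdlib sketch below reproduces that arithmetic, the int16-to-float normalization, and the chunking loop; `chunk_stride`, `pcm16_to_float`, `iter_chunks` and `FRAME_MS` are hypothetical names of my own, not FunASR API.

```python
from array import array

SAMPLE_RATE = 16000
FRAME_MS = 60  # one unit of chunk_size[1]: [0, 10, 5] -> 10 * 60 ms = 600 ms


def chunk_stride(chunk_size, sample_rate=SAMPLE_RATE):
    """Samples per chunk for a FunASR-style chunk_size list."""
    return chunk_size[1] * FRAME_MS * sample_rate // 1000


def pcm16_to_float(data):
    """Native-endian 16-bit PCM bytes (what paInt16 reads) -> floats in [-1, 1)."""
    samples = array("h")
    samples.frombytes(data)
    return [s / 32768.0 for s in samples]


def iter_chunks(samples, stride):
    """Yield (chunk, is_final) pairs; only the last chunk has is_final=True."""
    total = max(1, -(-len(samples) // stride))  # ceiling division
    for i in range(total):
        yield samples[i * stride:(i + 1) * stride], i == total - 1
```

With a real streaming model, each `(chunk, is_final)` pair would be passed to `model.generate(input=chunk, cache=cache, is_final=is_final, ...)`; flagging the last chunk lets the model flush what is still held in the cache.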

Zhejiang Public Security Network Registration No. 33010602011771 (浙公网安备 33010602011771号)
