prepare:

- 了解hadoop的hdfs、MapReduce、yarn这三个组件的原理和基本使用,hive是在他们之上的应用。 http://hadoop.apache.org/docs/stable/ (安全、认证、REST相关的不关注;里面有关设计原理的,最好到slideshare上找几个介绍hadoop框架原理的ppt先看一下。

- hive原理:http://infolab.stanford.edu/~ragho/hive-icde2010.pdf http://www.vldb.org/pvldb/2/vldb09-938.pdf

- hive ql的文档: https://cwiki.apache.org/confluence/display/Hive/LanguageManual

------------

SKEWED BY 对于倾斜的数据,指定在哪些值倾斜,从而做优化。

较群面的分析了hive优化

如何配置yarn的内存;

提供了一个脚本生成参考配置值;

With the following options:

| Option | Description |

| -c CORES | The number of cores on each host. |

| -m MEMORY | The amount of memory on each host in GB. |

| -d DISKS | The number of disks on each host. |

| -k HBASE | "True" if HBase is installed, "False" if not. |

Note: You can also use the -h or --help option to display a Help message that describes the options.

Running the following command:

[root@jason3 scripts]# python yarn-utils.py -c 24 -m 64 -d 12 -k FalseUsing cores=24 memory=64GB disks=12 hbase=FalseProfile: cores=24 memory=57344MB reserved=8GB usableMem=56GB disks=12Num Container=22Container Ram=2560MBUsed Ram=55GBUnused Ram=8GByarn.scheduler.minimum-allocation-mb=2560yarn.scheduler.maximum-allocation-mb=56320yarn.nodemanager.resource.memory-mb=56320mapreduce.map.memory.mb=2560mapreduce.map.java.opts=-Xmx2048mmapreduce.reduce.memory.mb=2560mapreduce.reduce.java.opts=-Xmx2048myarn.app.mapreduce.am.resource.mb=2560yarn.app.mapreduce.am.command-opts=-Xmx2048mmapreduce.task.io.sort.mb=1024[root@jason3 scripts]#

mapjoin:

hive.auto.convert.join (if set to true) automatically converts the joins to mapjoins at runtime if possible, and it should be used instead of the mapjoin hint

- hive.auto.convert.join.noconditionaltask - Whether Hive enable the optimization about converting common join into mapjoin based on the input file size. If this paramater is on, and the sum of size for n-1 of the tables/partitions for a n-way join is smaller than the specified size, the join is directly converted to a mapjoin (there is no conditional task).

- hive.auto.convert.join.noconditionaltask.size - If hive.auto.convert.join.noconditionaltask is off, this parameter does not take affect. However, if it is on, and the sum of size for n-1 of the tables/partitions for a n-way join is smaller than this size, the join is directly converted to a mapjoin(there is no conditional task). The default is 10MB.

- 系统资源统计。用top,sysstat等工具监控整个系统资源使用情况。

- binary instrumentation。这方面也有很多工具,如hprof, jprof, btrace等等。特别是btrace值得看看,它可以动态的插入profile代码。

- Hadoop提供的JMX bean信息。JMX是Java一个监控和管理的标准,Hadoop代码中有部分关键信息通过JMX接口暴露出来。

- Hadoop的log。这方面有专门的Hadoop的分析工具,如Vaidya,Kahuna。其他通用的log分析工具也有很多。Pasted from: <http://www.zhihu.com/question/19661847>

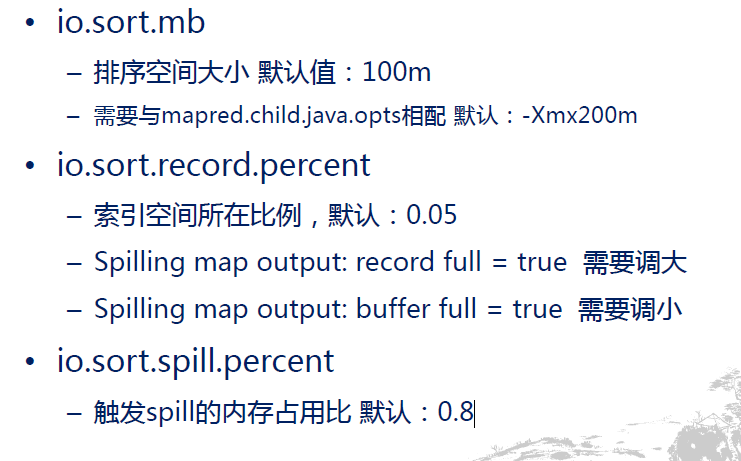

Hive及Hadoop作业调优.pdf-------------–mapred.map.tasks 期望的map个数 默认值:1, 可增大map数–mapred.min.split.size 切割出的split最小size 默认:1 ,可减少map数–mapred.max.split.size 切割出的split最大size 默认:Long.MAX_VALUE ,增加map数![]()

![]()

![]()

![]()

Designing for Performance Using Hadoop Hive

set mapred.max.split.size=1000000;set hive.optimize.bucketmapjoin=true;set hive.optimize.bucketmapjoin.sortedmerge=true;set hive.groupby.skewindata=true;(This setting may reduce performance for data that is not heavily skewed.)Storage File Format: 缺省是

序列文件;ORC;压缩是在cpu与磁盘/IO之间的权衡;Partitioning:数据提前按field分片,那么where相关的过滤变快;

Bucketing: 数据被提前按照某些key做hash分片了,所以group by和join等需要reduce的操作就变快(reduce默认使用hash分片)

Benchmarking Apache Hive 13 for Enterprise Hadoop http://zh.hortonworks.com/blog/benchmarking-apache-hive-13-enterprise-hadoop/Tez and MapReduce were tuned to process all queries using 4 GB containers at a target container-to-disk ratio of 2.0. The ratio is important because it minimizes disk thrash and maximizes throughput.

Other Settings:

- yarn.nodemanager.resource.memory-mb was set to 49152

- Default virtual memory for a job’s map-task and reduce-task were set to 4096

- hive.tez.container.size was set to 4096

- hive.tez.java.opts was set to -Xmx3800m

- Tez app masters were given 8 GB

- mapreduce.map.java.opts and mapreduce.reduce.java.opts were set to -Xmx3800m. This is smaller than 4096 to allow for some garbage collection overhead

- hive.auto.convert.join.noconditionaltask.size was set to 1252698795

Note: this is 1/3 of the Xmx value, about 1.7 GB.

The following additional optimizations were used for Hive 0.13.0:

- Vectorized Query enabled

- ORCFile formatted data

- Map-join auto conversion enabled

Hive Performance Tuning

Use Hive’s Mapjoin: 使用注释或者启用自动识别;SELECT /*+ MAPJOIN(tbl2) */ ... FROM tbl1 join tbl2 on tbl1.key = tbl2.keyDISTRIBUTE BY…SORT BY v. ORDER BY: order by是全序,效率低;Avoid “SELECT count(DISTINCT field) FROM tbl” ,使用代替:SELECT count(1) FROM ( SELECT DISTINCT field FROM tbl ) t

浙公网安备 33010602011771号

浙公网安备 33010602011771号