三:编写hivesql离线分析信件内容

启动hive服务:

先启动metastore服务 然后启动hiveserver2服务

nohup /export/servers/hive/bin/hive --service metastore &

nohup /export/servers/hive/bin/hive --service hiveserver2 &

使用datagrip客户端连接Hiveserver2服务

hive建库建表语句,以及使用CTAS语法创建结果表,其中有一个使用udf(自定义函数)

点击查看代码

-- =============================================================================

-- 初始化数据库和数据表

-- =============================================================================

-- 创建政府信箱数据库(如果不存在)

CREATE DATABASE IF NOT EXISTS gov_letter_db;

-- 使用新创建的数据库

USE gov_letter_db;

-- 创建外部表连接HDFS上的原始数据文件

-- 数据格式:TSV分隔,位置在HDFS的/output目录

CREATE EXTERNAL TABLE IF NOT EXISTS gov_letters (

title STRING COMMENT '信件标题',

sender STRING COMMENT '来信人',

send_time STRING COMMENT '来信时间',

question STRING COMMENT '问题内容',

reply_org STRING COMMENT '答复机构',

reply_time STRING COMMENT '答复时间',

reply_content STRING COMMENT '答复内容'

)

ROW FORMAT DELIMITED

FIELDS TERMINATED BY '\t'

STORED AS TEXTFILE

LOCATION '/output';

-- =============================================================================

-- 基础统计分析

-- =============================================================================

-- 查询1:统计总信件数量(数据质量检查)

SELECT COUNT(*) AS total_letters FROM gov_letters;

-- 查询2:各答复机构的处理量排名(识别最活跃部门)

CREATE TABLE reply_org_ranking AS

SELECT

reply_org AS reply_org,

COUNT(*) AS cnt

FROM gov_letters

WHERE reply_org != 'N/A' -- 过滤无效机构名称

GROUP BY reply_org

ORDER BY cnt DESC;

-- 查询3:信件处理时效分布(分析响应效率)

-- 将处理时间分为5个时段并计算占比

CREATE TABLE processing_time_distribution AS

SELECT

processing_period,

COUNT(*) AS cnt,

ROUND(COUNT(*) * 100.0 / total.total_cnt, 1) AS percentage

FROM (

SELECT

CASE

WHEN days_diff = 0 THEN 'same_day'

WHEN days_diff = 1 THEN '1_day'

WHEN days_diff = 2 THEN '2_days'

WHEN days_diff = 3 THEN '3_days'

ELSE 'more_than_3_days'

END AS processing_period

FROM (

SELECT

DATEDIFF(

FROM_UNIXTIME(UNIX_TIMESTAMP(reply_time, 'yyyy-MM-dd')),

FROM_UNIXTIME(UNIX_TIMESTAMP(send_time, 'yyyy-MM-dd'))

) AS days_diff

FROM gov_letters

WHERE send_time != 'N/A'

AND reply_time != 'N/A'

AND reply_time RLIKE '^[0-9]{4}-[0-9]{2}-[0-9]{2}$' -- 日期格式验证

AND send_time RLIKE '^[0-9]{4}-[0-9]{2}-[0-9]{2}$' -- 日期格式验证

) t1

) t2

CROSS JOIN (

SELECT COUNT(*) AS total_cnt

FROM gov_letters

WHERE send_time != 'N/A'

AND reply_time != 'N/A'

AND reply_time RLIKE '^[0-9]{4}-[0-9]{2}-[0-9]{2}$'

AND send_time RLIKE '^[0-9]{4}-[0-9]{2}-[0-9]{2}$'

) total

GROUP BY processing_period, total.total_cnt

ORDER BY

CASE processing_period

WHEN 'same_day' THEN 1

WHEN '1_day' THEN 2

WHEN '2_days' THEN 3

WHEN '3_days' THEN 4

ELSE 5

END;

-- =============================================================================

-- 高级分析(使用自定义UDF)

-- =============================================================================

-- 注册自定义UDF函数:extract_ym(从日期字符串提取年月)

ADD JAR hdfs:///udf/pachong-1.0-SNAPSHOT.jar;

CREATE TEMPORARY FUNCTION extract_ym AS 'my.mr.ExtractYearMonth';

-- 查询4:月度信件量趋势分析(使用UDF)

-- 按来信年月分组统计信件数量,反映需求变化趋势

CREATE TABLE monthly_trend AS

SELECT

extract_ym(send_time) AS year_month,

COUNT(*) AS total_letters

FROM gov_letters

WHERE send_time != 'N/A'

AND extract_ym(send_time) IS NOT NULL -- 使用UDF过滤无效日期

GROUP BY extract_ym(send_time)

ORDER BY year_month DESC;

这样就在hive数据库中有了三个结果表

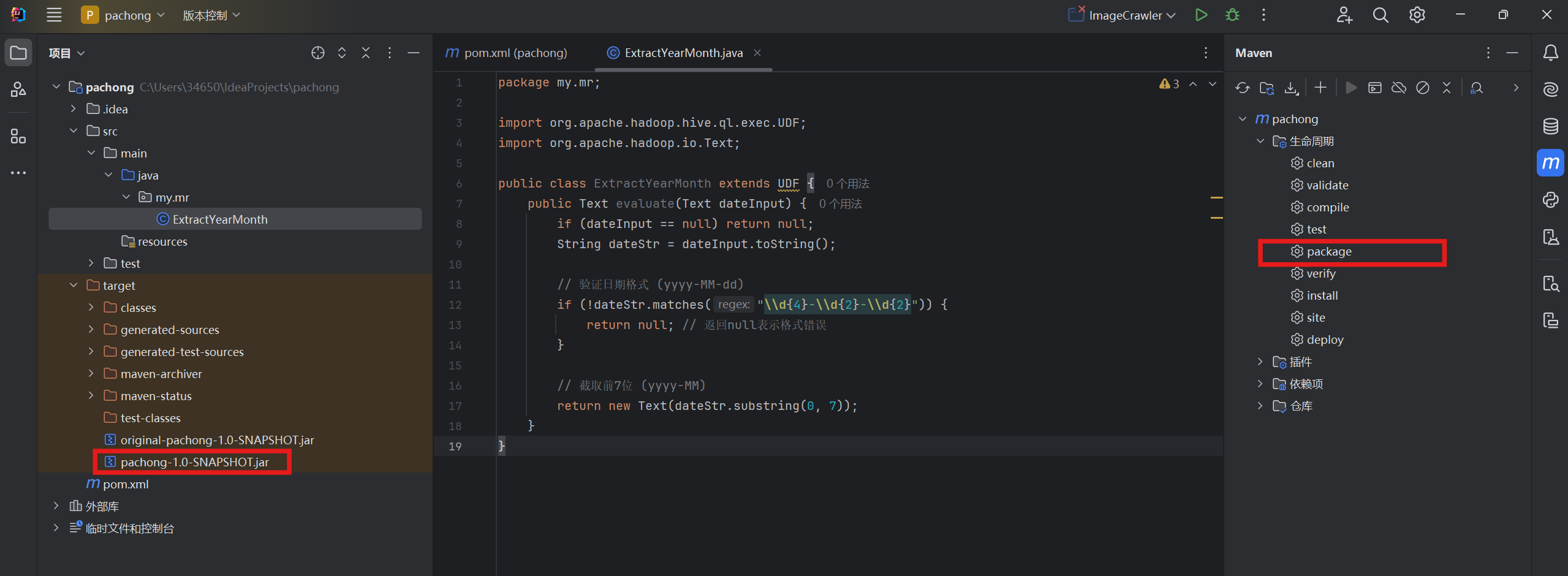

下面介绍UDF的使用

源代码:

package my.mr;

import org.apache.hadoop.hive.ql.exec.UDF;

import org.apache.hadoop.io.Text;

public class ExtractYearMonth extends UDF {

public Text evaluate(Text dateInput) {

if (dateInput == null) return null;

String dateStr = dateInput.toString();

// 验证日期格式 (yyyy-MM-dd)

if (!dateStr.matches("\\d{4}-\\d{2}-\\d{2}")) {

return null; // 返回null表示格式错误

}

// 截取前7位 (yyyy-MM)

return new Text(dateStr.substring(0, 7));

}

}

在pom.xml中要排除 Hadoop/Hive 依赖

<build>

<plugins>

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-shade-plugin</artifactId>

<version>3.5.0</version>

<executions>

<execution>

<phase>package</phase>

<goals>

<goal>shade</goal>

</goals>

<configuration>

<createDependencyReducedPom>false</createDependencyReducedPom>

<filters>

<filter>

<!-- 排除签名文件防止安全警告 -->

<artifact>*:*</artifact>

<excludes>

<exclude>META-INF/*.SF</exclude>

<exclude>META-INF/*.DSA</exclude>

<exclude>META-INF/*.RSA</exclude>

</excludes>

</filter>

</filters>

<artifactSet>

<!-- 排除 Hadoop/Hive 依赖 -->

<excludes>

<exclude>org.apache.hadoop:*</exclude>

<exclude>org.apache.hive:*</exclude>

</excludes>

</artifactSet>

</configuration>

</execution>

</executions>

</plugin>

</plugins>

</build>

打为jar包并放在hdfs的udf文件夹中,并在DG中注册为函数,之后就能和其他函数一样使用了。

浙公网安备 33010602011771号

浙公网安备 33010602011771号