Installing k8s with kubeadm

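1. Environment preparation

(1) Install Docker and kubeadm on all nodes

(2) Deploy the Kubernetes master

(3) Deploy the container network plugin

(4) Deploy the Kubernetes nodes and join them to the cluster

(5) Deploy the Dashboard web UI to view Kubernetes resources visually

(6) Deploy a Harbor private registry to store images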

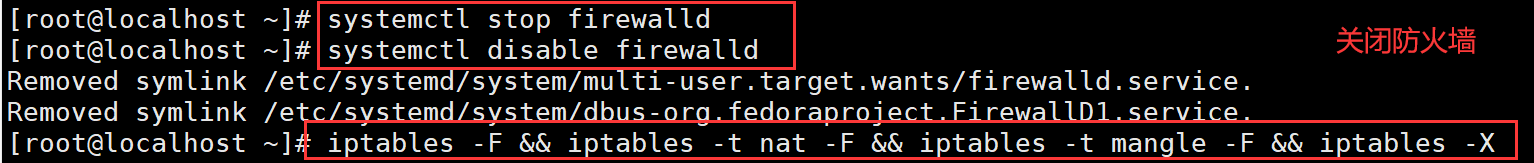

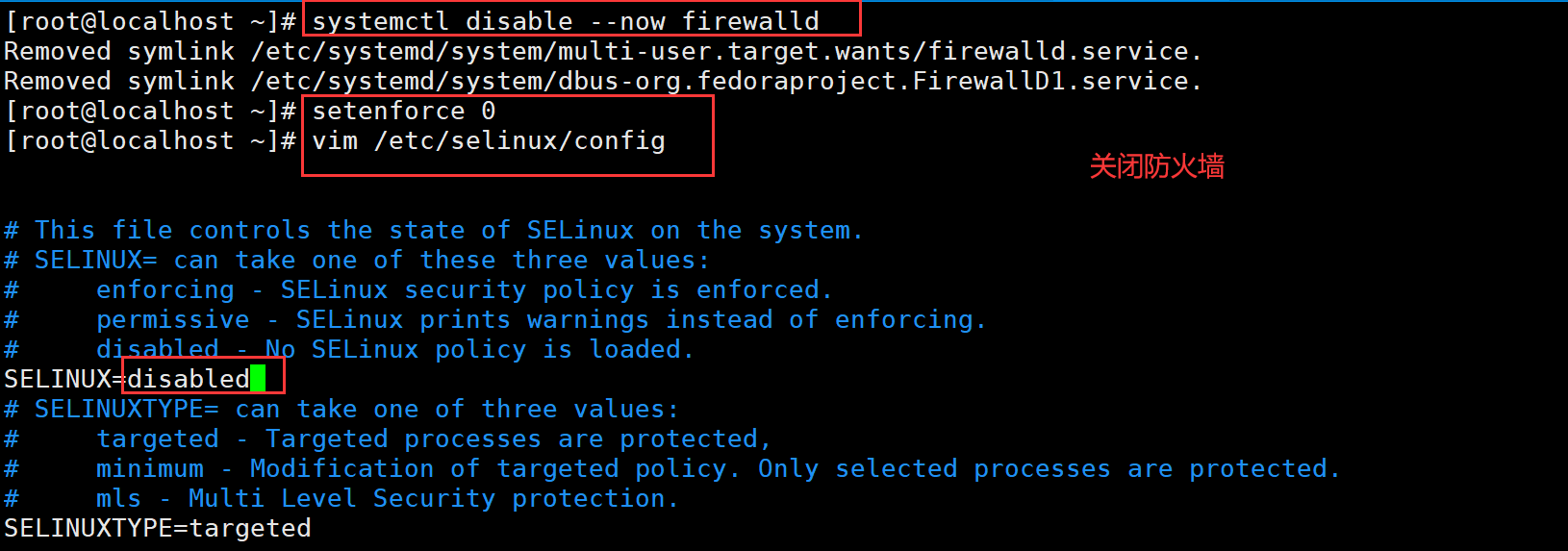

#Disable the firewall

systemctl stop firewalld

systemctl disable firewalld

iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

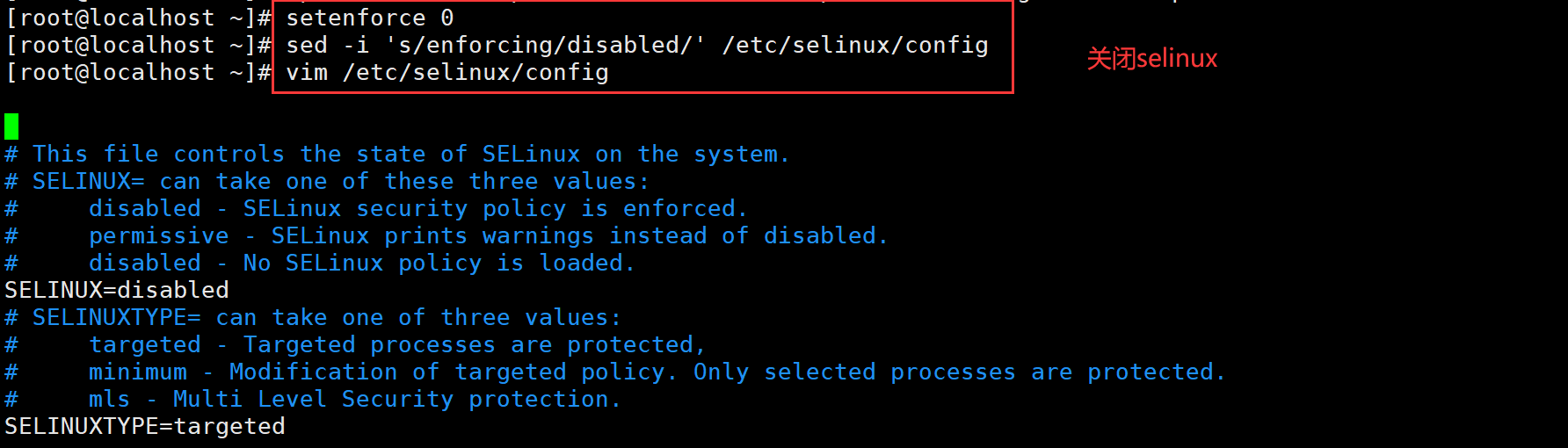

#Disable SELinux

setenforce 0

sed -i 's/enforcing/disabled/' /etc/selinux/config

vim /etc/selinux/config

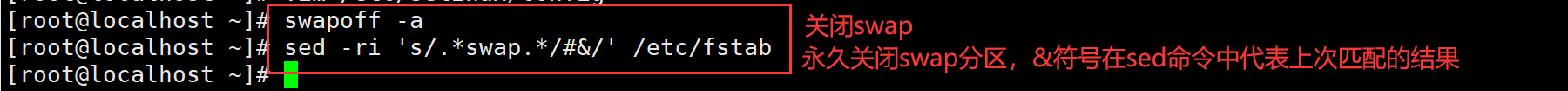

#Disable swap

swapoff -a #swap must be turned off

#Permanently disable swap; in sed, & stands for the text matched by the pattern

sed -ri 's/.*swap.*/#&/' /etc/fstab
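To illustrate what `&` does here, the sketch below wraps a slightly stricter variant of the command in a hypothetical helper, `comment_swap`. It skips lines that are already commented out, so running it twice does not stack `#` characters. The helper name and the temporary-file approach are assumptions for illustration, not part of the original steps.

```shell
#!/bin/bash
# comment_swap FILE: prefix every not-yet-commented line mentioning "swap" with '#'.
# In the replacement, & expands to the whole text matched by .*swap.*
comment_swap() {
    local file=$1 tmp rc
    tmp=$(mktemp) || return 1
    # Lines that already start with '#' are left alone (the /.../! address).
    sed -E '/^[[:space:]]*#/!s/.*swap.*/#&/' "$file" > "$tmp" && cat "$tmp" > "$file"
    rc=$?
    rm -f "$tmp"
    return $rc
}

# Usage (as root): comment_swap /etc/fstab
```

Writing the result back with `cat` rather than `mv` keeps the original file's ownership and permissions.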

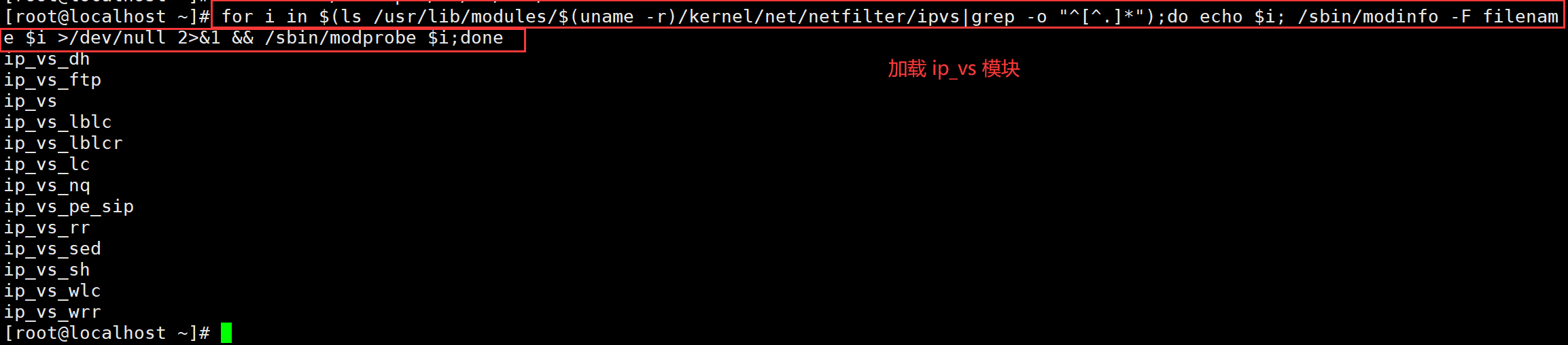

#Load the ip_vs modules

for i in $(ls /usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs|grep -o "^[^.]*");do echo $i; /sbin/modinfo -F filename $i >/dev/null 2>&1 && /sbin/modprobe $i;done
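The one-liner above is dense, so here is a more readable sketch of the same idea: take each file name in the ipvs module directory, strip everything from the first dot, and load the module if `modinfo` knows it. The helper name `list_modules` is hypothetical.

```shell
#!/bin/bash
# list_modules DIR: print the module name for every file in DIR
# (the file name with everything from the first '.' removed, e.g. ip_vs_rr.ko.xz -> ip_vs_rr).
list_modules() {
    local dir=$1 f
    for f in "$dir"/*; do
        [ -e "$f" ] || continue      # directory empty or missing
        f=${f##*/}                   # drop the directory part
        printf '%s\n' "${f%%.*}"     # drop the extension(s)
    done
}

# Usage (as root), equivalent to the loop above:
# for m in $(list_modules "/usr/lib/modules/$(uname -r)/kernel/net/netfilter/ipvs"); do
#     /sbin/modinfo -F filename "$m" >/dev/null 2>&1 && /sbin/modprobe "$m"
# done
```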

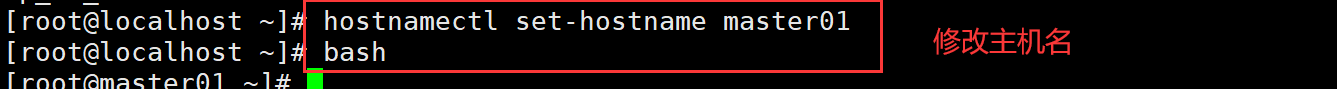

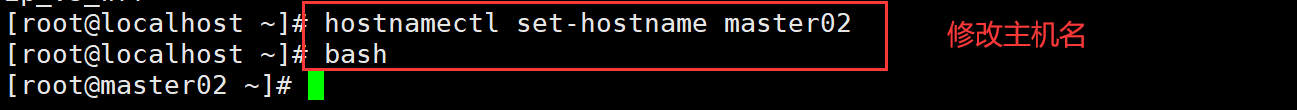

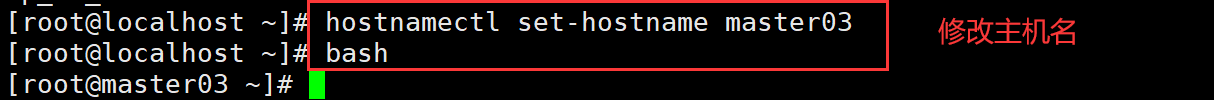

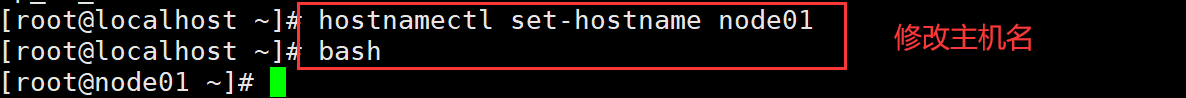

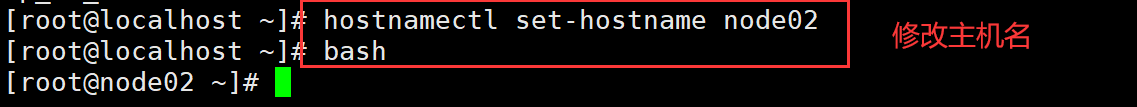

#Set the hostname (run the matching command on each node)

hostnamectl set-hostname master01

hostnamectl set-hostname master02

hostnamectl set-hostname master03

hostnamectl set-hostname node01

hostnamectl set-hostname node02

bash

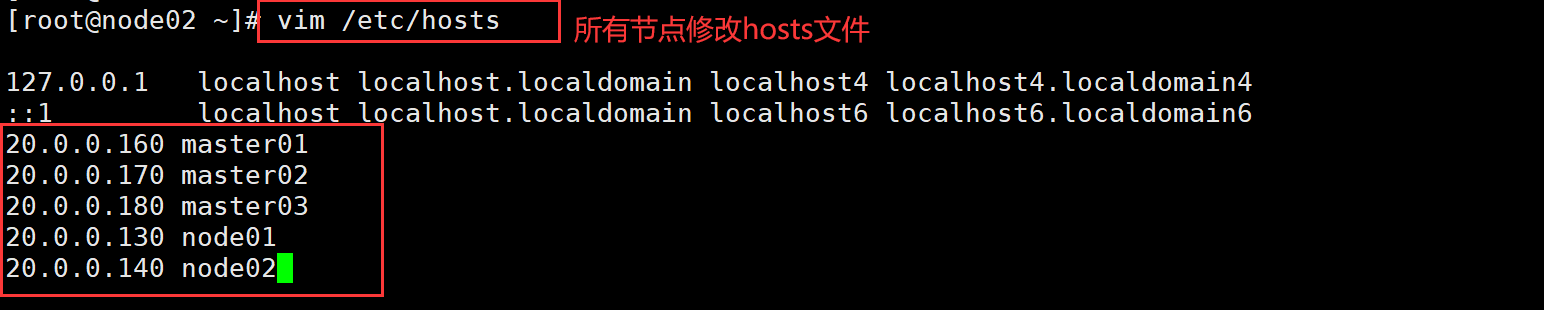

#Add hosts entries on the masters

vim /etc/hosts

20.0.0.160 master01

20.0.0.170 master02

20.0.0.180 master03

20.0.0.130 node01

20.0.0.140 node02
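If you would rather script this than edit the file in vim, a minimal sketch follows. `add_host` is a hypothetical helper that appends an entry only when the host name is not already present, so it can be re-run safely.

```shell
#!/bin/bash
# add_host FILE IP NAME: append "IP NAME" to FILE unless NAME already has an entry.
add_host() {
    local file=$1 ip=$2 name=$3
    grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null \
        || printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage (as root), with the addresses listed above:
# add_host /etc/hosts 20.0.0.160 master01
# add_host /etc/hosts 20.0.0.170 master02
# add_host /etc/hosts 20.0.0.180 master03
# add_host /etc/hosts 20.0.0.130 node01
# add_host /etc/hosts 20.0.0.140 node02
```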

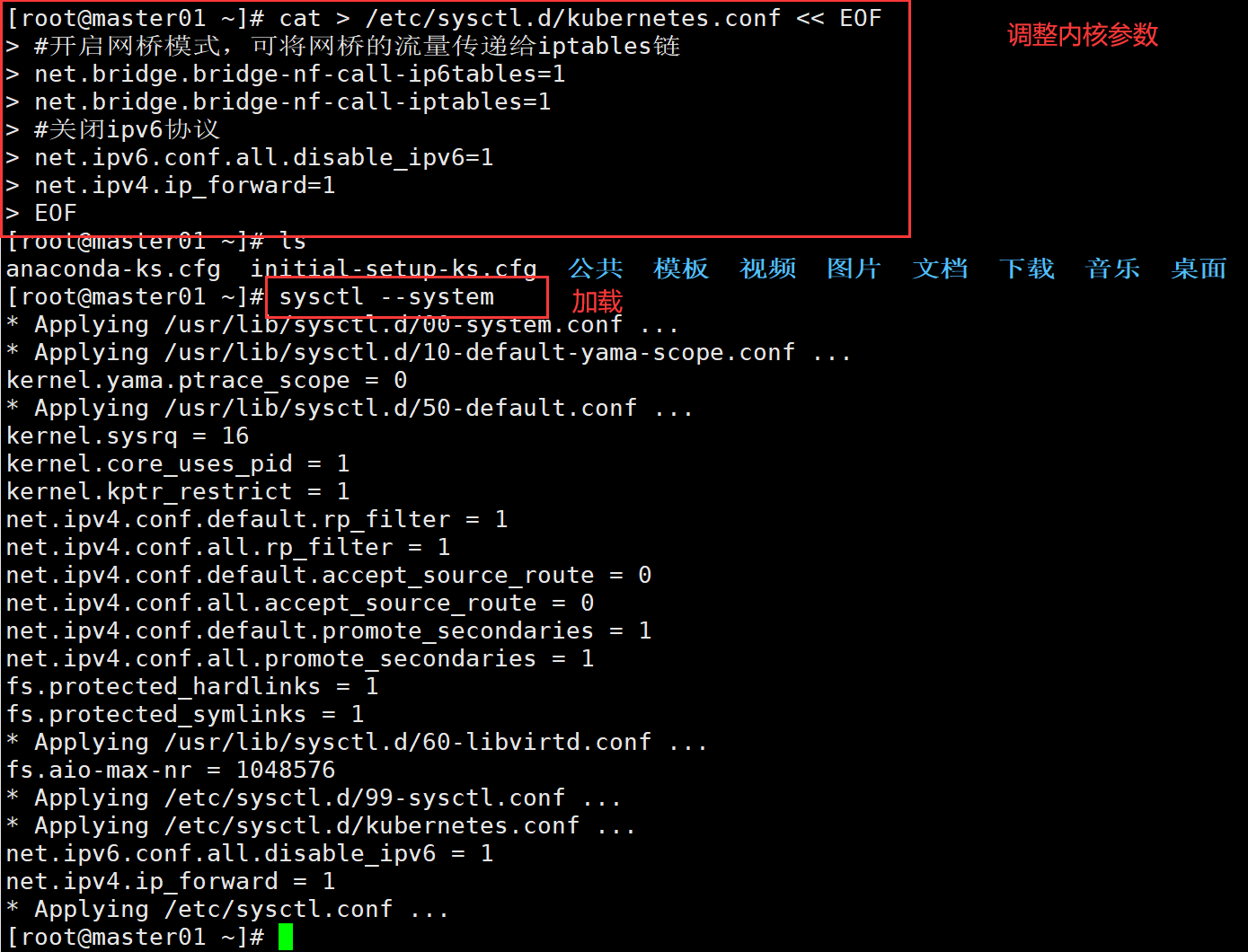

#Tune kernel parameters

cat > /etc/sysctl.d/kubernetes.conf << EOF

#Enable bridge mode so that bridged traffic is passed to the iptables chains

net.bridge.bridge-nf-call-ip6tables=1

net.bridge.bridge-nf-call-iptables=1

#Disable IPv6

net.ipv6.conf.all.disable_ipv6=1

net.ipv4.ip_forward=1

EOF

ls

sysctl --system #apply the settings
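One thing to watch: the `net.bridge.*` keys only exist once the `br_netfilter` kernel module is loaded, so `sysctl --system` may complain about them on a fresh machine. The sketch below is a hypothetical helper, `missing_sysctl_keys`, that reports which keys from a config file are absent under `/proc/sys`. The helper and its optional second argument (an alternate root, used only for testing) are assumptions for illustration.

```shell
#!/bin/bash
# missing_sysctl_keys CONF [ROOT]: print every key in CONF that has no file under ROOT
# (ROOT defaults to /proc/sys). Blank lines and '#' comments are skipped.
missing_sysctl_keys() {
    local conf=$1 root=${2:-/proc/sys} line key
    while IFS= read -r line; do
        case $line in ''|'#'*) continue ;; esac
        key=${line%%=*}                 # text before the first '='
        key=${key//[[:space:]]/}        # strip stray whitespace
        [ -e "$root/${key//.//}" ] || printf '%s\n' "$key"   # a.b.c -> a/b/c
    done < "$conf"
}

# Usage (as root):
# missing_sysctl_keys /etc/sysctl.d/kubernetes.conf
# If net.bridge.* keys are reported, load the module and re-apply:
# modprobe br_netfilter && sysctl --system
```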

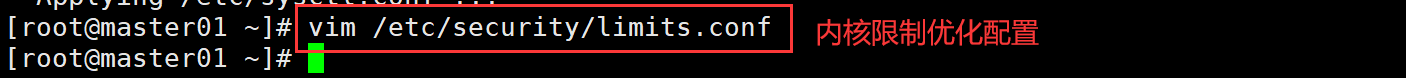

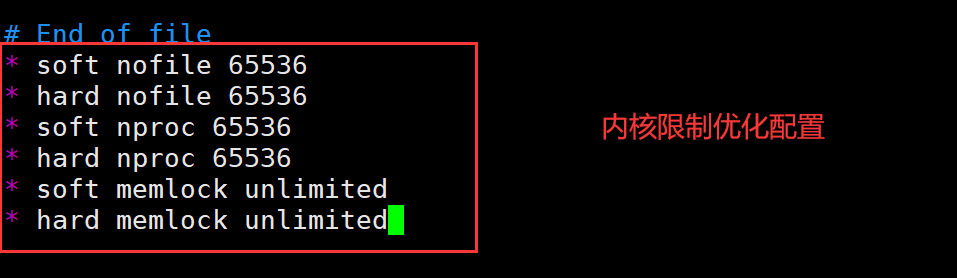

#Tune resource limits

vim /etc/security/limits.conf

* soft nofile 65536

* hard nofile 65536

* soft nproc 65536

* hard nproc 65536

* soft memlock unlimited

* hard memlock unlimited

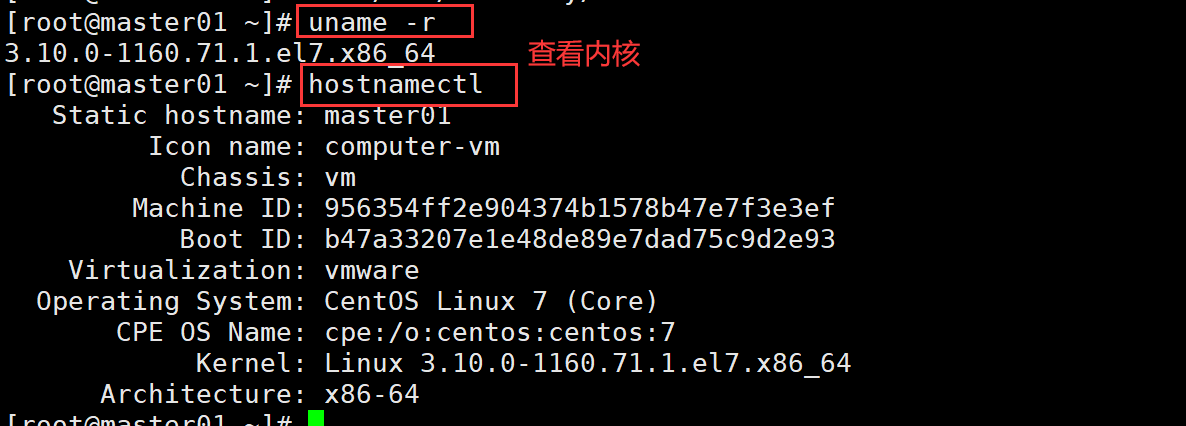

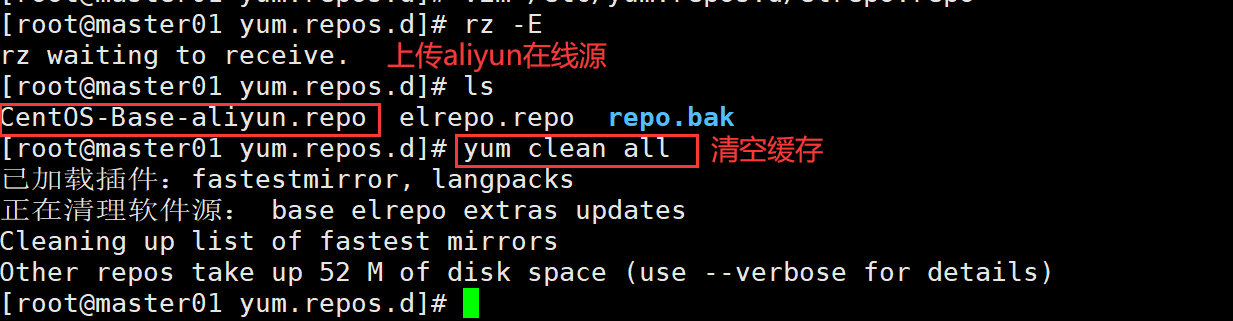

#Upgrade the kernel

uname -r #show the current kernel version

hostnamectl

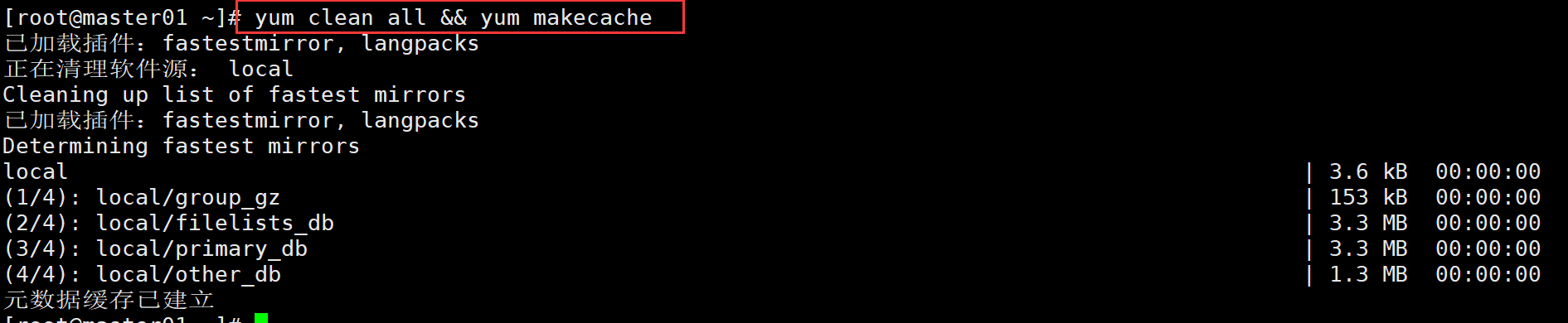

yum clean all && yum makecache

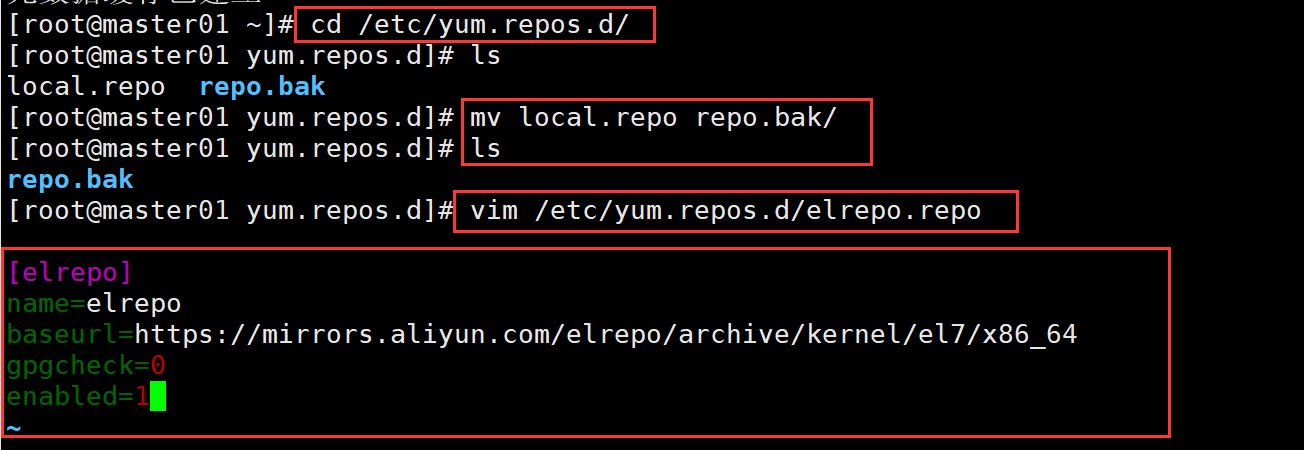

cd /etc/yum.repos.d/

ls

mv local.repo repo.bak/

ls

vim /etc/yum.repos.d/elrepo.repo

[elrepo]

name=elrepo

baseurl=https://mirrors.aliyun.com/elrepo/archive/kernel/el7/x86_64

gpgcheck=0

enabled=1

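rz -E #upload the Aliyun online repo file

CentOS-Base-aliyun.repo

yum clean all #clear the cache

yum install -y kernel-lt kernel-lt-devel #upgrade the kernel on every cluster node

#awk -F\' '$1=="menuentry " {print i++ " : " $2}' /etc/grub2.cfg #list the kernel menu entries with their index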
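The awk one-liner splits each line on single quotes and prints a running index next to every `menuentry` title. That index is what `grub2-set-default` expects, so it is worth checking that the new kernel really is entry 0 before rebooting. A small sketch, with the hypothetical wrapper name `list_kernel_entries`:

```shell
#!/bin/bash
# list_kernel_entries [FILE]: print "index : title" for each top-level menuentry
# in a GRUB2 config (defaults to /etc/grub2.cfg, as in the command above).
list_kernel_entries() {
    awk -F"'" '$1=="menuentry " {print i++ " : " $2}' "${1:-/etc/grub2.cfg}"
}

# Usage (as root): list_kernel_entries
# Then pass the index of the desired kernel to grub2-set-default.
```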

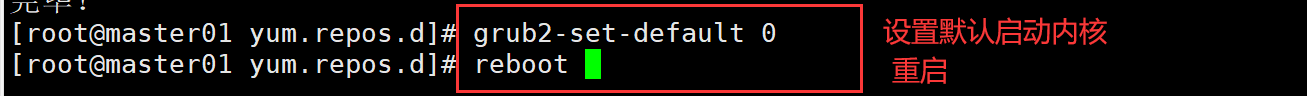

grub2-set-default 0 #set the default boot kernel

reboot #reboot the system

uname -r

hostnamectl #check the running version

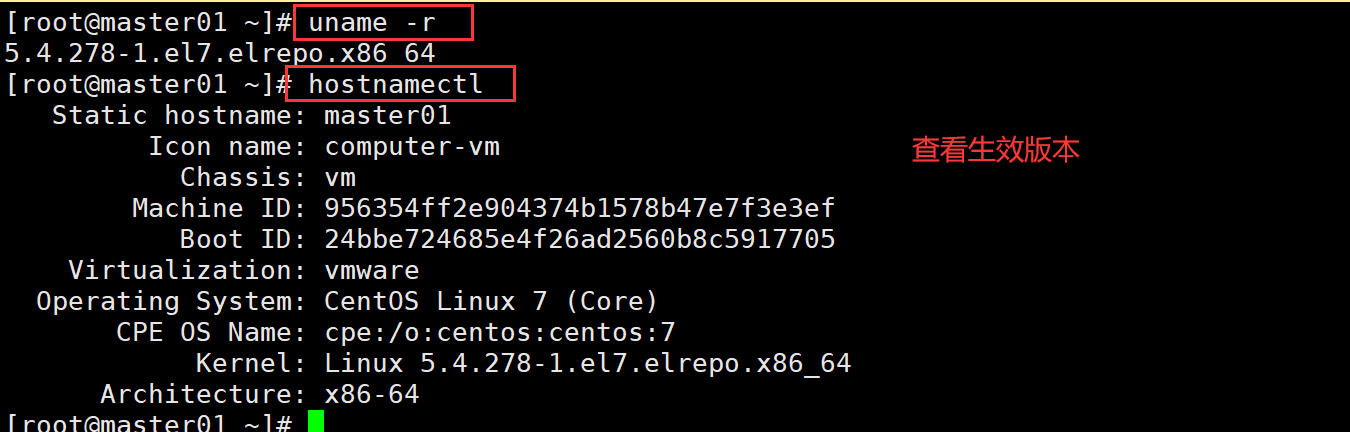

2. Install Docker on all nodes

yum install -y yum-utils device-mapper-persistent-data lvm2 #yum-utils provides yum-config-manager

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum install -y docker-ce docker-ce-cli containerd.io

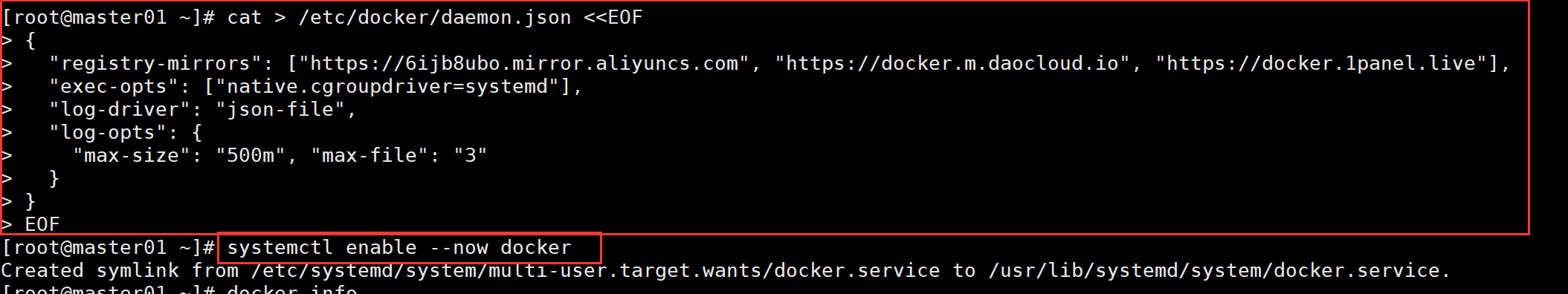

cat > /etc/docker/daemon.json <<EOF

{

"registry-mirrors": ["https://6ijb8ubo.mirror.aliyuncs.com", "https://docker.m.daocloud.io", "https://docker.1panel.live"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "500m", "max-file": "3"

}

}

EOF

systemctl enable --now docker

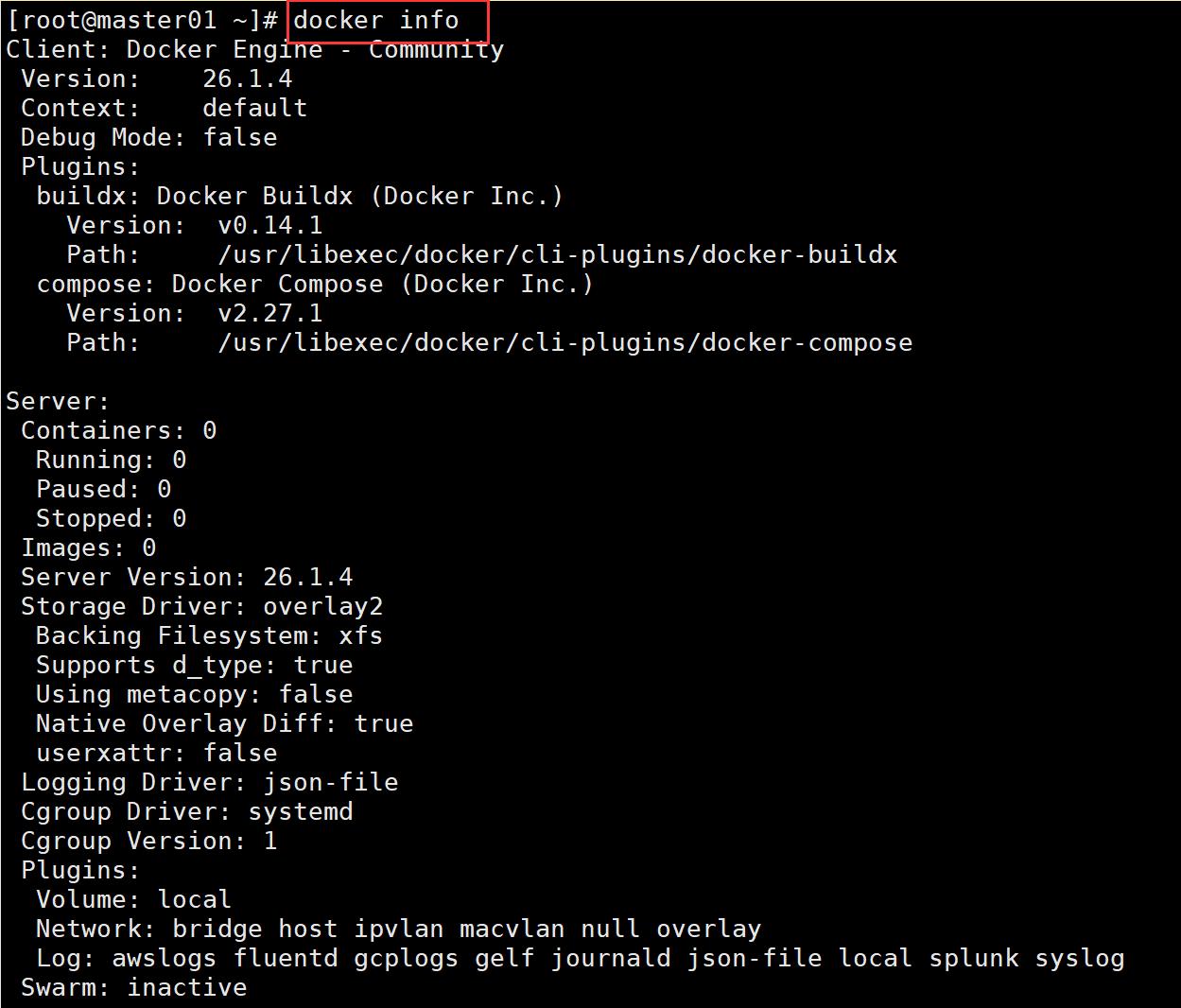

docker info

3. Install kubeadm on all nodes

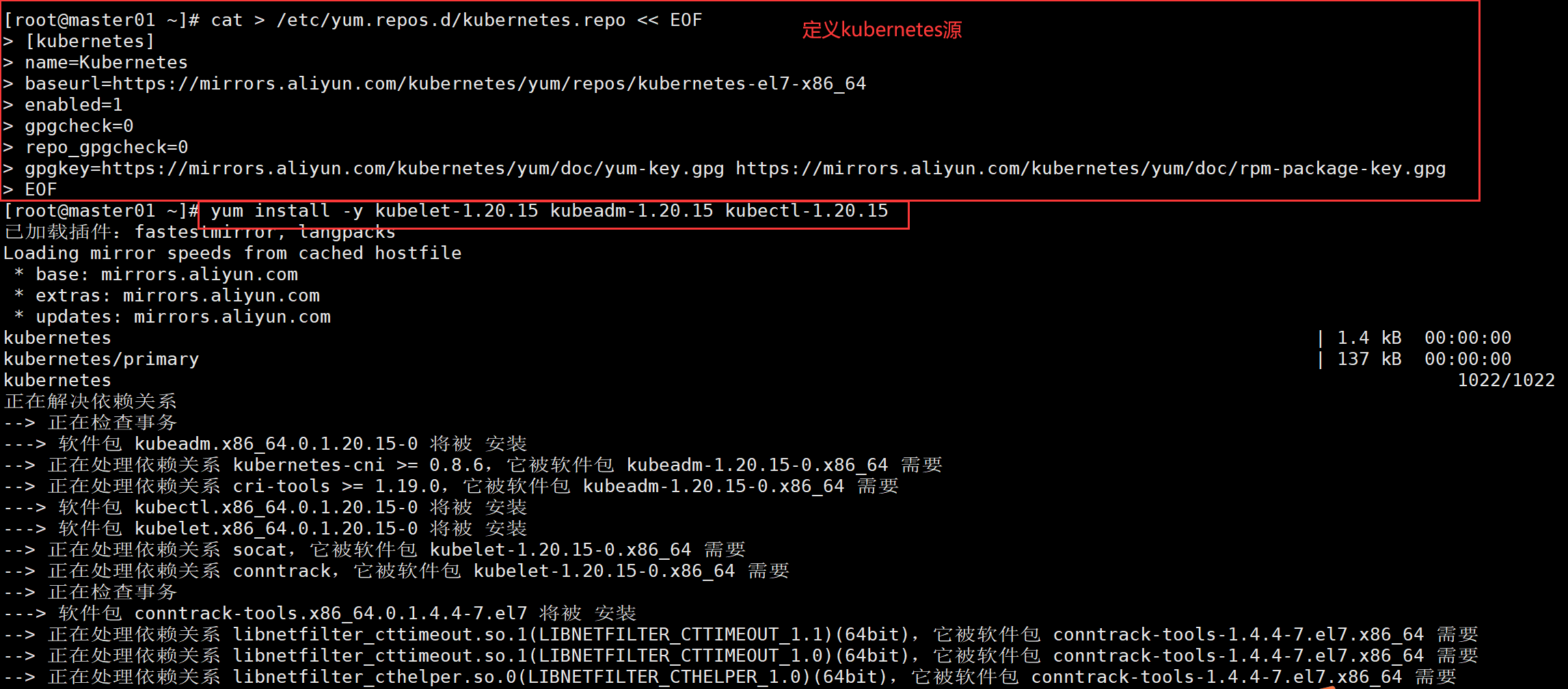

//Define the Kubernetes repo

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubelet-1.20.15 kubeadm-1.20.15 kubectl-1.20.15

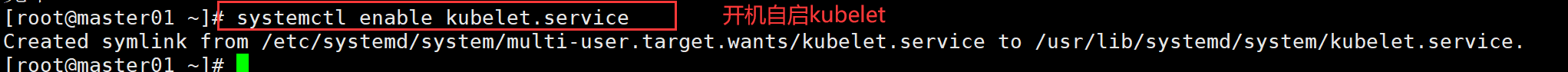

//Enable kubelet at boot

systemctl enable kubelet.service

4. Deploy the Nginx load balancers

20.0.0.100 lb01

20.0.0.110 lb02

#### Run on lb01 and lb02 ####

systemctl disable --now firewalld

setenforce 0

vim /etc/selinux/config

SELINUX=disabled

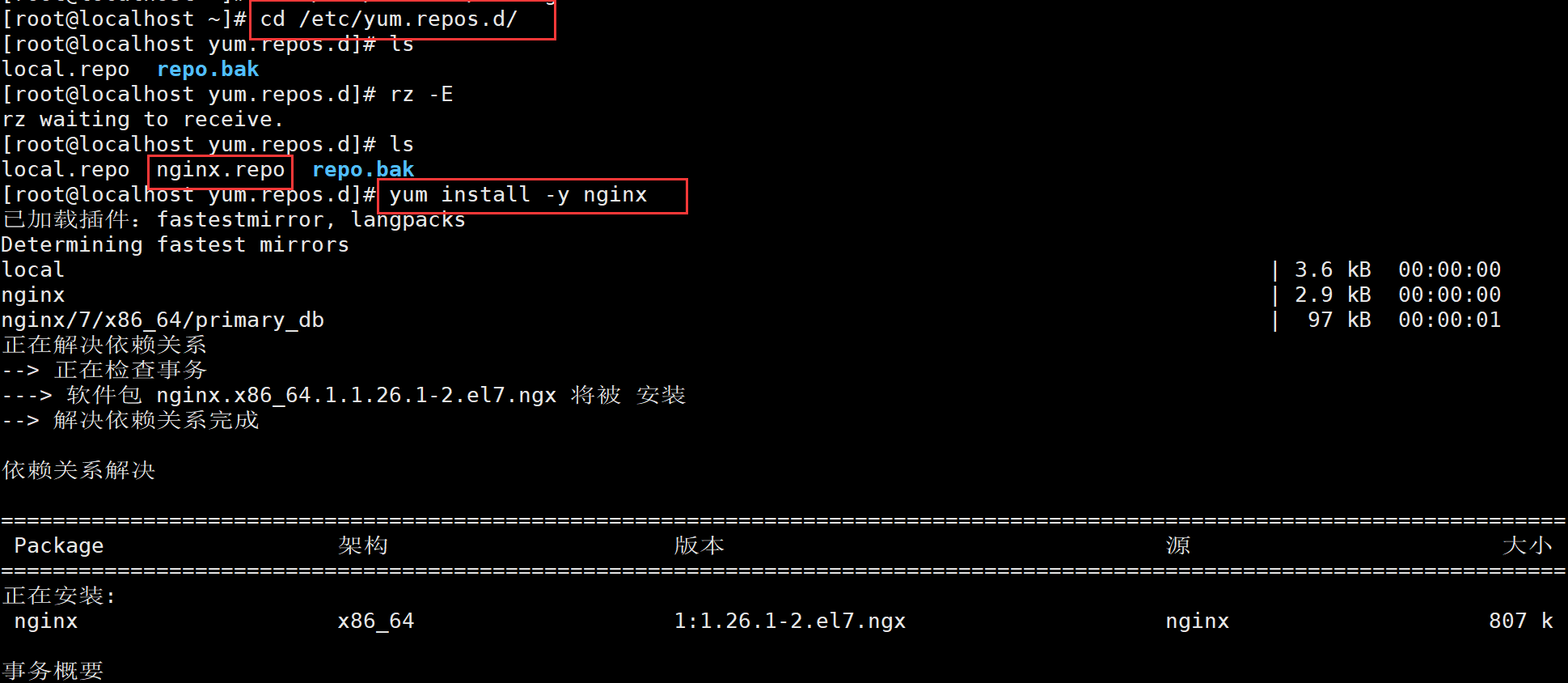

cd /etc/yum.repos.d/

ls

rz -E #upload nginx.repo

yum install -y nginx

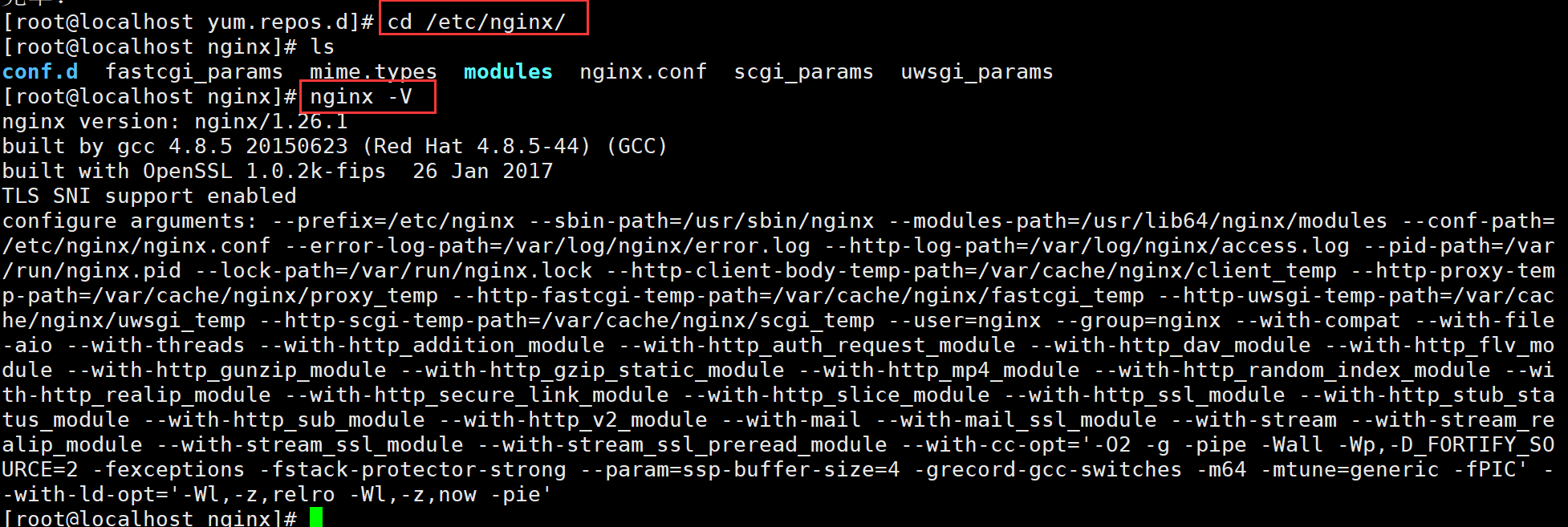

cd /etc/nginx/

ls

nginx -V

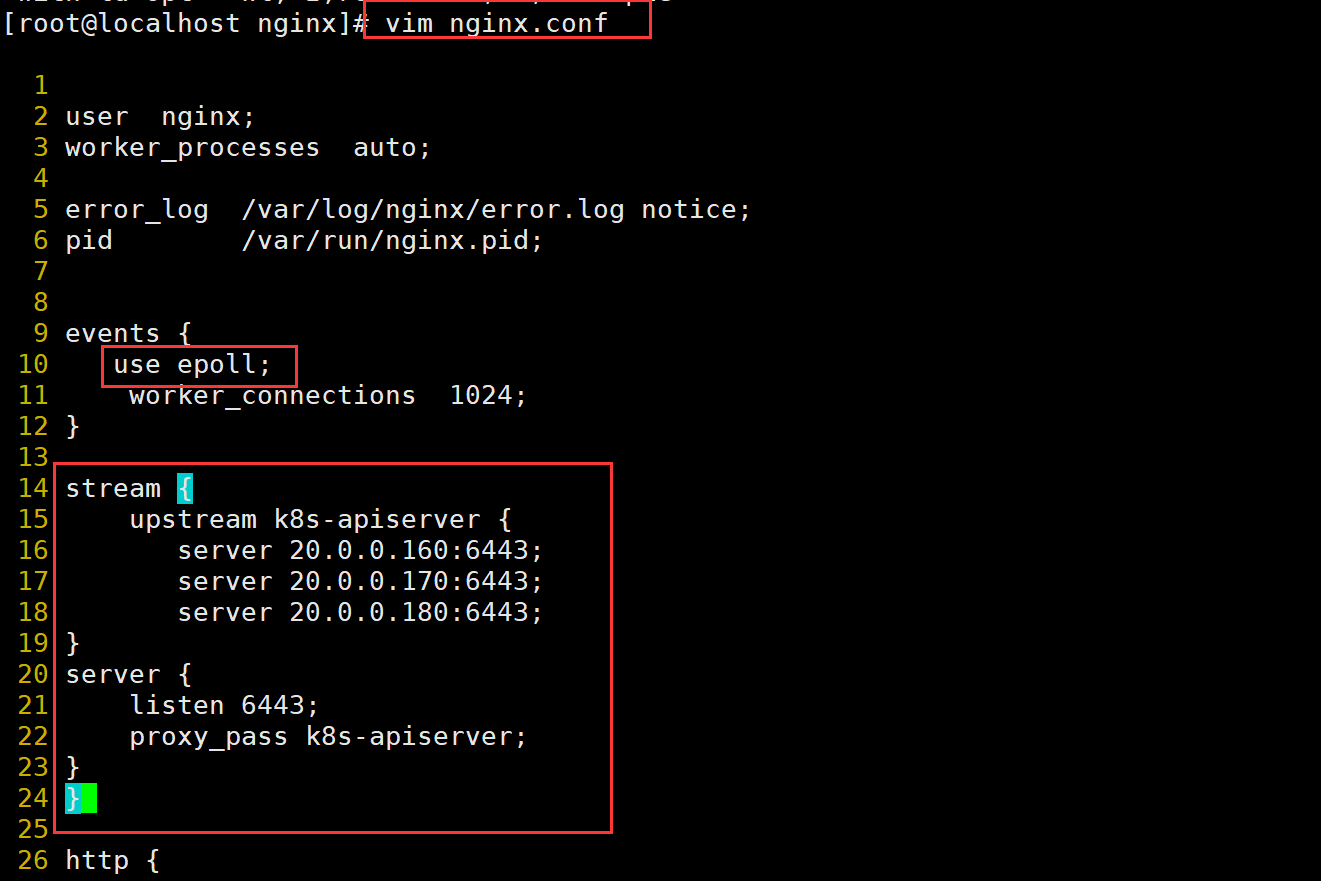

//Edit the nginx configuration file to set up layer-4 reverse-proxy load balancing, pointing at the IPs of the k8s cluster's master nodes on port 6443

vim nginx.conf

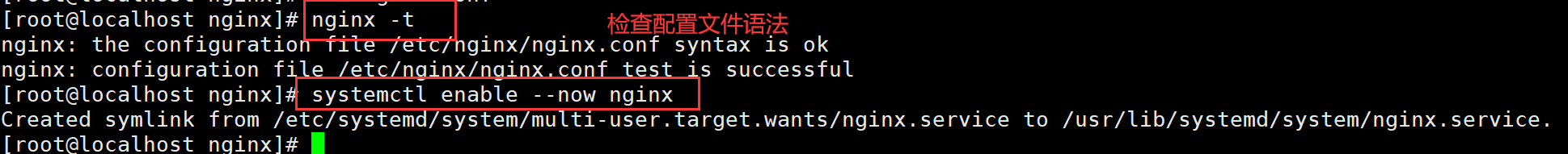
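The actual configuration is only visible in the screenshot, so as a rough guide here is a sketch of what such a layer-4 stanza could look like. The helper `print_stream_block` and the upstream name `k8s-apiservers` are assumptions; the addresses are the three masters listed earlier. The `stream` block sits at the top level of nginx.conf, next to `http`, not inside it, and needs an nginx build with the stream module.

```shell
#!/bin/bash
# print_stream_block: emit a layer-4 (stream) proxy stanza that forwards
# port 6443 to the kube-apiserver on each master. Hypothetical helper.
print_stream_block() {
    cat <<'EOF'
stream {
    upstream k8s-apiservers {
        server 20.0.0.160:6443;
        server 20.0.0.170:6443;
        server 20.0.0.180:6443;
    }

    server {
        listen 6443;
        proxy_pass k8s-apiservers;
    }
}
EOF
}

# Usage: print_stream_block, then paste the output into /etc/nginx/nginx.conf
# outside the http { } block and run nginx -t.
```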

//Check the configuration file syntax

nginx -t

//Start nginx and check that it is listening on port 6443

systemctl enable --now nginx

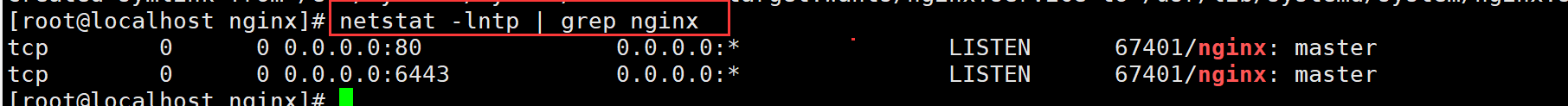

netstat -lntp | grep nginx

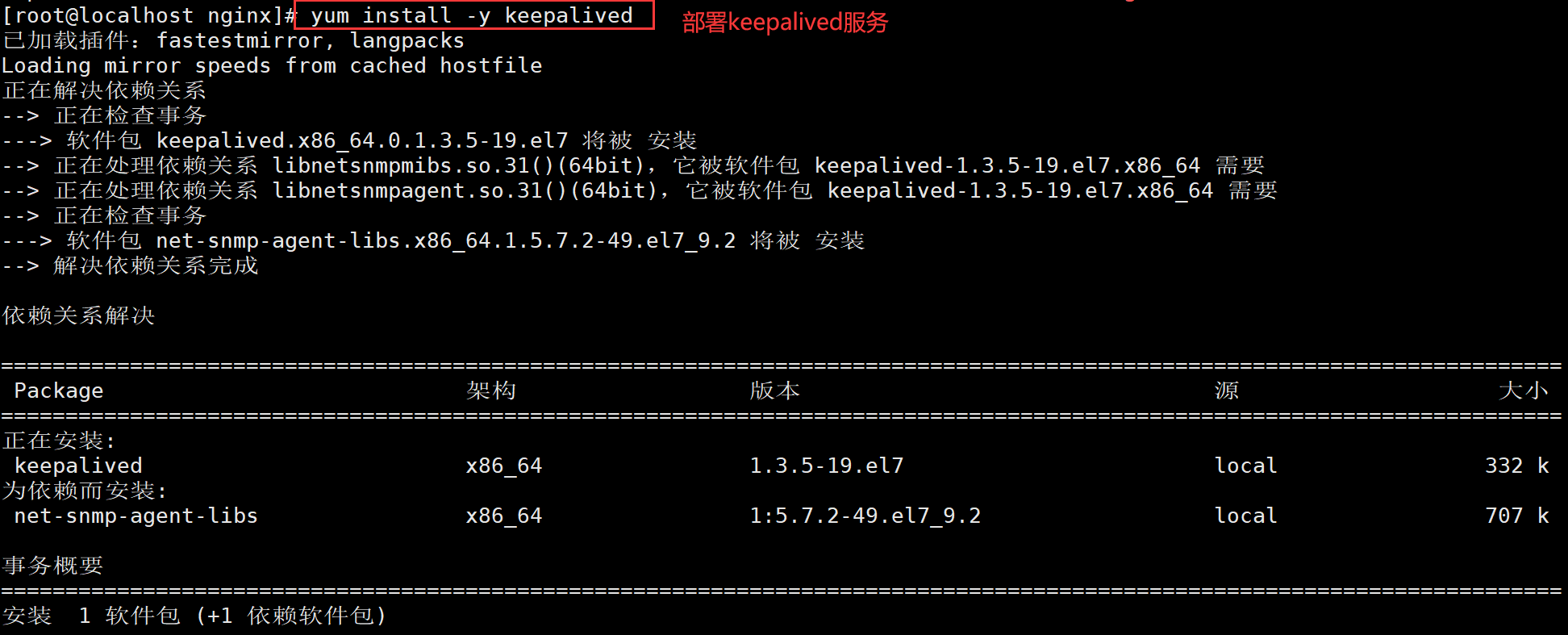

//Deploy keepalived

yum install -y keepalived

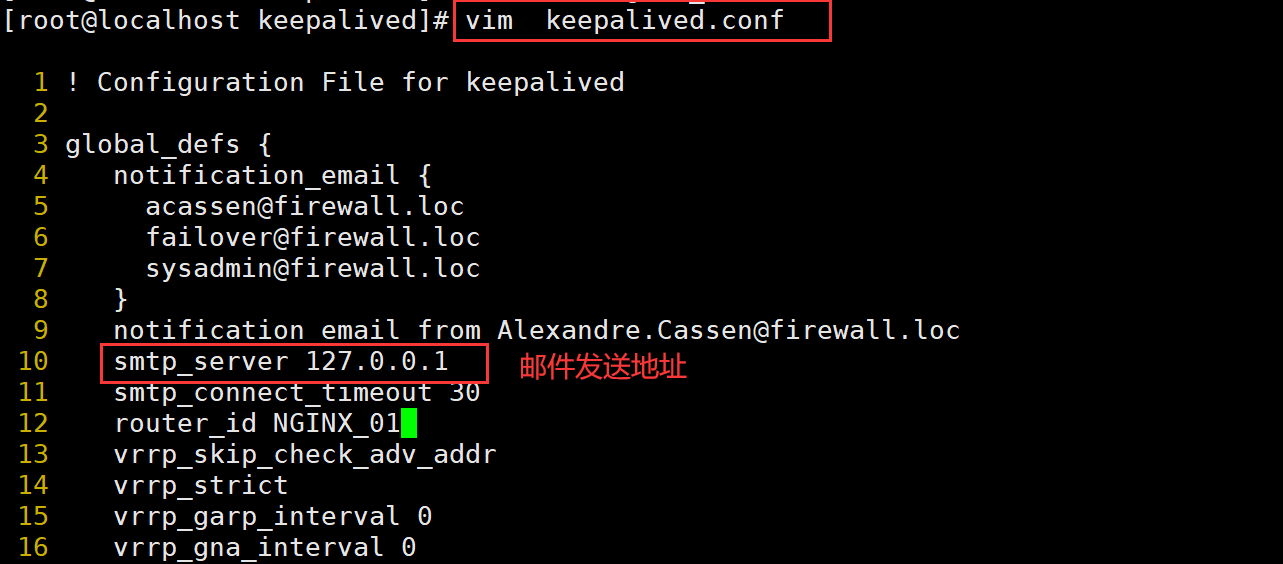

#### Run on lb01 ####

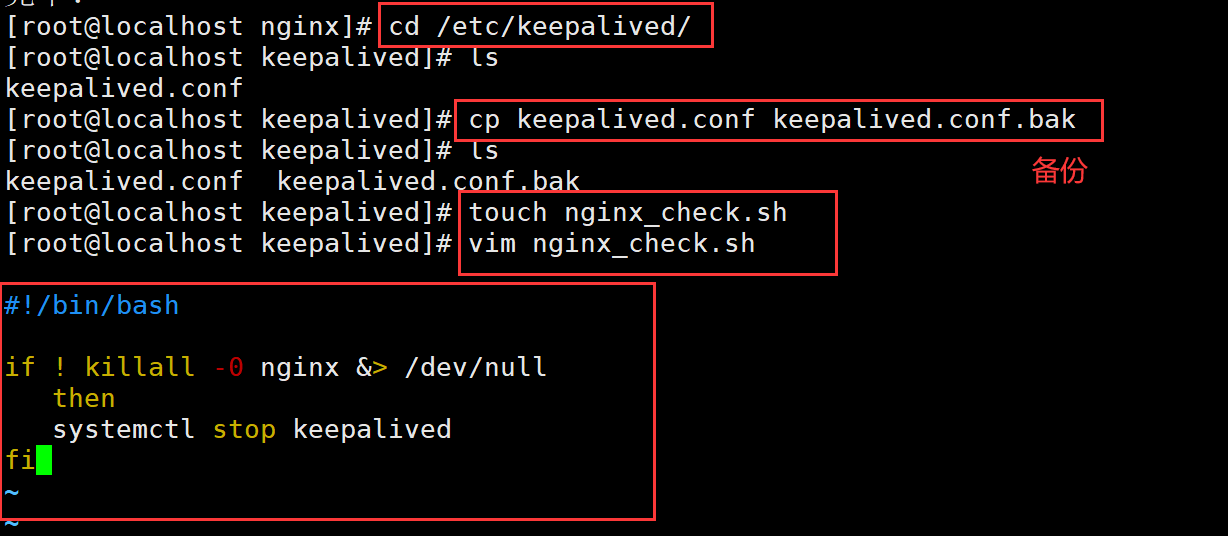

//Edit the keepalived configuration file

cd /etc/keepalived/

ls

cp keepalived.conf keepalived.conf.bak

ls

touch nginx_check.sh

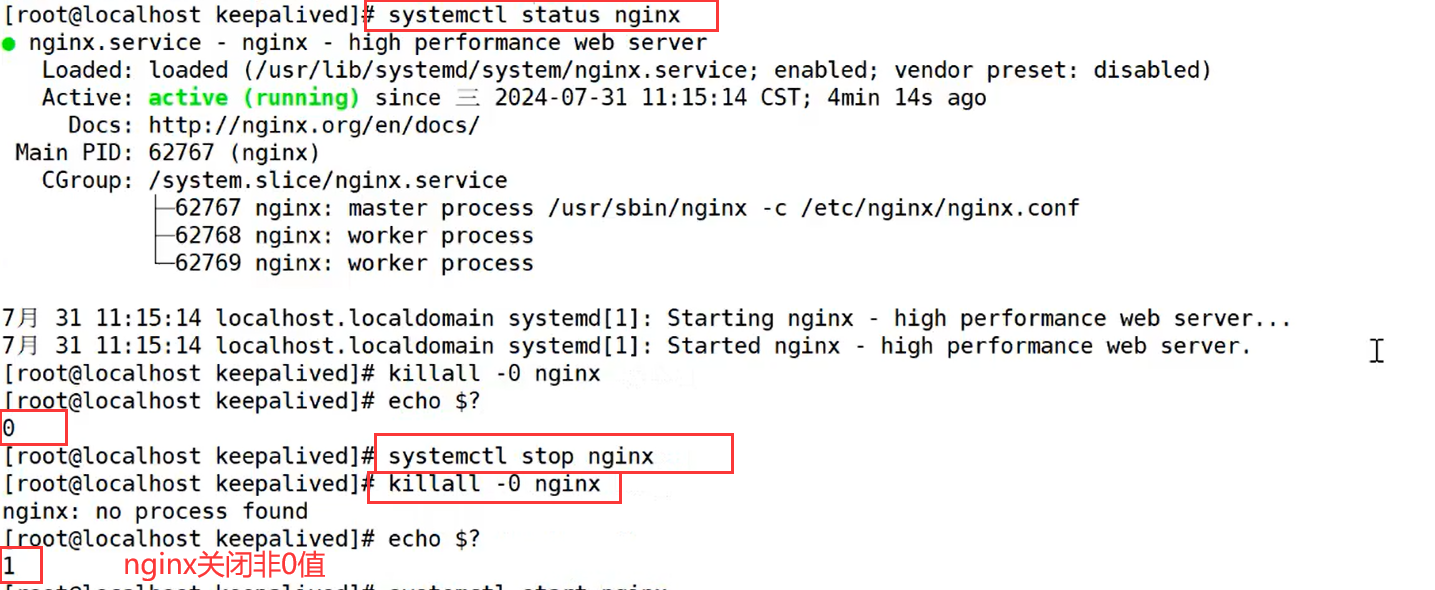

vim nginx_check.sh

#!/bin/bash

if ! killall -0 nginx &> /dev/null

then

systemctl stop keepalived

fi

chmod +x nginx_check.sh

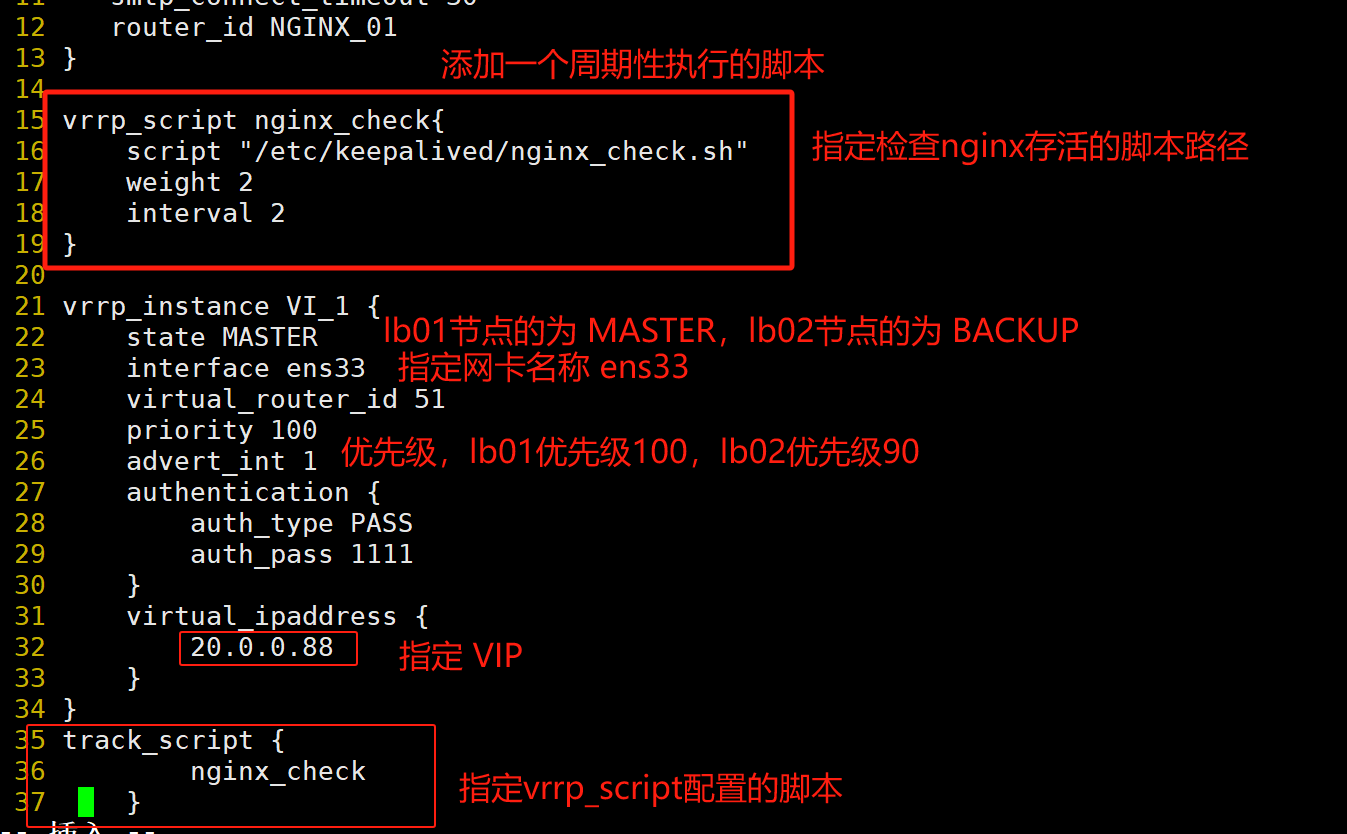

vim keepalived.conf

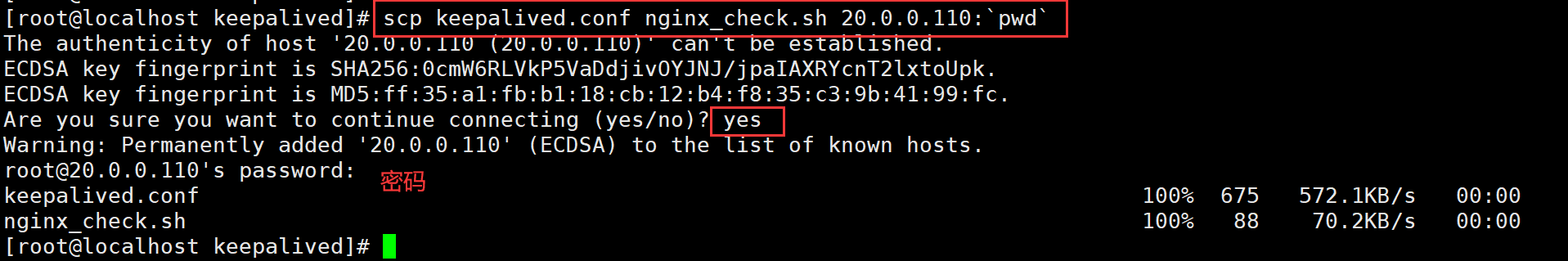
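Since the edited keepalived.conf is only shown as a screenshot, the sketch below gives one plausible shape for it. Several values are assumptions: the interface name `ens33`, `virtual_router_id 51`, the password, the check interval, and the MASTER priority of 100. The VIP 20.0.0.88 is inferred from the `kubeadm join` address used later in these notes. `render_keepalived_conf` is a hypothetical helper.

```shell
#!/bin/bash
# render_keepalived_conf [STATE] [PRIORITY] [IFACE]: print a keepalived.conf that
# tracks nginx through /etc/keepalived/nginx_check.sh and holds the VIP.
render_keepalived_conf() {
    local state=${1:-MASTER} priority=${2:-100} iface=${3:-ens33}
    cat <<EOF
global_defs {
    router_id NGINX_01
}

vrrp_script check_nginx {
    script "/etc/keepalived/nginx_check.sh"
    interval 2
}

vrrp_instance VI_1 {
    state $state
    interface $iface
    virtual_router_id 51
    priority $priority
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        20.0.0.88
    }
    track_script {
        check_nginx
    }
}
EOF
}

# Usage: render_keepalived_conf MASTER 100 ens33   # lb01
#        render_keepalived_conf BACKUP 90 ens33    # lb02
```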

scp keepalived.conf nginx_check.sh 20.0.0.110:`pwd`

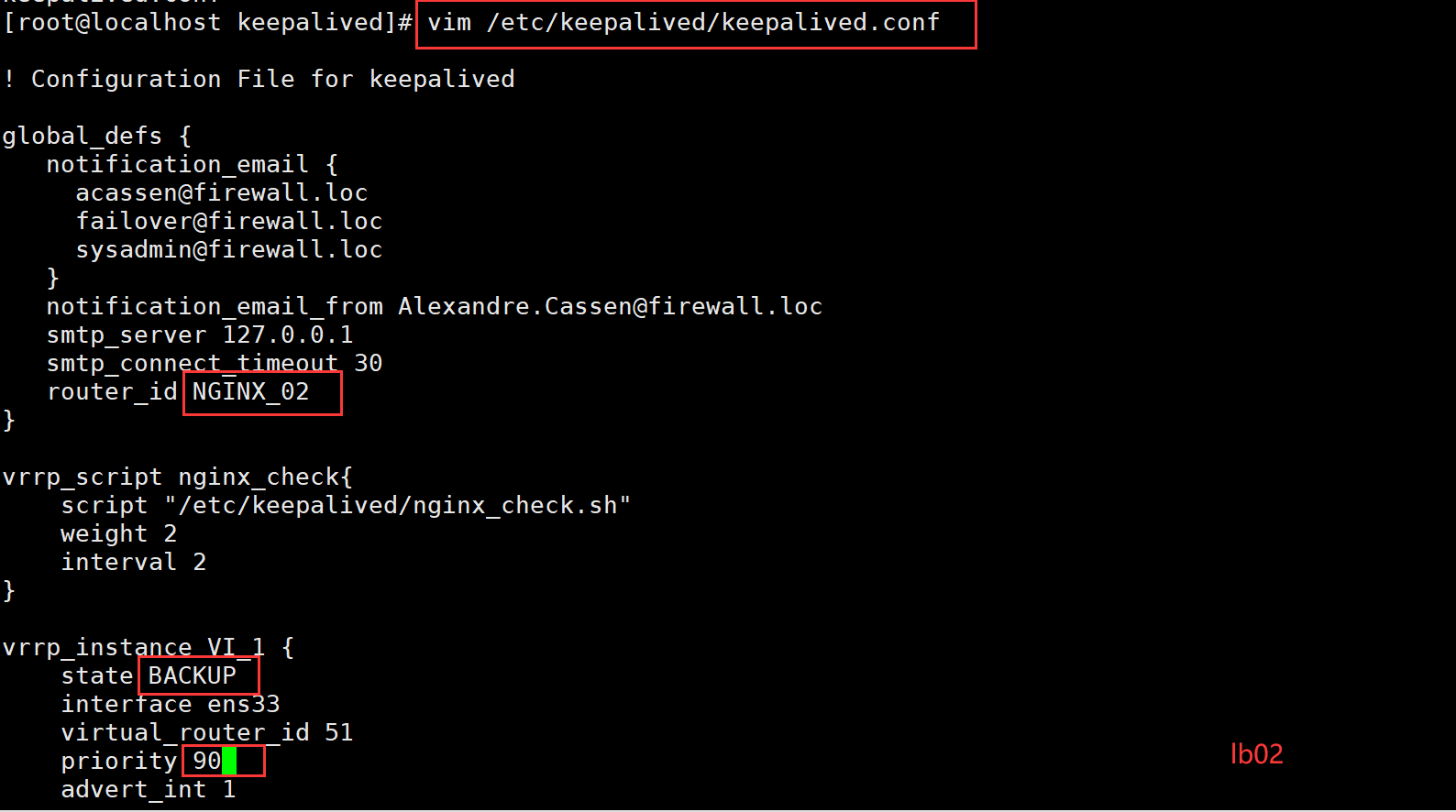

#### 20.0.0.110 lb02 #####

vim /etc/keepalived/keepalived.conf

router_id NGINX_01

state BACKUP

priority 90

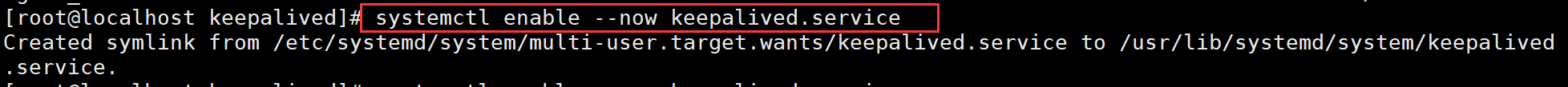

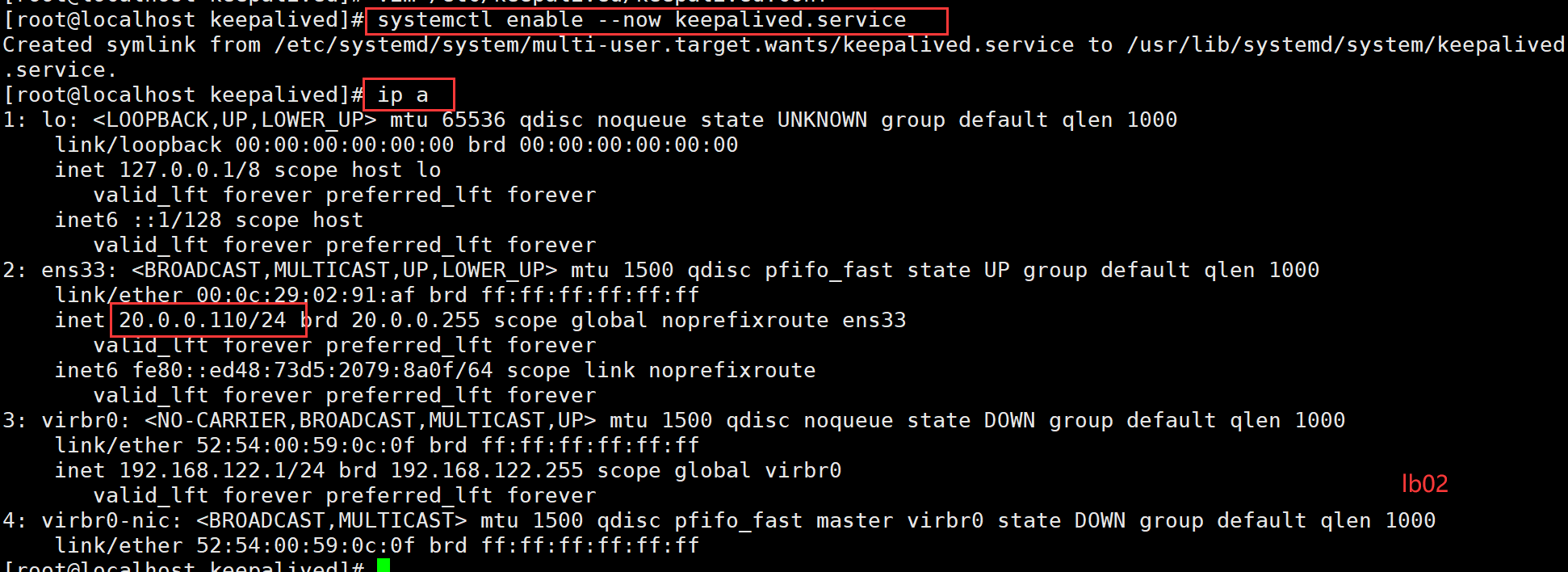

systemctl enable --now keepalived.service

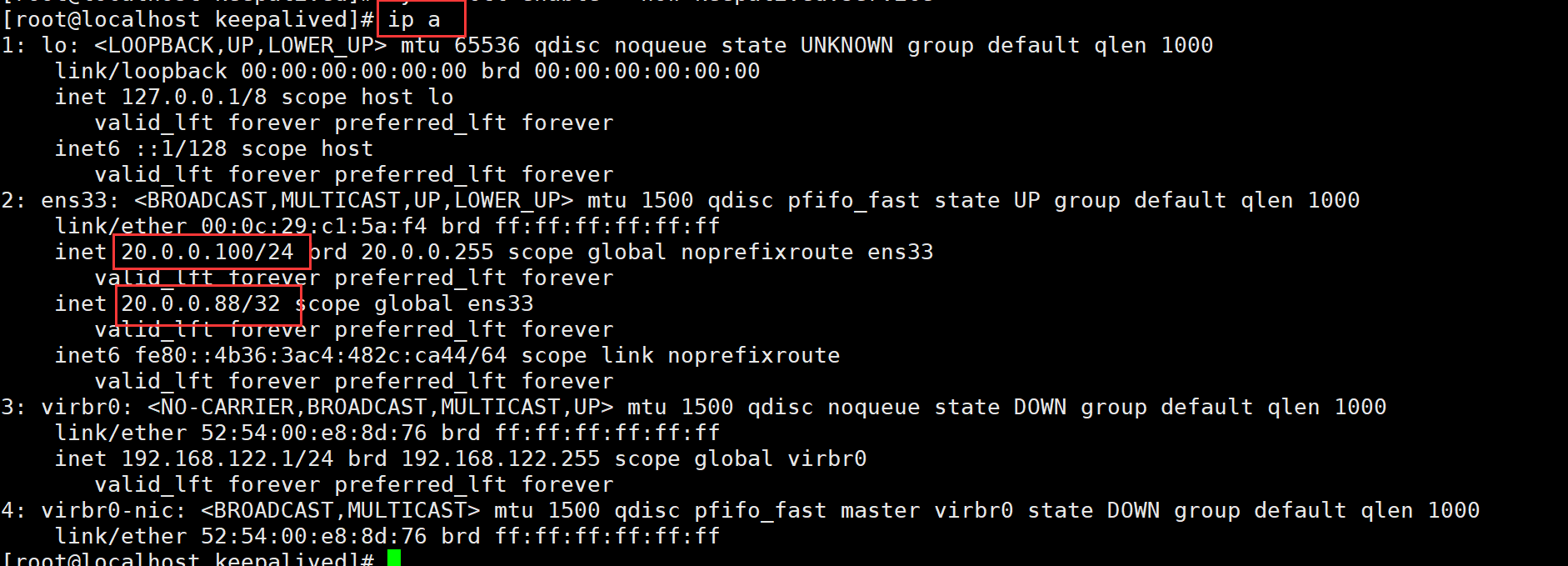

ip a

#Verify

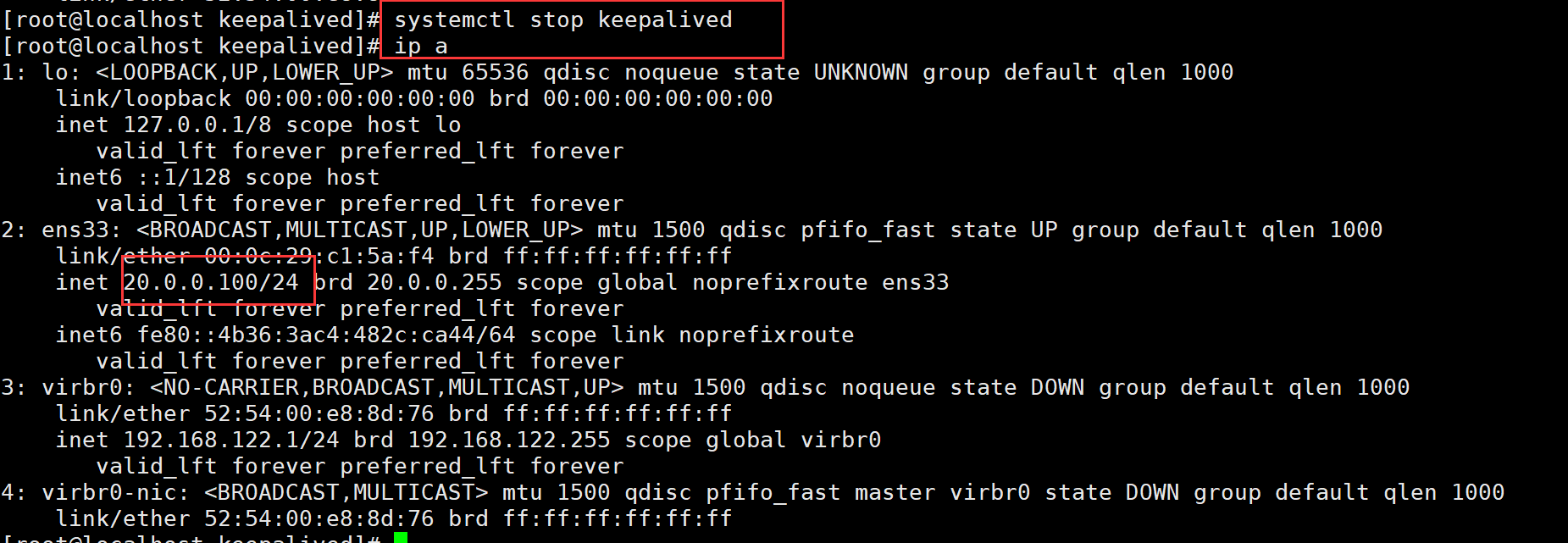

#####20.0.0.100 lb01#####

systemctl stop nginx

ip a

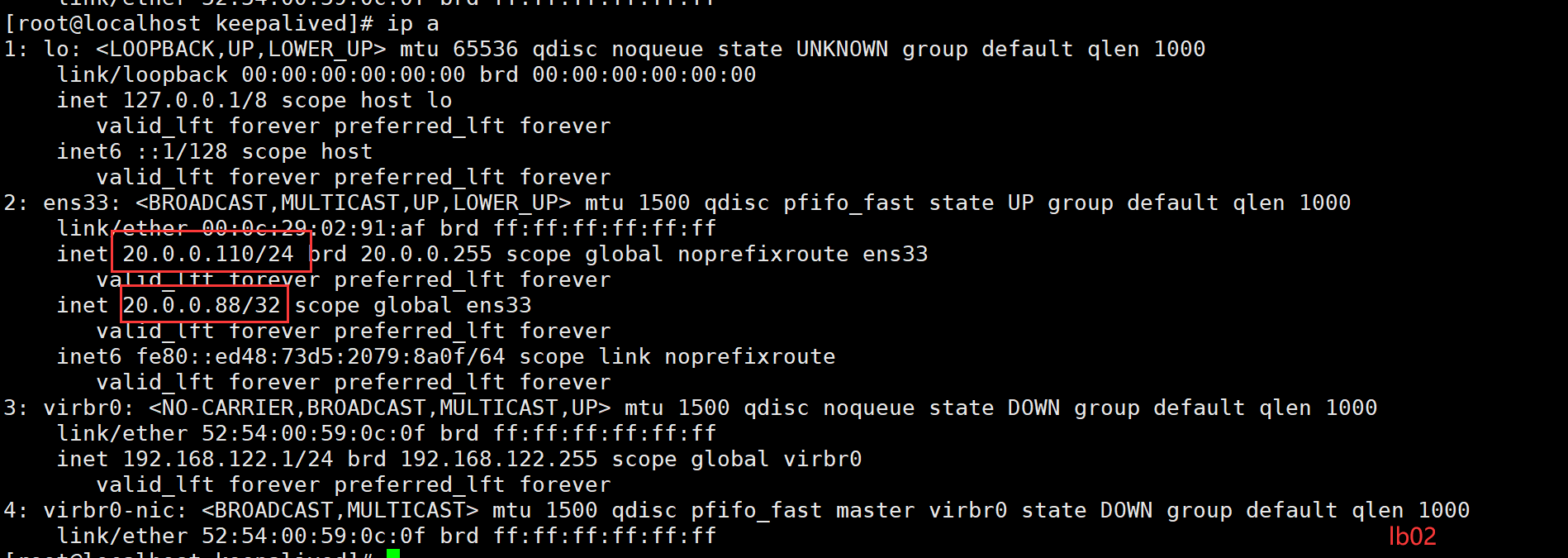

#####20.0.0.110 lb02#####

ip a

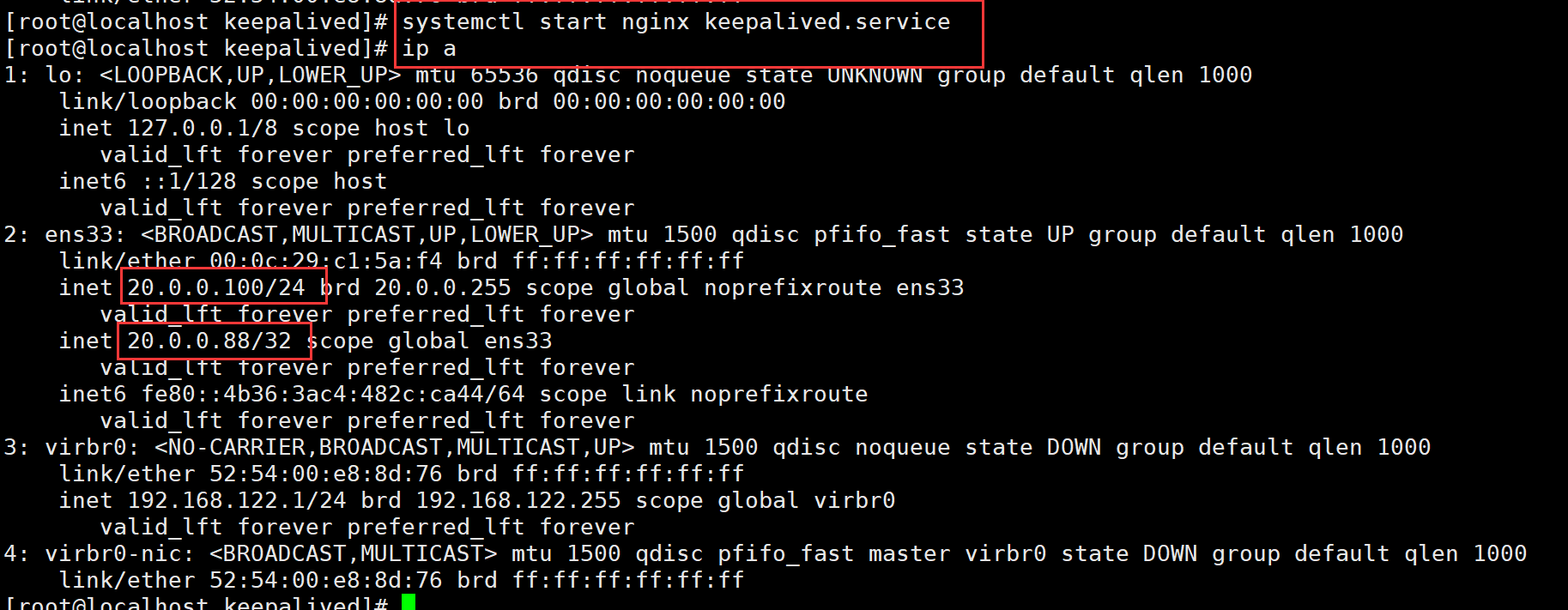

#####20.0.0.100 lb01#####

systemctl start nginx keepalived.service

Verify

5. Deploy the K8S cluster

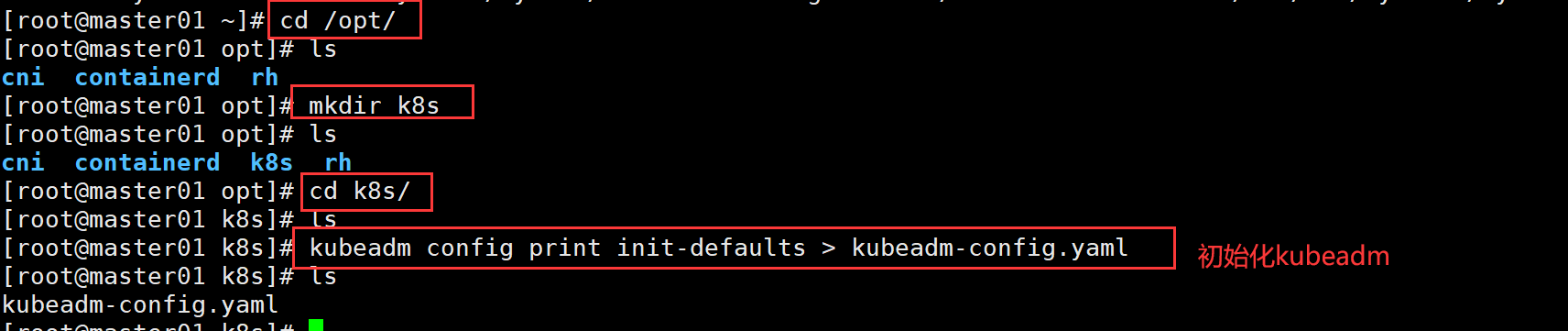

//Run on master01

cd /opt/

mkdir k8s

cd k8s/

ls

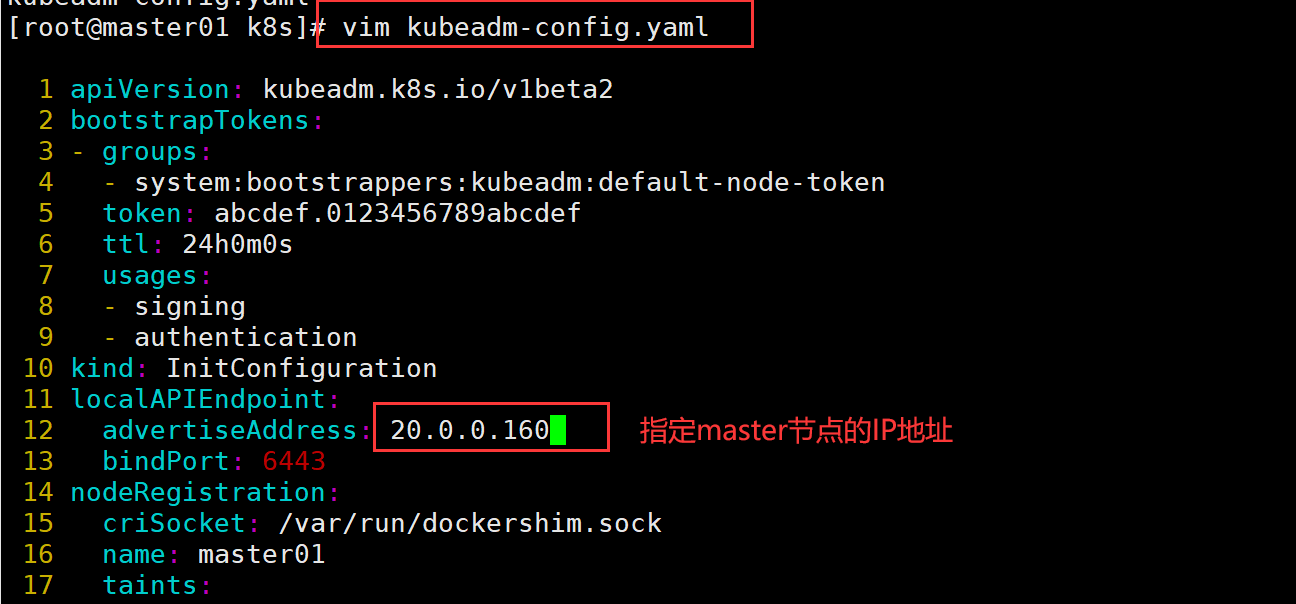

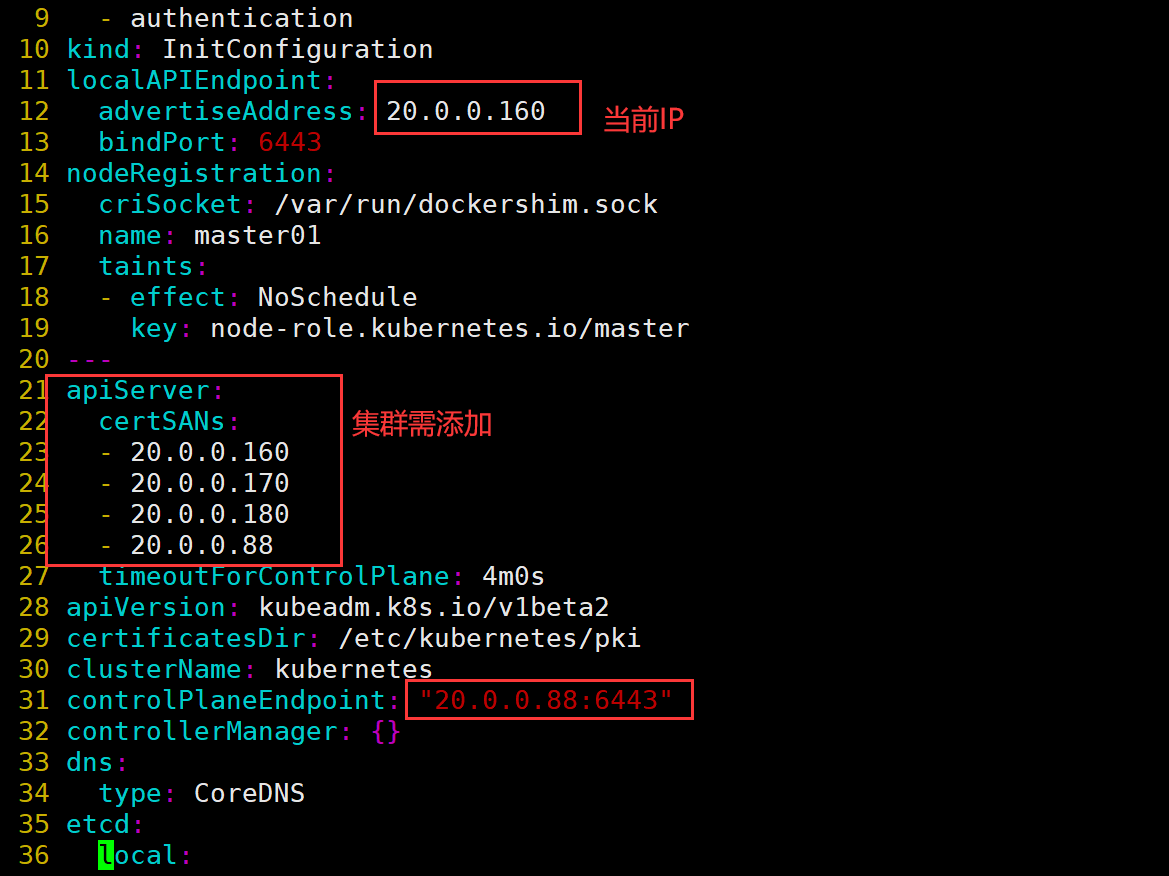

//Generate the kubeadm init configuration

kubeadm config print init-defaults > kubeadm-config.yaml

ls

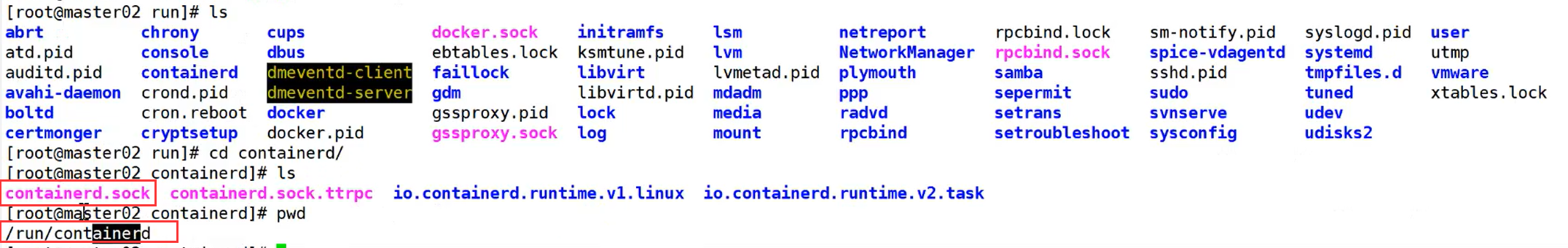

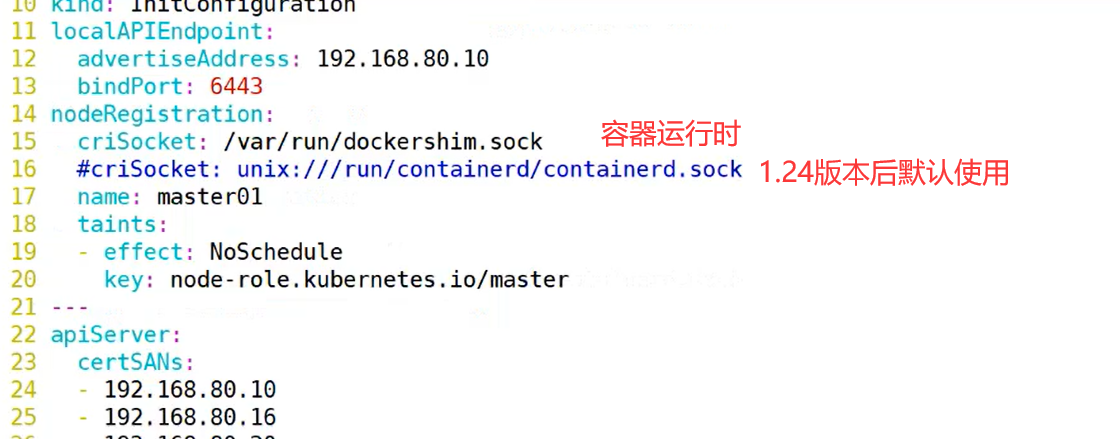

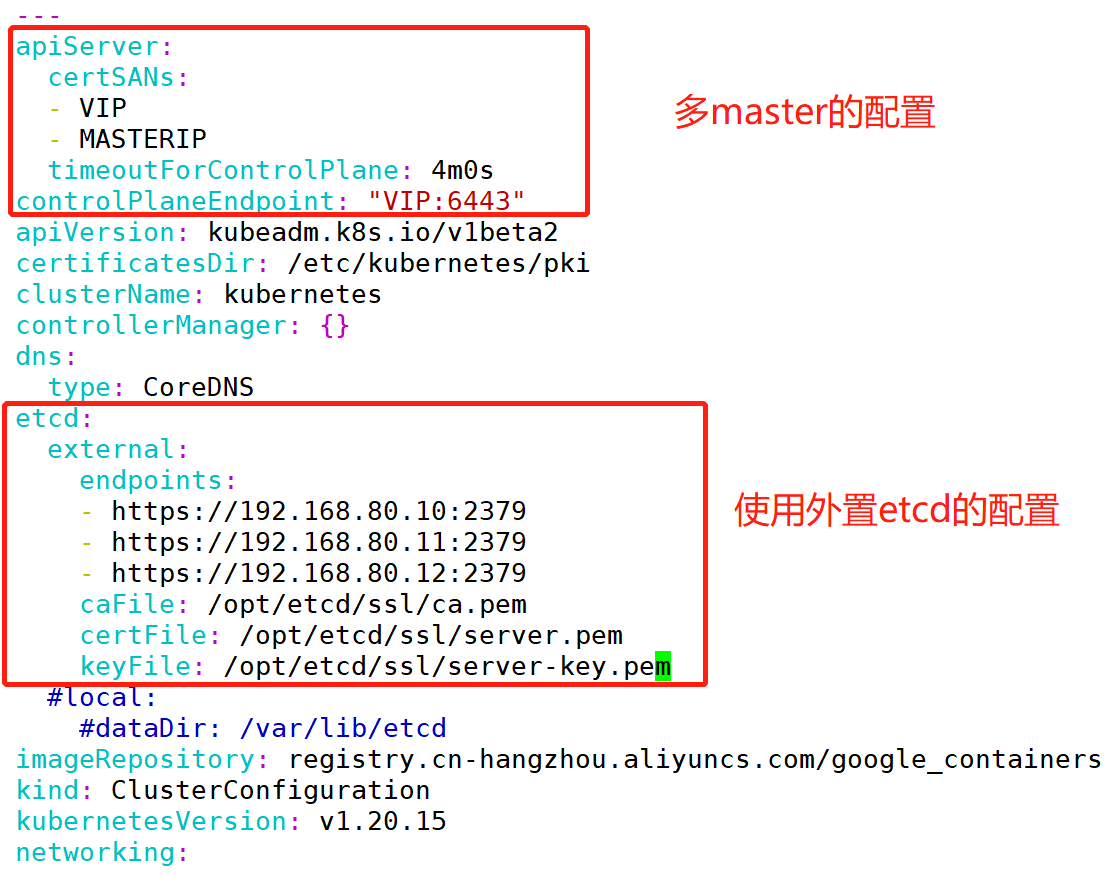

vim kubeadm-config.yaml

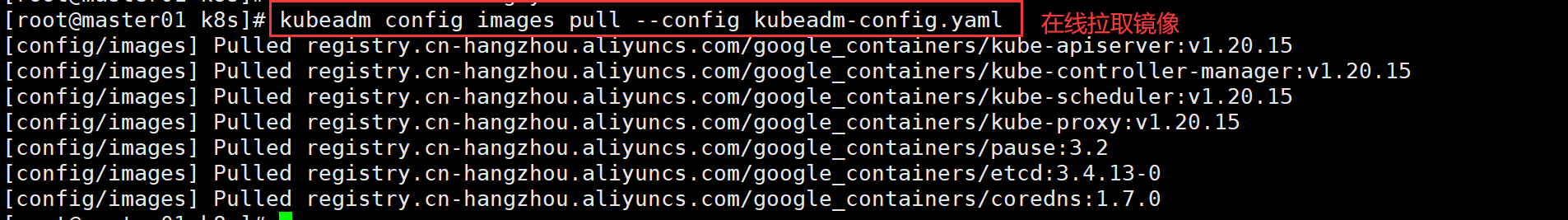
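The edits made in vim are only visible in the screenshot. As a hedged guide, the sketch below prints just the fields that typically need changing in the generated defaults for this topology; it is not a complete file. Assumptions: the image mirror `registry.aliyuncs.com/google_containers`, the pod subnet `10.244.0.0/16` (flannel's usual default), and ipvs mode for kube-proxy. The endpoint 20.0.0.88:6443 is the address used by the join commands later in these notes. `print_kubeadm_config` is a hypothetical helper.

```shell
#!/bin/bash
# print_kubeadm_config: show the kubeadm-config.yaml fields usually edited for an
# HA control plane behind a VIP. Values marked as assumptions in the text above.
print_kubeadm_config() {
    cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 20.0.0.160
  bindPort: 6443
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.15
controlPlaneEndpoint: "20.0.0.88:6443"
imageRepository: registry.aliyuncs.com/google_containers
networking:
  dnsDomain: cluster.local
  serviceSubnet: 10.96.0.0/12
  podSubnet: 10.244.0.0/16
---
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
mode: ipvs
EOF
}

# Usage: print_kubeadm_config, then merge the values into
# /opt/k8s/kubeadm-config.yaml by hand.
```

YAML is indentation-sensitive: nested keys use two spaces, and each colon is followed by a space.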

//Pull the images online

cd /opt/k8s/

kubeadm config images list --kubernetes-version 1.20.15 #list the required images

kubeadm config images pull --config kubeadm-config.yaml

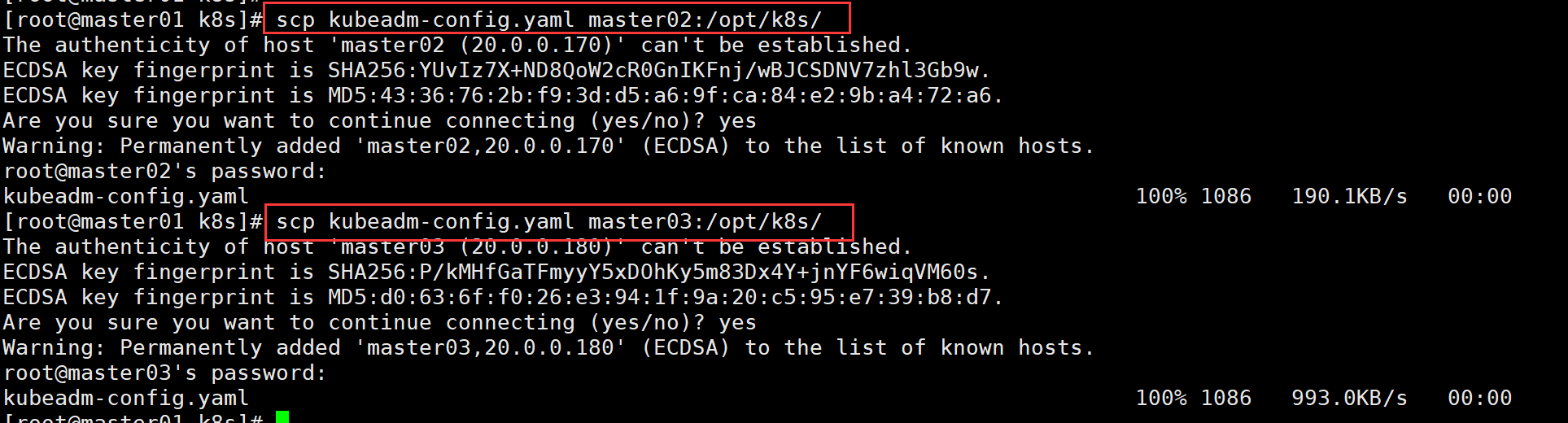

//Run on master01

scp kubeadm-config.yaml master02:/opt/k8s/

scp kubeadm-config.yaml master03:/opt/k8s/

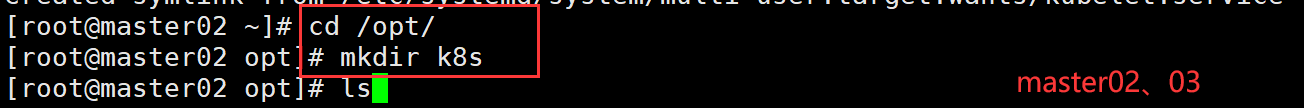

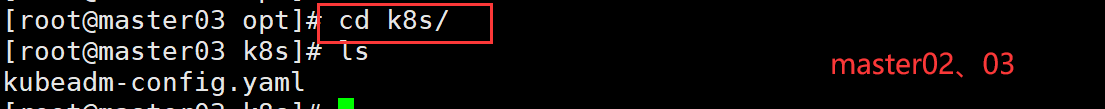

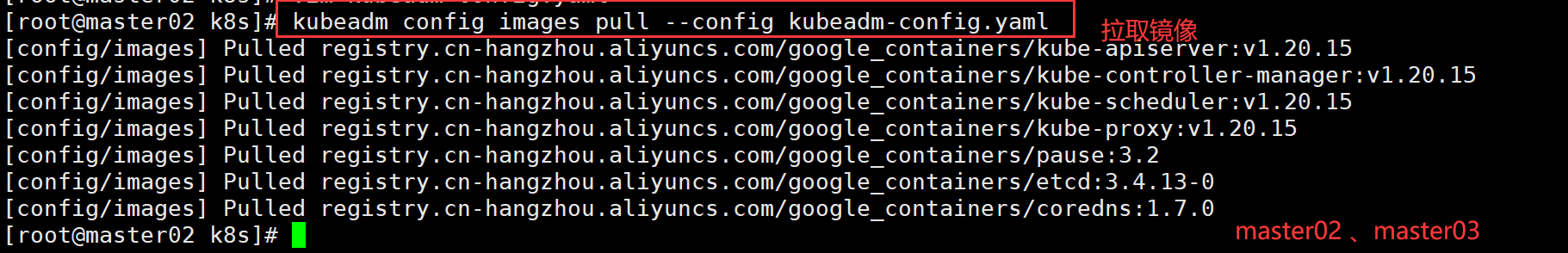

//Run on master02 and master03 (create /opt/k8s here before running the scp above on master01)

cd /opt/

mkdir k8s

ls

kubeadm config images pull --config /opt/k8s/kubeadm-config.yaml

Note: when editing kubeadm-config.yaml, indent with two spaces and put a space after each colon

- Pull the images online


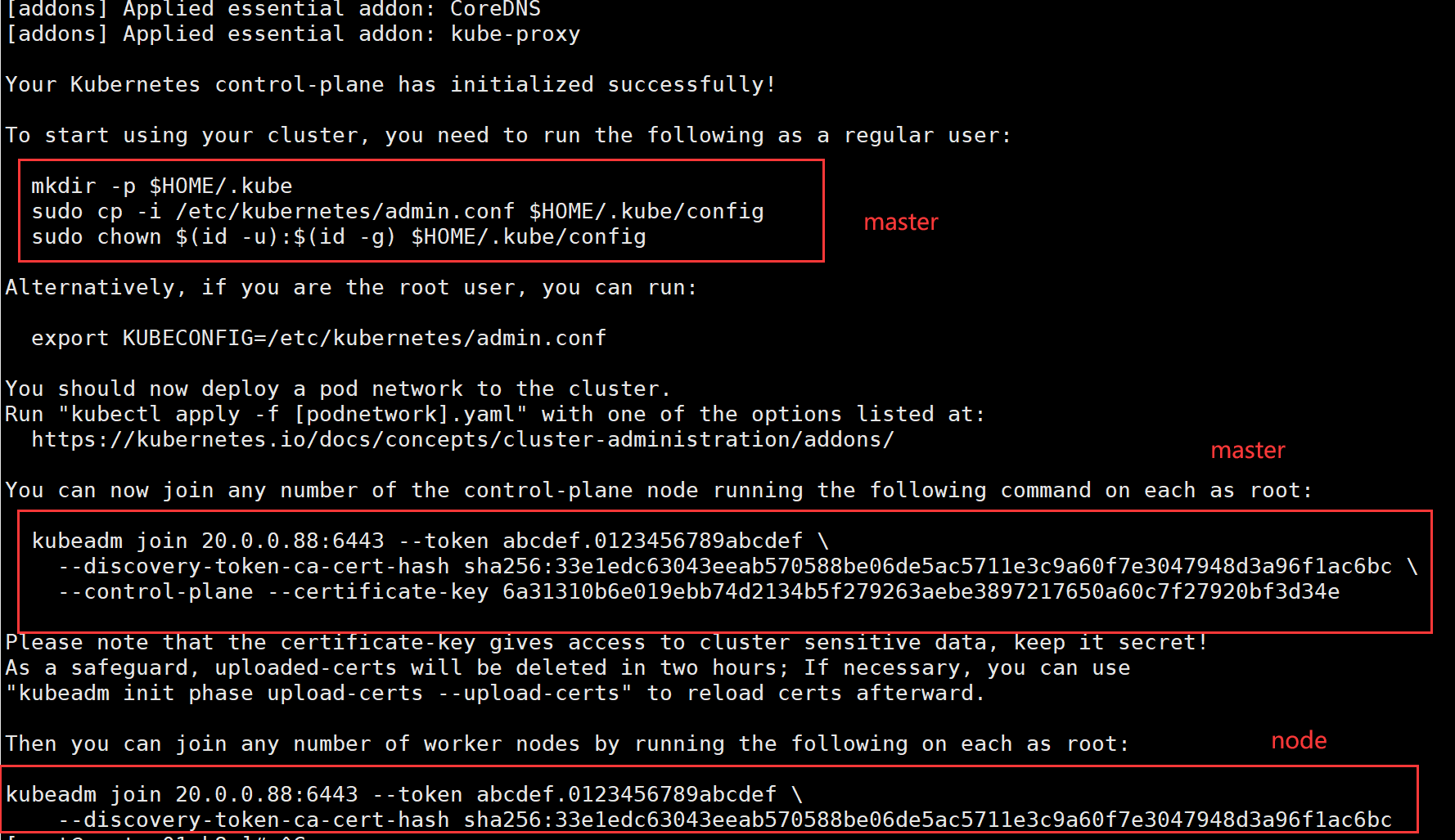

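//Initialize master01

#kubeadm reset ##reset a previous attempt

kubeadm init --config=kubeadm-config.yaml --upload-certs | tee kubeadm-init.log

#--upload-certs lets the certificates be distributed automatically when other control-plane nodes join later

#tee kubeadm-init.log saves the output to a log file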

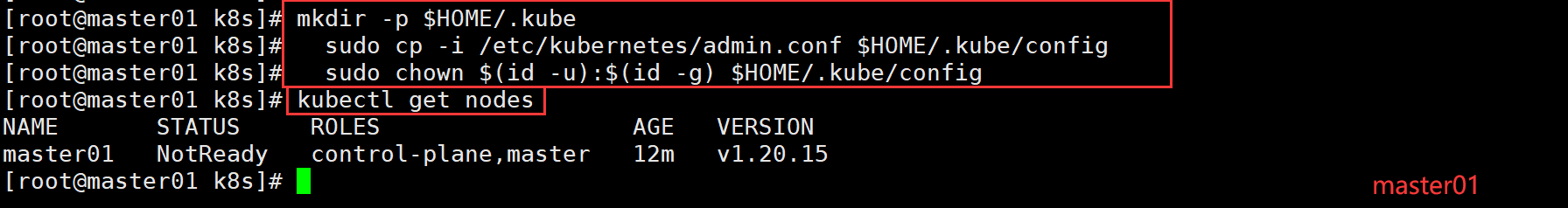

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl get nodes #check node status on the master

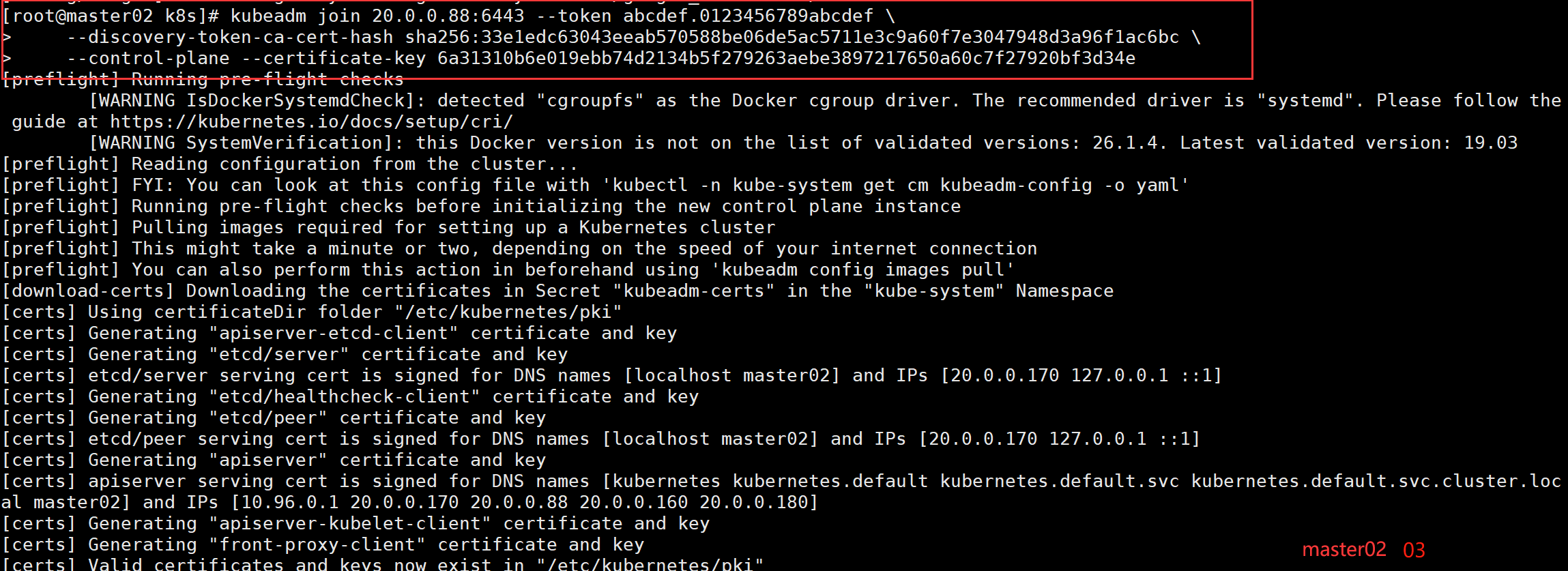

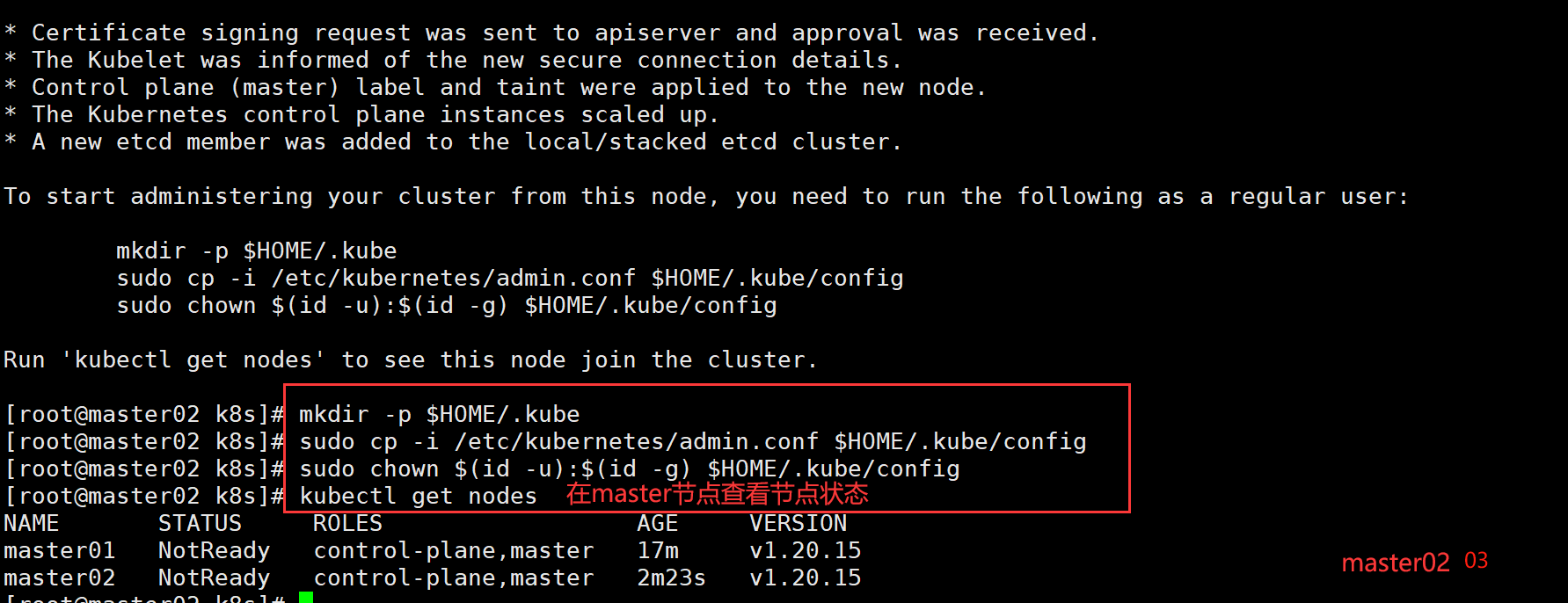

//Run on master02 and master03

kubeadm join 20.0.0.88:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:33e1edc63043eeab570588be06de5ac5711e3c9a60f7e3047948d3a96f1ac6bc \

--control-plane --certificate-key 6a31310b6e019ebb74d2134b5f279263aebe3897217650a60c7f27920bf3d34e

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

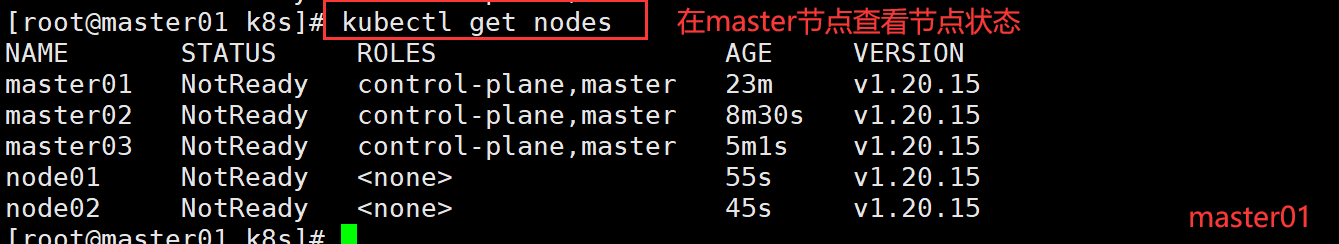

kubectl get nodes

//Run kubeadm join on the node machines to join the cluster

kubeadm join 20.0.0.88:6443 --token abcdef.0123456789abcdef \

--discovery-token-ca-cert-hash sha256:33e1edc63043eeab570588be06de5ac5711e3c9a60f7e3047948d3a96f1ac6bc
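The value after `sha256:` is the SHA-256 digest of the cluster CA's public key, so it can be recomputed on a master if the init log is lost. The sketch below follows the openssl pipeline from the kubeadm documentation; the wrapper name `ca_cert_hash` is hypothetical, and it assumes an RSA CA key, which is kubeadm's default.

```shell
#!/bin/bash
# ca_cert_hash [CERT]: print the hex SHA-256 of the certificate's public key,
# i.e. the value to put after "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
    openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" \
        | openssl rsa -pubin -outform der 2>/dev/null \
        | openssl dgst -sha256 -hex \
        | sed 's/^.* //'
}

# Usage (on a master): ca_cert_hash
# Bootstrap tokens expire (24h in the generated defaults); a fresh join command
# can be printed with: kubeadm token create --print-join-command
```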

6. Deploy the flannel network plugin on all nodes

20.0.0.160 master01

20.0.0.170 master02

20.0.0.180 master03

20.0.0.130 node01

20.0.0.140 node02

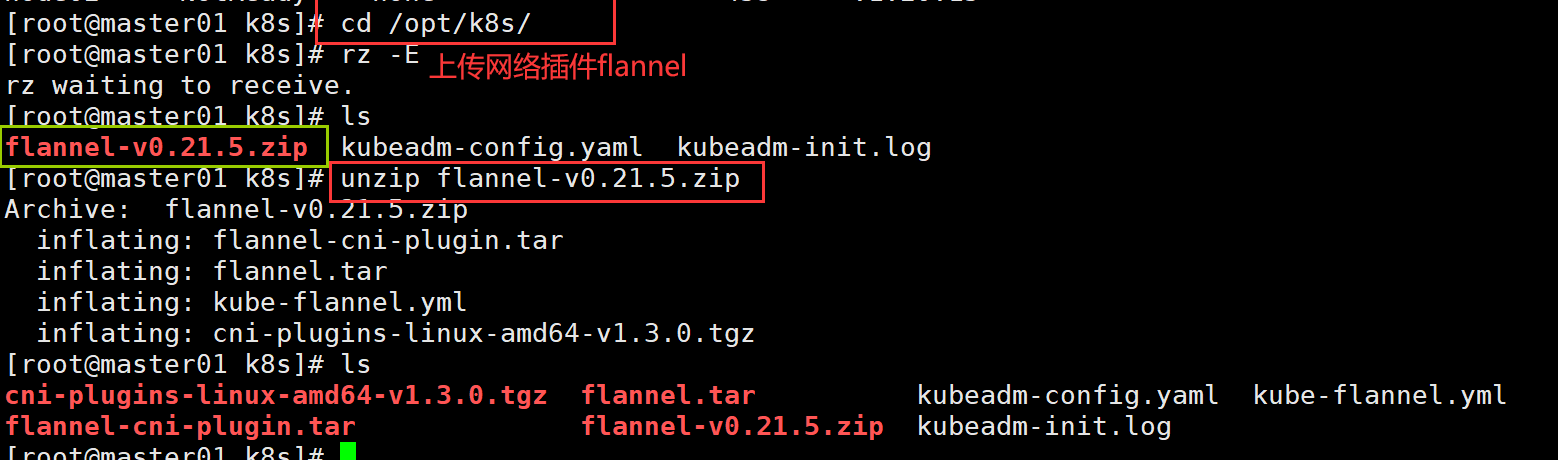

//Run on master01

cd /opt/k8s/

rz -E #upload flannel-v0.21.5.zip

unzip flannel-v0.21.5.zip

ls

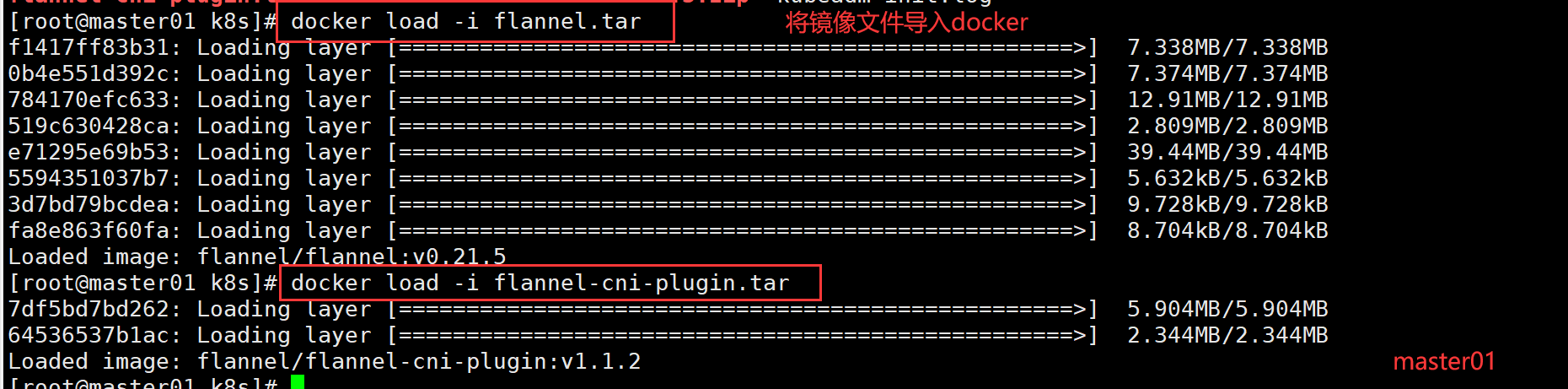

docker load -i flannel.tar

docker load -i flannel-cni-plugin.tar

//Run on node01 and node02

cd /opt/

mkdir k8s

cd k8s/

//Run on master01

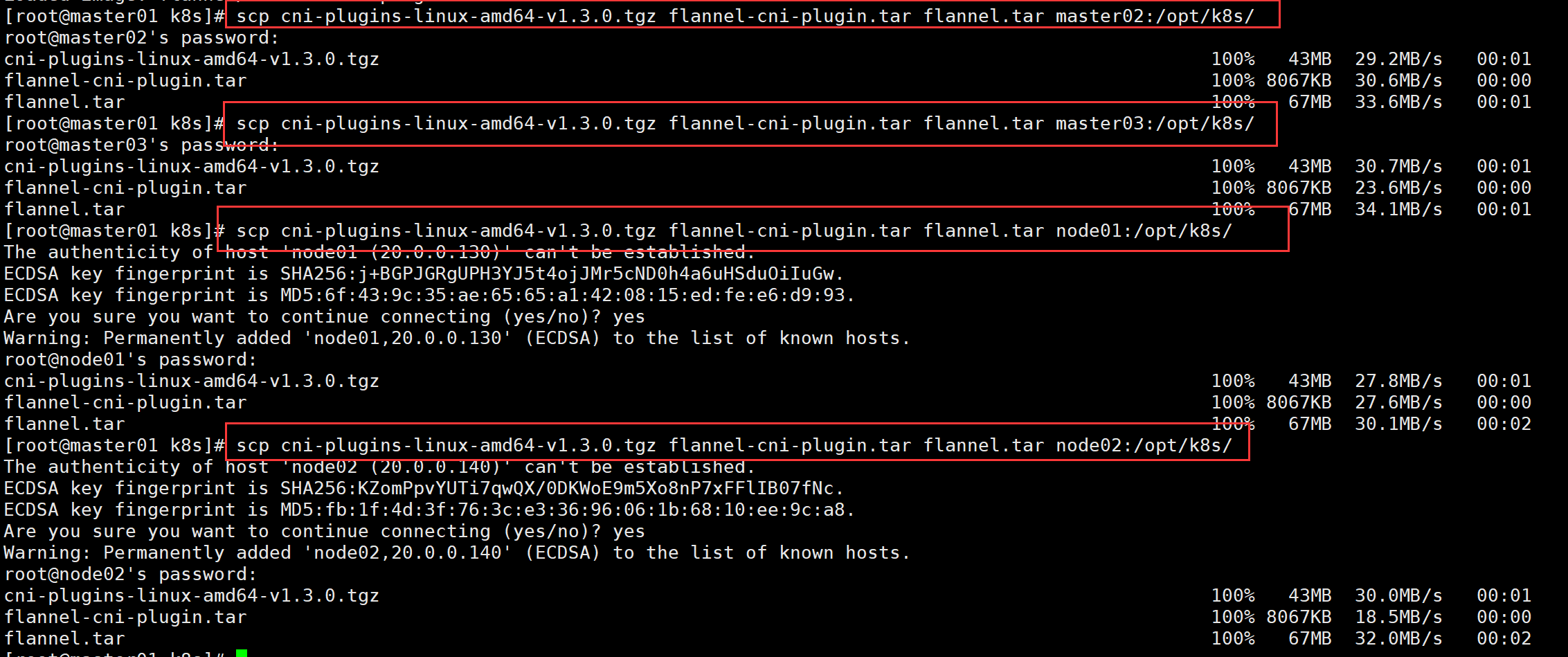

scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar master02:/opt/k8s/

scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar master03:/opt/k8s/

scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar node01:/opt/k8s/

scp cni-plugins-linux-amd64-v1.3.0.tgz flannel-cni-plugin.tar flannel.tar node02:/opt/k8s/

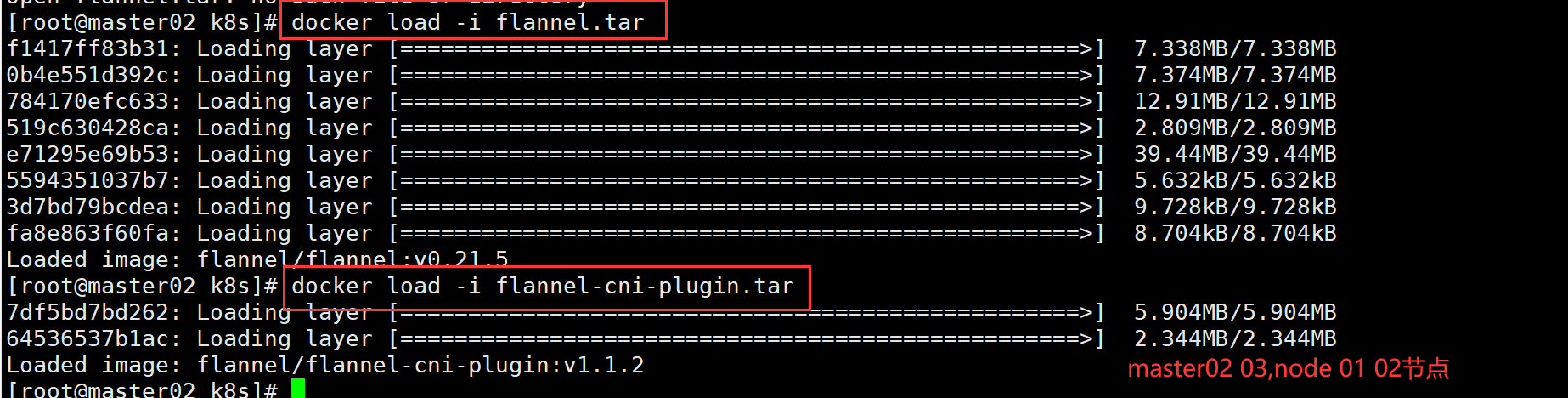

//Run on master02, master03, node01 and node02, in /opt/k8s

docker load -i flannel.tar

docker load -i flannel-cni-plugin.tar

//Run on all nodes

20.0.0.160 master01

20.0.0.170 master02

20.0.0.180 master03

20.0.0.130 node01

20.0.0.140 node02

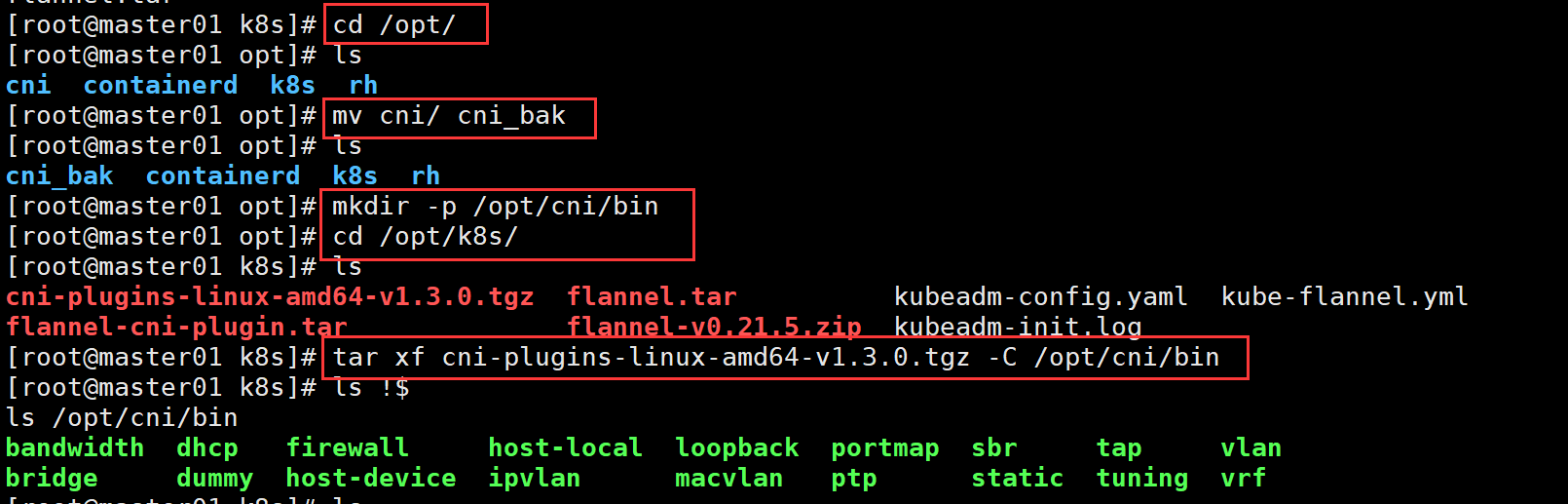

cd /opt/

ls

mv cni/ cni_bak

ls

mkdir -p /opt/cni/bin

cd /opt/k8s/

tar xf cni-plugins-linux-amd64-v1.3.0.tgz -C /opt/cni/bin

ls !$

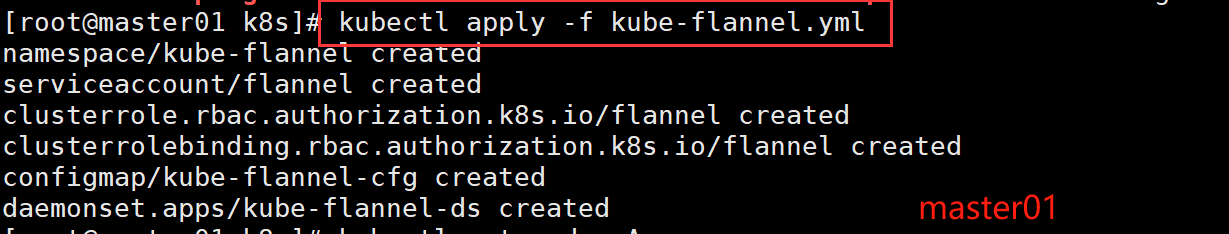

//Create the flannel resources on the master

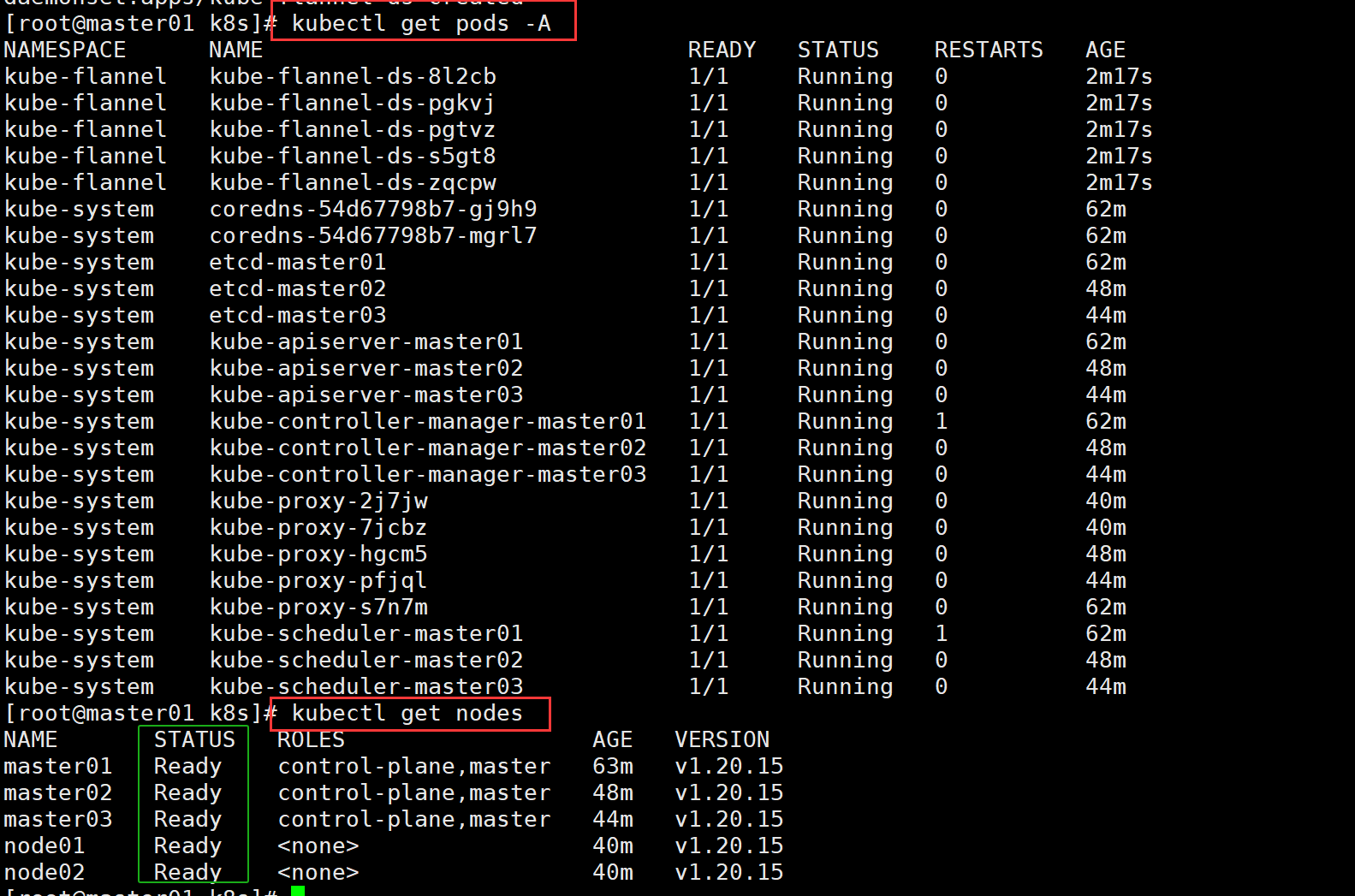

kubectl apply -f kube-flannel.yml

kubectl get pods -A

kubectl get nodes #check node status on the master

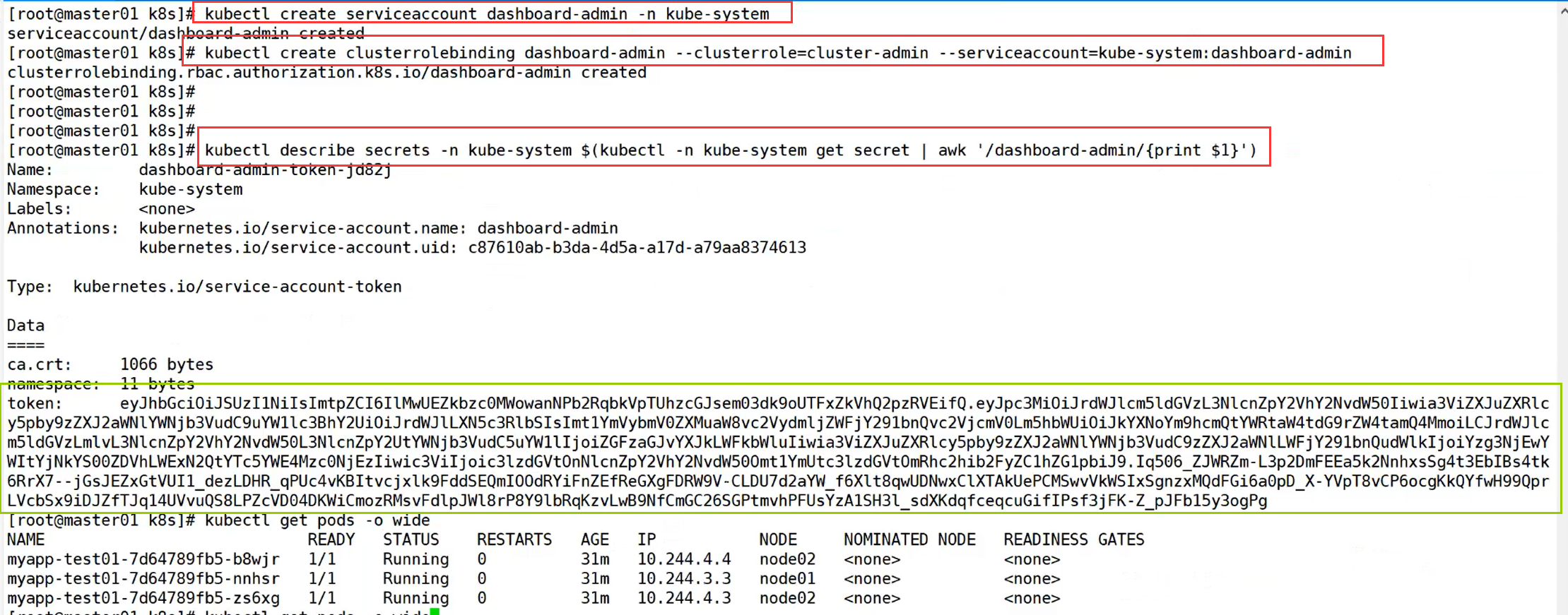

#Create a service account and bind it to the default cluster-admin cluster role

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

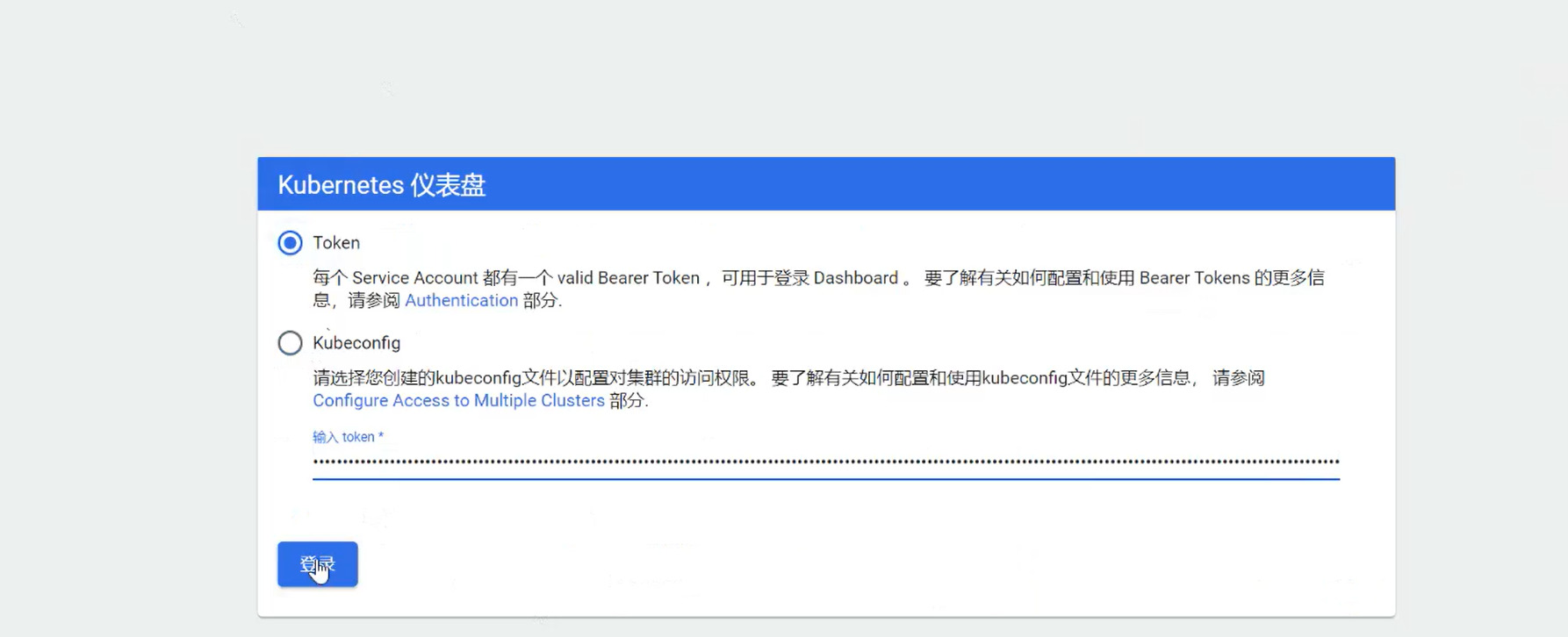

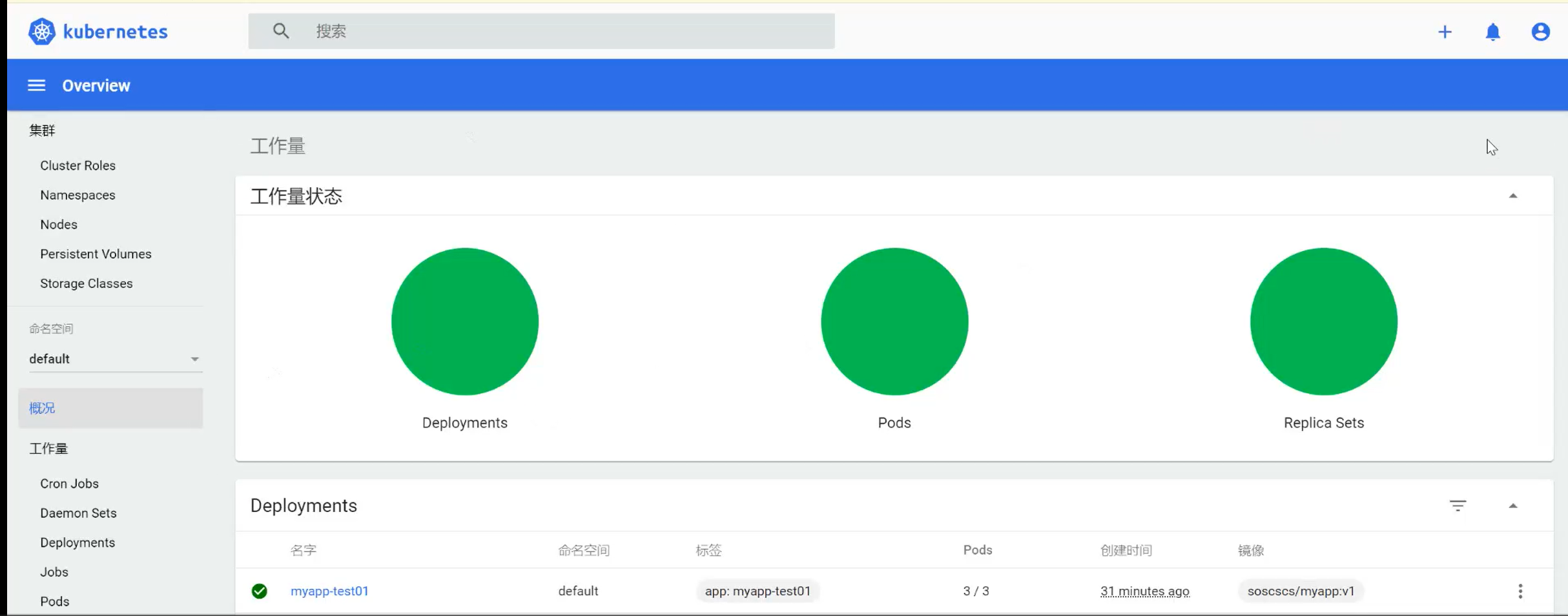

//Test access

https://20.0.0.130:30001
