Android Source Code Analysis: MediaPlayer


We can use the following utility function to get the duration of a video or audio file:

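import android.content.Context
import android.media.MediaPlayer
import android.net.Uri
import android.util.Log

private const val TAG = "MediaUtils" // hypothetical tag name

// Returns the media duration in milliseconds, or 0 if the player could not be created.
internal fun getDuration(context: Context, uri: Uri): Int {
    // MediaPlayer.create() returns null on failure instead of throwing
    val mediaPlayer: MediaPlayer = MediaPlayer.create(context, uri) ?: return 0
    return try {
        val duration: Int = mediaPlayer.duration
        Log.i(TAG, "MusicScreen -> duration: $duration")
        duration
    } finally {
        // Always free the native player, even if something above throws
        mediaPlayer.release()
    }
}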

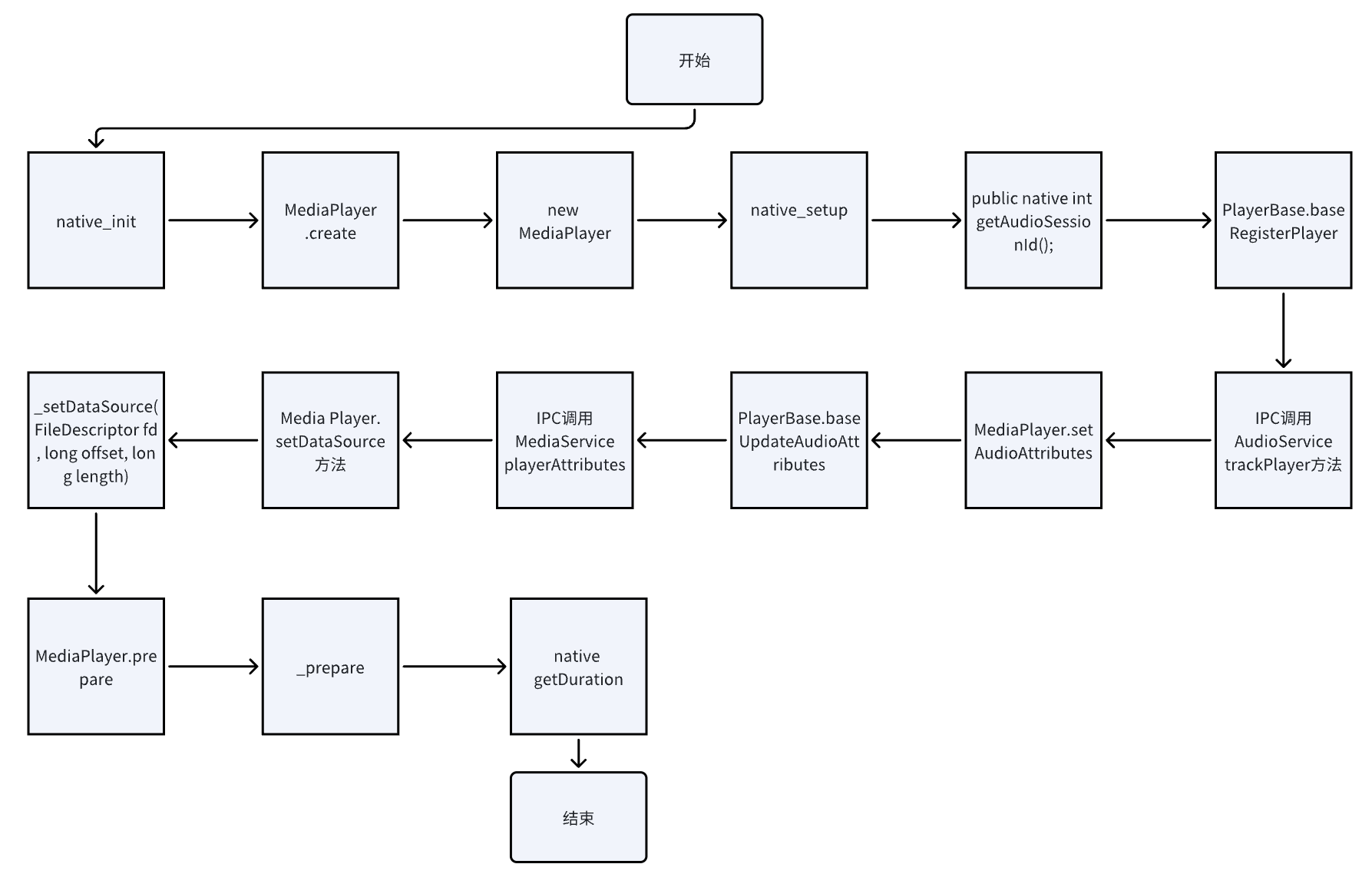

调用流程图如下:

sp and wp in Android

system/core/libutils/binder/include/utils/StrongPointer.h

// ---------------------------------------------------------------------------

namespace android {

template<typename T> class wp;

// ---------------------------------------------------------------------------

template<typename T>

class sp {

public:

inline constexpr sp() : m_ptr(nullptr) { }

// The old way of using sp<> was like this. This is bad because it relies

// on implicit conversion to sp<>, which we would like to remove (if an

// object is being managed some other way, this is double-ownership). We

// want to move away from this:

//

// sp<Foo> foo = new Foo(...); // DO NOT DO THIS

//

// Instead, prefer to do this:

//

// sp<Foo> foo = sp<Foo>::make(...); // DO THIS

//

// Sometimes, in order to use this, when a constructor is marked as private,

// you may need to add this to your class:

//

// friend class sp<Foo>;

template <typename... Args>

static inline sp<T> make(Args&&... args);

// ...

// TODO: Ideally we should find a way to increment the reference count before running the

// constructor, so that generating an sp<> to this in the constructor is no longer dangerous.

template <typename T>

template <typename... Args>

sp<T> sp<T>::make(Args&&... args) {

T* t = new T(std::forward<Args>(args)...);

sp<T> result;

result.m_ptr = t;

t->incStrong(t);

return result;

}
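To see why make() takes the first strong reference only after the constructor has finished, here is a minimal, self-contained sketch of an intrusive strong pointer. MiniRefBase, mini_sp and Player are hypothetical names. The real RefBase also tracks weak references and performs many more checks.

```cpp
#include <atomic>
#include <cassert>
#include <utility>

// Hypothetical, simplified stand-in for RefBase: only a strong count.
class MiniRefBase {
public:
    void incStrong() const { mStrong.fetch_add(1, std::memory_order_relaxed); }
    void decStrong() const {
        // The last strong reference deletes the object.
        if (mStrong.fetch_sub(1, std::memory_order_acq_rel) == 1) delete this;
    }
    int strongCount() const { return mStrong.load(); }
protected:
    virtual ~MiniRefBase() = default;
private:
    mutable std::atomic<int> mStrong{0};
};

// Hypothetical, simplified stand-in for sp<T>.
template <typename T>
class mini_sp {
public:
    mini_sp() = default;
    mini_sp(const mini_sp& o) : m_ptr(o.m_ptr) { if (m_ptr) m_ptr->incStrong(); }
    mini_sp& operator=(const mini_sp& o) {
        if (o.m_ptr) o.m_ptr->incStrong();  // increment first: safe for self-assignment
        if (m_ptr) m_ptr->decStrong();
        m_ptr = o.m_ptr;
        return *this;
    }
    ~mini_sp() { if (m_ptr) m_ptr->decStrong(); }

    // Like sp<T>::make(): construct first, then take the first strong reference.
    template <typename... Args>
    static mini_sp make(Args&&... args) {
        mini_sp result;
        result.m_ptr = new T(std::forward<Args>(args)...);
        result.m_ptr->incStrong();
        return result;
    }

    T* get() const { return m_ptr; }
    T* operator->() const { return m_ptr; }
private:
    T* m_ptr = nullptr;
};

// Example type managed by mini_sp.
struct Player : MiniRefBase {
    explicit Player(int id) : id(id) {}
    int id;
};
```

While the constructor runs, the count is still 0. If the constructor created a temporary strong pointer to `this`, that pointer would bring the count back to 0 on destruction and delete the half-built object. This is the danger the TODO in StrongPointer.h describes.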

system/core/libutils/binder/include/utils/RefBase.h

template <typename T>

class wp

{

public:

typedef typename RefBase::weakref_type weakref_type;

inline constexpr wp() : m_ptr(nullptr), m_refs(nullptr) { }

// ...

};

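Objects managed by these smart pointers must inherit from RefBase. sp<T> only needs incStrong()/decStrong(), so LightRefBase also works with it, but wp<T> requires RefBase and its weakref_type.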
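The split between strong and weak references can be modeled in a few lines. The sketch below is single-threaded and uses hypothetical names (Refs, RefCounted, promote). It is not the real RefBase API. It shows the key rule: the object dies with the last strong reference, the control block (the role of weakref_type) dies with the last weak reference, and a weak reference can be promoted only while a strong one still exists.

```cpp
#include <cassert>

struct RefCounted;

// Control block, playing the role of RefBase::weakref_type.
struct Refs {
    int strong = 0;
    int weak = 0;            // strong refs also count as weak refs, as in RefBase
    RefCounted* obj = nullptr;
};

struct RefCounted {
    Refs* refs = new Refs;
    RefCounted() { refs->obj = this; }
    RefCounted(const RefCounted&) = delete;
    RefCounted& operator=(const RefCounted&) = delete;
    virtual ~RefCounted() = default;
};

inline void incWeak(Refs* r) { ++r->weak; }
inline void decWeak(Refs* r) {
    if (--r->weak == 0) delete r;          // last weak ref frees the control block
}
inline void incStrong(RefCounted* o) { ++o->refs->strong; incWeak(o->refs); }
inline void decStrong(RefCounted* o) {
    Refs* r = o->refs;                     // the block may outlive the object
    if (--r->strong == 0) {
        r->obj = nullptr;
        delete o;                          // last strong ref destroys the object
    }
    decWeak(r);
}
// Like wp<T>::promote(): succeeds only while the object is still alive.
inline RefCounted* promote(Refs* r) {
    if (r->strong == 0) return nullptr;
    incStrong(r->obj);
    return r->obj;
}
```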

Reading the source

MediaPlayer.java

// Here holder is null, audioAttributes is null and audioSessionId is 0

public static MediaPlayer create(Context context, Uri uri, SurfaceHolder holder,AudioAttributes audioAttributes, int audioSessionId) {

try {

MediaPlayer mp = new MediaPlayer(context, audioSessionId); // 1

final AudioAttributes aa = audioAttributes != null ? audioAttributes :

new AudioAttributes.Builder().build();

mp.setAudioAttributes(aa); // 2

mp.setDataSource(context, uri); // 3

if (holder != null) {

mp.setDisplay(holder);

}

mp.prepare();

return mp;

} catch (IOException ex) {

Log.d(TAG, "create failed:", ex);

// fall through

} catch (IllegalArgumentException ex) {

Log.d(TAG, "create failed:", ex);

// fall through

} catch (SecurityException ex) {

Log.d(TAG, "create failed:", ex);

// fall through

}

return null;

}

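As we can see, MediaPlayer.create() returns a nullable MediaPlayer. It catches IOException, IllegalArgumentException and SecurityException, logs them, and returns null instead of throwing.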

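MediaPlayer extends PlayerBase. The constructor (// 1) registers the player through baseRegisterPlayer(), and setAudioAttributes() (// 2) forwards the attributes through baseUpdateAudioAttributes(). Both methods are defined in PlayerBase: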

protected void baseRegisterPlayer(int sessionId) {

try {

mPlayerIId = getService().trackPlayer(

new PlayerIdCard(mImplType, mAttributes, new IPlayerWrapper(this),

sessionId));

} catch (RemoteException e) {

Log.e(TAG, "Error talking to audio service, player will not be tracked", e);

}

}

void baseUpdateAudioAttributes(@NonNull AudioAttributes attr) {

if (attr == null) {

throw new IllegalArgumentException("Illegal null AudioAttributes");

}

try {

getService().playerAttributes(mPlayerIId, attr);

} catch (RemoteException e) {

Log.e(TAG, "Error talking to audio service, audio attributes will not be updated", e);

}

synchronized (mLock) {

mAttributes = attr;

}

}

native_init

frameworks/base/media/jni/android_media_MediaPlayer.cpp

struct fields_t {

jfieldID context;

jfieldID surface_texture;

jmethodID post_event;

jmethodID proxyConfigGetHost;

jmethodID proxyConfigGetPort;

jmethodID proxyConfigGetExclusionList;

};

static fields_t fields;

static void

android_media_MediaPlayer_native_init(JNIEnv *env)

{

jclass clazz;

clazz = env->FindClass("android/media/MediaPlayer");

if (clazz == NULL) {

return;

}

fields.context = env->GetFieldID(clazz, "mNativeContext", "J");

if (fields.context == NULL) {

return;

}

fields.post_event = env->GetStaticMethodID(clazz, "postEventFromNative",

"(Ljava/lang/Object;IIILjava/lang/Object;)V");

if (fields.post_event == NULL) {

return;

}

fields.surface_texture = env->GetFieldID(clazz, "mNativeSurfaceTexture", "J");

if (fields.surface_texture == NULL) {

return;

}

env->DeleteLocalRef(clazz);

clazz = env->FindClass("android/net/ProxyInfo");

if (clazz == NULL) {

return;

}

fields.proxyConfigGetHost =

env->GetMethodID(clazz, "getHost", "()Ljava/lang/String;");

fields.proxyConfigGetPort =

env->GetMethodID(clazz, "getPort", "()I");

fields.proxyConfigGetExclusionList =

env->GetMethodID(clazz, "getExclusionListAsString", "()Ljava/lang/String;");

env->DeleteLocalRef(clazz);

// Modular DRM

FIND_CLASS(clazz, "android/media/MediaDrm$MediaDrmStateException");

if (clazz) {

GET_METHOD_ID(gStateExceptionFields.init, clazz, "<init>", "(ILjava/lang/String;)V");

gStateExceptionFields.classId = static_cast<jclass>(env->NewGlobalRef(clazz));

env->DeleteLocalRef(clazz);

} else {

ALOGE("JNI android_media_MediaPlayer_native_init couldn't "

"get clazz android/media/MediaDrm$MediaDrmStateException");

}

gPlaybackParamsFields.init(env);

gSyncParamsFields.init(env);

gVolumeShaperFields.init(env);

}

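native_init() looks up and caches the JNI field and method IDs that later calls need. One of them is the field ID of mNativeContext, the Java long field that holds the pointer to the native-layer player. native_init() runs once, from MediaPlayer's static initializer.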

native_setup

frameworks/base/media/jni/android_media_MediaPlayer.cpp

static void

android_media_MediaPlayer_native_setup(JNIEnv *env, jobject thiz, jobject weak_this,

jobject jAttributionSource,

jint jAudioSessionId)

{

ALOGV("native_setup");

Parcel* parcel = parcelForJavaObject(env, jAttributionSource);

android::content::AttributionSourceState attributionSource;

attributionSource.readFromParcel(parcel);

sp<MediaPlayer> mp = sp<MediaPlayer>::make(

attributionSource, static_cast<audio_session_t>(jAudioSessionId));

if (mp == NULL) {

jniThrowException(env, "java/lang/RuntimeException", "Out of memory");

return;

}

// create new listener and give it to MediaPlayer

sp<JNIMediaPlayerListener> listener = new JNIMediaPlayerListener(env, thiz, weak_this);

mp->setListener(listener);

// Stow our new C++ MediaPlayer in an opaque field in the Java object.

setMediaPlayer(env, thiz, mp);

}

static sp<MediaPlayer> setMediaPlayer(JNIEnv* env, jobject thiz, const sp<MediaPlayer>& player)

{

Mutex::Autolock l(sLock);

sp<MediaPlayer> old = (MediaPlayer*)env->GetLongField(thiz, fields.context);

if (player.get()) {

player->incStrong((void*)setMediaPlayer);

}

if (old != 0) {

old->decStrong((void*)setMediaPlayer);

}

env->SetLongField(thiz, fields.context, (jlong)player.get());

return old;

}

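setMediaPlayer() stores the native player's address in the Java-layer mNativeContext field and adjusts the smart-pointer counts. It takes a strong reference on the new player and drops the one held on the previous player, so the native object stays alive as long as the Java object points to it.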
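The "stash a pointer in a long field" pattern can be sketched without JNI. In the sketch below, FakeJavaPlayer stands in for the Java object and its mNativeContext field, and NativePlayer uses a manual strong count. All names are hypothetical. Unlike the real setMediaPlayer(), the sketch does not return the old pointer: without an sp<> holding it, the old object may already be gone.

```cpp
#include <cassert>
#include <cstdint>
#include <mutex>

// Hypothetical native object with a manual strong count (stands in for RefBase).
struct NativePlayer {
    int strong = 0;
    void incStrong() { ++strong; }
    void decStrong() { if (--strong == 0) delete this; }
};

// Stands in for the Java MediaPlayer object and its long mNativeContext field.
struct FakeJavaPlayer {
    int64_t mNativeContext = 0;
};

std::mutex gLock;  // plays the role of sLock

// Same shape as setMediaPlayer(): the field itself owns one strong reference.
void setNativePlayer(FakeJavaPlayer& thiz, NativePlayer* player) {
    std::lock_guard<std::mutex> l(gLock);
    auto* old = reinterpret_cast<NativePlayer*>(thiz.mNativeContext);
    if (player) player->incStrong();  // the Java field now keeps player alive
    if (old) old->decStrong();        // release the field's reference on the old one
    thiz.mNativeContext = reinterpret_cast<int64_t>(player);
}

NativePlayer* getNativePlayer(const FakeJavaPlayer& thiz) {
    std::lock_guard<std::mutex> l(gLock);
    return reinterpret_cast<NativePlayer*>(thiz.mNativeContext);
}
```

Passing nullptr mirrors what happens on release: the field drops its reference, and the native object is freed once no other strong reference remains.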

sp<MediaPlayer>::make() simply calls the MediaPlayer constructor to create a MediaPlayer object, then takes the first strong reference on it.

frameworks/av/media/libmedia/include/media/mediaplayer.h

frameworks/av/media/libmedia/mediaplayer.cpp

MediaPlayer::MediaPlayer(const AttributionSourceState& attributionSource,

const audio_session_t sessionId) : mAttributionSource(attributionSource)

{

ALOGV("constructor");

mListener = NULL;

mCookie = NULL;

mStreamType = AUDIO_STREAM_MUSIC;

mAudioAttributesParcel = NULL;

mCurrentPosition = -1;

mCurrentSeekMode = MediaPlayerSeekMode::SEEK_PREVIOUS_SYNC;

mSeekPosition = -1;

mSeekMode = MediaPlayerSeekMode::SEEK_PREVIOUS_SYNC;

mCurrentState = MEDIA_PLAYER_IDLE;

mPrepareSync = false;

mPrepareStatus = NO_ERROR;

mLoop = false;

mLeftVolume = mRightVolume = 1.0;

mVideoWidth = mVideoHeight = 0;

mLockThreadId = 0;

if (sessionId == AUDIO_SESSION_ALLOCATE) {

mAudioSessionId = static_cast<audio_session_t>(

AudioSystem::newAudioUniqueId(AUDIO_UNIQUE_ID_USE_SESSION));

} else {

mAudioSessionId = sessionId;

}

AudioSystem::acquireAudioSessionId(mAudioSessionId, (pid_t)-1, (uid_t)-1); // always in client.

mSendLevel = 0;

mRetransmitEndpointValid = false;

}

getAudioSessionId

frameworks/base/media/jni/android_media_MediaPlayer.cpp

static jint android_media_MediaPlayer_get_audio_session_id(JNIEnv *env, jobject thiz) {

ALOGV("get_session_id()");

sp<MediaPlayer> mp = getMediaPlayer(env, thiz);

if (mp == NULL ) {

jniThrowException(env, "java/lang/IllegalStateException", NULL);

return 0;

}

return (jint) mp->getAudioSessionId();

}

frameworks/av/media/libmedia/mediaplayer.cpp

audio_session_t MediaPlayer::getAudioSessionId()

{

Mutex::Autolock _l(mLock);

return mAudioSessionId;

}

system/media/audio/include/system/audio.h

typedef enum : int32_t {

AUDIO_SESSION_DEVICE = HAL_AUDIO_SESSION_DEVICE,

AUDIO_SESSION_OUTPUT_STAGE = HAL_AUDIO_SESSION_OUTPUT_STAGE,

AUDIO_SESSION_OUTPUT_MIX = HAL_AUDIO_SESSION_OUTPUT_MIX,

#ifndef AUDIO_NO_SYSTEM_DECLARATIONS

AUDIO_SESSION_ALLOCATE = 0,

AUDIO_SESSION_NONE = 0,

#endif

} audio_session_t;

typedef int audio_unique_id_t;

/* A unique ID with use AUDIO_UNIQUE_ID_USE_EFFECT */

typedef int audio_effect_handle_t;

/* Possible uses for an audio_unique_id_t */

typedef enum {

AUDIO_UNIQUE_ID_USE_UNSPECIFIED = 0,

AUDIO_UNIQUE_ID_USE_SESSION = 1, // audio_session_t

// for allocated sessions, not special AUDIO_SESSION_*

AUDIO_UNIQUE_ID_USE_MODULE = 2, // audio_module_handle_t

AUDIO_UNIQUE_ID_USE_EFFECT = 3, // audio_effect_handle_t

AUDIO_UNIQUE_ID_USE_PATCH = 4, // audio_patch_handle_t

AUDIO_UNIQUE_ID_USE_OUTPUT = 5, // audio_io_handle_t

AUDIO_UNIQUE_ID_USE_INPUT = 6, // audio_io_handle_t

AUDIO_UNIQUE_ID_USE_CLIENT = 7, // client-side players and recorders

// FIXME should move to a separate namespace;

// these IDs are allocated by AudioFlinger on client request,

// but are never used by AudioFlinger

AUDIO_UNIQUE_ID_USE_MAX = 8, // must be a power-of-two

AUDIO_UNIQUE_ID_USE_MASK = AUDIO_UNIQUE_ID_USE_MAX - 1

} audio_unique_id_use_t;

/* Reserved audio_unique_id_t values. FIXME: not a complete list. */

#define AUDIO_UNIQUE_ID_ALLOCATE AUDIO_SESSION_ALLOCATE

mAudioSessionId is assigned when the MediaPlayer object is constructed. The session ID passed to the constructor is 0, i.e. AUDIO_SESSION_ALLOCATE, so a new ID is allocated by calling:

AudioSystem::newAudioUniqueId(AUDIO_UNIQUE_ID_USE_SESSION);

frameworks/av/media/libaudioclient/AudioSystem.cpp

audio_unique_id_t AudioSystem::newAudioUniqueId(audio_unique_id_use_t use) {

// Must not use AF as IDs will re-roll on audioserver restart, b/130369529.

const sp<IAudioFlinger> af = get_audio_flinger();

if (af == nullptr) return AUDIO_UNIQUE_ID_ALLOCATE;

return af->newAudioUniqueId(use);

}

sp<IAudioFlinger> AudioSystem::get_audio_flinger() {

return AudioFlingerServiceTraits::getService();

}

class AudioFlingerServiceTraits {

public:

// ------- required by ServiceSingleton

static constexpr const char* getServiceName() { return "media.audio_flinger"; }

static void onNewService(const sp<media::IAudioFlingerService>& afs) {

onNewServiceWithAdapter(createServiceAdapter(afs));

}

static void onServiceDied(const sp<media::IAudioFlingerService>& service) {

ALOGW("%s: %s service died %p", __func__, getServiceName(), service.get());

{

std::lock_guard l(mMutex);

if (!mValid) {

ALOGW("%s: %s service already invalidated, ignoring", __func__, getServiceName());

return;

}

if (!mService || mService->getDelegate() != service) {

ALOGW("%s: %s unmatched service death pointers, ignoring",

__func__, getServiceName());

return;

}

mValid = false;

if (mClient) {

mClient->clearIoCache();

} else {

ALOGW("%s: null client", __func__);

}

}

AudioSystem::reportError(DEAD_OBJECT);

}

static constexpr mediautils::ServiceOptions options() {

return mediautils::ServiceOptions::kNone;

}

// ------- required by AudioSystem

static sp<IAudioFlinger> getService(

std::chrono::milliseconds waitMs = std::chrono::milliseconds{-1}) {

static bool init = false;

audio_utils::unique_lock ul(mMutex);

if (!init) {

if (!mDisableThreadPoolStart) {

ProcessState::self()->startThreadPool();

}

if (multiuser_get_app_id(getuid()) == AID_AUDIOSERVER) {

mediautils::skipService<media::IAudioFlingerService>(mediautils::SkipMode::kWait);

mWaitMs = std::chrono::milliseconds(INT32_MAX);

} else {

mediautils::initService<media::IAudioFlingerService, AudioFlingerServiceTraits>(); // 1

mWaitMs = std::chrono::milliseconds(

property_get_int32(kServiceWaitProperty, kServiceClientWaitMs));

}

init = true;

}

if (mValid) return mService; // 2

if (waitMs.count() < 0) waitMs = mWaitMs;

ul.unlock();

// mediautils::getService() installs a persistent new service notification.

auto service = mediautils::getService<

media::IAudioFlingerService>(waitMs);

ALOGD("%s: checking for service %s: %p", __func__, getServiceName(), service.get()); // 3

ul.lock();

// return the IAudioFlinger interface which is adapted

// from the media::IAudioFlingerService.

return mService;

}

// ....

}

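Code 1 runs in client processes, meaning any process other than audioserver. It initializes the service handler for media::IAudioFlingerService and passes it AudioFlingerServiceTraits. Code 2 returns the cached IAudioFlinger while it is still valid. Otherwise, for example after onServiceDied() has cleared mValid, getService() calls mediautils::getService() to obtain the proxy again and logs the result at code 3.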
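The caching logic in getService() can be reduced to a small class. The sketch uses hypothetical names (Service, ServiceCache), and the fetch function stands in for mediautils::getService(). It also simplifies one detail: in the real code, the new proxy is stored by the onNewService() callback, not by getService() itself.

```cpp
#include <cassert>
#include <functional>
#include <memory>
#include <mutex>
#include <string>
#include <utility>

// Hypothetical service interface.
struct Service { std::string name; };

// Return the cached proxy while it is valid, otherwise fetch a new one.
class ServiceCache {
public:
    explicit ServiceCache(std::function<std::shared_ptr<Service>()> fetch)
        : mFetch(std::move(fetch)) {}

    std::shared_ptr<Service> get() {
        std::unique_lock<std::mutex> ul(mMutex);
        if (mValid) return mService;  // fast path, like code 2
        ul.unlock();                  // fetching may block, so don't hold the lock
        auto fresh = mFetch();        // like mediautils::getService()
        ul.lock();
        if (fresh) {
            mService = std::move(fresh);
            mValid = true;
        }
        return mService;
    }

    // Like onServiceDied(): the next get() fetches again.
    void onServiceDied() {
        std::lock_guard<std::mutex> l(mMutex);
        mValid = false;
    }

private:
    std::function<std::shared_ptr<Service>()> mFetch;
    std::mutex mMutex;
    std::shared_ptr<Service> mService;
    bool mValid = false;
};
```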

To understand code 1, look at initService():

frameworks/av/media/utils/include/mediautils/ServiceSingleton.h

template<typename Service, typename ServiceTraits>

bool initService(const ServiceTraits& serviceTraits = {}) {

const auto serviceHandler = details::ServiceHandler::getInstance(Service::descriptor);

return serviceHandler->template init<Service>(serviceTraits);

}

Let's take it step by step.

out/soong/.intermediates/frameworks/av/media/libaudioclient/audioflinger-aidl-cpp-source/gen/include/android/media/IAudioFlingerService.h

namespace android {

namespace media {

class LIBBINDER_EXPORTED IAudioFlingerServiceDelegator;

class LIBBINDER_EXPORTED IAudioFlingerService : public ::android::IInterface {

public:

typedef IAudioFlingerServiceDelegator DefaultDelegator;

DECLARE_META_INTERFACE(AudioFlingerService)

// ...

frameworks/native/libs/binder/include/binder/IInterface.h

#define DECLARE_META_INTERFACE(INTERFACE) \

public: \

static const ::android::String16 descriptor; \

static ::android::sp<I##INTERFACE> asInterface(const ::android::sp<::android::IBinder>& obj); \

virtual const ::android::String16& getInterfaceDescriptor() const; \

I##INTERFACE(); \

virtual ~I##INTERFACE(); \

static bool setDefaultImpl(::android::sp<I##INTERFACE> impl); \

static const ::android::sp<I##INTERFACE>& getDefaultImpl(); \

\

Substituting AudioFlingerService into the macro above gives:

// DECLARE_META_INTERFACE(AudioFlingerService) expands to:
public:
static const ::android::String16 descriptor;
static ::android::sp<IAudioFlingerService> asInterface(const ::android::sp<::android::IBinder>& obj);
virtual const ::android::String16& getInterfaceDescriptor() const;
IAudioFlingerService();
virtual ~IAudioFlingerService();
static bool setDefaultImpl(::android::sp<IAudioFlingerService> impl);
static const ::android::sp<IAudioFlingerService>& getDefaultImpl();
// ...

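#: stringizes a macro argument. If a macro parameter x receives Hello_World, #x becomes the string literal "Hello_World".

##: pastes two tokens together. Hello##World becomes HelloWorld.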
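Both operators can be checked with a few lines of C++. STRINGIFY, CONCAT and DECLARE_MINI_INTERFACE are hypothetical macros written for illustration. The last one uses ## to build a class name the same way DECLARE_META_INTERFACE builds I##INTERFACE.

```cpp
#include <cassert>
#include <cstring>
#include <string>

// # turns a macro argument into a string literal.
#define STRINGIFY(x) #x
// ## pastes two tokens into one.
#define CONCAT(a, b) a##b

// Hypothetical mini version of the DECLARE_META_INTERFACE idea:
// builds the class name I<NAME> with ## and records NAME with #.
#define DECLARE_MINI_INTERFACE(NAME)                  \
    struct I##NAME {                                  \
        static constexpr const char* kName = #NAME;   \
    };

DECLARE_MINI_INTERFACE(Player)  // declares struct IPlayer

int HelloWorld = 42;  // CONCAT(Hello, World) names this variable
```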

Now let's see how descriptor gets its value.

out/soong/.intermediates/frameworks/av/media/libaudioclient/audioflinger-aidl-cpp-source/gen/android/media/IAudioFlingerService.cpp

namespace android {

namespace media {

DO_NOT_DIRECTLY_USE_ME_IMPLEMENT_META_INTERFACE(AudioFlingerService, "android.media.IAudioFlingerService")

} // namespace media

} // namespace android

frameworks/native/libs/binder/include/binder/IInterface.h

// Macro for an interface type.

#define DO_NOT_DIRECTLY_USE_ME_IMPLEMENT_META_INTERFACE(INTERFACE, NAME) \

const ::android::StaticString16 I##INTERFACE##_descriptor_static_str16( \

__IINTF_CONCAT(u, NAME)); \

const ::android::String16 I##INTERFACE::descriptor(I##INTERFACE##_descriptor_static_str16); \

DO_NOT_DIRECTLY_USE_ME_IMPLEMENT_META_INTERFACE0(I##INTERFACE, I##INTERFACE, Bp##INTERFACE)

Here INTERFACE is AudioFlingerService and NAME is "android.media.IAudioFlingerService", so the macro expands to:

// DO_NOT_DIRECTLY_USE_ME_IMPLEMENT_META_INTERFACE(AudioFlingerService, "android.media.IAudioFlingerService") expands to:
const ::android::StaticString16 IAudioFlingerService_descriptor_static_str16(
        __IINTF_CONCAT(u, "android.media.IAudioFlingerService")); // pastes u onto the literal: u"android.media.IAudioFlingerService"
const ::android::String16 IAudioFlingerService::descriptor(IAudioFlingerService_descriptor_static_str16);
DO_NOT_DIRECTLY_USE_ME_IMPLEMENT_META_INTERFACE0(IAudioFlingerService, IAudioFlingerService, BpAudioFlingerService)
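The declare/implement split and the u prefix pasting can be reproduced in a heavily simplified form. PREFIX_CONCAT, MINI_DECLARE_META_INTERFACE and MINI_IMPLEMENT_META_INTERFACE are hypothetical. They use std::u16string instead of String16, and they only cover the descriptor part of the real macros.

```cpp
#include <cassert>
#include <string>

// Paste an encoding prefix onto a string literal: PREFIX_CONCAT(u, "ab") -> (u"ab").
#define PREFIX_CONCAT(x, y) (x##y)

// Hypothetical analogue of DECLARE_META_INTERFACE: declares the static member.
#define MINI_DECLARE_META_INTERFACE(INTERFACE)  \
public:                                         \
    static const std::u16string descriptor;

// Hypothetical analogue of the IMPLEMENT macro: defines it from the name literal.
#define MINI_IMPLEMENT_META_INTERFACE(INTERFACE, NAME)  \
    const std::u16string I##INTERFACE::descriptor(PREFIX_CONCAT(u, NAME));

class IAudioFlingerService {
    MINI_DECLARE_META_INTERFACE(AudioFlingerService)
};

MINI_IMPLEMENT_META_INTERFACE(AudioFlingerService, "android.media.IAudioFlingerService")
```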

frameworks/av/media/utils/ServiceSingleton.cpp

template<typename T>

requires (std::is_same_v<T, const char*> || std::is_same_v<T, String16>)

std::shared_ptr<ServiceHandler> ServiceHandler::getInstance(const T& name) {

using Key = std::conditional_t<std::is_same_v<T, String16>, String16, std::string>;

[[clang::no_destroy]] static constinit std::mutex mutex;

[[clang::no_destroy]] static constinit std::shared_ptr<

std::map<Key, std::shared_ptr<ServiceHandler>>> map GUARDED_BY(mutex);

static constinit bool init GUARDED_BY(mutex) = false;

std::lock_guard l(mutex);

if (!init) {

map = std::make_shared<std::map<Key, std::shared_ptr<ServiceHandler>>>();

init = true;

}

auto& handler = (*map)[name];

if (!handler) {

handler = std::make_shared<ServiceHandler>();

if constexpr (std::is_same_v<T, String16>) {

handler->init_cpp(); // 1

} else /* constexpr */ {

handler->init_ndk();

}

}

return handler;

}

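Here name is the descriptor, the String16 "android.media.IAudioFlingerService".

Because the key type is String16, code 1 calls init_cpp(), which initializes the new handler with an empty ::android::IInterface. A const char* name would take the NDK path, init_ndk(), instead.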
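getInstance() is a keyed, lazily created singleton: one shared handler per service name, created under a mutex on first lookup. Below is a minimal sketch of that pattern. Handler and getHandler are hypothetical names, and the real handler holds far more state (proxy, traits, death notifier).

```cpp
#include <cassert>
#include <map>
#include <memory>
#include <mutex>
#include <string>

// Hypothetical per-service state.
struct Handler {
    std::string name;
};

std::shared_ptr<Handler> getHandler(const std::string& name) {
    static std::mutex mutex;
    // Heap-allocated and never freed, so no destructor runs at exit
    // (the real code uses [[clang::no_destroy]] for the same effect).
    static auto* handlers = new std::map<std::string, std::shared_ptr<Handler>>();
    std::lock_guard<std::mutex> lock(mutex);
    auto& handler = (*handlers)[name];  // empty shared_ptr on first lookup
    if (!handler) {
        handler = std::make_shared<Handler>();
        handler->name = name;           // one-time init, like init_cpp()/init_ndk()
    }
    return handler;
}
```

Every caller asking for the same name gets the same handler. That is how initService() and getService() end up sharing state for IAudioFlingerService.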

Now for code 3:

frameworks/av/media/utils/include/mediautils/ServiceSingleton.h

template<typename Service>

auto getService(std::chrono::nanoseconds waitNs = {}) {

const auto serviceHandler = details::ServiceHandler::getInstance(Service::descriptor);

return interfaceFromBase<Service>(serviceHandler->template get<Service>(

waitNs, true /* useCallback */)); // 1

}

template <typename Service>

auto get(std::chrono::nanoseconds waitNs, bool useCallback) {

audio_utils::unique_lock ul(mMutex);

auto& service = std::get<BaseInterfaceType<Service>>(mService);

// early check.

if (mSkipMode == SkipMode::kImmediate || (service && mValid)) return service;

// clamp to avoid numeric overflow. INT64_MAX / 2 is effectively forever for a device.

std::chrono::nanoseconds kWaitLimitNs(

std::numeric_limits<decltype(waitNs.count())>::max() / 2);

waitNs = std::clamp(waitNs, decltype(waitNs)(0), kWaitLimitNs);

const auto end = std::chrono::steady_clock::now() + waitNs;

for (bool first = true; true; first = false) {

// we may have released mMutex, so see if service has been obtained.

if (mSkipMode == SkipMode::kImmediate || (service && mValid)) return service;

int options = 0;

if (mSkipMode == SkipMode::kNone) {

const auto traits = getTraits_l<Service>(); // 1

// first time or not using callback, check the service.

if (first || !useCallback) {

auto service_new = checkServicePassThrough<Service>(

traits->getServiceName());

if (service_new) {

mValid = true;

service = std::move(service_new);

// service is a reference, so we copy to service_fixed as

// we're releasing the mutex.

const auto service_fixed = service;

ul.unlock();

traits->onNewService(interfaceFromBase<Service>(service_fixed)); // 2

ul.lock();

setDeathNotifier_l<Service>(service_fixed);

ul.unlock();

mCv.notify_all();

return service_fixed;

}

}

// install service callback if needed.

if (useCallback && !mServiceNotificationHandle) {

setServiceNotifier_l<Service>();

}

options = static_cast<int>(traits->options());

}

// check time expiration.

const auto now = std::chrono::steady_clock::now();

if (now >= end &&

(service

|| mSkipMode != SkipMode::kNone // skip is set.

|| !(options & static_cast<int>(ServiceOptions::kNonNull)))) { // null allowed

return service;

}

// compute time to wait, then wait.

if (mServiceNotificationHandle) {

mCv.wait_until(ul, end);

} else {

const auto target = now + kPollTime;

mCv.wait_until(ul, std::min(target, end));

}

// loop back to see if we have any state change.

}

}
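The core of get() is a "check, then wait until a deadline" loop on a condition variable, with the timeout clamped so that now() + waitNs cannot overflow. The sketch below keeps only that loop. It leaves out the skip modes, the traits, and the kPollTime polling fallback, and Awaitable is a hypothetical name. Another thread would call publish(), as a service-registration callback does, to wake up the waiters.

```cpp
#include <algorithm>
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <memory>
#include <mutex>
#include <utility>

template <typename T>
class Awaitable {
public:
    void publish(std::shared_ptr<T> value) {
        {
            std::lock_guard<std::mutex> l(mMutex);
            mValue = std::move(value);
        }
        mCv.notify_all();
    }

    // Returns the value, or nullptr if it did not appear before waitNs elapsed.
    std::shared_ptr<T> get(std::chrono::nanoseconds waitNs) {
        // Clamp like the original, so now() + waitNs cannot overflow.
        const std::chrono::nanoseconds kLimit(std::chrono::nanoseconds::max().count() / 2);
        waitNs = std::clamp(waitNs, std::chrono::nanoseconds(0), kLimit);
        const auto end = std::chrono::steady_clock::now() + waitNs;
        std::unique_lock<std::mutex> ul(mMutex);
        while (true) {
            if (mValue) return mValue;  // state may have changed while we waited
            if (std::chrono::steady_clock::now() >= end) return nullptr;
            mCv.wait_until(ul, end);    // woken by publish() or by the deadline
        }
    }

private:
    std::mutex mMutex;
    std::condition_variable mCv;
    std::shared_ptr<T> mValue;
};
```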

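The earlier call mediautils::initService<media::IAudioFlingerService, AudioFlingerServiceTraits>() registered AudioFlingerServiceTraits. As a result, getTraits_l() at code 1 returns those traits. Once checkServicePassThrough() finds the service, code 2 calls their onNewService() method: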

frameworks/av/media/libaudioclient/AudioSystem.cpp

class AudioFlingerServiceTraits {

public:

// ------- required by ServiceSingleton

static constexpr const char* getServiceName() { return "media.audio_flinger"; }

static void onNewService(const sp<media::IAudioFlingerService>& afs) {

onNewServiceWithAdapter(createServiceAdapter(afs));

}

static void onNewServiceWithAdapter(const sp<IAudioFlinger>& service) {

ALOGD("%s: %s service obtained %p", __func__, getServiceName(), service.get());

sp<AudioSystem::AudioFlingerClient> client;

bool reportNoError = false;

{

std::lock_guard l(mMutex);

ALOGW_IF(mValid, "%s: %s service already valid, continuing with initialization",

__func__, getServiceName());

if (mClient == nullptr) {

mClient = sp<AudioSystem::AudioFlingerClient>::make();

} else {

mClient->clearIoCache();

reportNoError = true;

}

mService = service;

client = mClient;

mValid = true;

}

// TODO(b/375280520) consider registerClient() within mMutex lock.

const int64_t token = IPCThreadState::self()->clearCallingIdentity();

service->registerClient(client);

IPCThreadState::self()->restoreCallingIdentity(token);

if (reportNoError) AudioSystem::reportError(NO_ERROR);

}

// ...

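Here, at last, the binder proxy for IAudioFlingerService is wrapped by createServiceAdapter() into an AudioFlingerClientAdapter. The adapter implements IAudioFlinger and is stored in the static mService member of AudioFlingerServiceTraits.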
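Wrapping the generated interface in a hand-written one is the adapter pattern. The sketch below shows the shape with hypothetical interfaces (IGeneratedService, IClientFacing, ClientAdapter). The real AudioFlingerClientAdapter also converts parameter and status types between the two interfaces. The getDelegate() accessor mirrors the one onServiceDied() uses to compare the dead service with the cached one.

```cpp
#include <cassert>
#include <memory>
#include <utility>

// Hypothetical AIDL-generated interface (the binder proxy side).
struct IGeneratedService {
    virtual ~IGeneratedService() = default;
    virtual int newAudioUniqueId(int use) = 0;
};

// Hypothetical client-facing interface that the rest of the library uses.
struct IClientFacing {
    virtual ~IClientFacing() = default;
    virtual int newAudioUniqueId(int use) = 0;
};

// Adapter: implements the client-facing interface by forwarding to the delegate.
class ClientAdapter : public IClientFacing {
public:
    explicit ClientAdapter(std::shared_ptr<IGeneratedService> delegate)
        : mDelegate(std::move(delegate)) {}
    int newAudioUniqueId(int use) override { return mDelegate->newAudioUniqueId(use); }
    const std::shared_ptr<IGeneratedService>& getDelegate() const { return mDelegate; }
private:
    std::shared_ptr<IGeneratedService> mDelegate;
};
```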

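From there, af->newAudioUniqueId(use) is a binder call into audioserver, where it ends up in the nextUniqueId() method of frameworks/av/services/audioflinger/AudioFlinger.cpp. What a detour, wtf! 😦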
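The audio_unique_id_use_t enum in audio.h suggests how these IDs are laid out. The low bits (AUDIO_UNIQUE_ID_USE_MASK) hold the use, and AUDIO_UNIQUE_ID_USE_MAX must be a power of two. Below is a sketch of an allocator built on that layout, based on my reading of the scheme with hypothetical names. Each use has its own counter that advances by AUDIO_UNIQUE_ID_USE_MAX, and the use is OR-ed into the low bits, so it can be read back from any ID. Check nextUniqueId() itself for the exact details, such as how it handles wrap-around.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>

// Values copied from audio_unique_id_use_t in audio.h.
enum : int32_t {
    kUseSession = 1,         // AUDIO_UNIQUE_ID_USE_SESSION
    kUseMax = 8,             // AUDIO_UNIQUE_ID_USE_MAX, must be a power of two
    kUseMask = kUseMax - 1   // AUDIO_UNIQUE_ID_USE_MASK
};

// Hypothetical allocator: one counter per use, each starting at kUseMax so
// that no allocated ID equals the reserved value 0 (AUDIO_SESSION_ALLOCATE).
class UniqueIdAllocator {
public:
    UniqueIdAllocator() {
        for (auto& c : mNext) c.store(kUseMax);
    }
    // use must be in [0, kUseMax).
    int32_t next(int32_t use) {
        // fetch_add returns the old value: kUseMax, 2 * kUseMax, ...
        const int32_t base = mNext[use].fetch_add(kUseMax);
        return base | use;  // the use lives in the low bits
    }
    static int32_t useOf(int32_t id) { return id & kUseMask; }
private:
    std::atomic<int32_t> mNext[kUseMax];
};
```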

The audio service's trackPlayer() method

frameworks/base/services/core/java/com/android/server/audio/AudioService.java

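As the name suggests, it tracks the player's state.

baseRegisterPlayer() calls the system service over Binder to tell the audio system that a new player has been created, and receives a player ID (mPlayerIId) in return. This ID uniquely identifies the player inside the system. Later operations (volume, audio focus, mixing) use it to locate the player, as the playerAttributes(mPlayerIId, attr) call in baseUpdateAudioAttributes() does.

PlayerIdCard is a wrapper class that carries the player's information:

mImplType → the player implementation type (e.g. MediaPlayer, AudioTrack)

mAttributes → the audio attributes (usage, content type, flags, etc.)

new IPlayerWrapper(this) → a Binder callback object through which the system service can call back into the player, for example to pause it or change its volume

sessionId → the audio session the player is bound to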
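Conceptually, trackPlayer() and playerAttributes() form a registry keyed by player ID: register once, get an ID back, then refer to the player by that ID. The sketch below illustrates this with hypothetical names (PlayerInfo, PlayerRegistry) and simplified fields. It is not AudioService's actual implementation.

```cpp
#include <cassert>
#include <map>
#include <mutex>
#include <optional>
#include <string>

// Hypothetical, simplified stand-in for the contents of PlayerIdCard.
struct PlayerInfo {
    int implType;       // e.g. MediaPlayer vs AudioTrack
    std::string usage;  // stands in for AudioAttributes
    int sessionId;
};

class PlayerRegistry {
public:
    // Like trackPlayer(): store the card and hand out a unique player ID.
    int trackPlayer(const PlayerInfo& info) {
        std::lock_guard<std::mutex> l(mLock);
        const int piid = mNextPiid++;
        mPlayers[piid] = info;
        return piid;
    }
    // Like playerAttributes(): update a player located by its ID.
    bool playerAttributes(int piid, const std::string& usage) {
        std::lock_guard<std::mutex> l(mLock);
        auto it = mPlayers.find(piid);
        if (it == mPlayers.end()) return false;  // unknown player: ignore
        it->second.usage = usage;
        return true;
    }
    // Returns a copy so callers never touch the map without the lock.
    std::optional<PlayerInfo> find(int piid) {
        std::lock_guard<std::mutex> l(mLock);
        auto it = mPlayers.find(piid);
        if (it == mPlayers.end()) return std::nullopt;
        return it->second;
    }
private:
    std::mutex mLock;
    int mNextPiid = 1;
    std::map<int, PlayerInfo> mPlayers;
};
```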

playerAttributes

_setDataSource

_prepare

getDuration

TODO: 1. Handler (native side) 2. invalidate / doFrame 3. Binder (native side)


Zhejiang Public Network Security Record No. 33010602011771
