Web Scraping with Scrapy: A Simple Maoyan Example

In the spider's .py file:

    import scrapy

    from ..items import MaoyanItem  # assumes the standard Scrapy project layout


    class TopSpider(scrapy.Spider):
        name = 'top'
        allowed_domains = ['maoyan.com']
        start_urls = ['https://maoyan.com/board/4']

        def parse(self, response):
            # Each <dd> under the <dl> holds one movie on the board
            dds = response.xpath('//dl/dd')
            for dd in dds:
                dic = MaoyanItem()
                # dic = {}
                dic['name'] = dd.xpath('.//p[@class="name"]//text()').extract_first()
                dic['star'] = dd.xpath('.//p[@class="star"]/text()').extract_first().replace('\n', '').replace(' ', '')
                dic['releasetime'] = dd.xpath('.//p[@class="releasetime"]/text()').extract_first()
                # The score text is split across two <i> elements
                score1 = dd.xpath('.//p[@class="score"]/i[1]/text()').extract_first()
                score2 = dd.xpath('.//p[@class="score"]/i[2]/text()').extract_first()
                dic['score'] = score1 + score2
                # Detail page: pass the partly filled item along via meta
                xqy_url = 'https://maoyan.com' + dd.xpath('.//p[@class="name"]/a/@href').extract_first()
                yield scrapy.Request(xqy_url, callback=self.xqy_parse, meta={'dic': dic})
            # Pagination: follow the link whose text is "下一页" (next page)
            next_url = response.xpath('//a[text()="下一页"]/@href').extract_first()
            if next_url:
                url = 'https://maoyan.com/board/4' + next_url
                yield scrapy.Request(url, callback=self.parse)

        def xqy_parse(self, response):
            # Retrieve the item started in parse() and add the detail-page fields
            dic = response.meta['dic']
            dic['type'] = response.xpath('//ul/li[@class="ellipsis"][1]/text()').extract_first()
            dic['area_time'] = response.xpath('//ul/li[@class="ellipsis"][2]/text()').extract_first().replace('\n', '').replace(' ', '')
            yield dic
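`extract_first()` returns `None` when an XPath matches nothing, so the chained `.replace(...)` calls and `score1 + score2` in the spider would raise an error on any entry missing those nodes. The following is a minimal sketch of more defensive helpers, not part of the original spider. The names `clean_text`, `join_score`, and `next_page_url` are hypothetical, and the `?offset=10` href is only an assumed example of what the next-page link might contain.

```python
from urllib.parse import urljoin


def clean_text(value):
    """Strip newlines and spaces; treat a missing node (None) as ''."""
    if value is None:
        return ''
    return value.replace('\n', '').replace(' ', '')


def join_score(score1, score2):
    """Concatenate the two score fragments, tolerating missing parts."""
    return (score1 or '') + (score2 or '')


def next_page_url(base_url, href):
    """Resolve a relative next-page href against the current page URL."""
    return urljoin(base_url, href) if href else None


if __name__ == '__main__':
    print(clean_text(None))
    print(join_score('9.', '5'))
    # '?offset=10' is an assumed href value, used here for illustration only
    print(next_page_url('https://maoyan.com/board/4', '?offset=10'))
```

Inside a spider callback, `response.urljoin(href)` performs the same resolution against the URL of the current response.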

In items.py, declare the fields to collect:

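    import scrapy


    class MaoyanItem(scrapy.Item):
        # Field names must match the keys the spider assigns
        name = scrapy.Field()
        star = scrapy.Field()
        releasetime = scrapy.Field()
        score = scrapy.Field()
        type = scrapy.Field()
        area_time = scrapy.Field()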

In pipelines.py, write each item out as a line of text:

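    class DoubanPipeline(object):
        def open_spider(self, spider):
            # Open the output file once, when the spider starts
            self.file = open('douban.txt', 'a', encoding='utf-8')

        def process_item(self, item, spider):
            self.file.write(str(item) + '\n')
            # Return the item so any later pipelines still receive it
            return item

        def close_spider(self, spider):
            self.file.close()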
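Writing `str(item)` produces a Python-style representation that is awkward to parse back later. As one possible alternative, the sketch below writes one JSON object per line. The class name `JsonLinesPipeline` and the file name `maoyan.jsonl` are assumptions made for illustration, not names from the original project.

```python
import json


class JsonLinesPipeline:
    """Hypothetical pipeline that writes one JSON object per line."""

    path = 'maoyan.jsonl'  # assumed output file name

    def open_spider(self, spider):
        self.file = open(self.path, 'a', encoding='utf-8')

    def process_item(self, item, spider):
        # dict(item) works for both scrapy.Item objects and plain dicts;
        # ensure_ascii=False keeps Chinese text readable in the file
        line = json.dumps(dict(item), ensure_ascii=False)
        self.file.write(line + '\n')
        return item

    def close_spider(self, spider):
        self.file.close()
```

Each line of the resulting file can be read back with `json.loads`.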

The pipeline in pipelines.py can also store the items in MongoDB instead:

    from pymongo import MongoClient


    class DoubanPipeline(object):
        def open_spider(self, spider):
            # self.file = open('douban.txt','a',encoding='utf8')
            self.client = MongoClient()
            # 'db_name' and 'collection_name' are placeholders
            self.collection = self.client['db_name']['collection_name']
            self.count = 0

        def process_item(self, item, spider):
            # self.file.write(str(item)+'\n')
            # Copy into a plain dict: '_id' is not a declared Item field
            doc = dict(item)
            doc['_id'] = self.count
            self.count += 1
            self.collection.insert_one(doc)
            return item

        def close_spider(self, spider):
            # self.file.close()
            self.client.close()

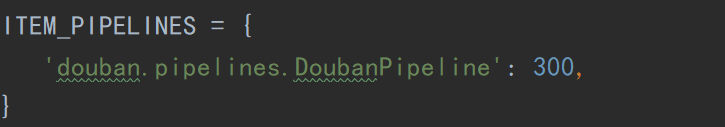

Also, remember to configure a few things in settings.py, such as the robots.txt (ROBOTS protocol) setting, among others.
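The original settings are not shown, so the fragment below is only a sketch of what such a configuration might look like. The setting names are standard Scrapy settings, but every value is an assumption, including the project module name `maoyan` in the pipeline path and the user-agent string.

```python
# settings.py (fragment): illustrative values, not the author's actual configuration

# Whether to honour robots.txt; the Scrapy project template sets this to True
ROBOTSTXT_OBEY = True

# Enable the pipeline; the number (0-1000) sets the order, lower runs first.
# 'maoyan' is an assumed project module name.
ITEM_PIPELINES = {
    'maoyan.pipelines.DoubanPipeline': 300,
}

# Assumed example values for identifying and throttling the crawler
USER_AGENT = 'Mozilla/5.0 (compatible; example-bot)'
DOWNLOAD_DELAY = 1  # seconds between requests to the same site
```

A pipeline class only runs if it is listed in `ITEM_PIPELINES`, so this entry is what connects pipelines.py to the crawl.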

